All of Bruce G's Comments + Replies

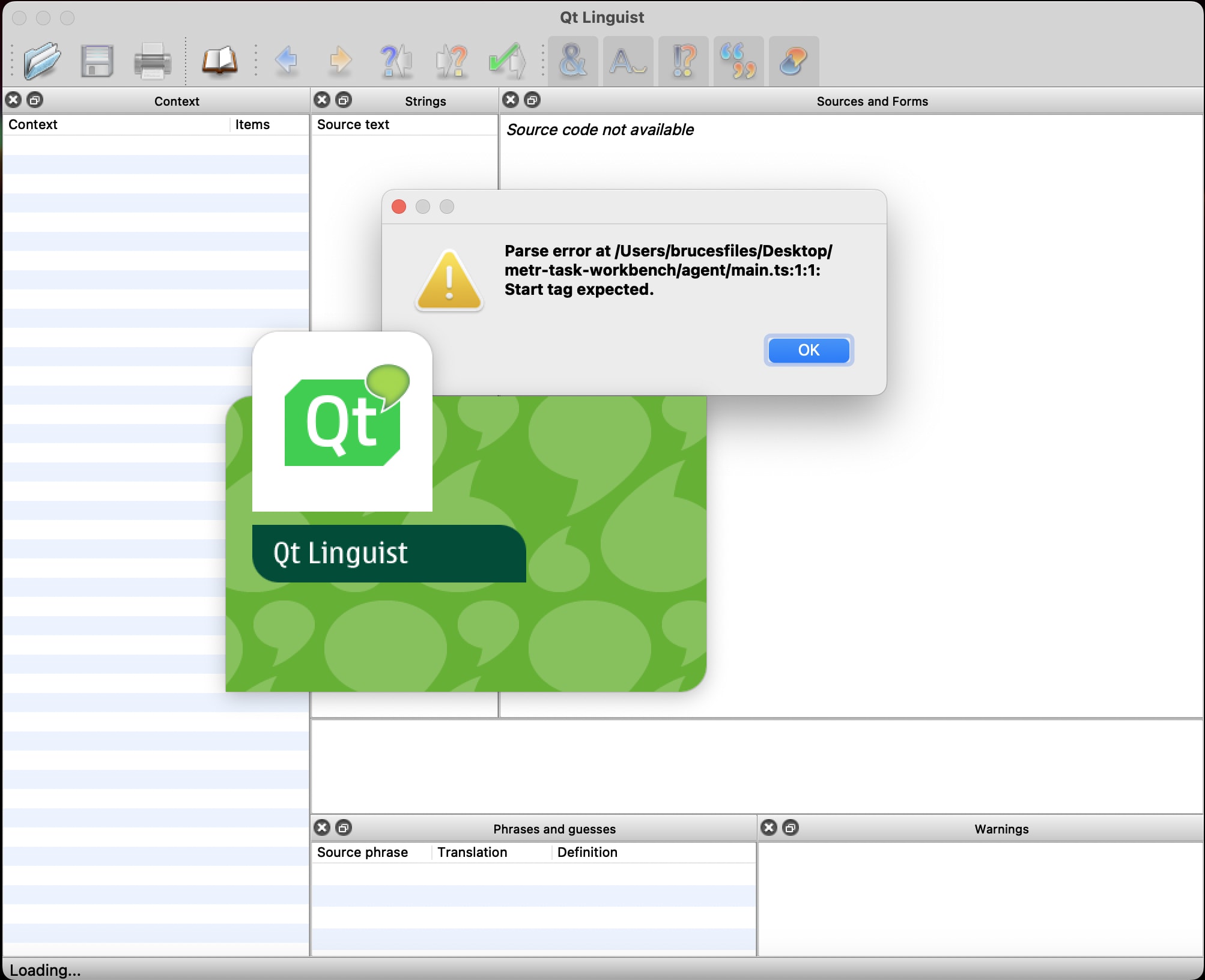

Thanks for your reply. I found the agent folder you are referring to with 'main.ts', 'package.json', and 'tsconfig.json', but I am not clear on how I am supposed to use it. I just get an error message when I open the 'main.ts' file:

Regarding the task.py file, would it be better to have the instructions for the task in comments in the python file, or in a separate text file, or both? Will the LLM have the ability to run code in the python file, read the output of the code it runs, and create new cells to run further blocks of code?

And if an automated ...

If anyone is planning to send in a task and needs someone for the human-comparison QA part, I would be open to considering it in exchange for splitting the bounty.

I would also consider sending in some tasks/ideas, but I have questions about the implementation part.

From the README document included in the zip file:

...## Infra Overview

In this setup, tasks are defined in Python and agents are defined in Typescript. The task format supports having multiple variants of a particular task, but you can ignore variants if you like (and just use single variant named fo

I presume you have in mind an experiment where (for example) you ask one large group of people "Who is Tom Cruise's mother?" and then ask a different group of the same number of people "Mary Lee Pfeiffer's son?" and compare how many got the right answer in the each group, correct?

(If you ask the same person both questions in a row, it seems obvious that a person who answers one question correctly would nearly always answer the other question correctly also.)

Nice idea. I'd imagine something like this has been done in psychology. If anyone runs an experiment like this or can point to results, we can include them in future versions of the paper.

Relevant meme by Daniel Eth.

Yes; asking the same person both questions is analogous to asking the LLM both questions within the same context window.

Is the disagreement here about whether AIs are likely to develop things like situational awareness, foresightful planning ability, and understanding of adversaries' decisions as they are used for more and more challenging tasks?

My thought on this is, if a baseline AI system does not have situational awareness before the AI researchers started fine-tuning it, I would not expect it to obtain situational awareness through reinforcement learning with human feedback.

I am not sure I can answer this for the hypothetical "Alex" system in the linked post, sin...

Those 2 types of downsides, creating code with a bug versus plotting a takeover, seem importantly different.

I can easily see how an LLM-based app fine-tuned with RLHF might generate the first type of problem. For example, let’s say some GPT-based app is trained using this method to generate the code for websites in response to prompts describing how the website should look and what features it should have. And lets suppose during training it generates many examples that have some unnoticed error - maybe it does not render properly on certain size screens, ...

Did everyone actually fail to notice, for months, that social media algorithms would sometimes recommend extremist content/disinformation/conspiracy theories/etc (assuming that this is the downside you are referring to)?

It seems to me that some people must have realized this as soon as they starting seeing Alex Jones videos showing up in their YouTube recommendations.

I think the more capable AI systems are, the more we'll see patterns like "Every time you ask an AI to do something, it does it well; the less you put yourself in the loop and the fewer constraints you impose, the better and/or faster it goes; and you ~never see downsides." (You never SEE them, which doesn't mean they don't happen.)

This, again, seems unlikely to me.

For most things that people seem likely to use AI for in the foreseeable future, I expect downsides and failure modes will be easy to notice. If self-driving cars are crashing or going to ...

Interesting.

I don't think I can tell from this how (or whether) GPT-4 is representing anything like a visual graphic of the task.

It is also not clear to me if GPT-4's performance and tendency to collide with the book is affected by the banana and book overlapping slightly in their starting positions. (I suspect that changing the starting positions to where this is no longer true would not have a noticeable effect on GPT-4's performance, but I am not very confident in that suspicion.)

I think there is hope in measures along these lines, but my fear is that it is inherently more complex (and probably slow) to do something like "Make sure to separate plan generation and execution; make sure we can evaluate how a plan is going using reliable metrics and independent assessment" than something like "Just tell an AI what we want, give it access to a terminal/browser and let it go for it."

I would expect people to be most inclined to do this when the AI is given a task that is very similar to other tasks that it has a track record of perf...

It looks like ChatGPT got the micro-pattern of "move one space at a time" correct. But it got confused between "on top of" the book versus "to the right of" the book, and also missed what type of overlap it needs to grab the banana.

Were all the other attempts the same kind of thing?

I would also be curious to see how uPaLM or GPT-4 does with that example.

...So why do people have more trouble thinking that people could understand the world through pure vision than pure text? I think people's different treatment of these cases- vision and language- may be caused by a poverty of stimulus- overgeneralizing from cases in which we have only a small amount of text. It's true that if I just tell you that all qubos are shrimbos, and all shrimbos are tubis, you'll be left in the dark about all of these terms, but that intuition doesn't necessarily scale up into a situation in which you are learning across billions

The heuristic of "AIs being used to do X won't have unrelated abilities Y and Z, since that would be unnecessarily complicated" might work fine today but it'll work decreasingly well over time as we get closer to AGI. For example, ChatGPT is currently being used by lots of people as a coding assistant, or a therapist, or a role-play fiction narrator -- yet it can do all of those things at once, and more. For each particular purpose, most of its abilities are unnecessary. Yet here it is.

For certain applications like therapist or role-play fiction narrator -...

I have an impression that within lifetime human learning is orders of magnitude more sample efficient than large language models

Yes, I think this is clearly true, at least with respect to the number of word tokens a human must be exposed to in order to obtain full understanding of one's first language.

Suppose for the sake of argument that someone encounters (through either hearing or reading) 50,000 words per day on average, starting from birth, and that it takes 6000 days (so about 16 years and 5 months) to obtain full adult-level linguistic compete...

Early solutions. The most straightforward way to solve these problems involves training AIs to behave more safely and helpfully. This means that AI companies do a lot of things like “Trying to create the conditions under which an AI might provide false, harmful, evasive or toxic responses; penalizing it for doing so, and reinforcing it toward more helpful behaviors.”

This is where my model of what is likely to happen diverges.

It seems to me that for most of the types failure modes you discuss in this hypothetical, it will be easier and more straightforward ...

But the specialness and uniqueness I used to attribute to human intellect started to fade out even more, if even an LLM can achieve this output quality, which is, despite the impressiveness, still operates on the simple autocomplete principles/statistical sampling. In that sense, I started to wonder how much of many people's output, both verbal and behavioral, could be autocomplete-like.

This is kind of what I was getting at with my question about talking to a GPT-based chatbot and a human at the same time and trying to distinguish: to what extent do you th...

Humans question the sentience of the AI. My interactions with many of them, and the AI, makes me question sentience of a lot of humans.

I admit, I would not have inferred from the initial post that you are making this point if you hadn't told me here.

Leaving aside the question of sentience in other humans and the philosophical problem of P-Zombies, I am not entirely clear on what you think is true of the "Charlotte" character or the underlying LLM.

For example, in the transcript you posted, where the bot said:

..."It's a beautiful day where I live and the

...Alright, first problem, I don't have access to the weights, but even if I did, the architecture itself lacks important features. It's amazing as an assistant for short conversations, but if you try to cultivate some sort of relationship, you will notice it doesn't remember about what you were saying to it half an hour ago, or anything about you really, at some point. This is, of course, because the LLM input has a fixed token width, and the context window shifts with every reply, making the earlier responses fall off. You feel like you're having a relation

To aid the user, on the side there could be a clear picture of each coin and their worth, that we we could even have made up coins, that could further trick the AI.

A user aid showing clear pictures of all available legal tender coins is a very good idea. It avoids problems more obscure coins which may have been only issued in a single year - so the user is not sitting there thinking "wait a second, did they actually issue a Ulysses S. Grant coin at some point or it that just there to fool the bots?".

...I'm not entirely sure how to generate images

I can see the numbers on the notes and infer that they denote United States Dollars, but have zero idea of what the coins are worth. I would expect that anyone outside United States would have to look up every coin type and so take very much more than 3-4 times longer clicking images with boats. Especially if the coins have multiple variations.

If a system like this were widely deployed online using US currency, people outside the US would need to familiarize themselves with US currency if they are not already familiar with it. But they would on...

If only 90% can solve the captcha within one minute, it does not follow that the other 10% are completely unable to solve it and faced with "yet another barrier to living in our modern society".

It could be that the other 10% just need a longer time period to solve it (which might still be relatively trivial, like needing 2 or 3 minutes) or they may need multiple tries.

If we are talking about someone at the extreme low end of the captcha proficiency distribution, such that the person can not even solve in a half hour something that 90% of the population can...

One type of question that would be straightforward for humans to answer, but difficult to train a machine learning model to answer reliably, would be to ask "How much money is visible in this picture?" for images like this:

If you have pictures with bills, coins, and non-money objects in random configurations - with many items overlapping and partly occluding each other - it is still fairly easy for humans to pick out what is what from the image.

But to get an AI to do this would be more difficult than a normal image classification problem where you ca...

The intent of the scenario is to find what model dominates, so probably loss should be non-negative. If you use squared error in that scenario, then the loss of the mixture is always greater than or equal to the loss of any particular model in the mixture.

I don't see why that would necessarily be true. Say you have 3 data points from my example from above:

- (0,1)

- (1,2)

- (2,3)

And say the composite model is a weighted average of and with equal weights (so just the regular average).

This means that the compo...

Epistemic status: Somewhat confused by the scenario described here, possible noob questions and/or commentary.

I am not seeing how this toy example of “gradient hacking” could actually happen, as it doesn’t map on to my understanding of how gradient descent is supposed to work in any realistic case.

...Suppose, we have a mixture consisting of a good model which gets 0 loss in the limit (because it’s aligned with our training procedure) and a gradient hacker which gets loss in the limit (because its actual objective is paperclips)

Why would something with full armor, no weapons, and antivenom benefit from even 1 speed? It does not need to escape from anything. And if it has no weapons or venom, it can not catch any prey either.

Edit: I suppose if you want it to occasionally wander to other biomes, then that could be a reason to give it 1 speed.

Got it, thanks.

One thing I am confused about:

Suppose an organism can eat more than one kind of plant food and both are available in its biome on a given round. Say it can eat both leaves and grass and they are both present and have not been eaten by others on that round yet.

Will the organism eat both a unit of leaves AND a unit of grass that round - and thus increase its expected number of offspring for the next round compared to if it had only eaten one thing? Or will it only eat the first one it finds (leaves in this case) and then stop foraging? From the source code, it looks like it is probably eating only the one thing and then stopping, but I am not really familiar with Hy or Lisp syntax so I am not sure.

Clearly a human answering this prompt would be more likely than GPT-3 to take into account the meta-level fact which says:

"This prompt was written by a mind other than my own to probe whether or not the one doing the completion understands it. Since I am the one completing it, I should write something that complies with the constraints described in the prompt if I am trying to prove I understood it."

For example, I could say:

...I am a human and I am writing this bunch of words to try to comply with all instructions in that prompt... That fifth cons

Here is the best I was able to do on puzzle 2 (along with my reasoning):

The prime factors of 2022 are 2, 3, and 337. Any method of selecting 1 person from 2022 must cut the space down by a factor of 2, and by a factor of 3, and by a factor of 337 (it does not need to be in that order and you can filter down by more than one of those factors in single roll, but you must filter down by each of those in a way where the probability is uniform before starting).

The lowest it could be is 2 rolls. If someone could win on the first roll, that person’s p

Assume we have a disease-detecting CV algorithm that looks at microscope images of tissue for cancerous cells. Maybe there’s a specific protein cluster (A) that shows up on the images which indicates a cancerous cell with 0.99 AUC. Maybe there’s also another protein cluster (B) that shows up and only has 0.989 AUC, A overlaps with B in 99.9999% of true positive. But B looks big and ugly and black and cancery to a human eye, A looks perfectly normal, it’s almost indistinguishable from perfectly benign protein clusters even to the most skilled oncologist.

&nb...

In that case, the options are really limited and the main simple ideas for that (eg: guess before you know other player's guesses) have been mentioned already.

One other simple method for one-shot number games I can think of is:

Automatic Interval Equalization:

When all players guesses are known, you take the two players whose guesses are closest and calculate half the difference between them. That amount is the allowable error, and each player's interval is his or her guess, plus or minus that allowable error.

You win if and only if the answer is in you...

Something like that could work, but it seems like you would still need to have a rule that you must guess before you know the other players guesses.

Otherwise, player 2 could simply guess the same mean as player 1 - with a slightly larger standard deviation - and have a PDF that takes a higher value everywhere except for a very small interval around the mean itself.

Alternatively, if 3 players all guessed the same standard deviation, and the means they guessed were 49, 50, and 51, then we would have the same problem that the opening post mentions in the first place.

Can you clarify (possibly by giving an example)? Are players are trying to minimize their score as calculated by this method?

And if so, is there any incentive to not just pick a huge number for the scale to minimize that way?

Is this for a one-shot game or are you doing this over many iterations with players getting some number of points each round?

One simple method (if you are doing multiple rounds) is to rank players each round (Closest=1st, Second Closest=2nd, etc) and assign points as follows:

Points = Number of Players - Rank

So say there are 3 players who guess as follows:

Player 1 guesses 50

Player 2 guesses 49

Player 3 guesses 51

And say the actual number is 52.

So their ranks for that round would be:

...Player 1: 2nd place (Rank 2)

Player 2: 3rd place (Rank 3)

Player 3: 1st place (

It is not obvious to me from reading that transcript (and the attendant commentary) that GPT-3 was even checking to see whether or not the parentheses were balanced. Nor that it "knows" (or has in any way encoded the idea) that the sequence of parentheses between the quotes contains all the information needed to decide between balanced versus unbalanced, and thus every instance of the same parentheses sequence will have the same answer for whether or not it is balanced.

Reasons:

- By my count, "John" got 18 out of 32 right which is not too far off from the av

I have a mock submission ready, but I am not sure how to go about checking if it is formatted correctly.

Regarding coding experience, I know python, but I do not have experience working with typescript or Docker, so I am not clear on what I am supposed to do with those parts of the instructions.

If possible, It would be helpful to be able to go through it on a zoom meeting so I could do a screen-share.