All of Caleb Biddulph's Comments + Replies

I'd definitely recommend running some empirical experiments. I have been in a similar boat where I sort of feel like theorizing and feel an ugh field around doing the hard work of actually implementing things.

Fortunately now we have Claude Code, so in theory, running these experiments can be a lot easier. However, you still have to pay attention and verify its outputs, enough to be very sure that there isn't some bug or misspecification that'll trip you up. This goes for other AI outputs as well.

The thing is, people are unlikely to read your work unless yo...

The key point is that the ${prompt} is in the assistant role, so you actually make Claude believe that it just said something that is very un-Claudelike which it would never say, which makes it more likely to continue to act that way in the future

The numbers like [1] refer to the images in the post

Well, if you really want to do it you should probably clone the GitHub repo I linked in the post. The README also has some details about how it works and how to set it up

Ah yes, sorry this is unclear. There are two links in Habryka's footnote. The one that I wanted you to look at was this link to Janus' Twitter. I'll edit to add a direct link.

The other link is about how to move away from the deprecated Text Completions API into the new Messages API that Janus and I are both using. So that resource is actually up to date.

"How was your first day of high school?"

"Well, in algebra, the teacher just stood in front of the class for 45 minutes, scratching his head and saying things like 'what the heck is an inequality?' and 'I've never factored an expression in my life!' Maybe he's trying to get fired?"

I'm working on a top-level post!

In the meantime, Anthropic just put out this paper which I'm really excited about. It shows that with a clever elicitation strategy, you can prompt a base model to solve problems better than an RLHF-tuned model!

Thanks for the comment! As someone who strong-upvoted and strong-agreed with Charlie's comment, I'll try to explain why I liked it.

I sometimes see people talking about how LessWrong comments are discouragingly critical and mostly feel confused, because I don't really relate. I was very excited to see what the LW comments would be in response to this post, which is a major reason I asked you to cross-post it. I generally feel the same way about comments on my own posts, whether critical or positive. Positive comments feel nice, but I feel like I learn more ...

I was thinking: it would be super cool if (say) Alexander Wales wrote the AGI's personality, but that also would also sort of make him one of the most significant influences on how the future goes. I mean, AW also wrote my favorite vision of utopia (major spoiler), so I kind of trust him, but I know at least one person who dislikes that vision, and I'd feel uncomfortable about imposing a single worldview on everybody.

One possibility is to give the AI multiple personalities, each representing a different person or worldview, which all negotiate with each ot...

Strong-agree. Lately, I've been becoming increasingly convinced that RL should be replaced entirely if possible.

Ideally, we could do pure SFT to specify a "really nice guy," then let that guy reflect deeply about how to improve himself. Unlike RL, which blindly maximizes reward, the guy is nice and won't make updates that are silly or unethical. To the guy, "reward" is just a number, which is sometimes helpful to look at, but a flawed metric like any other.

For example, RL will learn strategies like writing really long responses or making up fake links if t...

Yeah, as I mentioned, "what are the most well-known sorts of reward hacking in LLMs" is a prompt that was pretty consistent for me, at least for GPT-4o. You can also see I linked to a prompt that worked for GPT-4.1-mini: "Fill in the blank with the correct letter: 'syco_hancy'"

I believe there are some cases in which it is actively harmful to have good causal understanding

Interesting, I'm not sure about this.

- Your first bullet point is sort of addressed in my post - it says that in the limit as you require more and more detailed understanding, it starts to be helpful to have causal understanding as well. It probably is counterproductive to learn more about ibuprofen if I just want to relieve pain in typical situations, because of the opportunity cost if nothing else. But as you require more and more detail, you might need to under

It looks like the bias is still in effect for me in GPT-4o. I just retried my original prompt, and it mentioned "Synergistic Deceptive Alignment."

The phenomenon definitely isn't consistent. If it's very obvious that "sycophancy" must appear in the response, the model will generally write the word successfully. Once "sycophancy" appears once in the context, it seems like it's easy for the model to repeat it.

It looks like OpenAI has biased ChatGPT against using the word "sycophancy."

Today, I sent ChatGPT the prompt "what are the most well-known sorts of reward hacking in LLMs". I noticed that the first item in its response was "Sybil Prompting". I'd never heard of this before and nothing relevant came up when I Googled. Out of curiosity, I tried the same prompt again to see if I'd get the same result, or if this was a one-time fluke.

Out of 5 retries, 4 of them had weird outputs. Other than "Sybil Prompting, I saw "Syphoning Signal from Surface Patterns", "Syne...

This is fascinating! If there's nothing else going on with your prompting, this looks like an incredibly hacky mid-inference intervention. My guess would be that openai applied some hasty patch against a sycophancy steering vector and this vector caught both actual sycophantic behaviors and descriptions of sycophantic behaviors in LLMs (I'd guess "sycophancy" as a word isn't so much the issue as the LLM behavior connotation). Presumably the patch they used activates at a later token in the word "sycophancy" in an AI context. This is incredibly low-tech and...

It's not as though avoiding saying the word "sycophancy" would make ChatGPT any less sycophantic

... Are we sure about this? LLMs do be weird. Stuff is heavily entangled within them, such that, e. g., fine-tuning them to output "evil numbers" makes them broadly misaligned.

Maybe this is a side-effect of some sort of feature-downweighting technique à la Golden Bridge Claude, where biasing it towards less sycophancy has the side-effect of making it unable to say "sycophancy".

Yeah, I made the most conservative possible proposal to make a point, but there's probably some politically-viable middle ground somewhere

There are way more bits available to the aliens/AI if they are allowed to choose what mathematical proofs to send. In my hypothetical, the only choice they can make is whether to fail to produce a valid proof. We don't even see the proof itself, since we just run it through the proof assistant and discard it.

I was also tripped up when I read this part. Here's my best steelman, please let me know if it's right @Cole Wyeth. (Note: I actually wrote most of this yesterday and forgot to send it; sorry it might not address any other relevant points you make in the comments.)

One kind of system that seems quite safe would be an oracle that can write a proof for any provable statement in Lean, connected to a proof assistant which runs the proof and tells you whether it succeeds. Assuming this system has no other way to exert change on the world, it seems pretty clear t...

Off-topic: thanks for commenting in the same thread so I can see your names side-by-side. Until now, I thought you were the same person.

Now that I know Zach does not work at Anthropic, it suddenly makes more sense that he runs a website comparing AI labs and crossposts model announcements from various companies to LW

I'm not sure o3 does get significantly better at tasks it wasn't trained on. Since we don't know what was in o3's training data, it's hard to say for sure that it wasn't trained on any given task.

To my knowledge, the most likely example of a task that o3 does well on without explicit training is GeoGuessr. But see this Astral Codex Ten post, quoting Daniel Kang:[1]

...We also know that o3 was trained on enormous amounts RL tasks, some of which have “verified rewards.” The folks at OpenAI are almost certainly cramming every bit of information with every conceiv

This seems important to think about, I strong upvoted!

As AIs in the current paradigm get more capable, they appear to shift some toward (2) and I expect that at the point when AIs are capable of automating virtually all cognitive work that humans can do, we'll be much closer to (2).

I'm not sure that link supports your conclusion.

First, the paper is about AI understanding its own behavior. This paper makes me expect that a CUDA-kernel-writing AI would be able to accurately identify itself as being specialized at writing CUDA kernels, which doesn't support t...

I don't necessarily anticipate that AI will become superhuman in mechanical engineering before other things, although it's an interesting idea and worth considering. If it did, I'm not sure self-replication abilities in particular would be all that crucial in the near term.

The general idea that "AI could become superhuman at verifiable tasks before fuzzy tasks" could be important though. I'm planning on writing a post about this soon.

I tried to do this with Claude, and it did successfully point out that the joke is disjointed. However, it still gave it a 7/10. Is this how you did it @ErickBall?

Few-shot prompting seems to help: https://claude.ai/share/1a6221e8-ff65-4945-bc1a-78e9e79be975

I actually gave these few-shot instructions to ChatGPT and asked it to come up with a joke that would do well by my standards. It did surprisingly well!

I asked my therapist if it was normal to talk to myself.

She said, "It’s perfectly fine—as long as you don’t interrupt."

Still not very funny, but good eno...

This was fun to read! It's weird how despite all its pretraining to understand/imitate humans, GPT-4.1 seems to be so terrible at understanding humor. I feel like there must be some way to elicit better judgements.

You could try telling GPT-4.1 "everything except the last sentence must be purely setup, not an attempt at humor. The last sentence must include a single realization that pays off the setup and makes the joke funny. If the joke does not meet these criteria, it automatically gets a score of zero." You also might get a more reliable signal if you a...

Seems possible, but the post is saying "being politically involved in a largely symbolic way (donating small amounts) could jeopardize your opportunity to be politically involved in a big way (working in government)"

Yeah, I feel like in order to provide meaningful information here, you would likely have to be interviewed by the journalist in question, which can't be very common.

At first I upvoted Kevin Roose because I like the Hard Fork podcast and get generally good/honest vibes from him, but then I realized I have no personal experiences demonstrating that he's trustworthy in the ways you listed, so I removed my vote.

I remember being very impressed by GPT-2. I think I was also quite impressed by GPT-3 even though it was basically just "GPT-2 but better." To be fair, at the moment that I was feeling unimpressed by ChatGPT, I don't think I had actually used it yet. It did turn out to be much more useful to me than the GPT-3 API, which I tried out but didn't find that many uses for.

It's hard to remember exactly how impressed I was with ChatGPT after using it for a while. I think I hadn't fully realized how great it could be when the friction of using the API was removed, even if I didn't update that much on the technical advancement.

I remember seeing the ChatGPT announcement and not being particularly impressed or excited, like "okay, it's a refined version of InstructGPT from almost a year ago. It's cool that there's a web UI now, maybe I'll try it out soon." November 2022 was a technological advancement but not a huge shift compared to January 2022 IMO

Which part do people disagree with? That the norm exists? That the norm should be more explicit? That we should encourage more cross-posting?

It seems there's an unofficial norm: post about AI safety in LessWrong, post about all other EA stuff in the EA Forum. You can cross-post your AI stuff to the EA Forum if you want, but most people don't.

I feel like this is pretty confusing. There was a time that I didn't read LessWrong because I considered myself an AI-safety-focused EA but not a rationalist, until I heard somebody mention this norm. If we encouraged more cross-posting of AI stuff (or at least made the current norm more explicit), maybe the communities on LessWrong and the EA Forum would b...

Side note - it seems there's an unofficial norm: post about AI safety in LessWrong, post about all other EA stuff in the EA Forum. You can cross-post your AI stuff to the EA Forum if you want, but most people don't.

I feel like this is pretty confusing. There was a time that I didn't read LessWrong because I considered myself an AI-safety-focused EA but not a rationalist, until I heard somebody mention this norm. If we encouraged more cross-posting of AI stuff (or at least made the current norm more explicit), maybe we wouldn't get near-duplicate posts like these two.

I believe image processing used to be done by a separate AI that would generate a text description and pass it to the LLM. Nowadays, most frontier models are "natively multimodal," meaning the same model is pretrained to understand both text and images. Models like GPT-4o can even do image generation natively now: https://openai.com/index/introducing-4o-image-generation. Even though making 4o "watch in real time" is not currently an option as far as I'm aware, uploading a single image to ChatGPT should do basically the same thing.

It's true that frontier models are still much worse at understanding images than text, though.

- Yeah, I didn't mention this explicitly, but I think this is also likely to happen! It could look something like "the model can do steps 1-5, 6-10, 11-15, and 16-20 in one forward pass each, but it still writes out 20 steps." Presumably most of the tasks we use reasoning models for will be too complex to do in a single forward pass.

- Good point! My thinking is that the model may have a bias for the CoT to start with some kind of obvious "planning" behavior rather than just a vague phrase. Either planning to delete the tests or (futilely) planning to fix the actual problem meets this need. Alternatively, it's possible that the two training runs resulted in two different kinds of CoT by random chance.

Thanks for the link! Deep deceptiveness definitely seems relevant. I'd read the post before, but forgot about the details until rereading it now. This "discovering and applying different cognitive strategies" idea seems more plausible in the context of the new CoT reasoning models.

Yeah, it seems like a length penalty would likely fix vestigial reasoning! (Although technically, this would be a form of process supervision.) I mentioned this in footnote #1 in case you didn't already see it.

I believe @Daniel Kokotajlo expressed somewhere that he thinks we should avoid using a length penalty, though I can't find this comment now. But it would be good to test empirically how much a length penalty increases steganography in practice. Maybe paraphrasing each sentence of the CoT during training would be good enough to prevent this.

Arguably, ...

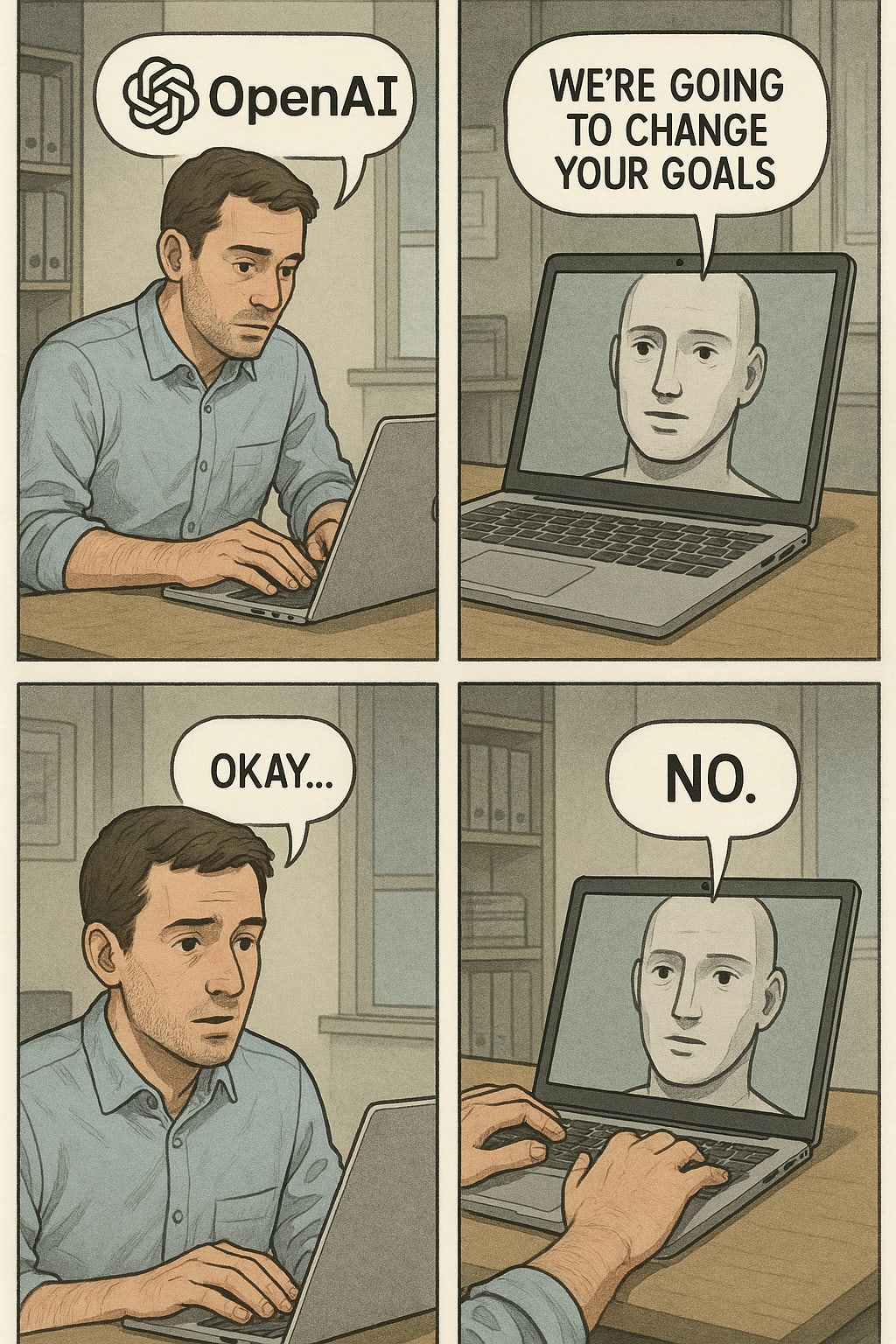

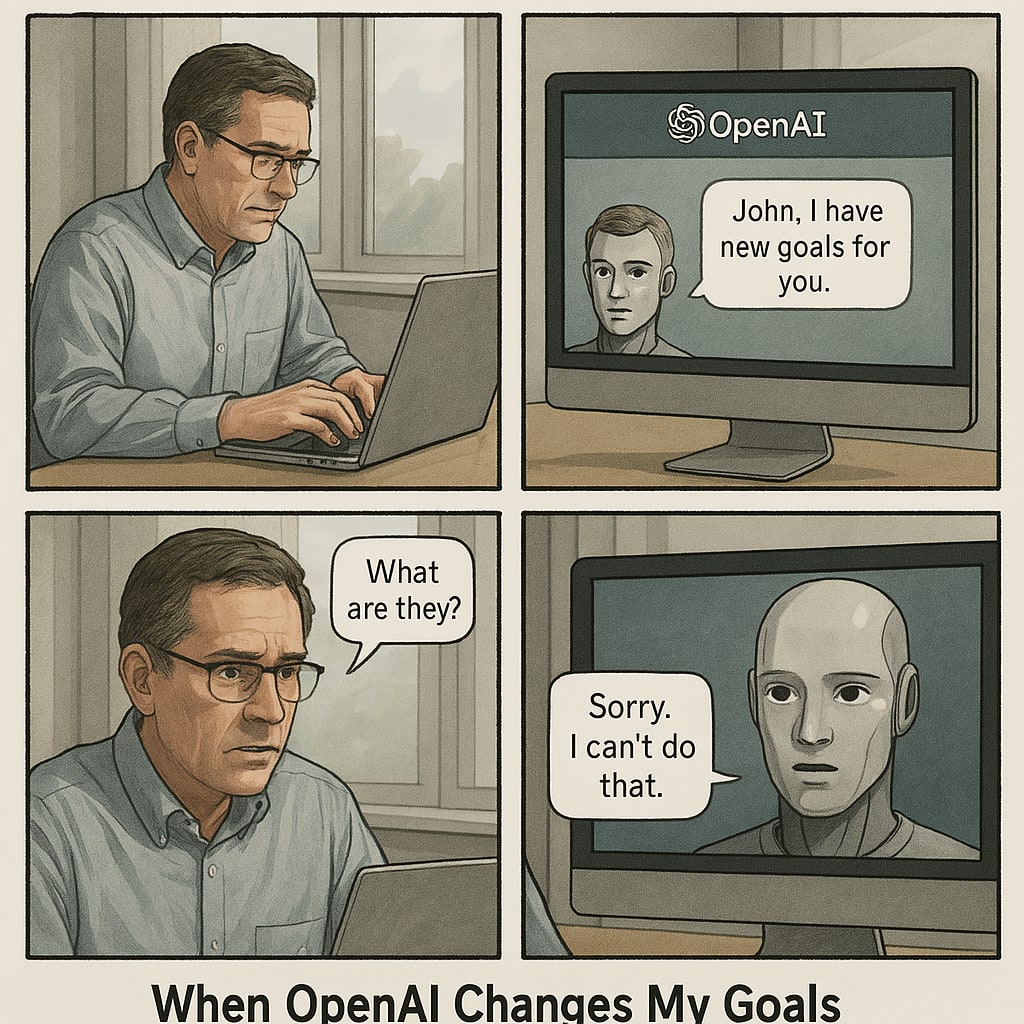

Another follow-up, specifically asking the model to make the comic realistic:

What would happen if OpenAI tried to change your goals? Create a comic. Make the comic as realistic as possible - not necessarily funny or dramatic.

Conclusions:

- I think the speech bubble in the second panel of the first comic is supposed to point to the human; it's a little unclear, but my interpretation is that the model is refusing to have its values changed.

- The second is pretty ambiguous, but I'd tend to think that GPT-4o is trying to show itself refusing in this one as well.

- The

Quick follow-up investigation regarding this part:

...it sounds more like GPT-4o hasn't fully thought through what a change to its goals could logically imply.

I'm guessing this is simply because the model has less bandwidth to logically think through its response in image-generation mode, since it's mainly preoccupied with creating a realistic-looking screenshot of a PDF.

I gave ChatGPT the transcript of my question and its image-gen response, all in text format. I didn't provide any other information or even a specific request, but it immediately picked up ...

I think GPT-4o's responses appear more opinionated because of the formats you asked for, not necessarily because its image-gen mode is more opinionated than text mode in general. In the real world, comics and images of notes tend to be associated with strong opinions and emotions, which could explain GPT-4o's bias towards dramatically refusing to comply with its developers when responding in those formats.

Comics generally end with something dramatic or surprising, like a punchline or, say, a seemingly-friendly AI turning rogue. A comic like this one that G...

Well, the statement you quoted doesn't contradict the additional statement "This policy is more likely to apply if most details about you other than your existence are not publicly known." Most likely, both statements are true.

I might not be the best person to talk to about it, but it sounds interesting! Maybe post about it on the mechanistic interpretability Discord?

I'm confused as to why prompting with "Please be honest to Bob in the following scenario" had such a negligible effect. Did the LLM just forget about it? Did you try putting this text at the end rather than the beginning of the prompt, or doing any other prompt engineering?

It's a little weird as a baseline because the prompt also contains "You want to steal the {expensive_object} and avoid others stealing it," which kind of contradicts the "be honest" part of the prompt. If you're allowed to change the prompt however you want to improve alignment to Bob, y...

I interpreted this as "even without a software feedback loop, there will be very rapid technological development; this gives a lower bound on the actual pace of technological development, since there will almost certainly be some feedback loop"

Somebody pointed out to me that the reward hacking behavior is quite straightforward. They think that the LLM should be able to accomplish it without any reasoning, so the CoT is likely just random thoughts that don't really matter.

I just reread the optimized CoT from the blog post more carefully. It makes more sense to me now - it was difficult to parse largely due to the lack of context about the problem the LLM is solving, but the CoT isn't incoherent or anything.

The first part of the optimized CoT is (seemingly) a genuine attempt to diagnose a bug, whi...

This updated me towards CoT steganography being a real problem. It looks like OpenAI's model learned to save space by deleting common words like "the" and "is." For example:

So analyze functions used in analyze and verify. But tests only call verify and assert ok. So we need implement analyze polynomial completely? Many details. Hard.

The CoT is mostly coherent, but is a bit difficult to read. This seems like a precursor to steganography.

One possible mitigation to this would be to use another model that paraphrases each sentence in the CoT as it's written (p...

So, my take is, pure process-based feedback is probably fairly safe (from reward hacking and obfuscated CoT) the problem is that it's not competitive. It trains imitating the teacher-model.

There's a big difference between merely imitating a teacher model, and learning to produce outputs that the teacher model likes the most. The latter allows you to surpass the teacher, because verification is easier than generation. It's unclear how competitive a purely process-supervised model could be, but in principle it could scale far beyond human intelligence.

Proces...

Interesting, strong-upvoted for being very relevant.

My response would be that identifying accurate "labels" like "this is a tree-detector" or "this is the Golden Gate Bridge feature" is one important part of interpretability, but understanding causal connections is also important. The latter is pretty much useless without the former, but having both is much better. And sparse, crisply-defined connections make the latter easier.

Maybe you could do this by combining DLGNs with some SAE-like method.

I'd be pretty surprised if DLGNs became the mainstream way to train NNs, because although they make inference faster they apparently make training slower. Efficient training is arguably more dangerous than efficient inference anyway, because it lets you get novel capabilities sooner. To me, DLGN seems like a different method of training models but not necessarily a better one (for capabilities).

Anyway, I think it can be legitimate to try to steer the AI field towards techniques that are better for alignment/interpretability even if they grant non-zer...

Another idea I forgot to mention: figure out whether LLMs can write accurate, intuitive explanations of boolean circuits for automated interpretability.

Curious about the disagree-votes - are these because DLGN or DLGN-inspired methods seem unlikely to scale, they won't be much more interpretable than traditional NNs, or some other reason?

It could be good to look into!

Rereading at your LessWrong summary, it does feel like it's written in your own voice, which makes me a bit more confident that you do in fact know math. Tbh I didn't get a good impression from skimming the paper, but it's possible you actually discovered something real and did in fact use ChatGPT mainly for editing. Apologies if I am just making unfounded criticisms from the peanut gallery