All of ChosunOne's Comments + Replies

I think there is another assumption (or another way of framing your assumption; I'm not sure if it is really distinct), which is that all people throughout time are alike. It's not necessarily groundless, but it is an assumption. There could be some symmetry-breaking factor that determines your position that doesn't simply align with "total humans that ever exist", which would make "birth rank" an inappropriate way to determine what number you have. This factor doesn't have to be related to reproduction, though.
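To make the assumption explicit, here is a sketch of the standard Doomsday-style update under the self-sampling assumption. Your birth rank n is treated as drawn uniformly from all N humans who ever exist, and P(N) is some prior over N. Both symbols are introduced here for illustration only. The uniform likelihood 1/N in the first line is exactly where "all people throughout time are alike" enters.

```latex
\begin{aligned}
P(n \mid N) &= \frac{1}{N}, && 1 \le n \le N,\\
P(N \mid n) &= \frac{P(n \mid N)\,P(N)}{\sum_{N' \ge n} P(n \mid N')\,P(N')}
  \;\propto\; \frac{P(N)}{N}, && N \ge n.
\end{aligned}
```

If some symmetry-breaking factor makes your position non-uniform in birth rank, P(n | N) is no longer 1/N, and the shift toward smaller N that comes from that 1/N factor no longer follows.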

It still seems slightly fuzzy, in that outside of check/mate situations no moves are fully mandatory, and e.g. recaptures may occasionally turn out to be the wrong move?

Indeed, it can be difficult to know when it is actually better not to continue the line and when it is, but that is precisely what MCTS would help figure out. MCTS would do actual exploration of board states, and the budget for which states it explores would be informed by the policy network. It's usually better to continue a line than not, so I would expect MCTS to spend most of its bud...

In chess, a "line" is a sequence of moves that is hard to interrupt. There are kind of obvious moves you have to play or else you are just losing (such as recapturing a piece, moving the king out of check, performing checkmate, etc.). Leela uses the neural network more as a heuristic: it scores the candidate moves from a given board position (policy) and the position itself (value), which the MCTS can then use to determine whether to prune that direction or explore that section more. So it makes sense that Leela would have an embedding of powerful lines as part of its heuristic, since it...
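To illustrate how a policy network can set the exploration budget, here is a minimal one-ply sketch of the PUCT selection rule from AlphaZero-style search, which Leela's search is based on. The move names, priors, leaf values, and the `c_puct` constant are all hypothetical, and real engines add many refinements (visit-dependent `c_puct`, first-play urgency, value backup through deep trees) that this toy omits.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    prior: float                # policy-network probability of the move leading here
    visits: int = 0
    value_sum: float = 0.0
    children: dict = field(default_factory=dict)

    def q(self) -> float:
        # Mean backed-up value; unvisited nodes default to 0 here
        # (a simplification: real engines handle unvisited moves differently).
        return self.value_sum / self.visits if self.visits else 0.0


def puct_score(parent: Node, child: Node, c_puct: float) -> float:
    # Exploitation (Q) plus an exploration bonus proportional to the policy prior,
    # which shrinks as the child accumulates visits.
    u = c_puct * child.prior * math.sqrt(parent.visits) / (1 + child.visits)
    return child.q() + u


def select_move(parent: Node, c_puct: float) -> str:
    # Pick the child with the highest PUCT score (ties go to the first child listed).
    return max(parent.children, key=lambda m: puct_score(parent, parent.children[m], c_puct))


def run_toy_search(root: Node, leaf_values: dict, n_sims: int, c_puct: float = 1.5) -> dict:
    # One-ply toy search: each simulation selects a move by PUCT, "evaluates" it with a
    # fixed hypothetical value, and backs that value up. Returns visit counts per move.
    for _ in range(n_sims):
        move = select_move(root, c_puct)
        child = root.children[move]
        child.visits += 1
        child.value_sum += leaf_values[move]
        root.visits += 1
    return {m: c.visits for m, c in root.children.items()}


if __name__ == "__main__":
    # Hypothetical position: the policy network strongly favors the recapture.
    root = Node(prior=1.0, children={
        "recapture": Node(prior=0.80),
        "quiet_move": Node(prior=0.15),
        "blunder": Node(prior=0.05),
    })
    values = {"recapture": 0.3, "quiet_move": 0.0, "blunder": -0.9}
    print(run_toy_search(root, values, n_sims=100))
```

Under these made-up numbers, the high-prior recapture ends up with the vast majority of the visits, while the low-prior alternatives are explored only occasionally. That is the sense in which the policy network shapes where MCTS spends its budget, while the search itself can still discover that a favored line is worse than expected.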

The cited paper in Section 5 (Conclusion-Limitations) states plainly:

(2) We focus on look-ahead along a single line of play; we do not test whether Leela compares multiple different lines of play (what one might call search). ... (4) Chess as a domain might favor look-ahead to an unusually strong extent.

The paper is mostly looking at how Leela evaluates a given line rather than at any kind of search. And this makes sense. Pattern recognition is an extremely important part of playing chess (speaking as a player myself), and it is embedded in ...

My crux is that LLMs are inherently bad at search tasks over a new domain. Thus, I don't expect LLMs to scale to improve search.

Anecdotal evidence: I've used LLMs extensively, and my experience is that LLMs are great at retrieval but terrible at suggestion when it comes to ideas. You usually get something resembling an amalgamation of Google searches rather than suggestions that come from some kind of insight.

To your question of what to do if you are outmatched and you only have an ASI at your disposal, I think the most logical thing to do is "do what the ASI tells you to". The problem is that we have no way of predicting the outcomes if there is truly an ASI in the room. If it's a superintelligence it is going to have better suggestions than anything you can come up with.

Then I wonder, at what point does that matter? Or more specifically, when does that matter in the context of ai-risk?

Clearly there is some relationship between something like "more compute" and "more intelligence", since something too simple cannot be intelligent, but I don't know where that relationship breaks down. Evolution clearly found a path for optimizing intelligence via proxy in our brains, and I think the fear is that you may yet be able to go quite a bit further than human-level intelligence before the extra compute fails to deliver more me...

So if I understand your point correctly, you expect something like "give me more compute" to fail at some point to deliver more intelligence, since intelligence isn't just "more compute"?

I don't think your claim has support as presented. Part of the problem surrounding the question is that we still don't really have any way of measuring how "conscious" something is. In order to claim that something is or isn't conscious, you should have some working definition of what conscious means, and how it can be measured. If you want to have a serious discussion instead of competing emotional positions, you need to support the claim with points that can be confirmed or denied. Why doesn't a goldfish have consciousness, or an e...

Well, ultimately no information about the past is truly lost, as far as we know. A hyper-advanced civilization could collect all the thermal radiation from Earth reflected off of various celestial bodies and recover a near-complete history, at least in principle. So I think the easier you make it for yourself to be reconstructed/resurrected/what have you, the sooner it would likely happen, and the less alien an environment you would find yourself in after the fact. Cryo is a good example of having a reasonable expectation of where you'll end up, barring catastrophe, since you are preserving a lot of yourself in good form.

An interesting consequence of your description is that resurrection is possible if you can manage to reconstruct the last brain state of someone who has died. If you go one step further, then I think it is fairly likely that experience is eternal: you don't experience any of the intervening time spent dead (akin to your film reel analogy of adding extra frames in between), and there is no limit to how much intervening time can pass.

I'm curious how much space is left after learning the MSP in the network. Does representing the MSP take up the full bandwidth of the model (even if it is represented inefficiently)? Could you maintain performance of the model by subtracting out the contributions of anything else that isn't part of the MSP?

I observe this behavior a lot when using GPT-4 to assist in code. The moment it starts spitting out code that has a bug, the likelihood of future code snippets having bugs grows very quickly.

I've found that using Bing/ChatGPT has been enormously helpful in my own workflows. There's no need to carefully read documentation and tutorials just to get a starter template up and running. Sure, it breaks here and there, but it seems way more efficient to look things up when they go wrong than to start from scratch. Then, while my program is running, I can go back and try to understand what all the options do.

It's also been very helpful for finding research on a given topic and answering basic questions about some of the main ideas.

I'm not sure how that makes the problem much easier. If you get the malign superintelligence mask, it only needs to get out of the larger model, or send instructions to the wrong people, once for it to be a game-over scenario. You don't necessarily get to change it after the fact. And changing it once doesn't guarantee it won't pop up again.

This could be true, but then you still have the issue of a "superintelligent malign AI" being one of the masks if your pile of masks is big enough.

At a crude level, Earth represents a source of resources with which to colonize the universe shard and maximize its compute power (and thus find the optimal path to achieve its other goals). Simply utilizing all the available mass on Earth to do that as quickly as possible hedges against the possibility of other entities in the universe shard impeding progress toward its goals.

The question I've been contemplating is "Is it worth it to actually try to spend any resources disassembling all matter on Earth given the cost of needing to d...

Thanks for the measured response.

If I understand the following correctly:

...Putin made it very clear on the day of the attack that he was threatening nukes to anyone who "interfered" in Ukraine, with his infamous "the consequences will be such as you have never seen in your entire history" -speech. NATO has been helping Ukraine by training their forces and supplying materiel for years before the invasion, and vowed to keep doing so. This can be considered "calling his bluff" to an extent, or as a piecemeal maneuver in its own right. Yet they withdrew their

Yes I did, and it doesn't follow that nuclear retaliation is immediate.

Beaufre notes that for piecemeal maneuvers to be effective, they have to be presented as fait accompli – accomplished so quickly that anything but nuclear retaliation would arrive too late to do any good and of course nuclear retaliation would be pointless

Failure to perform the fait accompli means that options other than nuclear retaliation are possible.

...When Putin called that obvious bluff, it would have damaged the credibility and thus the deterrence value of that same sta

Given that Russia's attempt at a fait accompli in Ukraine has failed, and that the situation already is a total war, I fail to see Russia's logic of nuclear deterrence against NATO involvement. In a sense, NATO has already crossed the red lines that Russia stated would be considered acts of war, such as economic sanctions and direct military supply. From the Russian perspective, would NATO intervention really invite a total nuclear response the way that a Russian attack on Poland would?

NATO intervention and subsequent obliteration ...

In Zelenskyy's latest appeal to Congress, he offered an alternative to a No Fly Zone: massive support in the form of AA equipment and additional fighters. By creating pressure for an NFZ, he's bought himself significant AA equipment boosts.

This is more or less what Kasparov believed back in 2015:

I think one of the things to consider with this hypothesis is what signal indicates that an area is "overpopulated", and how members of the species should respond to that signal. And how can this signal be distinguished from other causes? For instance, an organism that has offspring that are unable to reproduce because they had limited resources will likely be outcompeted by an organism that produces fertile offspring regardless of the availability of resources.

If you open up a variable that determines how likely your offspring ar...

Are we also presuming that you can acquire all desired things instantaneously? Even in a situation when all agents are functionally identical, if it costs 1 unit of time per x units of a resource, wouldn't trade still be useful in acquiring more than x units of a resource in 1 unit of time? Time seems to me the ultimate currency that still needs to be "traded" in this scenario.

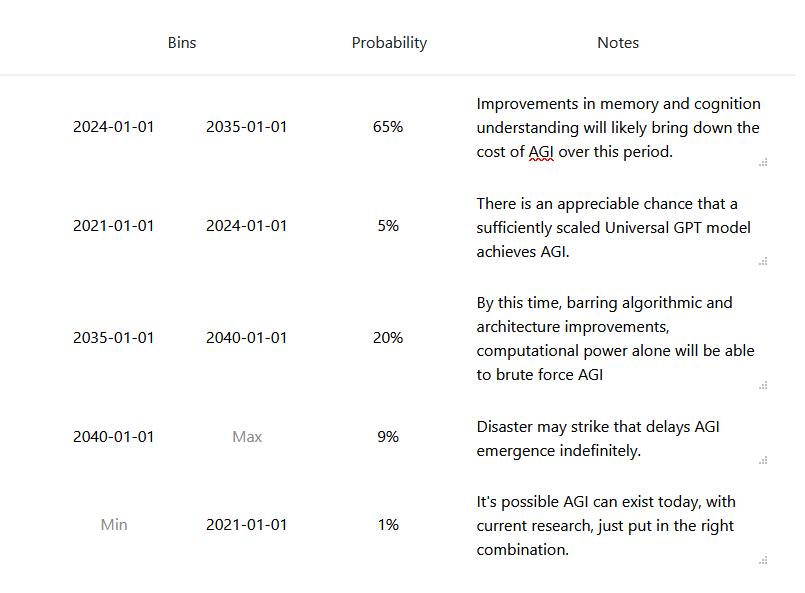

My point here was that even if the deep learning paradigm is nowhere near as efficient as the brain, it has a reasonable chance of getting to AGI anyway, since the brain does not use all that much energy. The biggest GPT-3 models can run on a fraction of what a datacenter can supply; hence the original question: how do we know AGI isn't just a question of scale in the current deep learning paradigm?

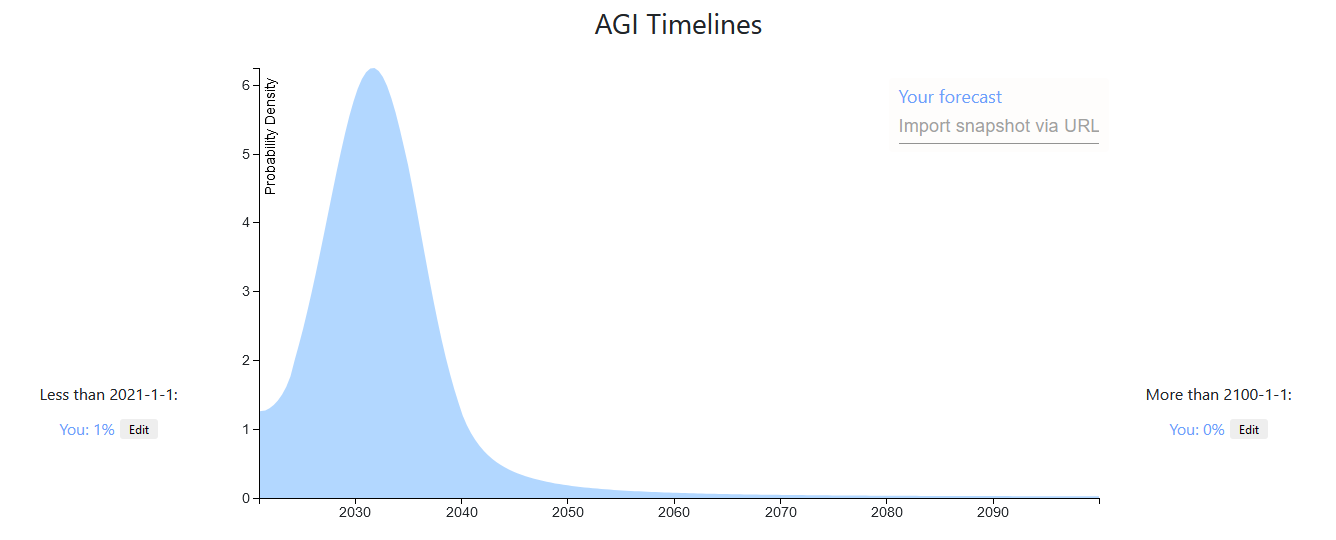

Here is a link to my forecast

And here are the rough justifications for this distribution:

I don't have much else to add beyond what others have posted, though my forecast is in part influenced by an AIRCS event I attended in the past. I do remember being laughed at for suggesting GPT-2 represented a very big advance toward AGI.

I've also never really understood the reasoning for why current models of AI are supposedly incapable of AGI. Sure, we don't have AGI with current models, but how do we know it isn't a question of scale? Our bra...

Thanks for the recommendation!

I've been feeding my parents a steady stream of facts and calmly disputing hypotheses that they couldn't support with evidence ("there are lots of unreported cases", "most cases are asymptomatic", etc.). It's taken time but my father helped influence a decision to shut down schools for the whole Chicago area, citing statistics I've been supplying from the WHO.

I think the best thing you can do if they don't take it seriously is to just whittle down their resistance with facts. I tend to only pick a few to tal...

It seems to me that trying to create a tulpa is like trying to take a shortcut with mental discipline. It seems strictly better to me to focus my effort on a single unified body of knowledge/model of the world than to try to maintain two highly correlated ones at the risk of losing your sanity. I wouldn't trust that a strong imitation of another mind would somehow be more capable than my own, and it seems like having to simulate communication with another mind is just more wasteful than just integrating what you know into your own.

Thinking about i...

I think the factors that determine your reference class can be related to changes over time in the environment you inhabit, not just how you are built. This is what I mean by not necessarily related to reproduction. If I cloned a billion people with the same cloning vat over 1000 years, how then would you determine the reference class? But maybe something about the environment would narrow that reference class despite all the people being made by the same process (like the presence or absence of other things in the environment as they relate to the people coming out of the vat).