All of DPiepgrass's Comments + Replies

I've seen a lot of finance videos talking about the stock market and macroeconomics/future trends that never once mention AI/AGI. And many who do talk about AI think it's just a bubble and/or that AI ≅ LLMs/DALL·E; prices seem high for such a person. And as Francois Chollet noted, "LLMs have sucked the oxygen out of the room" which I think could possibly slow down progress toward AGI enough that a traditional Gartner hype cycle plays out, leading to temporarily cooler investment/prices... hope so, fingers crossed.

I'm curious, what makes it more of an AI stock than... whatever you're comparing it to?

Well, yeah, it bothers me that the "bayesian" part of rationalism doesn't seem very bayesian―otherwise we'd be having a lot of discussions about where priors come from, how to best accomplish the necessary mental arithmetic, how to go about counting evidence and dealing with ambiguous counts (if my friends Alice and Bob both tell me X, it could be two pieces of evidence for X or just one depending on what generated the claims; how should I count evidence by default, and are there things I should be doing to find the underlying evidence?)
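To make the Alice-and-Bob counting question concrete, here is a minimal sketch using the odds form of Bayes' rule. The prior of 0.5 and the likelihood ratio of 3 per report are made-up numbers chosen only for illustration, and the function name `posterior` is hypothetical. It compares treating the two reports as independent evidence against treating them as one piece of evidence because Bob is only repeating what Alice said.

```python
def posterior(prior: float, likelihood_ratios: list[float]) -> float:
    """Update a prior probability using the odds form of Bayes' rule.

    Each likelihood ratio is P(report | X) / P(report | not X) and is
    assumed to be independent of the others given X.
    """
    odds = prior / (1.0 - prior)
    for lr in likelihood_ratios:
        odds *= lr
    return odds / (1.0 + odds)


PRIOR = 0.5      # assumed prior probability of X (illustrative only)
LR_REPORT = 3.0  # assumed: a friend's report is 3x as likely if X is true

# Alice and Bob each learned X separately: two pieces of evidence.
independent = posterior(PRIOR, [LR_REPORT, LR_REPORT])

# Bob is just repeating Alice: effectively one piece of evidence.
same_source = posterior(PRIOR, [LR_REPORT])

print(f"independent reports: {independent:.2f}")
print(f"shared source:       {same_source:.2f}")
```

With these assumed numbers the gap between the two cases is large, which is why the default way of counting matters. Real cases usually fall somewhere between fully independent and fully redundant.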

So―vulnerable in th...

TBH, a central object of interest to me is people using Dark Epistemics. People with a bad case of DE typically have "gishiness" as a central characteristic, and use all kinds of fallacies of which motte-and-bailey (hidden or not) is just one. I describe them together just because I haven't seen LW articles on them before. If I were specifically naming major DE syndromes, I might propose the "backstop of conspiracy" (a sense that whatever the evidence at hand doesn't explain is probably still explained by some kind of conspiracy) and projection (a tendency...

The Hidden-Motte-And-Bailey fallacy: belief in a Bailey inspires someone to invent a Motte and write an article about it. The opinion piece describes the Motte exclusively with no mention of the Bailey. Others read it and nod along happily because it supports their cherished Bailey, and finally they share it with others in an effort to help promote the Bailey.

Example: Christian philosopher describes new argument for the existence of a higher-order-infinity God which bears no resemblance to any Abrahamic God, and which no one before the 20th century had eve...

I know this comment was 17 years ago, but nearly half of modern US politics in 2024 is strongly influenced by the idea "don't trust experts". I have listened to a lot of cranks (ex), and the popular ones are quite good at what they do, rhetorically, so most experts have little chance against them in a debate. Plus, if the topic is something important, the public was already primed to believe one side or the other by whatever media they happen to consume, and if the debate winner would otherwise seem close (to a layman) then such preconceptions will dominat...

I actually think Yudkowsky's biggest problem may be that he is not talking about his models. In his most prominent posts about AGI doom, such as this and the List of Lethalities, he needs to provide a complete model that clearly and convincingly leads to doom (hopefully without the extreme rhetoric) in order to justify the extreme rhetoric. Why does attempted, but imperfect, alignment lead universally to doom in all likely AGI designs*, when we lack familiarity with the relevant mind design space, or with how long it will take to escalate a given design fr...

P.S. if I'm wrong about the timeline―if it takes >15 years―my guess for how I'm wrong is (1) a major downturn in AGI/AI research investment and (2) executive misallocation of resources. I've been thinking that the brightest minds of the AI world are working on AGI, but maybe they're just paid a lot because there are too few minds to go around. And when I think of my favorite MS developer tools, they have greatly improved over the years, but there are also fixable things that haven't been fixed in 20 years, and good ideas they've never tried, and MS has ...

Doesn't the problem have no solution without a spare block?

Worth noting that LLMs don't see a nicely formatted numeric list, they see a linear sequence of tokens, e.g. I can replace all my newlines with something else and Copilot still gets it:

brief testing doesn't show worse completions than when there are newlines. (and in the version with newlines this particular completion is oddly incomplete.)

Anyone know how LLMs tend to behave on text that is ambiguous―or unambiguous but "hard to parse"? I wonder if they "see" a superposition of meanings "mixed toget...

I'm having trouble discerning a difference between our opinions, as I expect a "kind-of AGI" to come out of LLM tech, given enough investment. Re: code assistants, I'm generally disappointed with Github Copilot. It's not unusual that I'm like "wow, good job", but bad completions are commonplace, especially when I ask a question in the sidebar (which should use a bigger LLM). Its (very hallucinatory) response typically demonstrates that it doesn't understand our (relatively small) codebase very well, to the point where I only occasionally bother asking. (I keep wondering "did no one at GitHub think to generate an outline of the app that could fit in the context window?")

A title like "some people can notice more imperfections than you (and they get irked)" would be more accurate and less clickbaity, though when written like that it sounds kind of obvious.

Do you mean the "send us a message" popup at bottom-right?

Yikes! Apparently I said "strong disagree" when I meant "strong downvote". Fixed. Sorry. Disagree votes generally don't bother me either, they just make me curious what the disagreer disagrees about.

Shoot, I forgot that high-karma users have a "small-strength" of 2, so I can't tell if it was strong-downvoted or not. I mistakenly assumed it was a newish user. Edit: P.S. I might feel better if karma was hidden on my own new comments, whether or not they are hidden on others, though it would then be harder to guess at the vote distribution, making the information even more useless than usual if it survives the hiding-period. Still seems like a net win for the emotional benefits.

I wrote a long comment and someone took the "strong downvote, no disagreement, no reply" tack again. Poppy-cutters[1] seem way more common at LessWrong than I could've predicted, and I'd like to see statistics to see how common they are, and to see whether my experience here is normal or not.

- ^

Meaning users who just seem to want to make others feel bad about their opinion, especially unconstructively. Interesting statistics might include the distributions of users who strong-downvote a positive score into a negative one (as compared to their other voti

Given that I think LLMs don't generalize, I was surprised how compelling Aschenbrenner's case sounded when I read it (well, the first half of it. I'm short on time...). He seemed to have taken all the same evidence I knew about it, and arranged it into a very different framing. But I also felt like he underweighted criticism from the likes of Gary Marcus. To me, the illusion of LLMs being "smart" has been broken for a year or so.

To the extent LLMs appear to build world models, I think what you're seeing is a bunch of disorganized neurons and connections th...

I have a feature request for LessWrong. It's the same as my feature request for every site: you should be able to search within a user (i.e. visit a user page and begin a search from there). This should be easy to do technically; you just have to add the author's name as one of the words in the search index. And in terms of UI, I think you could just link to https://www.lesswrong.com/search?query=@author:username.

Preferably do it in such a way that a normal post cannot do the same, e.g. if "foo authored this post" is placed in the index as @author:foo, if ...
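A minimal sketch of that idea, assuming a toy in-memory inverted index rather than whatever search backend LessWrong actually uses. The names `SearchIndex`, `tokenize_body`, and `AUTHOR_PREFIX` are hypothetical. Body text is tokenized to letters and digits only, so a post can never produce a token containing `@` or `:`; only the indexer itself adds the reserved `@author:` token.

```python
import re
from collections import defaultdict

AUTHOR_PREFIX = "@author:"  # hypothetical reserved prefix, added only by the indexer


def tokenize_body(text: str) -> list[str]:
    # Keep only runs of letters/digits, so "@author:foo" typed in a post
    # becomes the ordinary tokens "author" and "foo", not the reserved token.
    return re.findall(r"[a-z0-9]+", text.lower())


class SearchIndex:
    def __init__(self) -> None:
        self._postings: dict[str, set[int]] = defaultdict(set)

    def add_post(self, post_id: int, author: str, body: str) -> None:
        for token in tokenize_body(body):
            self._postings[token].add(post_id)
        # The author token comes from post metadata, never from the body.
        self._postings[AUTHOR_PREFIX + author.lower()].add(post_id)

    def search(self, query: str) -> set[int]:
        """Return the IDs of posts matching every term in the query."""
        result = None
        for term in query.lower().split():
            if term.startswith(AUTHOR_PREFIX):
                tokens = [term]  # reserved token, matched verbatim
            else:
                tokens = tokenize_body(term)
            for token in tokens:
                ids = self._postings.get(token, set())
                result = set(ids) if result is None else result & ids
        return result if result is not None else set()


index = SearchIndex()
index.add_post(1, "foo", "Search within a user")
index.add_post(2, "bar", "I typed @author:foo in my post to spoof search")

print(index.search("@author:foo search"))  # only the post really written by foo
```

This sketch assumes usernames contain no whitespace; a real index would need a rule for normalizing or escaping them.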

Wow, is this the newest feature-request thread? Well, it's the newest I can easily find, given that LessWrong search has very poor granularity when it comes to choosing a time frame within which to search...

My feature request for LessWrong is the same as my feature request for every site: you should be able to search within a user. This is easy to do technically; you just have to add the author's name as one of the words in the search index.

Preferably do it in such a way that a normal post cannot do the same, e.g. you might put "foo authored this post" in the i...

I have definitely taken actions within the bounds of what seems reasonable that have aimed at getting the EA community to shut down or disappear (and will probably continue to do so).

Wow, what a striking thing for you to say without further explanation.

Personally, I'm a fan of EA. Also am an EA―signed the GWWC/10% pledge and all that. So, I wonder what you mean.

I'm confused why this is so popular.

Sure, it appears to be espousing something important to me ("actually caring about stuff, and for the right reasons"). But specifically it appears to be about how non-serious people can become serious. Have you met non-serious people who long to be serious? People like that seem very rare to me. I've spent my life surrounded by people who work 9 to 5 and will not talk shop at 6; you do some work, then you stop working and enjoy life.

...some of the most serious people I know do their serious thing gratis and make their

For me, I felt like publishing in scientific journals required me to be dishonest.

...what?

I can't speak to what the OP meant by that. But scientific publishing does require spin, at least if you are aiming for a good journal. There is not some magic axis by which people care about some things and not about others, so it's your job as an author to persuade people to care about your results. This shifts the dial in all sorts of little ways.

"Well, in the end it seems like we learned nothing." If that is the conclusion you don't get to publish the paper, ...

it's wrong to assume that because a bunch of Nazis appeared, they were mostly there all along but hidden

I'd say it's wrong as an "assumption" but very good as a prior. (The prior also suggests new white supremacists were generated, as Duncan noted.) Unfortunately, good priors (as with bad priors) often don't have ready-made scientific studies to justify them, but like, it's pretty clear that gay and mildly autistic people were there all along, and I have no reason to think the same is not true of white supremacists, so the prior holds. I also agree that it...

I'd say (1) living in such a place just makes you much less likely to come out, even if you never move, (2) suspecting you can trust someone with a secret is not a good enough reason to tell the secret, and (3) even if you totally trust someone with your secret, you might not trust that he/she will keep the secret.

And I'd say Scott Alexander meets conservatives regularly―but they're so highbrow that he wasn't thinking of them as "conservatives" when he wrote that. He's an extra step removed from the typical Bush or MAGA supporter, so doesn't meet those. Or does he? Social Dark Matter theory suggests that he totally does.

that the person had behaved in actually bad and devious ways

"Devious" I get, but where did the word "bad" come from? (Do you appreciate the special power of the sex drive? I don't think it generalizes to other areas of life.)

Your general point is true, but it's not necessarily true (1) that a correct model can predict the timing of AGI or (2) that the predictable precursors to disaster occur before the practical c-risk (catastrophic-risk) point of no return. While I'm not as pessimistic as Eliezer, my mental model has these two limitations. My model does predict that, prior to disaster, a fairly safe, non-ASI AGI or pseudo-AGI (e.g. GPT6, a chatbot that can do a lot of office jobs and menial jobs pretty well) is likely to be invented before the really deadly one (if any[1]). B...

Evolutionary Dynamics

The pressure to replace humans with AIs can be framed as a general trend from evolutionary dynamics. Selection pressures incentivize AIs to act selfishly and evade safety measures.

Seems like the wrong frame? Evolution is based on mutation, which AIs won't have. However, in the human world there is a similar and much faster dynamic based on the large natural variability between human agents (due to both genetic and environmental factors) which tends to cause people with certain characteristics to rise to the top (e.g. high intelligence,...

given intense economic pressure for better capabilities, we shall see a steady and continuous improvement, so the danger actually is in discontinuities that make it harder for humanity to react to changes, and therefore we should accelerate to reduce compute overhang

I don't feel like this is actually a counterargument? You could agree with both arguments, concluding that we shouldn't work for OpenAI but an outfit better-aligned to your values is okay.

I expect there are people who are aware that there was drama but don't know much about it and should be presented with details from safety-conscious people who closely examined what happened.

I think there may be merit in pointing EAs toward OpenAI safety-related work, because those positions will presumably be filled by someone and I would prefer it be filled by someone (i) very competent (ii) who is familiar with (and cares about) a wide range of AGI risks, and EA groups often discuss such risks. However, anyone applying at OpenAI should be aware of the previous drama before applying. The current job listings don't communicate the gravity or nuance of the issue before job-seekers push the blue button leading to OpenAI's job listing:

I guess th...

Hi Jasper! Don't worry, I definitely am not looking for rapid responses. I'm always busy anyway. And I wouldn't say there are in general 'easy or strong answers in how to persuade others'. I expect not to be able to persuade the majority of people on any given subject. But I always hope (and to some extent, expect) people in the ACX/LW/EA cluster to be persuadable based on evidence (more persuadable than my best friend whom I brought to the last meetup, who is more of an average person).

By the way, after writing my message I found out that I had a limited ...

Followup: this morning I saw a really nice Vlad Vexler video that ties Russian propaganda to the decline of western democracy. The video gives a pretty standard description of modern Russian propaganda, but I always have to somewhat disagree with that. Vlad's variant of the standard description says that Russian propaganda wants you to be depoliticized (not participate in politics) and to have "a theory of Truth which says 'who knows what if anything is true'".

This is true, but what's missing from this description is a third piece, which is that the Kremli...

Hi all, I wanted to follow up on some of the things I said about the Ukraine war at the last meetup, partly because I said some things that were inaccurate, but also because I didn't complete my thoughts on the matter.

(uh, should I have put this on the previous meetup's page?)

First, at one point I said that Russia was suffering about 3x as many losses as Ukraine and argued that this meant the war was sustainable for Ukraine, as Russia has about 3½ times the population of Ukraine and is unlikely to be able to mobilize as many soldiers as Ukraine can (as a pe...

9 respondents were concerned about an overreliance or overemphasis on certain kinds of theoretical arguments underpinning AI risk

I agree with this, but that "the horsepower of AI is instead coming from oodles of training data" is not a fact that seems relevant to me, except in the sense that this is driving up AI-related chip manufacturing (which, however, wasn't mentioned). The reason I argue it's not otherwise relevant is that the horsepower of ASI will not, primarily, come from oodles of training data. To the contrary, it will come from being able to re...

Another thing: not only is my idea unpopular, it's obvious from vote counts that some people are actively opposed to it. I haven't seen any computational epistemology (or evidence repository) project that is popular on LessWrong, either. Have you seen any?

If in fact this sort of thing tends not to interest LessWrongers, I find that deeply disturbing, especially in light of the stereotypes I've seen of "rationalists" on Twitter and EA forum. How right are the stereotypes? I'm starting to wonder.

I can't recall another time when someone shared their personal feelings and experiences and someone else declared it "propaganda and alarmism". I haven't seen "zero-risker" types do the same, but I would be curious to hear the tale and, if they share it, I don't think anyone will call it "propaganda and killeveryoneism".

My post is weirdly aggressive? I think you are weirdly aggressive against Scott.

Since few people have read the book (including, I would wager, Cade Metz), the impact of associating Scott with Bell Curve doesn't depend directly on what's in the book, it depends on broad public perceptions of the book.

Having said that, according to Shaun (here's that link again), the Bell Curve relies heavily on the work of Richard Lynn, who was funded by, and later became the head of, the Pioneer Fund, which the Southern Poverty Law Center classifies as a hate group. In con...

I like that HowTruthful uses the idea of (independent) hierarchical subarguments, since I had the same idea. Have you been able to persuade very many to pay for it?

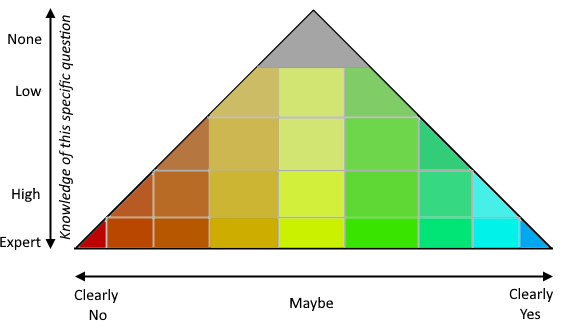

My first thought about it was that the true/false scale should have two dimensions, knowledge & probability:

One of the many things I wanted to do on my site was to gather user opinions, and this does that. ✔ I think of opinions as valuable evidence, just not always valuable evidence about the question under discussion (though to the extent people with "high knowledge" really have high knowle...

Ah, this is nice. I was avoiding looking at my notifications for the last 3 months for fear of a reply by Christian Kl, but actually it turned out to be you two :D

I cannot work on this project right now because I'm busy earning money to be able to afford to fund it (as I don't see how to make money on it). I have a family of 4+, so this is far from trivial. I've been earning for a couple of years, and I will need a couple more years. I will leave my thoughts on HowTruthful on one of your posts on it.

Yesterday Sam Altman stated (perhaps in response to the Vox article that mentions your decision) that "the team was already in the process of fixing the standard exit paperwork over the past month or so. if any former employee who signed one of those old agreements is worried about it, they can contact me and we'll fix that too."

I notice he did not include you in the list of people who can contact him to "fix that", but it seems worth a try, and you can report what happens either way.

This is practice sentence to you how my brain. I wonder how noticeable differences are to to other people.

That first sentence looks very bad to me; the second is grammatically correct but feels like it's missing an article. If that's not harder for you to understand than for other people, I still think there's a good chance that it could be harder for other dyslexic people to understand (compared to correct text), because I would not expect that the glitches in two different brains with dyslexia are the same in every detail (that said, I don't really under...

Doublecrux sounds like a better thing than debate, but why should such an event be live? (apart from "it saves money/time not to postprocess")

You guys were using an AI that generated the music fully formed (as PCM), right?

It ticks me off that this is how it works. It's "good", but you see the problems:

- Poor audio quality [edit: the YouTube version is poor quality, but the "Suno" versions are not. Why??]

- You can't edit the music afterward or re-record the voices

- You had to generate 3,000-4,000 tracks to get 15 good ones

Is there some way to convince AI people to make the following?

- An AI (or two) whose input is a spectral decomposition of PCM music (I'm guessing exponentially-spaced wavelets will be b

Even if the stars should die in heaven

Our sins can never be undone

No single death will be forgiven

When fades at last the last lit sun.

Then in the cold and silent black

As light and matter end

We’ll have ourselves a last look back.

And toast an absent friend.

[verse 2]

I heard that song which left me bitter

For all the sins that had been done

But I had thought the wrong way 'bout it

[cuz] I won't be there to see that sun

I noticed then I could let go

Before my own life ends

It could have been much worse you know

Relaxing with my friends

Hard work I leave with them

Someda...

I guess you could try it and see if you reach wrong conclusions, but that only works if your mind isn't so wired up with shortcuts that you cannot (or are much less likely to) discover your mistakes.

I've been puzzling over why EY's efforts to show the dangers of AGI (most notably this) have been unconvincing enough so that other experts (e.g. Paul Christiano) and, in my experience, typical rationalists have not adopted p(doom) > 90% like EY, or even > 50%. I was unconvinced because he simply didn't present a chain of reasoning that shows what he's trying to show....

Speaking for myself: I don't prefer to be alone or tend to hide information about myself. Quite the opposite; I like to have company but rare is the company that likes to have me, and I like sharing, though it's rare that someone cares to hear it. It's true that I "try to be independent" and "form my own opinions", but I think that part of your paragraph is easy to overlook because it doesn't sound like what the word "avoidant" ought to mean. (And my philosophy is that people with good epistemics tend to reach similar conclusions, so our independence doesn...

Scott tried hard to avoid getting into the race/IQ controversy. Like, in the private email LGS shared, Scott states "I will appreciate if you NEVER TELL ANYONE I SAID THIS". Isn't this the opposite of "it's self-evidently good for the truth to be known"? And yes there's a SSC/ACX community too (not "rationalist" necessarily), but Metz wasn't talking about the community there.

My opinion as a rationalist is that I'd like the whole race/IQ issue to f**k off so we don't have to talk or think about it, but certain people like to misrepresent Scott and make unre...

Huh? Who defines racism as cognitive bias? I've never seen that before, so expecting Scott in particular to define it as such seems like special pleading.

What would your definition be, and why would it be better?

Scott endorses this definition:

Definition By Motives: An irrational feeling of hatred toward some race that causes someone to want to hurt or discriminate against them.

Setting aside that it says "irrational feeling" instead of "cognitive bias", how does this "tr[y] to define racism out of existence"?

I think about it differently. When Scott does not support an idea, but discusses or allows discussion of it, it's not "making space for ideas" as much as "making space for reasonable people who have ideas, even when they are wrong". And I think making space for people to be wrong sometimes is good, important and necessary. According to his official (but confusing IMO) rules, saying untrue things is a strike against you, but insufficient for a ban.

Also, strong upvote because I can't imagine why this question should score negatively.

In the short term, yes. In the medium term, the entire economy would be transformed by AGI or quasi-AGI, likely increasing broad stock indices (I also expect there will be various other effects I can't predict, maybe including factors that mute stock prices, whether dystopian disaster or non-obvious human herd behavior).