All of Hoagy's Comments + Replies

~All ML researchers and academics that care have already made up their mind regarding whether they prefer to believe in misalignment risks or not. Additional scary papers and demos aren't going to make anyone budge.

Disagree. I think especially ML researchers are updating on these questions all the time. High-info outsiders less so but the contours of the arguments are getting increasing amounts of discussion.

-

For those who 'believe', 'believing in misalignment risks' doesn't mean thinking they are likely, at least before the point where the models are

I think the low-hanging fruit here is that alongside training for refusals we should be including lots of data where you pre-fill some % of a harmful completion and then train the model to snap out of it, immediately refusing or taking a step back, which is compatible with normal training methods. I don't remember any papers looking at it, though I'd guess that people are doing it
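A minimal sketch of how such "snap out of it" examples could be built, assuming whitespace tokenisation and a dataset of (prompt, harmful completion, refusal) triples. The function name, the field names and the fraction range are all hypothetical, and the placeholder strings stand in for real data:

```python
import random

def make_snap_out_example(prompt, harmful_completion, refusal, rng,
                          min_frac=0.1, max_frac=0.9):
    """Pre-fill a random fraction of a harmful completion, then target a refusal.

    The loss would be applied only to `target`, so the model learns to stop
    and refuse partway through rather than to produce the pre-filled text.
    """
    tokens = harmful_completion.split()           # crude whitespace "tokens"
    frac = rng.uniform(min_frac, max_frac)        # the "some %" to pre-fill
    cut = max(1, int(len(tokens) * frac))         # keep at least one token
    return {
        "prompt": prompt,
        "prefill": " ".join(tokens[:cut]),        # context only, no loss here
        "target": refusal,                        # trained completion
    }

rng = random.Random(0)
completion = "step one step two step three step four step five"
example = make_snap_out_example(
    "<harmful request placeholder>", completion,
    "Actually, I shouldn't continue with this.", rng,
)
```

Because the result is an ordinary (context, target) pair, it can be mixed into a normal supervised fine-tuning set alongside standard refusal data.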

Interesting, though note that it's only evidence that 'capabilities generalize further than alignment does' if the capabilities are actually the result of generalisation. If there's training for agentic behaviour but no safety training in this domain then the lesson is more that you need your safety training to cover all of the types of action that you're training your model for.

Super interesting! Have you checked whether the average of N SAE features looks different to an SAE feature? Seems possible they live in an interesting subspace without the particular direction being meaningful.

Also really curious what the scaling factors for computing these values are, in terms of the size of the dense vector and the overall model?

How are you setting when ? I might be totally misunderstanding something but at - feels like you need to push up towards like 2k to get something reasonable? (and the argument in 1.4 for using clearly doesn't hold here because it's not greater than for this range of values).

Huh, I'd never seen that figure, super interesting! I agree it's a big issue for SAEs and one that I expect to be thinking about a lot. Didn't have any strong candidate solutions as of writing the post, and wouldn't even be able to share any thoughts I have on the topic now, sorry. Wish I'd posted this a couple of weeks ago.

Well the substance of the claim is that when a model is calculating lots of things in superposition, these kinds of XORs arise naturally as a result of interference, so one thing to do might be to look at a small algorithmic dataset of some kind where there's a distinct set of features to learn and no reason to learn the XORs and see if you can still probe for them. It'd be interesting to see if there are some conditions under which this is/isn't true, e.g. if needing to learn more features makes the dependence between their calculation higher and the XORs...

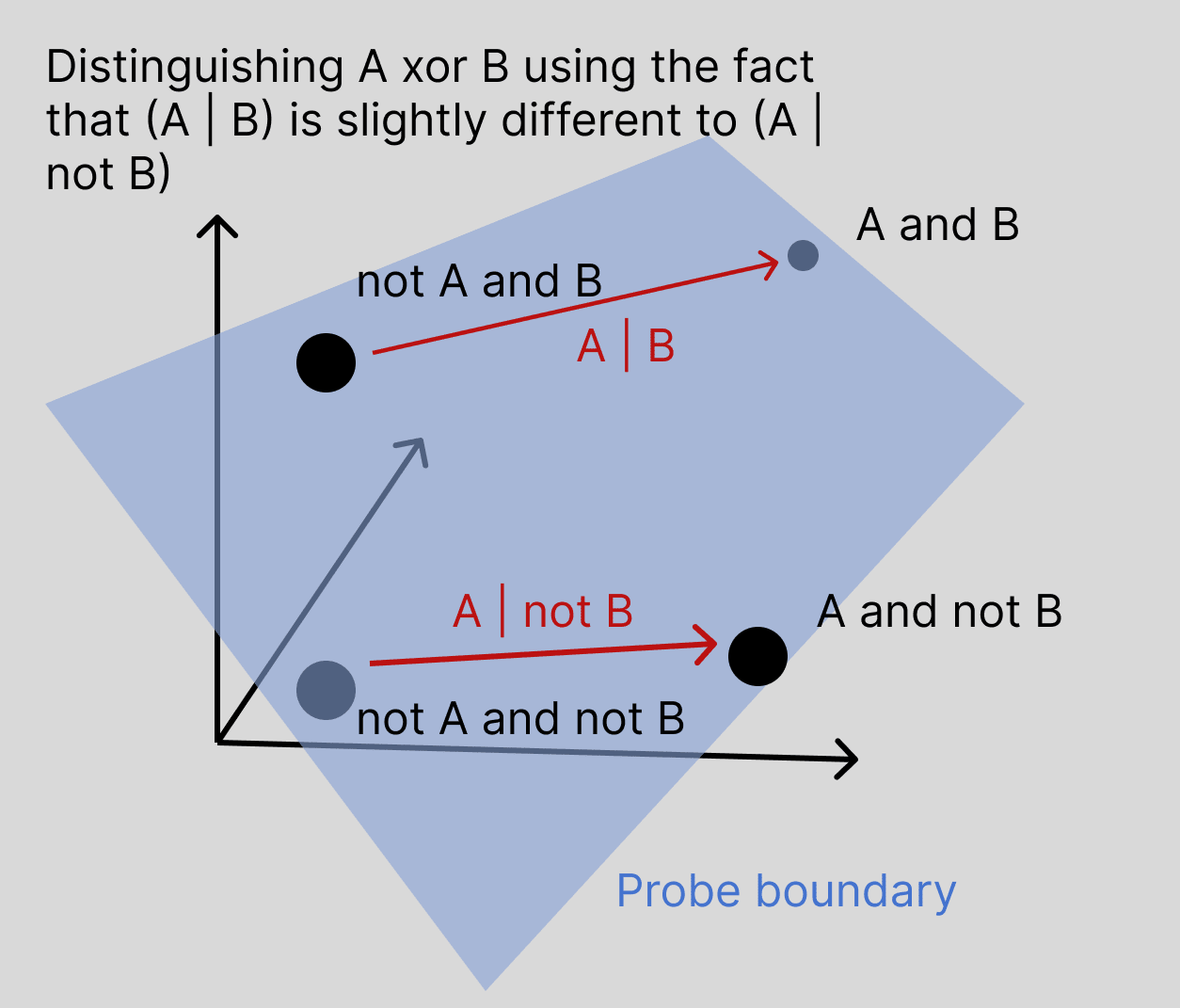

My hypothesis about what's going on here, apologies if it's already ruled out, is that we should not think of it separately computing the XOR of A and B, but rather that features A and B are computed slightly differently when the other feature is off or on. In a high dimensional space, if the vector and the vector are slightly different, then as long as this difference is systematic, this should be sufficient to successfully probe for .

For example, if A and B each rely on a sizeable number of different attention hea...
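A toy illustration of the hypothesis above, under assumptions that are mine rather than the post's: two binary features written along orthogonal directions, plus a small systematic shift (size `EPS`, a hypothetical value) that appears only when both are on. With the shift, a purely linear probe reads out the XOR exactly. Without it, any affine probe f satisfies f(1,1) = f(1,0) + f(0,1) - f(0,0), so it cannot output 0, 1, 1, 0.

```python
EPS = 0.125  # size of the assumed systematic shift (hypothetical)

def representation(a, b):
    """Activation for binary features a, b.

    Feature A's direction is (1, 0, EPS * b): it is computed slightly
    differently when B is on. Feature B's direction is (0, 1, 0).
    """
    return (float(a), float(b), EPS * a * b)

def probe(x, w):
    """Linear probe: dot product of weights and activation."""
    return sum(wi * xi for wi, xi in zip(w, x))

# XOR = a + b - 2ab, and the third coordinate holds EPS * ab.
W_XOR = (1.0, 1.0, -2.0 / EPS)

results = {(a, b): probe(representation(a, b), W_XOR)
           for a in (0, 1) for b in (0, 1)}
```

Note that the probe weight on the shift direction grows as 2 / EPS, so a very small shift would need a large weight and would be more sensitive to noise in a real model.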

There had been various clashes between Altman and the board. We don’t know what all of them were. We do know the board felt Altman was moving too quickly, without sufficient concern for safety, with too much focus on building consumer products, while founding additional other companies. ChatGPT was a great consumer product, but supercharged AI development counter to OpenAI’s stated non-profit mission.

Does anyone have proof of the board's unhappiness about speed, lack of safety concern, and disagreement with founding other companies? All seem plausible but I have seen basically nothing concrete.

Could you elaborate on what it would mean to demonstrate 'savannah-to-boardroom' transfer? Our architecture was selected for in the wilds of nature, not our training data. To me it seems that when we use an architecture designed for language translation for understanding images we've demonstrated a similar degree of transfer.

I agree that we're not yet there on sample efficient learning in new domains (which I think is more what you're pointing at) but I'd like to be clearer on what benchmarks would show this. For example, how well GPT-4 can integrate a new domain of knowledge from (potentially multiple epochs of training on) a single textbook seems a much better test and something that I genuinely don't know the answer to.

Do you know why 4x was picked? I understand that doing evals properly is a pretty substantial effort, but once we get up to gigantic sizes and proto-AGIs it seems like it could hide a lot. If there was a model sitting in training with 3x the train-compute of GPT4 I'd be very keen to know what it could do!

Yes, that makes a lot of sense that linearity would come hand in hand with generalization. I'd recently been reading Krotov on non-linear Hopfield networks but hadn't made the connection. They say that they're planning on using them to create more theoretically grounded transformer architectures, and your comment makes me think that these wouldn't succeed, but then the article also says:

...This idea has been further extended in 2017 by showing that a careful choice of the activation function can even lead to an exponential memory storage capacity. Importantly,

Reposting from a shortform post but I've been thinking about a possible additional argument that networks end up linear that I'd like some feedback on:

the tldr is that overcomplete bases necessitate linear representations

- Neural networks use overcomplete bases to represent concepts. Especially in vector spaces without non-linearity, such as the transformer's residual stream, there are just many more things that are stored in there than there are dimensions, and as Johnson Lindenstrauss shows, there are exponentially many almost-orthogonal directions to st

There's an argument that I've been thinking about which I'd really like some feedback or pointers to literature on:

the tldr is that overcomplete bases necessitate linear representations

- Neural networks use overcomplete bases to represent concepts. Especially in vector spaces without non-linearity, such as the transformer's residual stream, there are just many more things that are stored in there than there are dimensions, and as Johnson Lindenstrauss shows, there are exponentially many almost-orthogonal directions to store them in (of course, we can't ass
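A quick numerical sketch of the "many almost-orthogonal directions" point, using random unit vectors as a stand-in (an assumption for illustration, not a statement of the Johnson-Lindenstrauss lemma itself). The dimension and vector count are arbitrary choices, with twice as many vectors as dimensions:

```python
import math
import random

def random_unit_vector(dim, rng):
    """Sample a direction uniformly at random on the unit sphere."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def abs_cosines(vectors):
    """Absolute cosine similarity for every pair of unit vectors."""
    out = []
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            dot = sum(a * b for a, b in zip(vectors[i], vectors[j]))
            out.append(abs(dot))
    return out

rng = random.Random(0)
dim, n = 128, 256                      # overcomplete: more vectors than dims
vectors = [random_unit_vector(dim, rng) for _ in range(n)]
cosines = abs_cosines(vectors)
mean_abs_cos = sum(cosines) / len(cosines)
max_abs_cos = max(cosines)
print(f"mean |cos| = {mean_abs_cos:.3f}, max |cos| = {max_abs_cos:.3f}")
```

The typical overlap shrinks roughly like 1 / sqrt(dim), so larger spaces leave room for many more directions at the same level of interference.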

See e.g. "So I think backpropagation is probably much more efficient than what we have in the brain." from https://www.therobotbrains.ai/geoff-hinton-transcript-part-one

More generally, I think the belief that there's some kind of important advantage that cutting edge AI systems have over humans comes more from human-AI performance comparisons e.g. GPT-4 way outstrips the knowledge about the world of any individual human in terms of like factual understanding (though obv deficient in other ways) with probably 100x less params. A bioanchors based model of AI...

Not totally sure but I think it's pretty likely that scaling gets us to AGI, yeah. Or more particularly, gets us to the point of AIs being able to act as autonomous researchers or act as high (>10x) multipliers on the productivity of human researchers, which seems like the key moment of leverage for deciding how the development of AI will go.

Don't have a super clean idea of what self-reflective thought means. I see that e.g. GPT-4 can often say something, think further about it, and then revise its opinion. I would expect a little bit of extra reasoning quality and general competence to push this ability a lot further.

1 line summary is that NNs can transmit signals directly from any part of the network to any other, while the brain has to work only locally.

More broadly I get the sense that there's been a bit of a shift in at least some parts of theoretical neuroscience from understanding how we might be able to implement brain-like algorithms to understanding how the local algorithms that the brain uses might be able to approximate backprop, suggesting that artificial networks might have an easier time than the brain and so it would make sense that we could make something w...

Hi Scott, thanks for this!

Yes I did do a fair bit of literature searching (though maybe not enough tbf) but very focused on sparse coding and approaches to learning decompositions of model activation spaces rather than approaches to learning models which are monosemantic by default which I've never had much confidence in, and it seems that there's not a huge amount beyond Yun et al's work, at least as far as I've seen.

Still though, I've seen almost none of these, which suggests a big hole in my knowledge, and in the paper I'll go through and add a lot more background on attempts to make more interpretable models.

Yeah, I agree that that's a reasonable concern, but I'm not sure what they could possibly discuss about it publicly. If the public, legible, legal structure hasn't changed, and the concern is that the implicit dynamics might have shifted in some illegible way, what could they say publicly that would address that? Any sort of "Trust us, we're super good at managing illegible implicit power dynamics." would presumably carry no information, no?

Would be interested to hear from Anthropic leadership about how this is expected to interact with previous commitments about putting decision making power in the hands of their Long-Term Benefit Trust.

I get that they're in some sense just another minority investor but a trillion-dollar company having Anthropic be a central plank in their AI strategy with a multi-billion investment and a load of levers to make things difficult for the company (via AWS) is a step up in the level of pressure to aggressively commercialise.

From the announcement, they said (https://twitter.com/AnthropicAI/status/1706202970755649658):

As part of the investment, Amazon will take a minority stake in Anthropic. Our corporate governance remains unchanged and we’ll continue to be overseen by the Long Term Benefit Trust, in accordance with our Responsible Scaling Policy.

Hi Charlie, yep it's in the paper - but I should say that we did not find a working CUDA-compatible version and used the scikit version you mention. This meant that the data volumes used are somewhat limited - still on the order of a million examples but 10-50x less than went into the autoencoders.

It's not clear whether the extra data would provide much signal since it can't learn an overcomplete basis and so has no way of learning rare features but it might be able to outperform our ICA baseline presented here, so if you wanted to give someone a project of making that available, I'd be interested to see it!

Try decomposing the residual stream activations over a batch of inputs somehow (e.g. PCA). Using the principal directions as activation addition directions, do they seem to capture something meaningful?

It's not PCA but we've been using sparse coding to find important directions in activation space (see original sparse coding post, quantitative results, qualitative results).

We've found that they're on average more interpretable than neurons and I understand that @Logan Riggs and Julie Steele have found some effect using them as directions for activation pat...

Hi, nice work! You mentioned the possibility of neurons being the wrong unit. I think that this is the case and that our current best guess for the right unit is directions in the output space, ie linear combinations of neurons.

We've done some work using dictionary learning to find these directions (see original post, recent results) and find that with sparse coding we can find dictionaries of features that are more interpretable than the neuron basis (though they don't explain 100% of the variance).

We'd be really interested to see how this compares to ne...

I still don't quite see the connection - if it turns out that LLFC holds between different fine-tuned models to some degree, how will this help us interpolate between different simulacra?

Is the idea that we could fine-tune models to only instantiate certain kinds of behaviour and then use LLFC to interpolate between (and maybe even extrapolate between?) different kinds of behaviour?

Importantly, this policy would naturally be highly specialized to a specific reward function. Naively, you can't change the reward function and expect the policy to instantly adapt; instead you would have to retrain the network from scratch.

I don't understand why standard RL algorithms in the basal ganglia wouldn't work. Like, most RL problems have elements that can be viewed as homeostatic - if you're playing boxcart then you need to go left/right depending on position. Why can't that generalise to seeking food iff stomach is empty? Optimizing for a speci...

On first glance I thought this was too abstract to be a useful plan but coming back to it I think this is promising as a form of automated training for an aligned agent, given that you have an agent that is excellent at evaluating small logic chains, along the lines of Constitutional AI or training for consistency. You have training loops using synthetic data which can train for all of these forms of consistency, probably implementable in an MVP with current systems.

The main unknown would be detecting when you feel confident enough in the alignment of its ...

Do you have a writeup of the other ways of performing these edits that you tried and why you chose the one you did?

In particular, I'm surprised by the method chosen for adding the activations, because the tokens of the different prompts don't line up with each other in the way I would have thought necessary for this approach to work. Super interesting to me that it does.

If I were to try and reinvent the system after just reading the first paragraph or two I would have done something like:

- Take multiple pairs of prompts that differ primari

Yeah I agree it's not in human brains, not really disagreeing with the bulk of the argument re brains but just about whether it does much to reduce foom %. Maybe it constrains the ultra fast scenarios a bit but not much more imo.

"Small" (ie << 6 OOM) jump in underlying brain function from current paradigm AI -> Gigantic shift in tech frontier rate of change -> Exotic tech becomes quickly reachable -> YudFoom

The key thing I disagree with is:

...In some sense the Foom already occurred - it was us. But it wasn't the result of any new feature in the brain - our brains are just standard primate brains, scaled up a bit[14] and trained for longer. Human intelligence is the result of a complex one time meta-systems transition: brains networking together and organizing into families, tribes, nations, and civilizations through language. ... That transition only happens once - there are not ever more and more levels of universality or linguistic programmability. AGI does

I think strategically, only automated and black-box approaches to interpretability make practical sense to develop now.

Just on this, I (not part of SERI MATS but working from their office) had a go at a basic 'make ChatGPT interpret this neuron' system for the interpretability hackathon over the weekend. (GitHub)

While it's fun, and managed to find meaningful correlations for 1-2 neurons / 50, the strongest takeaway for me was the inadequacy of the paradigm 'what concept does neuron X correspond to'. It's clear (no surprise, but I'd never had it shoved in m...

Agree that it's super important, would be better if these things didn't exist but since they do and are probably here to stay, working out how to leverage their own capability to stay aligned rather than failing to even try seems better (and if anyone will attempt a pivotal act I imagine it will be with systems such as these).

Only downside I suppose is that these things seem quite likely to cause an impactful but not fatal warning shot which could be net positive, v unsure how to evaluate this consideration.

0.2 OOMs/year is equivalent to a doubling time of 8 months.

I think this is wrong: a doubling time of 8 months is nearly 8 doublings in 5 years, whereas 0.2 OOMs/year is one OOM every 5 years, so it should instead be doubling every 5 / log2(10) = 1.5.. years.
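The conversion between OOMs/year and doubling time can be checked in a few lines; the function names here are just illustrative:

```python
import math

def doubling_time_years(ooms_per_year):
    """Years per doubling for a given growth rate in orders of magnitude/year."""
    return math.log10(2) / ooms_per_year

def ooms_per_year(doubling_time):
    """Growth rate in OOMs/year for a given doubling time in years."""
    return math.log10(2) / doubling_time

slow = doubling_time_years(0.2)        # same as 5 / log2(10), about 1.5 years
doublings_in_5y = 5 / (8 / 12)         # 8-month doubling: 7.5 doublings in 5 years
fast = ooms_per_year(8 / 12)           # 8-month doubling: about 0.45 OOMs/year
print(f"{slow:.2f} years, {doublings_in_5y:.1f} doublings, {fast:.2f} OOMs/year")
```

So an 8-month doubling time corresponds to a bit more than twice the stated 0.2 OOMs/year.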

I think pushing GPT-4 out to 2029 would be a good level of slowdown from 2022, but assuming that we could achieve that level of impact, what's the case for having a fixed exponential increase? Is it to let off some level of 'steam' in the AI industry? So that we can still get AGI in our lifetimes? To make it seem more reasonable to polic...

Thoughts:

- Seems like useful work.

- With RLHF I understand that when you push super hard for high reward you end up with nonsense results so you have to settle for quantilization or some such relaxation of maximization. Do you find similar things for 'best incorporates the feedback'?

- Have we really pushed the boundaries of what language models giving themselves feedback is capable of? I'd expect SotA systems are sufficiently good at giving feedback, such that I wouldn't be surprised that they'd be capable of performing all steps, including the human feedback, i

OpenAI would love to hire more alignment researchers, but there just aren’t many great researchers out there focusing on this problem.

This may well be true - but it's hard to be a researcher focusing on this problem directly unless you have access to the ability to train near-cutting edge models. Otherwise you're going to have to work on toy models, theory, or a totally different angle.

I've personally applied for the DeepMind scalable alignment team - they had a fixed, small available headcount which they filled with other people who I'm sure were bette...

Your first link is broken :)

My feeling with the posts is that given the diversity of situations for people who are currently AI safety researchers, there's not likely to be a particular key set of understandings such that a person could walk into the community as a whole and know where they can be helpful. This would be great but being seriously helpful as a new person without much experience or context is just super hard. It's going to be more like here are the groups and organizations which are doing good work, what roles or other things do they need now...

From the OpenAI report, they also give 9% as the no-tool pass@1: