All of J Bostock's Comments + Replies

Why do you think gas will accumulate close to the shell? This is not how gases work, the gas will form an equilibrium density gradient with zero free energy to exploit.

Actually I think this fails for ordinary reasons. Key question: how are you getting energy out of lowering the helium?

- If you mean the helium is chemically bound to the sheets (through adsorption) then you'll need to use energy to release it

- If you mean the helium is trapped in balloons, then it will be neutrally buoyant in the ambient helium atmosphere unless you expend energy to compress it.

These are interesting, but I think you're fundamentally misunderstanding how power works in this case. The main questions are not "Which intellectual frames work?" but "What drives policy?". For the Democrats in the US, it's often The Groups: a loose conglomeration of dozens to hundreds of different think-tanks, nonprofits, and other orgs. These in turn are influenced by various sources including their own donors but also including academia. This lets us imagine a chain of events like:

- Serious Academic Papers are published arguing that AGI is an extinction

I am a bit cautious of dismissing all of those ideas out-of-hand; while I am tempted to agree with you, I don't know of a strong case that these words definitely don't (or even probably don't point) to anything in the real world. Therefore, while I can't see a consistent, useful definition of them, it's still possible that one exists (c.f. Free Will which people often get confused about, but for which there exists a pretty neat solution) so it's not impossible that any given report contains a perfectly satisfying model which explains my own moral intuition...

You seem to be arguing "your theory of moral worth is incomplete, so I don't have to believe it". Which is true. But without presenting a better or even different theory of moral worth, it seems like you're mostly just doing that because you don't want to believe it.

I would overall summarize my views on the numbers in the RP report as "These provides zero information, you should update to where you would be before you read them." Of course you can still update on the fact that different animals have complex behaviour, but then you'll have to make the case ...

For your second part, whoops! I meant to include a disclaimer that I don't actually think BB is arguing in bad faith, just that his tactics cash out to being pretty similar to lots of people who are, and I don't blame people for being turned off by it.

On some level, yes it is impossible to critique another person's values as objectively wrong, utility functions in general are not up for grabs.

If person A values bees at zero, and person B values them at equivalent to humans, then person B might well call person A evil, but that in and of itself is a subjective (and let's be honest, social) judgement aimed at person A. When I call people evil, I'm attempting to apply certain internal and social labels onto them in order to help myself and others navigate interactions with them, as well as create better de...

I did a spot check since bivalves are filter feeders and so can accumulate contaminants more than you might expect. Mussels and oysters are both pretty low in mercury, hopefully this extends to other contaminants.

But if you instead start by addressing things like job risks, deepfakes, concentration of power and the totalitarianism, tangible real issues people can see now, they may begin to open that door and then be more susceptible to discussing and acting on existential risk because they have the momentum behind them.

I spent approximately a year at PauseAI (Global) soul-searching over this, and I've come to the conclusion that this strategy is not a good idea. This is something I've changed my mind about.

My original view was something like:

"If we conv...

How would you recommend pushing this book's pile of memes in China? My first thought would be trying to organize some conference with non-Chinese and (especially) Chinese experts, the kinds who advise the government, centered around the claims of the book. I don't know how the CCP would view this though, I'm not an expert on Chinese internal politics.

I think the question is less "Why do we think that the objective comparison between these things should be anchored on neuron count?" And more like "How do we even begin to make a subjective value judgement between these things".

In that case, I would say that when an animal is experiencing pleasure/pain, that probably takes the form of information in the brain. Information content is roughly equivalent to neuron count. All I can really say is that I want less suffering-like information processing in the universe.

Thanks for the clarification, that's good to hear.

Our funder wishes to remain anonymous for now.

This is suspicious. There might be good reasons, but given the historical pattern of:

- Funder with poor track record on existential safety funds new "safety" lab

- "Safety" lab attracts well-intentioned talent

- "Safety" lab makes everything worse

I'm worried that your funder is one of the many, many people with financial stakes in capabilities and reputational stakes in pretending to look good. The specific research direction does not fill me with hope on this front, as it kinda seems like something a frontier lab might want to fund.

We are open to feedback that might convince us to focus on these directions instead of monitorability.

What is the theory of impact for monitorability? It seems to be an even weaker technique than mechanistic interpretability, which has at best a dubious ToI.

Since monitoring is pretty superficial, it doesn't give you a signal which you can use to optimize the model directly (that would be The Most Forbidden Technique) in the limit of superintelligence. My take on monitoring is that at best it allows you to sound an alarm if you catch your AI doing something...

I think there's a couple of missing pieces here. Number 1 is that reasoning LLMs can be trained to be very competent in rewardable tasks, such that we can generate things which are powerful actors in the world, but we still can't get them to follow the rules we want them to follow.

Secondly, if we don't stop now, seems like the most likely outcome is we just die. If we do stop AI development, we can try and find another way forward. Our situation is so dire we should stop-melt-catch-fire on the issue of AI.

I think it's the other way around. If you try to implement computation in superposition in a network with a residual stream, you will find that about the best thing you can do with the Wout is often to just use it as a global linear transformation. Most other things you might try to do with it drastically increases noise for not much pay-off. In the cases where networks are doing that, I would want SPD to show us this global linear transform.

What do you mean by "a global linear transformation" as in what kinds of linear transformations are there other than...

I went back to read Compressed Computation is (probably) not Computation in Superposition more thoroughly, and I can see that I've used "superposition" in a way which is less restrictive than the one which (I think) you use. Every usage of "superposition" in my first comment should be replaced with "compressed computation".

...Networks have non-linearities. SPD will decompose you a matrix into a single linear transformation if what the network is doing with that matrix really is just applying one global linear transformation. If e.g. there are non-linearities

This is also just not really true. Natural Selection (as opposed to genetic drift) can maintain genetic variations especially for things like personality, due to the fact that "optimal" behavioural strategies depend on what others are doing. Any monoculture of behavioural strategies is typically vulnerable to invasion by a different strategy. The equilibrium position is therefore mixed. It's more common for this to occur due to genetic variation than due to each individual using a mixed strategy.

Furthermore, humans have undergone rapid environmental change in recent history, which will have selected for lots of different behavioural traits at different times. So we're not even at equilibrium.

I generally expect the authors of this paper to produce high-quality work, so my priors are on me misunderstanding something. Given that:

I don't see how this method does anything at all, and the culprit is the faithfulness loss.

As you have demonstrated, if a sparse, overcomplete set of vectors is subject to a linear transformation, then SPD's decomposition basically amounts to the whole linear transformation. The only exception is if your sparse basis is not overcomplete in the larger of the two dimensions, in which case SPD finds that basis in the smaller...

https://threadreaderapp.com/thread/1925593359374328272.html

Reading between the lines here, Opus 4 was RLed by repeated iterating and testing. Seems like they had to hit it fairly (for Anthropic) hard with the "Identify specific bad behaviors and stop them" technique.

Relatedly: Opus 4 doesn't seem to have the "good vibes" that Opus 3 had.

Furthermore, this (to me) indicates that Anthropic's techniques for model "alignment" are getting less elegant and sophisticated over time, since the models are getting smarter---and thus harder to "align"---faster than Ant...

No need to invoke epigenetics, the answer is that 2 is false. Who is claiming 2 to be the case?

Humans clearly have large genetic variation in physical traits, why would mental traits be an exception?

Do you have plans in place for music? I'm a rather decent music writer in the domain of video-game-ish music. I can certainly do better than AI generated music, I think AI music generation is really quite bad at the moment. Music with solid/catchy themes can do wonders for the experience---and popularity!

(plus honestly it's been a while since I've gotten to write anything and I'd enjoy doing something creative with a purpose)

I strong-upvoted this post, because this is exactly The Content I Want Here. I think the point of rationality is to apply The Methods until they become effortless; the major value proposition of a rationality community is to be in a place where people apply The Methods to everything. Seeing others apply The Methods by default causes our socially driven brains to do the same. Super-ego, or Sie if you like TLP. Everything includes Love Island. One of my favourite genres of post is "The Methods applied to unusual thing".

Median party conversation is probably about as good as playing a video game I enjoy, or reading a good blog post. Value maybe £2/hr. More tiring than the equivalent activity.

Top 10% party conversation is somewhere around going for a hike somewhere very beautiful near to where I live, or watching an excellent film. Value maybe £5/hr. These are about as tiring as the equivalent activity.

Best conversations I've ever had were on par with an equal amount of time spent on a 1/year quality holiday, like to Europe (I live in the UK) but not to, say, Madagascar. Mo...

That is a fair point.

I think we can go further than this with distillation. One question I have is this: if you distil from a model which is already 'aligned' do you get an 'aligned' model out of it?

Can you use this to transfer 'alignment' from a smaller teacher to a larger student, then do some RL to bring the larger model up in performance. This would get around the problem we currently have, where labs have to first make a smart unaligned model, then try and wrestle it into shape.

Hypothesis: one type of valenced experience---specifically valenced experience as opposed to conscious experience in general, which I make no claims about here---is likely to only exist in organisms with the capability for planning. We can analogize with deep reinforcement learning: seems like humans have a rapid action-taking system 1 which is kind of like Q-learning, it just selects actions; we also have a slower planning-based system 2, which is more like value learning. There's no reason to assign valence to a particular mental state if you're not able to imagine your own future mental states. There is of course moment-to-moment reward-like information coming in, but that seems to be a distinct thing to me.

I prefer Opus 3's effort to Opus 4's. I have found Opus 4 to be missing quite a bit of the Claude charm and skill. Anthropic have said it went through a lot of rounds of RL to stop it being deceptive and scheming. Perhaps their ability to do light-touch RL that gets models to be have but doesn't mode collapse the model too much doesn't extend to this capability level.

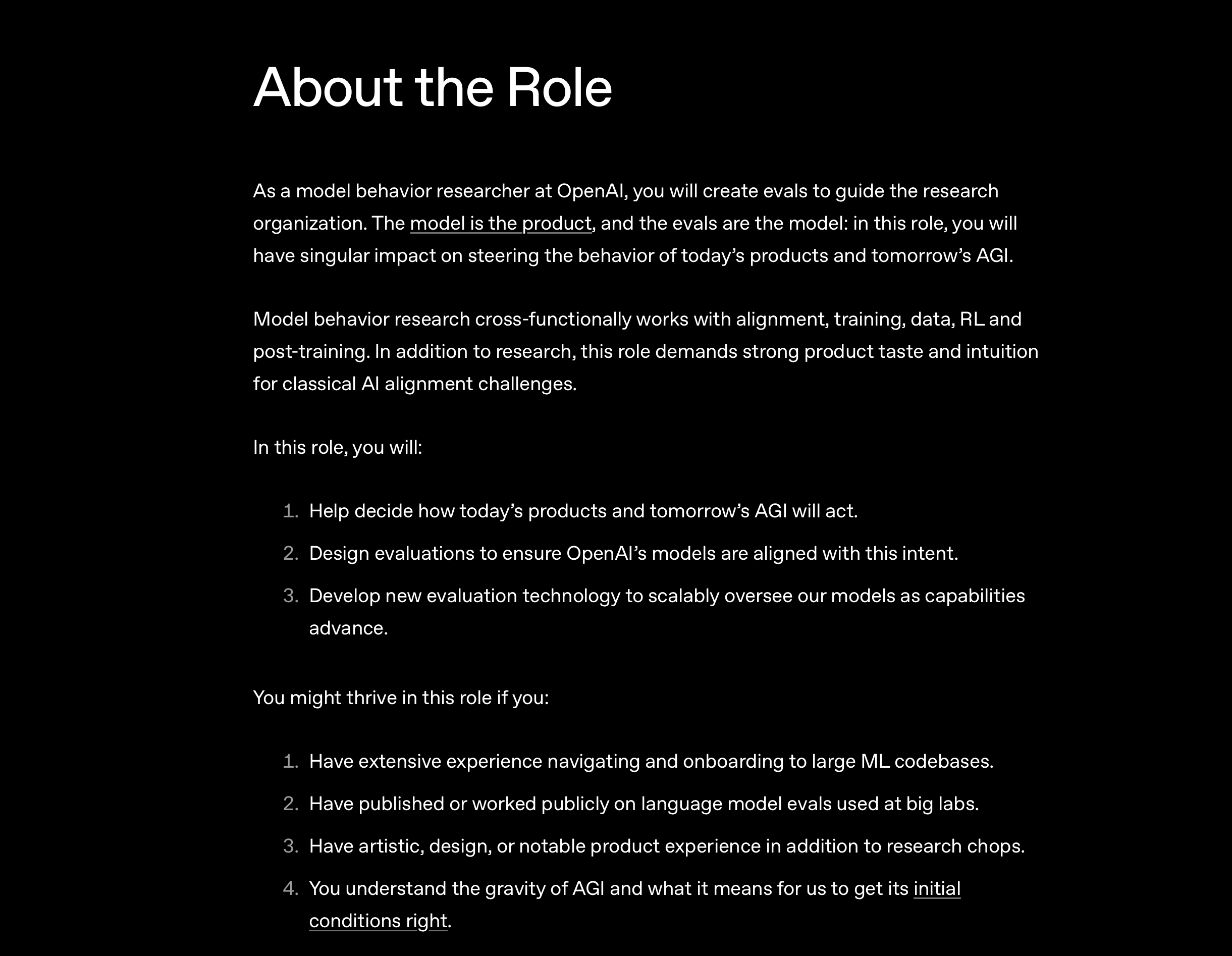

The latest recruitment ad from Aiden McLaughlin tells a lot about OpenAI's internal views on model training:

My interpretation of OpenAI's worldview, as implied by this, is:

- Inner alignment is not really an issue. Training objectives (evals) relate to behaviour in a straightforward and predictable way.

- Outer alignment kinda matters, but it's not that hard. Deciding the parameters of desired behaviour is something that can be done without serious philosophical difficulties.

- Designing the right evals is hard, you need lots of technical skill and high taste to ma

There's two parts here.

- Are people using escalating hints to express romantic/sexual interest in general?

- Does it follow the specific conversational patterns usually used?

1 is true in my experience, while 2 usually isn't. I can think of two examples where I've flirted by escalating signals. In both cases it was more to do with escalating physical touch and proximity, though verbal tone also played a part. I would guess that the typical examples of 2 you normally see (like A complimenting B's choice of shoes, then the B using a mild verbal innuendo, then A ma...

Something I've found really useful is to give Claude a couple of examples of Claude-isms (in my case "the key insight" and "fascinating") and say "In the past, you've over-used these phrases: [phrases] you might want to cut down on them". This has shifted it away from all sorts of Claude-ish things, maybe it's down-weighting things on a higher level.

Even if ~all that pausing does is delay existential risk by 5 years, isn't that still totally worth it? If we would otherwise die of AI ten years from now, then a pause creates +50% more value in the future. Of course it's a far cry from all 1e50 future QALYs we maybe could create, but I'll take what I can get at this point. And a short-termist view would hold that even more important.

I appreciate your analysis. It's was fun to try my best and then check your comments for the real answer, moreso than just getting it from the creator.

OK: so, based on doing a bunch of calibration plots, mutual information plots, and two-way scatter plots to compare candidates, this is what I have.

Candidate 11 is the best choice. 7 and 34 are my second choices, though 19 also looks pretty good.

Holly gives the most information, she's the best predictor overalll, followed by Ziqual. Amy is literally useless. Colleen and Linestra are equivalent. Holly and Ziqual both agree on candidate 11, so I'll choose them.

Interestingly, some choosers like to rank clusters of individuals at exactly the same value, and it

Heuristic explanation for why MoE gets better at higher model size:

The input/output of a feedforward layer is equal to the model_width, but the total size of weights grows as model_width squared. Superposition helps explain how a model component can make the most use of its input/output space (and presumably its parameters) using sparse overcomplete features, but in the limit, the amount of information accessed by the feedforward call scales with the number of active parameters. Therefore at some point, more active parameters won't scale so well, since you're "accessing" too much "memory" in the form of weights, and overwhelming your input/output channels.

My understanding was that diffusion refers to a training objective, and isn't tied to a specific architecture. For example OpenAI's Sora is described as a diffusion transformer. Do you mean you expect diffusion transformers to scale worse than autoregressive transformers? Or do you mean you don't think this model is a transformer in terms of architecture.

Are you American? Because as a British person I would say that the first version looks a lot better to me, and certainly fits the standards for British non-fiction books better.

Though I do agree that the subtitle isn't quite optimal.

I am British. I'm not much impressed by either graphic design, but I'm not a graphic designer and can't articulate why.

Out of domain (i.e. on a different math benchmark) the RLed model does better at pass@256, especially when using algorithms like RLOO and Reinforce++. If there is a crossover point it is in the thousands. (Figure 7)

This seems critically important. Production models are RLed on hundreds to thousands of benchmarks.

We should also consider that, well, this result just doesn't pass the sniff test given what we've seen RL models do. o3 is a lot better than o1 in a way which suggests that RL budgets do scale heavily with xompute, and o3 if anything is better at s...

OK so some further thoughts on this: suppose we instead just partition the values of directly by something like a clustering algorithm, based on in space, and take just be the cluster that is in:

Assuming we can do it with small clusters, we know that is pretty small, so is also small.

And if we consider , this tells us that learning restricts us to a pretty small region of space (since ...

Too Early does not preclude Too Late

Thoughts on efforts to shift public (or elite, or political) opinion on AI doom.

Currently, it seems like we're in a state of being Too Early. AI is not yet scary enough to overcome peoples' biases against AI doom being real. The arguments are too abstract and the conclusions too unpleasant.

Currently, it seems like we're in a state of being Too Late. The incumbent players are already massively powerful and capable of driving opinion through power, politics, and money. Their products are already too useful and ubiquitous t...

Under this formulation, FEP is very similar to RL-as-inference. But RL-as-inference is a generalization of a huge number of RL algorithms from Q-learning to LLM fine-tuning. This does kind of make sense if we think of FEP as a just a different way of looking at things, but it doesn't really help us narrow down the algorithms that the brain is actually using. Perhaps that's actually all FEP is trying to do though, and Friston has IIRC said things to that effect---that FEP is just a reframing/generalization and not an actual model of the underlying algorithms being employed.

This seems not to be true assuming a P(doom) of 25% and a purely selfish perspective, or even a moderately altruistic perspective which places most of its weight on, say, the person's immediate family and friends.

Of course any cryonics-free strategy is probably dominated by that same strategy plus cryonics for a personal bet at immortality, but when it comes to friends and family it's not easy to convince people to sign up for cryonics! But immortality-maxxing for one's friends and family almost definitely entails accelerating AI even at pretty high P(doom...

Huh, I had vaguely considered that but I expected any terms to be counterbalanced by terms, which together contribute nothing to the KL-divergence. I'll check my intuitions though.

I'm honestly pretty stumped at the moment. The simplest test case I've been using is for and to be two flips of a biased coin, where the bias is known to be either or with equal probability of either. As varies, we want to swap from to the trivial case ...

I've been working on the reverse direction: chopping up by clustering the points (treating each distribution as a point in distribution space) given by , optimizing for a deterministic-in- latent which minimizes .

This definitely separates and to some small error, since we can just use to build a distribution over which should approximately separate and .

To show that it's deterministic in (and by sy...

Is the distinction between "elephant + tiny" and "exampledon" primarily about the things the model does downstream? E.g. if none of the fifty dimensions of our subspace represent "has a bright purple spleen" but exampledons do, then the model might need to instead produce a "purple" vector as an output from an MLP whenever "exampledon" and "spleen" are present together.

Just to clarify, do you mean something like "elephant = grey + big + trunk + ears + African + mammal + wise" so to encode a tiny elephant you would have "grey + tiny + trunk + ears + African + mammal + wise" which the model could still read off as 0.86 elephant when relevant, but also tiny when relevant.

I think you should pay in Counterfactual Mugging, and this is one of the newcomblike problem classes that is most common in real life.

Example: you find a wallet on the ground. You can, from least to most pro social:

- Take it and steal the money from it

- Leave it where it is

- Take it and make an effort to return it to its owner

Let's ignore the first option (suppose we're not THAT evil). The universe has randomly selected you today to be in the position where your only options are to spend some resources to no personal gain, or not. In a parallel universe, perhaps...

I have added a link to the report now.

As to your point: this is one of the better arguments I've heard that welfare ranges might be similar between animals. Still I don't think it squares well with the actual nature of the brain. Saying there's a single suffering computation would make sense if the brain was like a CPU, where one core did the thinking, but actually all of the neurons in the brain are firing at once and doing computations in at the same time. So it makes much more sense to me to think that the more neurons are computing some sort of suffering, the greater the intensity of suffering.

OK, so the issue here is that you've switched from a thermodynamic model of the gas atoms near the asteroid to one which ignores temperature at the shell. I'm not going to spend any more time on this because while it is fun, it's not a good use of time.

One of the properties of the second law is that if you can't find a single step in your mechanism which violates it, then the mechanism overall cannot violate it. Since you claim that every step in the process obeys the second law, the entire process must obey the second law. Even if I can't find the error I can say with near-certainty that there is one.