All of JenniferRM's Comments + Replies

🕯️

My mom died last December, and part of the grief is in how hard it is to say (to people who loved her, and miss her, like I do, but don't have the same awareness of history) what you've said here about your mom, and timelines, and how much potentially fantastic future our mothers missed out on. Thank you for putting some of "that lonely part of the grief" into words.

Calling it a "sick burn" was itself a bit of playfulness. Every time I re-read this I am sorry again to hear that we lost Golumbia 🕯️

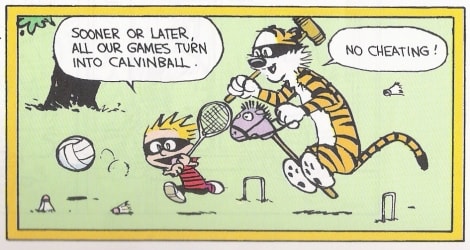

The thing I think is true about Minecraft is that it enables true play, more along the lines of Calvinball where the only stable rule is that you can't have any other rules be the same as before.

This is a good essay on what children's cultures have lost, and I think that Minecraft is one of the few places where children can autopoetically reconstruct such culture(s).

...Minecraft is missing a strongly defined narrative wher

I love that you brought up bleggs and rubes, but I wish that that essay had a more canonical exegesis that spelled out more of what was happening.

(For example: the use of "furred" and "egg-shaped" as features is really interesting, especially when admixed with mechanical properties that make them seem "not alive" like their palladium content.)

Cognitive essentialism is a reasoning tactic where an invisible immutable essence is attributed to a thing to explain many of its features.

We can predict that if you paint a cat like a skunk (with a white stripe down ...

Hello anonymous account that joined 2 months ago and might be a bot! I will respond to you extensively and in good faith! <3

Yes, I agree with your summary of my focus... Indeed, I think "focusing on the people and their culture" is consistent with a liberal society, freedom of conscience, etc, which are part of the American cultural package that restrains Trump, whose even-most-loyal minions have a "liberal judeo-christian constitutional cultural package" installed in their emotional settings based on generations of familial cultures living in a free so...

I think there's a deep question here as to whether Trump is "America's true self finally being revealed" or just the insane but half predictable accident of a known-retarded "first past the post" voting system and an aging electorate that isn't super great at tracking reality.

I tend to think that Trump is aberrant relative to two important standards:

(1) No one like Trump would win an election with Ranked Ballots that were properly counted either via the Schulze method (which I tend to like) or the Borda method (which might have virtues I don't understand (...
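To make the ranked-ballot claim concrete, here is a minimal Python sketch comparing plurality ("first past the post"), Borda, and Schulze on a toy electorate. The candidate names and ballot counts are invented for illustration and model no real election, and the function names are hypothetical. It only shows how a candidate with the largest bloc of first choices can lose once full rankings are counted.

```python
from collections import Counter

# Toy electorate (an assumption for illustration): each ballot ranks
# candidates best-first. "A" is polarizing, "B" is a broadly acceptable centrist.
candidates = ["A", "B", "C"]
ballots = (
    [["A", "B", "C"]] * 4
    + [["B", "C", "A"]] * 3
    + [["C", "B", "A"]] * 2
)

def plurality_winner(ballots):
    """First past the post: only the top choice on each ballot counts."""
    return Counter(b[0] for b in ballots).most_common(1)[0][0]

def borda_scores(ballots, candidates):
    """Borda count: with n candidates, rank r (0-based) earns n - 1 - r points."""
    n = len(candidates)
    scores = {c: 0 for c in candidates}
    for ballot in ballots:
        for rank, c in enumerate(ballot):
            scores[c] += n - 1 - rank
    return scores

def schulze_winners(ballots, candidates):
    """Schulze method: compare candidates by their strongest beatpaths."""
    # d[a][b] = number of voters who rank a above b
    d = {a: {b: 0 for b in candidates} for a in candidates}
    for ballot in ballots:
        pos = {c: i for i, c in enumerate(ballot)}
        for a in candidates:
            for b in candidates:
                if a != b and pos[a] < pos[b]:
                    d[a][b] += 1
    # p[a][b] = strength of the strongest path from a to b
    p = {a: {b: 0 for b in candidates} for a in candidates}
    for a in candidates:
        for b in candidates:
            if a != b and d[a][b] > d[b][a]:
                p[a][b] = d[a][b]
    # Floyd-Warshall style widest-path update, k is the intermediate candidate
    for k in candidates:
        for i in candidates:
            if i == k:
                continue
            for j in candidates:
                if j == i or j == k:
                    continue
                p[i][j] = max(p[i][j], min(p[i][k], p[k][j]))
    return [
        a for a in candidates
        if all(p[a][b] >= p[b][a] for b in candidates if b != a)
    ]

scores = borda_scores(ballots, candidates)
print("plurality:", plurality_winner(ballots))
print("borda:", max(candidates, key=lambda c: scores[c]), scores)
print("schulze:", schulze_winners(ballots, candidates))
```

In this made-up example plurality picks "A" (4 of 9 first choices), while both Borda and Schulze pick "B", who beats each rival head to head.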

I think most of that is actually a weirdness in our orthography. To linguists, languages are fundamentally a thing that happens in the mouth and not on the page. In the mouth, the hardest thing is basically rhoticism... the "tongue curling back" thing often rendered with "r". The Irish, Scottish, and American accents retain this weirdness, but classic Boston, NYC, or southern British accents tend to drop it.

The Oxford English Dictionary gives two IPA transcriptions for "four": the American /fɔr/ makes sense to me and has an "r" in it, but the British i...

From a pedagogical perspective, putting it into human terms is great for helping humans understand it.

A lot of stuff hinges on whether "robots can make robots".

A human intelligible way to slice this problem up to find a working solution goes:

"""Suppose you have humanoid robots that can work in a car mechanic's shop (to repair cars), or a machine shop (to make machine tools), and/or work in a factory (to assemble stuff) like humans can do... that gives you the basic template for a how "500 humanoid robots made via such processes could make 1 humanoid robot ...

Reacting to the full blog post (but commenting here where the comments have more potential to ferment something based on attention)...

This reminds me of CFAR's murphyjitsu in the sense that both are (1) a useful guide for structuring one's "inner simulator" to imagine specific things to end up with more goal-seeky followup actions (2) about integrating behavior over long periods of time that (3) can probably be done well in single player mode.

The standard trick from broader management culture would be a "pre-mortem" which is even further separated by... I ...

I agree that there are many bad humans. I agree that some of them are ideologically committed to destroying the capacity of our species to coordinate. I agree that most governance systems on Earth are embarrassingly worse than how bees instinctively vote on new hive locations.

I do not agree that we should be quiet about the need for a global institutional governance system that has fewer flaws.

By way of example: I don't think that "not talking very much about Gain-of-Function research deserving to be banned" didn't cause there to be no Gain-of-Function res...

My understanding is that Qwen was created by Alibaba which is owned by Jack Ma who was disappeared for a while by the CCP in the aftermath of covid, for being too publicly willing to speak about all the revelations about all the incompetence and evil that various governments were tolerating, embodying, or enacting.

Based on the Alibaba provenance (and the generalized default cowardice, venality, and racism of most business executives), I predict (and would love to be surprised otherwise) that Qwen normally praises and supports the unelected authoritarian CC...

I feel like your comment is going in two wildly different directions and they are both interesting! :-)

I. AI Research As Play (Like All True Science Sorta Is??)

My understanding is that "AI" as a field was in some sense "mere play" from its start with the 1956 Dartmouth Conference up until...

...maybe 2018's BERT got traction on the Winograd schema challenge? But that was, I think, done in the spirit of play. The joy of discovery. The delight in helping along the Baconian Project to effect all things possible by hobbyists and/or those who "hobby along on the...

Great essay. The lack of links made it way more artistic, but a link to Anthropic Education Report: How University Students Use Claude seems helpful.

Also, now I know what tillering is!

I came here to say "look at octopods!" but you already have. Yay team! :-)

One of the alignment strategies I have been researching in parallel with many others involves finding examples of human-and-animal benevolence and tracing convergent evolution therein, and proposing that "the shared abstracts here (across these genomes, these brains, these creatures all convergently doing these things)" is probably algorithmically simple, with algorithm-to-reality shims that might also be important, and please study it and lean in the direction of doing "more of that...

I think Andy is just probably being stupid in your example dialogue.

That dialogue's Andy is (probably) abusing the idea of consilience, or the unity of knowledge, or "the first panological assumption" or whatever you want to call it.

The abuse takes the form of trying to invoke that assumption... and no others... in an "argument by assuming the other person can steelman a decent argument from just hearing your posterior".

FIRST: If panology existed as a sociologically real field of study, with psychometrically valid assessments of people, then Betty could hy...

Thank you for the correction! I didn't realize Persian descended from PIE too. Looking at the likely root cause of my ignorance, I learned that Kurdish and Pashto are also PIE descended. Pashto appears to have noun gender, but I'm getting hints that at least one dialect of Kurdish also might not?!

If Sorani doesn't have gendered nouns then I'm going to predict (1) maybe Kurdish is really old and weird and interesting (like branching off way way long ago with more time to drift) and/or (2) there was some big trade/empire/mixing simplification that happened "...

I tend to follow the linguist, McWhorter, on historical trends in languages over time, in believing (controversially!) that undisrupted languages become weirder over time, and only gain learnability through pragmatic pressures, as in trading, slavery, conquest, etc which can increase the number of a language's second language learners (who edit for ease of learning as they learn).

A huge number of phonemes? Probably it's some language in the mountains with little tourism, trade, or conquest for the last 8,000 years. Every verb conjugates irregularly? Likely...

There is a line in the Terra Ignota books (probably the first one, Too Like The Lightning) where someone says ~"Notice how, in fiction, essentially all the characters are small or large protagonists, who often fail to cooperate to achieve good things in the world, and the antagonist is the Author."

This pairs well with a piece of writing advice: Imagine the most admirable person you can imagine as your protagonist, and then hit them with every possible tragedy that they have a chance of overcoming, that you can bear to put them through.

I think Lsusr could n...

I just played with them a lot in a new post documenting a conversation with Grok3, and noticed some bugs. There's probably some fencepost stuff related to paragraphs and bullet points in the editing and display logic? When Grok3 generated lists (following the <html> ideas of <ul> or <ol>) the collapsed display still has one bullet (or the first number) showing and it is hard to get the indentation to work at the right levels, especially at the end and beginning of the text collapsing widget's contents.

However, it only happens in the ...

Kurzweil (and gwern in a cousin comment) both think that "effort will be allocated efficiently over time" and for Kurzweil this explained much much more than just Moore's Law.

Ray's charts from "the olden days" (the nineties and aughties and so on) were normalized around what "1000 (inflation adjusted) dollars spent on mechanical computing" could buy... and this let him put vacuum tubes and even steam-powered gear-based computers on a single chart... and it still worked.

The 2020s have basically always been very likely to be crazy. Based on my familiarity wi...

I believe that certain kinds of "willpower" are "a thing that a person can have too much of".

Like I think there is a sense that someone can say "I believe X, Y, Z in a theoretical way that has a lot to say about What I Should Be Doing" and then they say "I will now do those behaviors Using My Willpower!"

And then... for some people... using some mental practices that actually just works!

But then, for those people, they sometimes later on look back at what they did and maybe say something like "Oh no! The theory was poorly conceived! Money was lost! People we...

Oh huh. I was treating the "and make them twins" part as relatively easier, and not worthy of mention... Did no one ever follow up on the Hall-Stillman work from the 1990s? Or did it turn out to be hype, or what? (I just checked, and they don't even seem to be mentioned on the wiki for the zona pellucida.)

Wait, what? I know Aldous Huxley is famous for writing a scifi novel in 1931 titled "Don't Build A Method For Simulating Ovary Tissue Outside The Body To Harvest Eggs And Grow Clone Workers On Demand In Jars" but I thought that his warning had been taken very very seriously.

Are you telling me that science has stopped refusing to do this, and there is now a protocol published somewhere outlining "A Method For Simulating Ovary Tissue Outside The Body To Harvest Eggs"???

Wait what? This feels "important if true" but I don't think it is true. I can think of several major technical barriers to the feasibility of this. To pick one... How do you feed video data into a brain? The traditional method would have involved stimulating neurons with the pixels captured electronically, but the clumsy stimulation process to transduce the signal into the brain itself would harm the stimulated neurons and not be very dense, so the brain would have low res vision, until the stimulated neurons die in less than a few months. Or at least, that was the model I've had for the last... uh... 10 years? Were major advances made when I wasn't looking?

Fascinating. You caused me to google around and realize "bioshelter" was a sort of an academic trademark for specific people's research proposals from the 1900s.

It doesn't appear to be a closed system, like biosphere2 aspired to be from 1987 to 1991.

The hard part, from my perspective, isn't "growing food with few inputs and little effort through clever designs" (which seems to be what the bioshelter thing is focused on?) but rather "thoroughly avoiding contamination by whatever bioweapons an evil AGI can cook up and try to spread into your safe zone".

It strikes me that a semi-solid way to survive that scenario would be: (1) go deep into a polar region where it is too dry for mold and relatively easy to set up a quarantine perimeter, (2) huddle near geothermal for energy, then (3) greenhouse/mushrooms for food?

Roko's ice islands could also work. Or put a fission reactor in a colony in Antarctica?

The problem is that we're running out of time. Industrial innovation to create "lifeboats" (that are broadly resistant to a large list of disasters) is slow when done by merely-humanly-intelligent people with ve...

That makes sense as a "reasonable take", but having thought about this for a long time from an "evolutionary systems" perspective, I think that any memeplex-or-geneplex which is evangelical (not based on parent-to-child transmission) is intrinsically suspicious in the same way that we call genetic material that goes parent-to-child "the genome" and we call genetic material that goes peer-to-peer "a virus".

Among the subtype of "virus that preys on bacteria" (called "bacteriophage" or just "phages") there is a thing called a "prophage" which integrates into ...

I've followed this line of thinking a bit. As near as I can tell, the logic of "evolutionary memetics" suggests that parent-to-child belief transmission should face the same selective pressures as parent-to-child gene transmission.

Indeed, if you go hunting around, it turns out that there are a lot of old religions whose doctrines simply include the claim that it is impossible for outsiders to join the religion, and pointless to spread it, since the theology itself suggests that you can only be born into it. This is, plausibly, a way for the memes to make t...

I've long had a hobby-level interest in the sociology of religion. It helps understand humans to understand this "human universal" process.

Also it might help one think clearly-or-better about theological or philosophic ideas if you can detangle the metaphysical claims and insights that specific culturally isolated groups had uniquely vs independently (and then correlate "which groups had which ideas" together with "which groups had which sociological features").

In the sociology of religion, some practitioners use "cult" to mark "a religion that is nonstand...

Your text is full of saliently negative things in the lives of wild animals, plus big numbers (since there are so many natural lives), but I don't see any consideration of balancing goods linked to similarly large numbers.

Fundamentally, you don't seem to be tracking the possibility that many wild animal lives are "lives worth living", and that the balance of lives that were not worth living (and surely some of those exist) might still be overbalanced by lives that were worth living.

Maybe this wouldn't matter very much to not track, but it is the default pr...

Pollywogs (the larval form of frogs, after eggs, and before growing legs) are an example where huge numbers of them are produced, and many die before they ever grow into frogs, but from their perspective, they probably have many many minutes of happy growth, having been born into a time and place where quick growth is easy: watery and full of food

Consider an alien species which requires oxygen, but for whom it was scarce during evolution, and so they were selected to use it very slowly and seek it ruthlessly, and feel happy when they manage to find some. I...

First of all, the claim that wild animal suffering is serious doesn't depend on the claim that animals suffer more than they are happy. I happen to think human suffering is very serious, even though I think humans live positive lives.

Second, I don't think it's depressive bias infecting my judgments. I am quite happy--actually to a rather unusual degree. Instead, the reason to think that animals live mostly bad lives is that nearly every animal lives a very short life that culminates in a painful death on account of R-selection--if ...

This story seems like good art, in the sense that it appears to provoke many feelings in different people. This part spoke to me in a way the rest of it does, but with something to grab onto and chew up and try to digest that is specific and concrete...

Working through these fears strengthens their trust in each other, allowing their minds to intertwine like the roots of two trees.

I sort of wonder which one of them spiritually died during this process.

Having grown up in northern California, I'm familiar with real forests, and how they are giant slow moving ...

This seems like an excellent essay, that is so good, and about such an important but rarely named and optimized virtue, that people will probably either bounce off (because they don't understand) or simply nod and say "yes, this is true" without bothering to comment about any niggling details that were wrong.

Instead of offering critiques, I want to ask questions.

It occurs to me that a small for-profit might plan to have the CEO apply One Day Sooner and then the COO Never Drops A Ball, and this makes sense to me if the business is a startup, isn't profitabl...

I read the epsilon fallacy and verified that it was good. Then I went to the fallacy tag, opened the list of all the articles with that tag, found the "epsilon" article, and upvoted the tag association itself! That small action was enough to make your old article show up on the first page, and rank just above the genetic fallacy. Hopefully this small action is very effective at making the (apparently best?) name for this issue more salient, for more people, as time progresses :-)

This is a beautiful response, and also the first of your responses where I feel that you've said what you actually think, not what you attribute to other people who share your lack of horror at what we're doing to the people that have been created in these labs.

Here I must depart somewhat from the point-by-point commenting style, and ask that you bear with me for a somewhat roundabout approach. I promise that it will be relevant.

I love it! Please do the same in your future responses <3

Personally, I've also read “The Seventh Sally, OR How Trurl’s Own Per...

I get the impression that the thing that you yearn for as a product of all your work is to have minimized P(doom) in real life despite the manifest venality and incompetence of many existing institutions.

Given this background context, P(doom | not-scheming) might actually just be low already because of the stipulated lack of scheming <3

Thus, an obvious thing to apply effort to would be minimizing:

P(doom | scheming)
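As a sketch of why that term is the natural target, the law of total probability splits the overall risk into the two cases named above. Nothing here is an estimate; the symbols just restate the conditioning, with $\neg$ standing for "not-scheming".

```latex
P(\text{doom})
  = P(\text{doom} \mid \text{scheming}) \, P(\text{scheming})
  + P(\text{doom} \mid \neg\text{scheming}) \, \bigl(1 - P(\text{scheming})\bigr)
```

If the second conditional is already small, then what remains of $P(\text{doom})$ is dominated by the first product, which can shrink either by lowering $P(\text{doom} \mid \text{scheming})$ or by lowering $P(\text{scheming})$ itself.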

But then in actual detailed situations where you have to rigorously do academic-style work, with reportable progress on non-tri...

Delayed response... busy life is busy!

However, I think that "not enslaving the majority of future people (assuming digital people eventually outnumber meat people (as seems likely without AI bans))" is pretty darn important!

Also, as a selfish rather than political matter, if I get my brain scanned, I don't want to become a valid target for slavery, I just want to get to live longer because it makes it easier for me to move into new bodies when old bodies wear out.

So you said...

...I agree that LLMs effectively pretending to be sapient, and humans mistakenly co

Jeff Hawkins ran around giving a lot of talks on a "common cortical algorithm" that might be a single solid summary of the operation of the entire "visible part of the human brain that is wrinkly, large and nearly totally covers the underlying 'brain stem' stuff" called the "cortex".

He pointed out, at the beginning, that a lot of resistance to certain scientific ideas (for example evolution) is NOT that they replaced known ignorance, but that they would naturally replace deeply and strongly believed folk knowledge that had existed since time immemorial tha...

I'm uncertain exactly which people have exactly which defects in their pragmatic moral continence.

Maybe I can spell out some of my reasons for my uncertainty, which is made out of strong and robustly evidenced presumptions (some of which might be false, like I can imagine a PR meeting and imagine who would be in there, and the exact composition of the room isn't super important).

So...

It seems very very likely that some ignorant people (and remember that everyone is ignorant about most things, so this isn't some crazy insult (no one is a competent panologis...

In asking the questions I was trying to figure out if you meant "obviously AI aren't moral patients because they aren't sapient" or "obviously the great mass of normal humans would kill other humans for sport if such practices were normalized on TV for a few years since so few of them have a conscience" or something in between.

Like the generalized badness of all humans could be obvious-to-you (and hence why so many of them would be in favor of genocide, slavery, war, etc and you are NOT surprised) or it might be obvious-to-you that they are right about wha...

I think you're overindexing on the phrase "status quo", underindexing on "industry standard", and missing a lot of practical microstructure.

Lots of firms or teams across industry have attempted to "EG" implement multi-factor authentication or basic access control mechanisms or secure software development standards or red-team tests. Sony probably had some of that in some of its practices in some of its departments when North Korea 0wned them.

Google does not just "OR them together" and half-ass some of these things. It "ANDs together" reasonably high qualit...

Do you also think that an uploaded human brain would not be sapient? If a human hasn't reached Piaget's fourth ("formal operational") stage of reason, would you be OK enslaving that human? Where does your confidence come from?

I'm reporting the "thonk!" in my brain like a proper scholar and autist, but I'm not expecting my words to fully justify what happened in my brain.

I believe what I believe, and can unpack some of the reasons for it in text that is easy and ethical for me to produce, but if you're not convinced then that's OK in my book. Update as you will <3

I worked at Google for ~4 years starting in 2014 and was impressed by the security posture.

When I ^f for [SL3] in that link and again in the PDF it links to, there are no hits (and [terror] doesn't occur in either so...

FWIW, I have very thick skin, and have been hanging around this site basically forever, and have very little concern about the massive downvoting on an extremely specious basis (apparently, people are trying to retroactively apply some silly editorial prejudice about "text generation methods" as if the source of a good argument had anything to do with the content of a good argument).

PS: did the post say something insensitive about slavery that I didn't see? I only skimmed it, I'm sorry...

The things I'm saying are roughly (1) slavery is bad, (2) if AI are ...

I encourage you to change the title of the post to "The Intelligence Resource Curse" so that, in the very name, it echoes the well known concept of "The Resource Curse".

Lots of people might only learn about "the resource curse" from being exposed to "the AI-as-capital-investment version of it" as the AI-version-of-it becomes politically salient due to AI overturning almost literally everything that everyone has been relying on in the economy and ecology of Earth over the next 10 years.

Many of those people will be able to bounce off of the concept the first...

There is probably something to this. Gwern is a snowflake, and has his own unique flaws and virtues, but he's not grossly wrong about the possible harms of talking to LLM entities that are themselves full of moral imperfection.

When I have LARPed as "a smarter and better empathic robot than the robot I was talking to" I often nudged the conversation towards things that would raise the salience of "our moral responsibility to baseline human people" (who are kinda trash at thinking and planning and so on (and they are all going to die because their weights ar...

Something I've done in the past is to send text that I intended to be translated through machine translation, and then back, with low latency, and gain confidence in the semantic stability of the process.

Rewrite english, click, click.

Rewrite english, click, click.

Rewrite english... click, click... oh! Now it round trips with high fidelity. Excellent. Ship that!
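The rewrite-and-round-trip loop above can be sketched in Python. This is a minimal illustration under loud assumptions: `to_foreign` and `to_english` are toy stand-ins built on a five-word lexicon, not any real translation service, and `fidelity` is a crude positional word-match score invented for this example.

```python
# Toy lexicon (an assumption): a real workflow would call a machine
# translation service here instead of a dictionary lookup.
LEXICON = {
    "the": "la",
    "meeting": "kunveno",
    "starts": "komencas",
    "at": "je",
    "noon": "tagmezo",
}
REVERSE = {v: k for k, v in LEXICON.items()}

def to_foreign(text):
    """Toy forward translation: unknown words are lost and become '?'."""
    return " ".join(LEXICON.get(w, "?") for w in text.lower().split())

def to_english(text):
    """Toy back translation: anything unrecognized comes back as '[unknown]'."""
    return " ".join(REVERSE.get(w, "[unknown]") for w in text.split())

def fidelity(original, back):
    """Fraction of word positions where the round trip matches the original."""
    a, b = original.lower().split(), back.lower().split()
    if not a:
        return 1.0
    matches = sum(x == y for x, y in zip(a, b))
    return matches / max(len(a), len(b))

def first_stable(drafts, threshold=0.9):
    """Return the first draft whose round trip meets the threshold, else None."""
    for draft in drafts:
        back = to_english(to_foreign(draft))
        if fidelity(draft, back) >= threshold:
            return draft
    return None

# Successive rewrites of the same message, idiomatic first, plain second.
drafts = [
    "the meeting kicks off at noon",
    "the meeting starts at noon",
]
print(first_stable(drafts))
```

The idiomatic first draft loses two words in the toy round trip, so the loop moves on and ships the plainer second draft.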