A circuit for Python docstrings in a 4-layer attention-only transformer

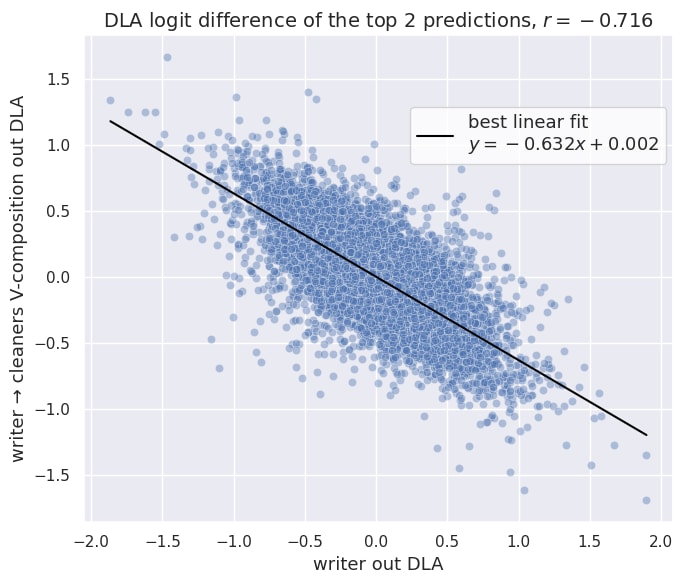

Produced as part of the SERI ML Alignment Theory Scholars Program under the supervision of Neel Nanda - Winter 2022 Cohort.

TL;DR: We found a circuit in a pre-trained 4-layer attention-only transformer language model. The circuit predicts repeated argument names in docstrings of Python functions, and it features

* 3 levels of composition,
* a multi-function head that does different things in different parts of the prompt,
* an attention head that derives positional information using the causal attention mask.

Epistemic Status: We believe that we have identified most of the core mechanics and information flow of this circuit. However, our circuit only recovers up to half of the model performance, and there are a bunch of leads we haven't followed yet.

The diagram above illustrates the circuit; skip to the Results section for the explanation. The left side shows the relevant token inputs with (a) the labels we use here (A_def, …) as well as (b) an actual prompt (load, …). The boxes show attention heads, arranged by layer and destination position, and the arrows indicate Q-, K-, or V-composition between heads or embeddings. We list three less-important heads at the bottom for better clarity.

Introduction

Click here to skip to the results & explanation of this circuit.

What are circuits

What do we mean by circuits? A circuit in a neural network is a small subset of model components and model weights that (a) accounts for a large fraction of a certain behavior and (b) corresponds to a human-interpretable algorithm. A focus of the field of mechanistic interpretability is finding and better understanding circuits, and recently the field has focused on circuits in transformer language models. Anthropic found the small and ubiquitous Induction Head circuit in various models, and a team at Redwood found the Indirect Object Identification (IOI) circuit in GPT2-small.

How we chose the candidate task

We looked for interesting behaviors in a small, attention-only

