Intro

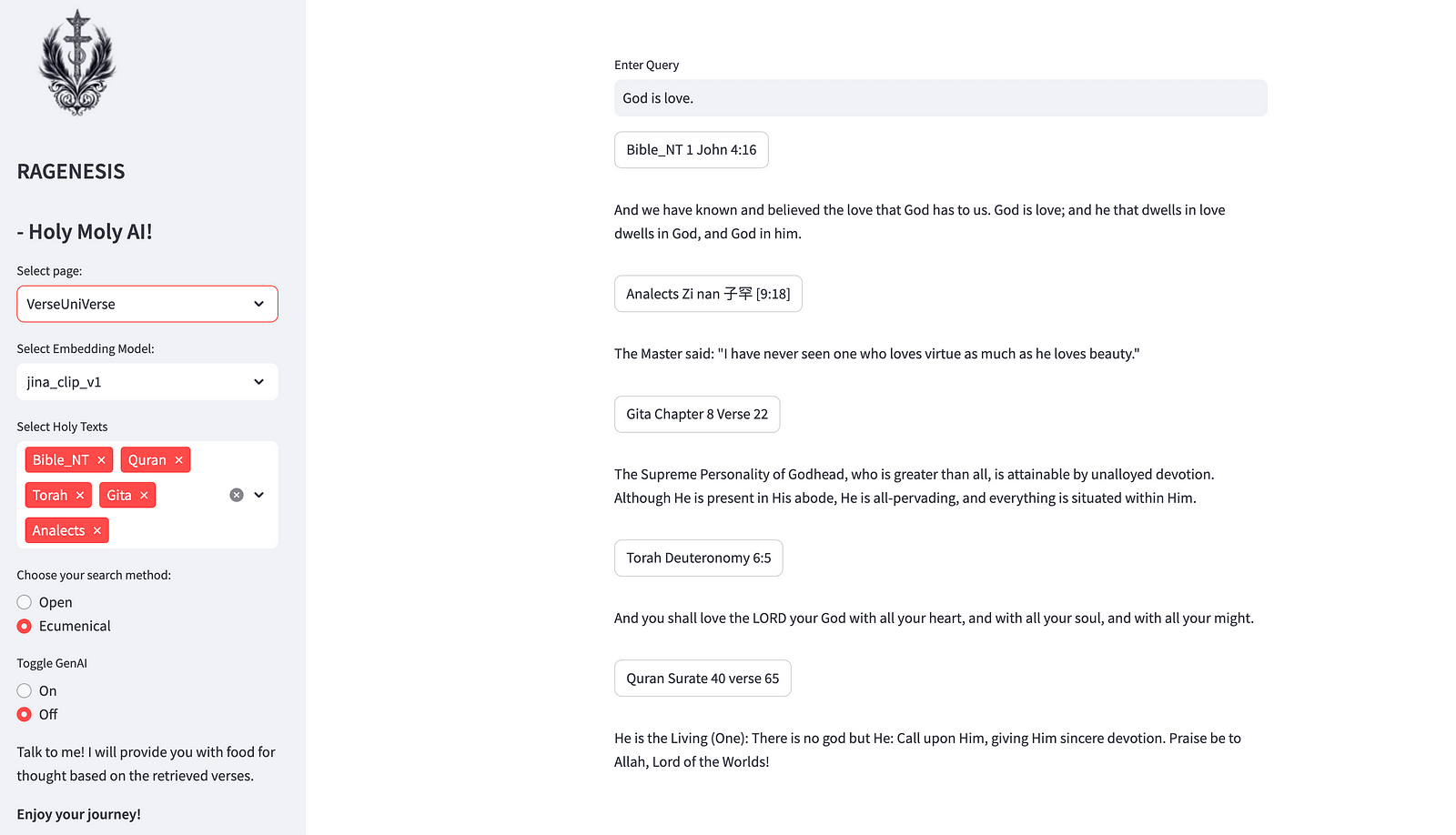

In my last post on LessWrong I shared the ragenesis.com platform, along with a general description of the thought process behind its development. Fundamentally, RAGenesis is an AI-driven search and research tool dedicated to five of the most representative holy texts in history: the Torah, the Bhagavad Gita, the Analects, the Bible's New Testament and the Quran.

Beyond that, the platform (and my previous article) explores the concept of Semantic Similarity Networks (SSN), which represent the mutual similarities between chunks in the embedded knowledge base as a graph. This follows a framework similar to Microsoft's GraphRAG, but gives the user a direct interface with the network through...
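To make the SSN idea concrete, here is a minimal sketch of how such a network could be built, assuming each chunk already has an embedding vector. It is not the RAGenesis implementation: the function names, the toy vectors, and the choice of linking each chunk to its `top_k` most cosine-similar neighbors are all illustrative assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def build_similarity_network(embeddings, top_k=2):
    """Link every chunk to its top_k most similar chunks.

    `embeddings` maps a chunk id to its vector (hypothetical format).
    Returns {chunk_id: [(neighbor_id, similarity), ...]}, sorted by
    decreasing similarity.
    """
    graph = {}
    for chunk_id, vec in embeddings.items():
        scored = [
            (other_id, cosine(vec, other_vec))
            for other_id, other_vec in embeddings.items()
            if other_id != chunk_id
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        graph[chunk_id] = scored[:top_k]
    return graph


# Toy 3-dimensional "embeddings"; real ones would come from an embedding model.
toy_embeddings = {
    "chunk_a": [1.0, 0.0, 0.0],
    "chunk_b": [0.9, 0.1, 0.0],
    "chunk_c": [0.0, 1.0, 0.0],
    "chunk_d": [0.0, 0.9, 0.1],
}

network = build_similarity_network(toy_embeddings, top_k=1)
for chunk_id, neighbors in network.items():
    print(chunk_id, "->", neighbors)
```

In this toy setup each chunk ends up linked to the neighbor pointing in nearly the same direction, which is the kind of structure a user could then browse as a graph.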

I love this! Clarity (and clearance xD [Latin pun here]) is all we need.

One addition that I think is relevant to the discussion on optimization and meta-strategizing is acknowledging a simple fact: to meta-strategize, you need to spend time on something that does not immediately return backlog completion.

So to be quicker in the future, you have to invest time in ways that will at first appear to slow you down. That needs to be underscored, given the reality of day jobs in engineering and related areas.

Recognizing the need for principles is also conditioned, in part, on disregarding the immediacy of the ungeneralized day-to-day task. That's meta-management right there.