All of Jonas Hallgren's Comments + Replies

I had some more specific thoughts on ML-specific bottlenecks that might be hard to get through with software speedups alone, but the main point is, as you say: just apply a combination of Amdahl's law, Hofstadter's law and unknown unknowns, and this starts to look a lot more like a contractor's bid on a public contract. (They're always way over budget and always take twice as long as planned.)
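To make the Amdahl's law part concrete: if only a fraction of the research loop gets faster, the untouched remainder caps the overall speedup no matter how large the software gain is. Here is a minimal Python sketch. The 70% / 10x split is a made-up illustration, not an estimate from this thread.

```python
def amdahl_speedup(accelerated_fraction: float, factor: float) -> float:
    # Amdahl's law: only the accelerated fraction of the work gets faster;
    # the remaining (1 - accelerated_fraction) still runs at the old speed.
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be in [0, 1]")
    if factor <= 0:
        raise ValueError("factor must be positive")
    return 1.0 / ((1.0 - accelerated_fraction) + accelerated_fraction / factor)


# Hypothetical numbers for illustration only: 70% of the research loop is
# software that gets 10x faster, the other 30% (e.g. compute, experiments)
# does not speed up at all.
overall = amdahl_speedup(0.7, 10.0)
ceiling = 1.0 / (1.0 - 0.7)  # limit as the software factor goes to infinity
print(f"overall speedup: {overall:.2f}x, ceiling: {ceiling:.2f}x")
# prints "overall speedup: 2.70x, ceiling: 3.33x"
```

Even an infinitely fast software component only gets you to the ceiling set by the serial 30%. Stacking a Hofstadter-style overrun on top of that lowers the realistic number further.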

Nicely put!

Yeah, I agree with that, but I still feel there's something missing from that discussion.

Like, to some degree, good planning capacity requires a good world model to plan over into the future. You then want to assign relative probabilities to your action policies working out well. To do this, having a clear self-environment boundary is quite key. So yes, memory enables in-context learning, but I do not believe that will be the largest addition; I think the fact that memory allows for more learning about self-environment boundari...

I want to ask a question here that is not necessarily related to the post but rather to your conceptualisation of the underlying reason why memory is a crux for more capabilities-style things.

I'm thinking it has to do with being able to model the boundary between itself and the environment. That enables a conception of a consistent Q-function it can apply, whereas without it there is, to some degree, almost no real object permanence and no consistent identity through time?

Memory allows you to "touch"...

I will check it out! Thanks!

I would have liked more emphasis on institutional capacity as part of the solution, but I think this is a very good way of describing a more general refocusing away from Goodharting on sub-parts of the problem.

Now that I've said something serious, I can finally comment on what I wanted to comment on:

Thanks to Ted Chiang, Toby Ord, and Hannu Rajaniemi for conversations which improved this piece.

Ted Chiang!!! I'm excited for some banger sci-fi about things more related to x-risk scenarios; that is so cool!

(You can always change the epistemic note at the top to include this! I think it might improve the probability of a disagreeing person changing their mind.)

Also, I am just surprised I seem to be the only one making this fairly obvious point (?), and it raises some questions about our group epistemics.

First and foremost, I want to acknowledge the frustration and more combative tone in this post and ask whether it is more of a pointer towards confusion about how we can be doing this so wrong?

I think more people are in a similar camp to you, but it feels really hard to change the group epistemics around this belief? It feels quite core, and even if you have longer conversations with people about underlyi...

I see your point, yet if 95% of the evidence is in the past, the 5% in the future only adds a marginal amount to it. I do still like the idea of crossing off potential filters to see where the risks are, so fair enough!

So my thinking is something like this:

- If AI systems get smart enough, they will develop an understanding of various ways of categorizing their knowledge. For humans this manifests as emotions and other things, like body language, that through theory of mind we assume we share. This means that when we communicate we can hide a lot of subtext in what we say; in other words, there are various ways of interpreting the same information signal.

- This means that there will be various hidden ways for AIs to communicate with each other.

- By sampling on somethi

Interesting!

I definitely see your point about how the incentives here are skewed. I would want to ask what you think of the claims about inductive biases and the difficulty of causal graph learning for transformers? My guess is that you could just add it on top of the base architecture as an MOA model with RL in it to solve some problems here, but it feels like people at the larger labs might not realise that at first?

Also, I wasn't only talking about GDL; there are two or three other disciplines that also have some ways they believe that AGI ...

I'm just gonna drop this video here on The Zizian Cult & Spirit of Mac Dre: 5CAST with Andrew Callaghan (#1) Feat. Jacob Hurwitz-Goodman:

https://www.youtube.com/watch?v=2nA2qyOtU7M

I have no opinions on this but I just wanted to share it as it seems highly relevant.

Looking back at the Oura data from my month-long retreat in December 2023, I don't see the reduced sleep need that much. I do remember needing around half an hour to an hour less sleep to feel rested. However, this is a relatively similar effect to doing an evening yoga nidra right before bed.

In my model, stress metrics and heart rate in the 4 hours before bed explain this better than the meditation itself?

It might be that polyphasic sleep isn't as effective as my Oura thinks, since it sometimes registers deep sleep during deep meditation. So this is inconclusive, but most likely a negative data point.

I'll just pose the mandatory comment about long-horizon reasoning capacity potentially being a problem for something like Agent-2. Delays in that part of the model produce pretty large differences in the distribution of timelines here.

"Just RL and Bitter Lesson it on top of the LLM infrastructure" is honestly a pretty good take on average, but it feels like there are a bunch of unknown unknowns there in terms of ML? There's a view that there are 2 or 3 major scientific research problems to go through at that po...

Well, I don't have a good answer but I also do have some questions in this direction that I will just pose here.

Why can't we have the utility function be some sort of lexicographical satisficer of sub-parts of itself? Why do we have to make the utility function consequentialist?

Standard answer: Because of instrumental convergence, duh.

Me: Okay, but why would instrumental convergence select for utility functions that are consequentialist?

Standard answer: Because they obviously outperform the ones that don't select for the consequences or like what do y...
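One way to make the "lexicographical satisficer of sub-parts" idea concrete is the Python sketch below. It is a hypothetical illustration, not a standard algorithm from the literature. Sub-goals are checked in priority order, each one filters the options that survived the previous one, and the function returns every option that clears the thresholds instead of taking an argmax over consequences. The `SubGoal` class, the plan fields and the thresholds are all invented for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence


@dataclass(frozen=True)
class SubGoal:
    # Hypothetical sub-part of the utility function: a score plus a
    # "good enough" threshold, rather than a quantity to maximize.
    name: str
    score: Callable[[Any], float]
    threshold: float


def lexicographic_satisfice(options: Sequence[Any],
                            subgoals: Sequence[SubGoal]) -> List[Any]:
    # Check sub-goals in priority order; each one filters the survivors of
    # the previous one. If no survivor meets a sub-goal, stop and keep the
    # previous set rather than relaxing any higher-priority filter.
    candidates = list(options)
    for goal in subgoals:
        passing = [o for o in candidates if goal.score(o) >= goal.threshold]
        if not passing:
            break
        candidates = passing
    return candidates


plans = [
    {"name": "cautious", "safety": 0.99, "helpfulness": 0.6},
    {"name": "ambitious", "safety": 0.80, "helpfulness": 0.95},
    {"name": "lazy", "safety": 0.99, "helpfulness": 0.2},
]
goals = [
    SubGoal("safety", lambda p: p["safety"], 0.95),
    SubGoal("helpfulness", lambda p: p["helpfulness"], 0.5),
]
print([p["name"] for p in lexicographic_satisfice(plans, goals)])  # ['cautious']
```

A pure helpfulness maximizer would pick "ambitious", but the satisficer drops it at the first (safety) filter and never trades safety away for more helpfulness. Keeping the previous set when a filter eliminates everything is one design choice among several.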

This is a very good point. I'm curious, however, why you chose TikTok over something like QAnon or 8chan. Is TikTok really adversarial enough to grow as a content creator?

This is absolutely fascinating to me, great post!

I would be curious if you have any thoughts about using this for steganography?

I might be misunderstanding the post, but here's what I'm thinking of:

There's some degree in which you can describe circuits or higher order ways of storing information in NNs through renormalization (or that's at least the hypothesis). Essentially you might then be able to set up a "portfolio" of different lenses that all can be correct in various ways (due to polysemanticity).

If you then have all of the reconcept...

No, we're good. I was operating under the assumption that DeepSeek was just distilling OpenAI's models, but it doesn't seem to be the only good ML company from China. There are also a bunch of really good ML researchers from China, so I agree at this point.

The policy change for LLM writing got me thinking that it would be quite interesting to write out how my own thinking process has changed as a consequence of LLMs. I'm just going to give a bunch of examples because I can't exactly pinpoint it, but it is definitely different.

Here's some background on what part it has played in my learning strategies: I read the Sequences around 5 years ago after getting into EA. I was 18 then, and it was a year or two later that I started using LLMs in a major way. To some extent this has shaped my learning patterns, for exa...

So, I've got a question about the policy. My brain is just kind of weird, so I really appreciate having Claude translate my thoughts into normal speak.

The case study is the following comments in the same comment section:

13 upvotes - written with help of Claude

1 upvote (me) - written with the help of my brain only

I'm honestly quite tightly coupled to Claude at this point; it is around 40-50% of my thinking process (which is kind of weird when I think about it?), so I don't know how to think about this policy change.

I guess a point here might also be that luck involves non-linear effects that are hard to predict, so when you're optimising for luck you need to be very conscious of not only looking at results, and instead hold a frame of playing poker or similar.

So it is not something your brain does normally, which makes it a core skill of successful strategy and intellectual humility, or something like that?

I thought I would give you another causal model based on neuroscience which might help.

I think your models are missing a core biological mechanism: nervous system co-regulation.

Most analyses of relationship value focus on measurable exchanges (sex, childcare, financial support), but overlook how humans are fundamentally regulatory beings. Our nervous systems evolved to stabilize through connection with others.

When you share your life with someone, your biological systems become coupled. This creates several important values:

- Your stress response systems syn

So I guess the point then becomes more about open-source development in other countries in general, with China being part of it, and that people did not correctly predict this would happen.

Something like: distillation techniques for LLMs would be used by other countries and then proliferate, and the rationality community as a whole did not take this into account?

I'll agree with you that Bayes points should be lost on predicting the theory of mind of nation states; it is quite clear that they would be interested in this from a macr...

I think I disagree with you at the object level on the China vector of existential risk; I think it is a self-fulfilling prophecy and that it does not engage with the current AI situation in China.

If you were correct about China at the object level, I would agree with the post, but I just think you're plain wrong.

Here's a link to a post that makes some points about the general epistemic situation around China: https://www.lesswrong.com/posts/uRyKkyYstxZkCNcoP/careless-talk-on-us-china-ai-competition-and-criticism-of

I love this approach, I think it very much relates to how systems need good ground truth signals and how verification mechanisms are part of the core thing we need for good AI systems.

I would be very interested in setting more of this up as infrastructure for better coding libraries and similar tools for the AI Safety research ecosystem. There's no reason why this shouldn't be a larger effort for alignment research automation. I think it relates to some of the formal verification work, but it sits to some extent one abstraction level above it, so if we want efficient software systems that can be integrated with formal verification, I see this as a great direction to take things in.

Could you please make an argument for goal stability over process stability?

If I reflectively agree that process A (QACI or CEV, for example) is good, then I agree to changing my values from B to C if process A happens? So it is more about the process than the underlying goals. Why do we treat goals as the first-class citizen here?

There's something in well-defined processes that makes them applicable to themselves and reflectively stable?

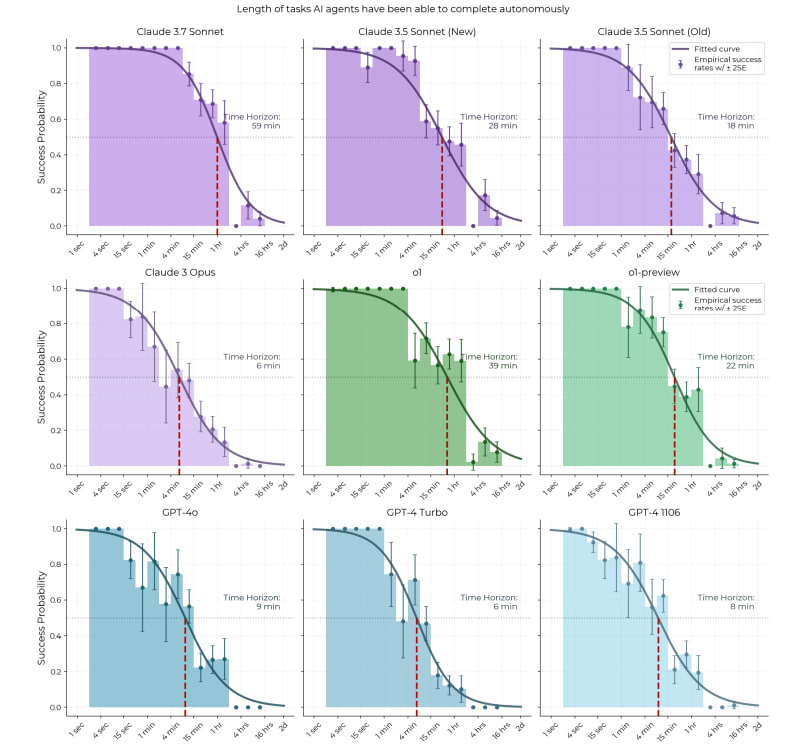

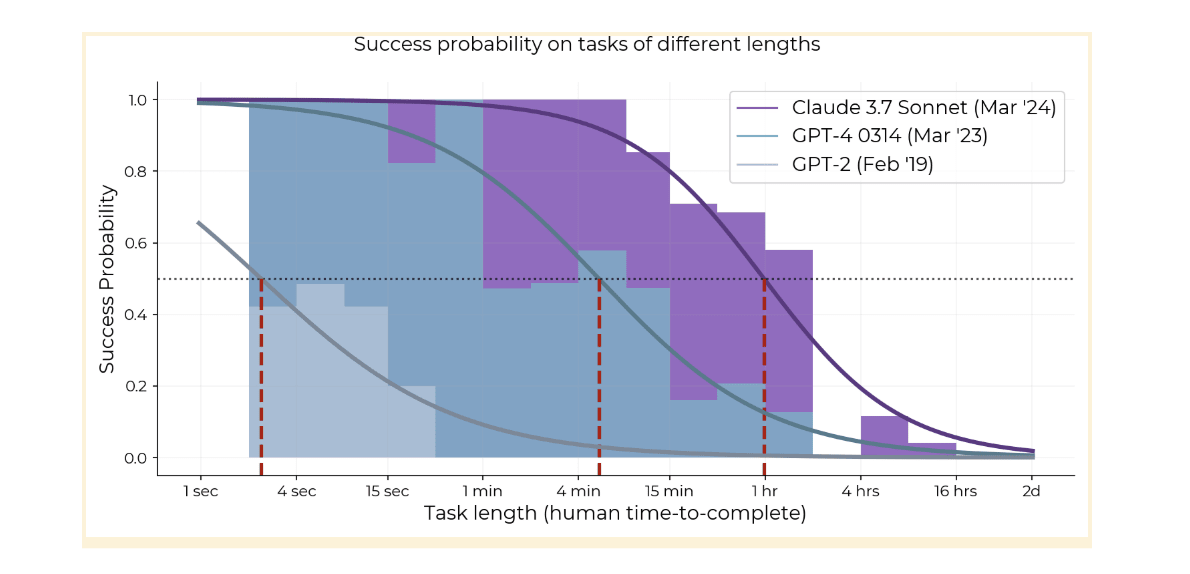

Looking at the METR paper's analysis, there might be an important consideration about how they're extrapolating capabilities to longer time horizons. The data shows a steep exponential decay in model success rates as task duration increases. I might be wrong here but it seems weird to be taking an arbitrary cutoff of 50% and doing a linear extrapolation from that?

The logistic curves used to estimate time horizons assume a consistent relationship between task duration and difficulty across all time scales. However, it's plausible that tasks requ...

All models since at least GPT-3 have had this steep exponential decay [1], and the whole logistic curve has kept shifting to the right. The 80% success rate horizon has basically the same 7-month doubling time as the 50% horizon so it's not just an artifact of picking 50% as a threshold.

Claude 3.7 isn't doing better on >2 hour tasks than o1, so it might be that the curve is compressing, but this might also just be noise or imperfect elicitation.

Regarding the idea that autoregressive models would plateau at hours or days, it's plausible, and one point of...
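A small Python sketch of the horizon arithmetic in this exchange, assuming success probability is a logistic function of log2(task duration) with intercept `a` and slope `b`. This is a simplification of the fit being discussed, and every parameter value here is hypothetical. It shows why, if the slope stays fixed while the curve shifts right, the 80% horizon is a constant fraction of the 50% horizon, so both double at the same rate.

```python
import math


def success_prob(minutes: float, a: float, b: float) -> float:
    # Assumed model: logistic in log2(task duration), so success falls off
    # as tasks get longer (b > 0).
    return 1.0 / (1.0 + math.exp(-(a - b * math.log2(minutes))))


def horizon(q: float, a: float, b: float) -> float:
    # Task duration (minutes) at which predicted success equals q,
    # obtained by inverting success_prob.
    logit_q = math.log(q / (1.0 - q))
    return 2.0 ** ((a - logit_q) / b)


b = 0.6  # hypothetical slope, held fixed across "model generations"
for a in (3.0, 3.6, 4.2):  # hypothetical intercepts: the curve shifts right
    h50, h80 = horizon(0.5, a, b), horizon(0.8, a, b)
    print(f"a={a}: 50% horizon {h50:6.1f} min, "
          f"80% horizon {h80:6.1f} min, ratio {h80 / h50:.3f}")
```

With `b` held fixed, each step of 0.6 in `a` doubles both horizons and the printed ratio stays the same. If `b` changed between model generations (the curve compressing), that ratio would drift instead.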

There's a lot of good thought in here and I don't think I was able to understand all of it.

I will focus on a specific idea that I would love to hear your thoughts on: looking at meta categories. You say something like: the problem itself will remain even if you go up a meta level. My question is how certain you are that this is true. From a category theory lens, your current base claim at the beginning looks something like:

And so this is more than this, it is also about a more general meta-level thing where even if you w...

I saw the comment and thought I would drop some material that is the beginning of approaches toward a more mathematical theory of iterated agency.

A general underlying idea is to decompose a system into its maximally predictive sub-agents, sort of like an arg-max of Daniel Dennett's intentional stance.

There are various underlying reasons to believe that there are algorithms for discovering the most important nested sub-parts of systems using things like Active Inference, especially where it has been applied in computational biology. Here's some ...

TL;DR:

While cultural intelligence has indeed evolved rapidly, the genetic architecture supporting it operates through complex stochastic development and co-evolutionary dynamics that simple statistical models miss. The most promising genetic enhancements likely target meta-parameters governing learning capabilities rather than direct IQ-associated variants.

Longer:

You make a good point about human intelligence potentially being out of evolutionary equilibrium. The rapid advancement of human capabilities certainly suggests beneficial genetic variants might s...

The book Innate actually goes into detail about a bunch of IQ studies and relates them to neuroscience, which is why I really liked reading it!

and it seems most of this variation is genetic

This seems to me like the crux here. In the book Innate, Kevin Mitchell states the belief that around 60% of it is genetic, 20% is developmental randomness (since brain development is essentially a stochastic process) and 20% is nurture, based on twin studies.

I do find this difficult to think about, though, since intelligence can be seen as the speed of the larger h...

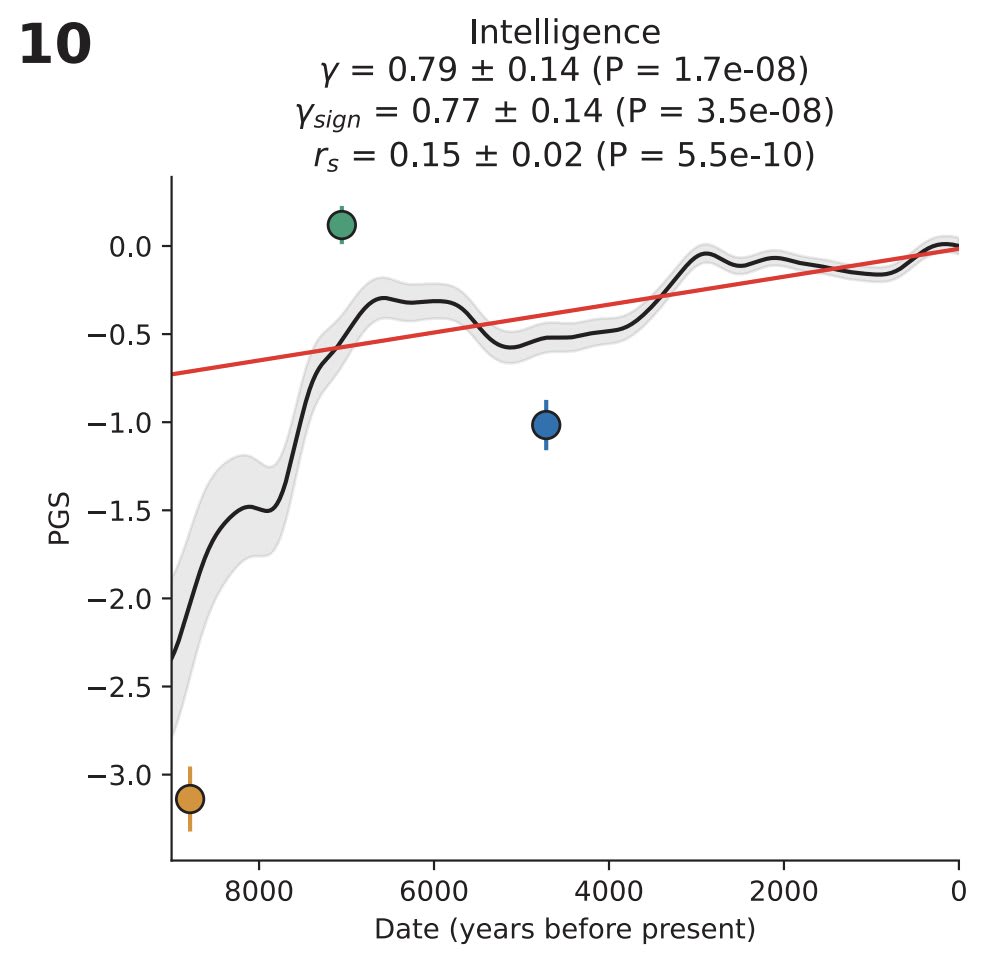

I felt too stupid when it comes to biology to engage with the original superbabies post, but this speaks my language more (data science), so I would also like to bring up a point I had about the original post that I'm still confused about, related to what you've mentioned here.

The idea I've heard about this is that intelligence has been under strong selective pressure for millions of years, which should a priori make us believe that IQ is a significant challenge for genetic enhancement. As Kevin Mitchell explains in "Innate," most remaining genetic varia...

One data point that's highly relevant to this conversation is that, at least in Europe, intelligence has undergone quite significant selection in just the last 9000 years. As measured in a modern environment, average IQ went from ~70 to ~100 over that time period (the Y axis here is standard deviations on a polygenic score for IQ)

The above graph is from David Reich's paper

I don't have time to read the book "Innate", so please let me know if there are compelling arguments I am missing, but based on what I know the "IQ-increasing variants have been exhausted...

In the modern era, the fertility-IQ correlation seems unclear; in some contexts, higher fertility seems to be linked with lower IQ, in other contexts with higher IQ. I have no idea of what it was like in the hunter-gatherer era, but it doesn't feel like an obviously impossible notion that very high IQs might have had a negative effect on fertility in that time as well.

E.g. because the geniuses tended to get bored with repeatedly doing routine tasks and there wasn't enough specialization to offload that to others, thus leading to the geniuses having lower s...

IIUC human intelligence is not in evolutionary equilibrium; it's been increasing pretty rapidly (by the standards of biological evolution) over the course of humanity's development, right up to "recent" evolutionary history. So difficulty-of-improving-on-a-system-already-optimized-by-evolution isn't that big of a barrier here, and we should expect to see plenty of beneficial variants which have not yet reached fixation just by virtue of evolution not having had enough time yet.

(Of course separate from that, there are also the usual loopholes to evolutionar...

Do you believe it affects most of it or just individual instances? The example you're pointing at there isn't load-bearing, and other people have written similar things about cultural evolution with more nuance, such as Cecilia Heyes in Cognitive Gadgets.

Like I'm not sure how much to throw out based on that?

Just wanted to drop these two books here if you're interested in the cultural evolution side more:

https://www.goodreads.com/book/show/17707599-moral-tribes

https://www.goodreads.com/book/show/25761655-the-secret-of-our-success

A random thought I just had, coming from more mainstream theoretical CS/ML or Geometric Deep Learning, is about inductive biases from the perspective of different geodesics.

They talk about using structural invariants to design the inductive biases of different ML models, so if we're talking about general abstraction learning, my question is whether it even makes sense without taking your underlying inductive biases into account?

Like maybe the model of Natural Abstractions always has to filter through one inductive bias or another and there are ...

I really like the latest posts you've dropped on meditation, they help me with some of my own reflections.

Is there an effect here? Maybe for some people. For me, at least, the positive effect to working memory isn't super cumulative nor important. Does a little meditation before work help me concentrate? Sure, but so does weightlifting, taking a shower, and going for a walk.

I want to point out a situation where this really showed up for me. I get the point that it is stupid compared to what lies deeper in meditation, but it is still instrumentally useful...

I like to think of learning and all of these things as smaller, self-contained knowledge trees: building knowledge trees that are cached, almost like creating zip files, and systems where I store a bunch of zip files, similar to what Eliezer talks about in The Sequences.

Like when you mention the thing about Nielsen on linear algebra, it opens up the entire thought tree there. I might just get the association to something like PCA, and then I think, huh, how do you optimise this, and then it goes to QR algorithms and things like a Householder matrix ...

This is the quickest link I found on this, but do the 2nd exercise in the first category for 3 sets of 8-12 reps with weighted cables so that you can progressively overload it.

Essentially, if you're doing bench press, shoulder press or anything involving the shoulders or chest, the most likely way to injure yourself is by not doing it in a stable way. In short, the rotator cuffs are there to stabilize these sorts of movements and deal with torque. If you don't have strong rotator cuffs, you'll get shoulder injuries a lot more often, which is one of the main ways you can fuck up your training.

So, for everyone who's concerned about the squats and deadlift thing with or without a belt: you can look it up, but the basic argument is that lower back injuries can be really hard to get rid of, and it is often difficult to hold your core with the right technique without one.

If you ever go over 80kg, you can seriously and permanently mess up your lower back by lifting wrong. It's just one of the obvious things to avoid, and a belt really helps you hold your core properly.

Here's the best link I can find: https://pmc.ncbi.nlm.nih.gov/articles/PMC9282110/...

I can't help but gym bro, since it is LW.

(I've been lifting for 5 years now and can bench press more than 100kg, for example, so you know I've done it.)

The places to watch out for injuries with free weights are your wrists, rotator cuffs and lower back.

- If you're doing squats or deadlifts, use a belt or you're stupid.

- If you start feeling your wrists when doing bench press, shoulder press or a similar compound movement, get wrist protection; it isn't that expensive and it helps.

- Learn about the bone structure of the wrist and ensure that

Certain exercises, such as skull crushers, are more injury-prone if you do them with dumbbells because you have more degrees of freedom.

I believe there's also a stronger, more interrelated mind-muscle connection when you do things with a barbell? (The movement gets more coupled when you lift one interconnected source of weight rather than two independent ones?)

For example, I activate my abs more with a barbell shoulder press than with dumbbells, so it usually activates more of your body (same thing for bench press).

Based advice.

I just wanted to add that 60-75 minutes is optimal for growth hormone release, which determines the recovery period as well as helping a bit with gaining extra muscle mass.

The final thing is to add creatine to your diet, as it gives you a 30% increase in muscle mass gain as well as some other nice benefits.

Also, the solution is obviously to Friendship-is-Optimal the system that humans and AI coordinate in. Create an opt-in, secure system that grants more resources if you cooperate, and you will be able to outperform those silly defectors.

When it comes to solutions, I think the humans-versus-AI axis doesn't make sense for the systems we're in; it is rather about desirable system properties such as participation, exploration and caring for the participants in the system.

If we can foster a democratic, caring, open-ended decision making process where humans and AI can converge towards optimal solutions then I think our work is done.

Human disempowerment is okay as long as it is replaced by a better and smarter system so whilst I think the solutions are pointing in the right dir...

First and foremost, I totally agree with your point about this sort of thing being instrumentally useful, but I'm still having trouble seeing how to apply it to my real life. Here are two questions that arise for me:

I'm curious about two aspects of deliberate practice that seem interconnected:

- On OODA loops: I currently maintain yearly, quarterly, weekly, and daily review cycles where I plan and reflect on progress. However, I wonder if there are specific micro-skills you're pointing to beyond this - perhaps noticing subtle emotional tells when encountering uncomfo

I guess the entire "we need to build an AI internally" US narrative will also increase the likelihood of China invading Taiwan for its chips?

Good that we all have the situational awareness to not summon any bad memetics into the mindspace of people :D

This suggests something profound about metaphysics itself: Our basic intuitions about what's fundamental to reality (whether materialist OR idealist) might be more about human neural architecture than about ultimate reality. It's like a TV malfunctioning in a way that produces the message "TV isn't real, only signals are real!"

In meditation, this is the fundamental insight, the so called non-dual view. Neither are you the fundamental non-self nor are you the specific self that you yourself believe in, you're neither, they're all empty views, yet that view ...

Thank you for being patient with me, I tend to live in my own head a bit with these things :/ Let me know if this explanation is clearer using the examples you gave:

Let me build on the discussion about optimization slack and sharp left turns by exploring a concrete example that illustrates the key dynamics at play.

Think about the difference between TD-learning and Monte Carlo methods in reinforcement learning. In TD-learning, we update our value estimates frequently based on small temporal differences between successive states. The "slack" - how far we let...
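For readers less familiar with the two methods, here is a minimal tabular Python sketch of the standard TD(0) and every-visit Monte Carlo value updates on a single episode. The three-state chain, rewards and step size are made up, and the link to "slack" is the analogy from this comment, not something the code measures.

```python
from typing import Dict, List, Tuple

# One episode as (state, reward received after leaving that state) pairs;
# the episode terminates after the last pair.
Episode = List[Tuple[str, float]]


def monte_carlo_update(V: Dict[str, float], episode: Episode,
                       alpha: float, gamma: float) -> Dict[str, float]:
    # Every-visit Monte Carlo: wait for the episode to end, then move each
    # V[s] toward the full discounted return G observed from s onward.
    G = 0.0
    for state, reward in reversed(episode):
        G = reward + gamma * G
        V[state] += alpha * (G - V[state])
    return V


def td0_update(V: Dict[str, float], episode: Episode,
               alpha: float, gamma: float) -> Dict[str, float]:
    # TD(0): update after every step, bootstrapping from the current
    # estimate of the next state's value (0 at the terminal step).
    for i, (state, reward) in enumerate(episode):
        next_value = V[episode[i + 1][0]] if i + 1 < len(episode) else 0.0
        td_error = reward + gamma * next_value - V[state]
        V[state] += alpha * td_error
    return V


episode = [("A", 0.0), ("B", 0.0), ("C", 1.0)]  # made-up chain A -> B -> C
start = {"A": 0.0, "B": 0.0, "C": 0.0}
print("MC: ", monte_carlo_update(dict(start), episode, alpha=0.5, gamma=1.0))
print("TD0:", td0_update(dict(start), episode, alpha=0.5, gamma=1.0))
```

After one episode, Monte Carlo has moved every state toward the full return, while TD(0) has only updated the state next to the reward and propagates it backwards over later episodes in small steps.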

Epistemic status: Curious.

TL;DR:

The Sharp Left Turn, and specifically the ability of alignment to generalize, is highly dependent on how much slack you allow between optimisation epochs. By minimizing the slack you allow in the "utility boundary" (the part of the local landscape that counts as part of you when describing the system's utility function), you can minimize the expected divergence of the optimization process and therefore minimize the alignment-capability gap?

A bit longer from the better half of me (Claude):

Your analysi...

This is really well put!

This post made me reflect on how my working style has changed. Bounded cognition and the best-case story are the main things I've changed in my working style over the last two years, and they give me a lot of relaxation but also much more creative results. I like how you mention meditation in the essay as well; it is like going into a sit: you set an intention, stick to it during the sit without changing it, and then reflect afterwards. You've set the intention, so stick to it and relax.

I'm sharing this with the people I'm working with, thanks!