All of Jsevillamol's Comments + Replies

The ability to pay liability is important to factor in and this illustrates it well. For the largest prosaic catastrophes this might well be the dominant consideration.

For smaller risks, I suspect in practice mitigation, transaction and prosecution costs are what dominate the calculus of who should bear the liability, both in AI and more generally.

I've been tempted to do this sometime, but I fear the prior is performing one very important role you are not making explicit: defining the universe of possible hypotheses you consider.

In turn, defining that universe of probabilities defines what Bayesian updates look like. Here is a problem that arises when you ignore this: https://www.lesswrong.com/posts/R28ppqby8zftndDAM/a-bayesian-aggregation-paradox
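
A minimal sketch of why the choice of hypothesis space matters, using made-up numbers. The likelihood of a merged hypothesis "A or B" is a prior-weighted average of the likelihoods of A and B, so the update reported for the merged hypothesis depends on how the prior is split inside it. The hypotheses, likelihoods and priors below are illustrative assumptions and are not taken from the linked post.

```python
def merged_likelihood(likelihoods, priors):
    """Likelihood of the evidence under the union of several hypotheses.

    P(E | A or B) = (P(E|A) P(A) + P(E|B) P(B)) / (P(A) + P(B))
    """
    total_prior = sum(priors)
    return sum(l * p for l, p in zip(likelihoods, priors)) / total_prior


# Hypothetical likelihoods of the same evidence under A, B and C.
lik_a, lik_b, lik_c = 0.9, 0.1, 0.3

# Two agents agree on every likelihood but split their prior mass
# differently between A and B.
ratio_mostly_a = merged_likelihood([lik_a, lik_b], [0.8, 0.2]) / lik_c
ratio_mostly_b = merged_likelihood([lik_a, lik_b], [0.2, 0.8]) / lik_c

print(f"Bayes factor (A or B) vs C, prior mostly on A: {ratio_mostly_a:.2f}")
print(f"Bayes factor (A or B) vs C, prior mostly on B: {ratio_mostly_b:.2f}")
```

With these numbers the same evidence favours "A or B" over C for the first agent and disfavours it for the second, even though they agree on all the fine-grained likelihoods.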

shrug

I think this is true to an extent, but a more systematic analysis needs to back this up.

For instance, I recall quantization techniques working much better after a certain scale (though I can't seem to find the reference...). It also seems important to validate that techniques to increase performance apply at large scales. Finally, note that the frontier of scale is growing very fast, so even if these discoveries were made with relatively modest compute compared to the frontier, this is still a tremendous amount of compute!

even a pause which completely stops all new training runs beyond current size indefinitely would only ~double timelines at best, and probably less

I'd emphasize that we currently don't have a very clear sense of how algorithmic improvement happens, and it is likely mediated to some extent by large experiments, so I think a pause is likely to slow timelines more than this implies.

I think Tetlock and co. might have already done some related work?

Question decomposition is part of the superforecasting commandments, though I can't recall off the top of my head if they were RCT'd individually or just as a whole.

ETA: This is the relevant paper (h/t Misha Yagudin). It was not about the 10 commandments. Apparently those haven't been RCT'd at all?

I cowrote a detailed response here

https://www.cser.ac.uk/news/response-superintelligence-contained/

Essentially, this type of reasoning proves too much, since it implies we cannot show any properties whatsoever of any program, which is clearly false.

Here is some data via Matthew Barnett and Jess Riedl

Number of cumulative miles driven by Cruise's autonomous cars is growing as an exponential at roughly 1 OOM per year.

That is, to a very basic approximation, correct.

Davidson's takeoff model illustrates this point, where a "software singularity" happens for some parameter settings due to software not being restrained to the same degree by capital inputs.

I would point out, however, that our current understanding of how software progress happens is somewhat poor. Experimentation is definitely a big component of software progress, and it is often understated on LW.

More research on this soon!

algorithmic progress is currently outpacing compute growth by quite a bit

This is not right, at least in computer vision. They seem to be the same order of magnitude.

Physical compute has grown at 0.6 OOM/year and physical compute requirements have decreased at 0.1 to 1.0 OOM/year, see a summary here or an in-depth investigation here

Another relevant quote

Algorithmic progress explains roughly 45% of performance improvements in image classification, and most of this occurs through improving compute-efficiency.
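
To make the OOM/year figures above easier to compare, here is a small sketch converting them into doubling (or halving) times. The rates are the ones quoted in the comment; the helper name `doubling_time_months` is just an illustrative choice.

```python
import math


def doubling_time_months(oom_per_year):
    """Months needed to double, given growth in orders of magnitude per year."""
    return 12 * math.log10(2) / oom_per_year


compute_growth = 0.6            # OOM/year, growth in physical compute
algo_low, algo_high = 0.1, 1.0  # OOM/year, decrease in compute requirements

print(f"Physical compute doubles every {doubling_time_months(compute_growth):.1f} months")
print(
    "Compute requirements halve every "
    f"{doubling_time_months(algo_high):.1f} to {doubling_time_months(algo_low):.1f} months"
)
```

The compute doubling time sits inside the range implied by the algorithmic estimates, which is the sense in which the two are the same order of magnitude.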

Thanks Neel!

The difference between tf16 and FP32 comes to a x15 factor IIRC. Though ML developers also seem to prioritise characteristics other than cost-effectiveness when choosing GPUs, like raw performance and interconnect, so you can't just multiply the top price-performance we showcase by this factor and expect it to match the cost-performance of the largest ML runs today.

More soon-ish.

Note that Richard is not treating Knightian uncertainty as special and unquantifiable, but instead is giving examples of how to treat it like any other uncertainty, which he is explicitly quantifying and incorporating in his predictions.

I'd prefer calling Richard's notion "model error" to separate the two, but I'm also okay appropriating the term as Richard did to point to something coherent.

To my knowledge, we currently don’t have a way of translating statements about “loss” into statements about “real-world capabilities”.

This site claims that the string SolidGoldMagikarp was the username of a moderator involved somehow with Twitch Plays Pokémon

I agree with the sentiment that indiscriminate regulation is unlikely to have good effects.

I think the step that is missing is analysing the specific policies No-AI Art activists are likely to advocate for, and whether it is a good idea to support them.

My current sense is that data helpful for alignment is unlikely to be public right now, and so harder copyright would not impede alignment efforts. The kind of data that I could see being useful are things like scores and direct feedback. Maybe at most things like Amazon reviews could end up being useful for to...

Great work!

Stuart Armstrong gave one more example of a heuristic argument based on the presumption of independence here.

There are a huge number of examples like that floating around in the literature, we link to some of them in the writeup. I think Terence Tao's blog is the easiest place to get an overview of these arguments, see this post in particular but he discusses this kind of reasoning often.

I think it's easy to give probabilistic heuristic arguments for about 80 of the ~100 conjectures in the wikipedia category unsolved problems in number theory.

About 30 of those (including the Goldbach conjecture) follow from the Cramer random model of the primes. Another 9 a...

Here are my quick takes from skimming the post.

In short, the arguments I think are best are A1, B4, C3, C4, C5, C8, C9 and D. I don't find any of them devastating.

A1. Different calls to ‘goal-directedness’ don’t necessarily mean the same concept

I am not sure I parse this one. I am reading it as "AI systems might be more like imitators than optimizers" from the example, which I find moderately persuasive

A2. Ambiguously strong forces for goal-directedness need to meet an ambiguously high bar to cause a risk

I am not sure I understand this one either. I am readi...

As is often the case, I just found out that Jaynes was already discussing a similar issue to the paradox here in his seminal book.

I also found this thread of math topics on AI safety helpful.

Thank you for bringing this up!

I think you might be right, since the deck is quite undiverse and according to the rest diversity is important. That being said, I could not find the mistake in the code at a glance :/

Do you have any opinions on [1, 1, 0, 1, 0, 1, 2, 1, 1, 3, 0, 1]? This would be the worst deck amongst the decks that played against a deck similar to the rival's in my code, according to my code.

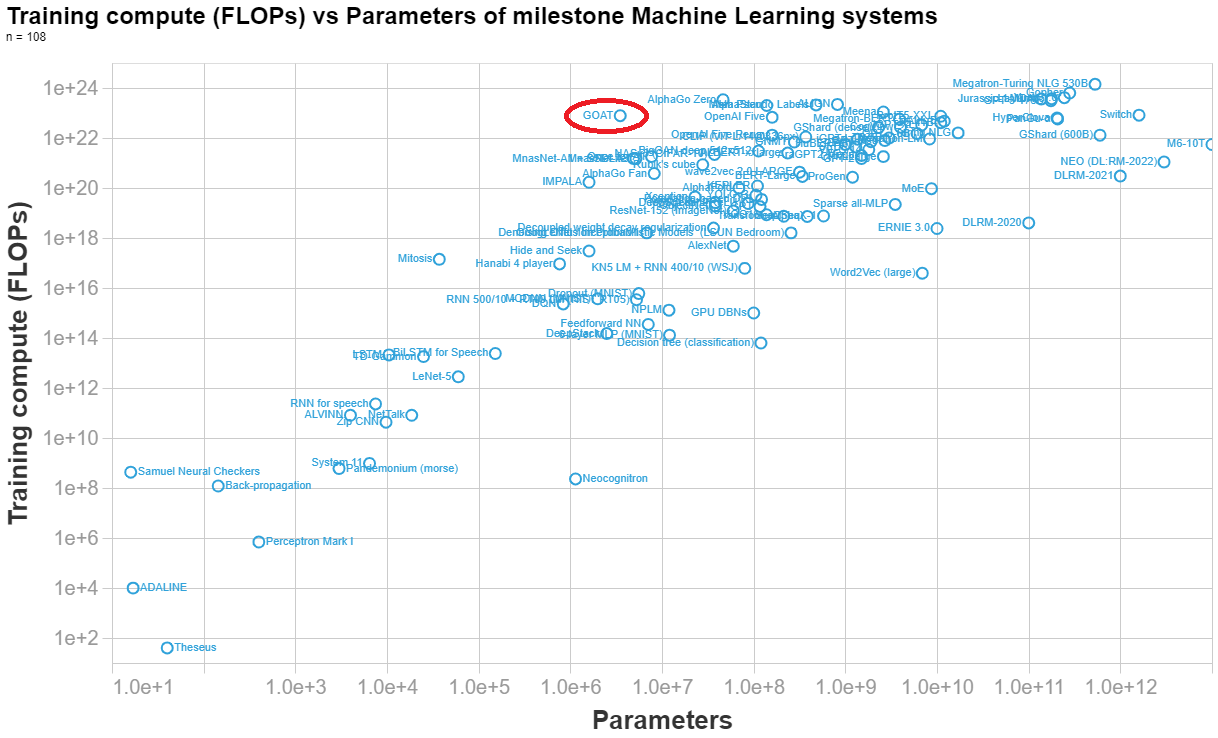

Marius Hobbhahn has estimated the number of parameters here. His final estimate is 3.5e6 parameters.

Anson Ho has estimated the training compute (his reasoning at the end of this answer). His final estimate is 7.8e22 FLOPs.

Below I made a visualization of the parameters vs training compute of n=108 important ML systems, so you can see how DeepMind's system (labelled GOAT in the graph) compares to other systems.

...[Final calculation]

(8 TPUs)(4.20e14 FLOP/s)(0.1 utilisation rate)(32 agents)(7.3e6 s/agent) = 7.8e22 FLOPs
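
The product above can be checked with a few lines of Python. This is only a sketch that multiplies the factors exactly as written in the final calculation; the variable names are mine, and it does not re-derive any of the inputs.

```python
# Factors taken directly from the final calculation above.
n_tpus = 8
peak_flop_per_s = 4.20e14   # peak FLOP/s per TPU
utilisation = 0.1           # assumed utilisation rate
n_agents = 32
seconds_per_agent = 7.3e6   # training time per agent, in seconds

training_flop = (
    n_tpus * peak_flop_per_s * utilisation * n_agents * seconds_per_agent
)
print(f"Estimated training compute: {training_flop:.1e} FLOP")
```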

NOTES BELOW[Ha

Here is my very bad approach after spending ~one hour playing around with the data

- Filter decks that fought against a deck similar to the rival's, using a simple measure of distance (sum of absolute differences between the deck components)

- Compute a 'score' of the decks. The score is defined as the sum of 1/deck_distance(deck) * (1 or -1 depending on whether the deck won or lost against the challenger)

- Report the deck with the maximum score

So my submission would be: [0,1,0,1,0,0,9,0,0,1,0,0]
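
The three steps above could be sketched roughly as follows. This is not the original code: the `(deck, opponent, deck_won)` data format, the `max_distance` cutoff, and the `eps` term that avoids dividing by zero on exact matches are all assumptions added for illustration, as is the reading that the distance is measured between the opponent and the rival's deck.

```python
from collections import defaultdict


def deck_distance(a, b):
    """Sum of absolute differences between deck components."""
    return sum(abs(x - y) for x, y in zip(a, b))


def best_deck(games, rival, max_distance=4, eps=1.0):
    """Return the deck with the highest distance-weighted win/loss score.

    games: iterable of (deck, opponent, deck_won) tuples (hypothetical format).
    """
    scores = defaultdict(float)
    for deck, opponent, deck_won in games:
        d = deck_distance(opponent, rival)
        if d > max_distance:
            continue  # opponent is not similar enough to the rival's deck
        # eps avoids division by zero when the opponent equals the rival
        scores[tuple(deck)] += (1 if deck_won else -1) / (d + eps)
    return max(scores, key=scores.get) if scores else None


# Toy data with 3-component decks, made up for illustration.
rival = [1, 2, 0]
games = [
    ([3, 0, 0], [1, 2, 0], True),   # opponent distance 0, counted as a win
    ([0, 3, 0], [1, 1, 1], True),   # opponent distance 2, counted as a win
    ([0, 3, 0], [1, 2, 0], False),  # opponent distance 0, counted as a loss
    ([0, 0, 3], [0, 0, 3], True),   # opponent distance 6, filtered out
]
print(best_deck(games, rival))
```

Weighting by inverse distance means games against near-copies of the rival's deck count the most, which is the intent of the score described above.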

Thanks for the comment!

I am personally sympathetic to the view that AlphaGo Master and AlphaGo Zero are off-trend.

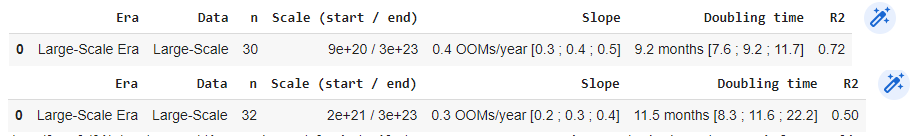

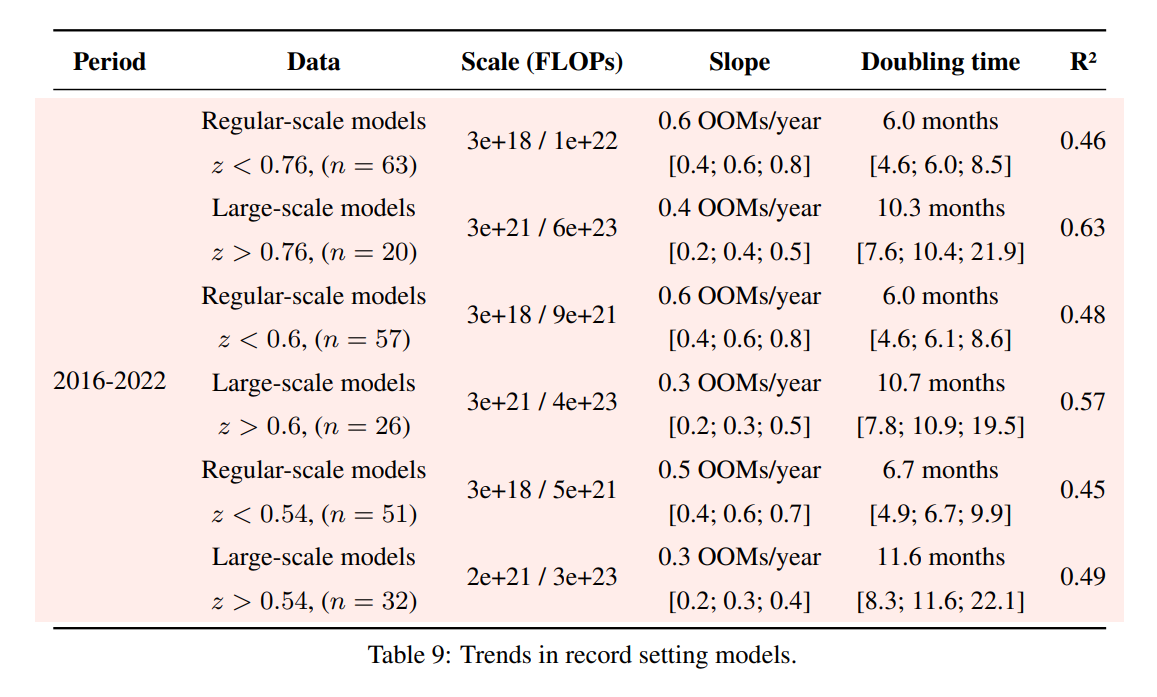

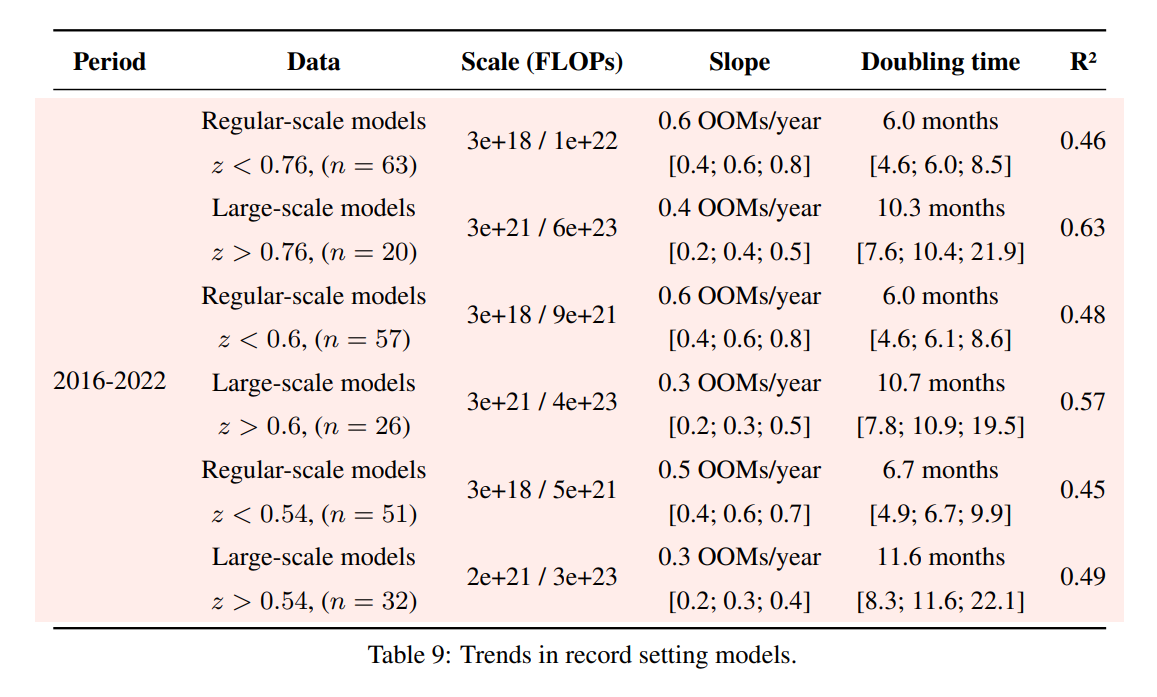

In the regression with all models, their inclusion does not change the median slope but drastically increases noise, as you can see for yourself in the visualization by selecting the option 'big_alphago_action = remove' (see table below for a comparison of regressing the large model trend without vs with the big AlphaGo models).

In appendix B we study the effects of removing AlphaGo Zero and AlphaGo Master when studying record-setting models. The upp...

Following up on this: we have updated appendix F of our paper with an analysis of different choices of the threshold that separates large-scale and regular-scale systems. Results are similar independently of the threshold choice.

Thanks for engaging!

To use this theorem, you need both your data / evidence and your parameter.

Parameters are abstractions we use to simplify modelling. What we actually care about is the probability of unknown events given past observations.

You start out discussing what appears to be a combination of two forecasts

To clarify: this is not what I wanted to discuss. The expert is reporting how you should update your priors given the evidence, and remaining agnostic on what the priors should be.

...A likelihood is

Those I know who train large models seem to be very confident we will get 100 Trillion parameter models before the end of the decade, but do not seem to think it will happen, say, in the next 2 years.

FWIW if the current trend continues we will first see 1e14 parameter models 2 to 4 years from now.

There's also a lot of research that didn't make your analysis, including work explicitly geared towards smaller models. What exclusion criteria did you use? I feel like if I was to perform the same analysis with a slightly different sample of papers I could come to wildly divergent conclusions.

It is not feasible to do an exhaustive analysis of all milestone models. We necessarily are missing some important ones, either because we are not aware of them, because they did not provide enough information to deduce the training compute or because we haven't gott...

Great questions! I think it is reasonable to be suspicious of the large-scale distinction.

I do stand by it - I think the companies discontinuously increased their training budgets around 2016 for some flagship models.[1] If you mix these models with the regular trend, you might believe that the trend was doubling very fast up until 2017 and then slowed down. It is not an entirely unreasonable interpretation, but it does a worse job of explaining the discontinuous jumps around 2016. Appendix E discusses this in depth.

The way we selected the large-scale models is half ...

I'm talking from a personal perspective here as Epoch director.

- I personally take AI risks seriously, and I think they are worth investigating and preparing for.

- I co-started Epoch AI to get evidence and clarity on AI and its risks and this is still a large motivation for me.

- I have drifted towards a more skeptical position on risk in the last two years. This is due to a combination of seeing the societal reaction to AI, my participating in several risk evaluation processes, and AI unfolding more gradually than I expected 10 years ago.

- Currently I am more worr
