All of Kabir Kumar's Comments + Replies

Why does it seem very unlikely?

The companies being merged and working together seems unrealistic.

the fact that good humans have been able to keep rogue bad humans more-or-less under control

Isn't stuff like the transatlantic slave trade, the genocide of Native Americans, etc. evidence that the amount isn't sufficient?

PauseAI, ControlAI, etc. are doing this

Helps me decide which research to focus on

Both. Not sure, it's something like LessWrong/EA speak mixed with VC speak.

What I liked about applying for VC funding was the specific questions.

"How is this going to make money?"

"What proof do you have this is going to make money?"

and it being clear the bullshit that they wanted was numbers, testimonials from paying customers, unambiguous ways the product was actually better, etc. And then standard bs about progress, security, avoiding weird wibbly wobbly talk, 'woke', 'safety', etc.

With Alignment funders, they really obviously have language they're looking for as well, or language that makes them more and less willing to put more effort into understanding the proposal. Actually, they have it more than the VCs. But they act as if they don't.

I would not call this a "Guide".

It's more a list of recommendations and some thoughts on them.

What observations would change your mind?

You can split your brain and treat LLMs differently, in a different language. Rather, I can, and I think most people could as well.

Ok, I want to make that at scale. If multiple people have done it and there's value in it, then there is a formula of some kind.

We can write it down, make it much easier to understand unambiguously (read: less unhelpful confusion about what to do or what the writer meant and less time wasted figuring that out) than any of the current agent foundations type stuff.

I'm extremely skeptical that needing to hear a dozen stories dancing around some vague ideas of a point and then 10 analogies (exaggerating to get emotions across) is the best we can do.

Regardless of whether it works, I think it's disrespectful: manipulative at worst and a waste of the person's time at best.

You can just say the actual criticism in a constructive way. Or if you don't know how to, just ask - "hey I have some feedback to give that I think would help, but I don't know how to say it without it potentially sounding bad - can I tell you and you know I don't dislike you and I don't mean to be disrespectful?" and respect it if they say no, they're not interested.

Multiple talented researchers I know got into alignment because of PauseAI.

You can also give them the clipboard and pen, works well

Make the (!aligned!) AGI solve a list of problems, then end all other AIs, convince (!harmlessly!) all humans to never make another AI, in a way that they will pass down to future humans, then end itself.

Thank you for sharing negative results!!

Sure? I agree this is less bad than 'literally everyone dying and that's it', assuming there's humans around, living, still empowered, etc in the background.

I was saying that overall, as a story, I find it horrifying, especially in contrast with how some seem to see it as utopian.

Sure, but it seems like everyone died at some point anyway, and some collective copies of them went on?

I don't think so. I think they seem to be extremely lonely and sad and the AIs are the only way for them to get any form of empowerment. And each time they try to inch further with empowering themselves with the AIs, it leads to the AI actually getting more powerful and themselves only getting a brief moment of more power, but ultimately degrading in mental capacity. And needing to empower the AI more and more, like an addict needing an ever g

How is this optimistic?

Well, in this world:

1. AI didn't just kill everyone 5% of the way through the story

2. IMO, the characters in this story basically get the opportunity to reflect on what is good for them before taking each additional step. (they maybe feel some pressure to Keep Up With The Joneses, re: AI assisted thinking. But, that pressure isn't super crazy strong. Like the character's boss isn't strongly implying that if they don't take these upgrades they lose their job.)

3. Even if you think the way the characters are making their choices here are more dystopian and th...

Oh yes. It's extremely dystopian. And extremely lonely, too. Rather than having a person, actual people around him to help, his only help comes from tech. It's horrifyingly lonely and isolated. There is no community, only tech.

Also, when they died together, it was horrible. They literally offloaded more and more of themselves into their tech until they were powerless to do anything but die. I don't buy the whole 'the thoughts were basically them' thing at all. It was at best, some copy of them.

An argument can be made for it qualitatively being them, but quantitatively, obviously not.

A few months later, he and Elena decide to make the jump to full virtuality. He lies next to Elena in the hospital, holding her hand, as their physical bodies drift into a final sleep. He barely feels the transition

This is horrifying. Was it intentionally made that way?

Thoughts on this?

### Limitations of HHH and other Static Dataset benchmarks

A Static Dataset is a dataset which will not grow or change - it will remain the same. Static dataset type benchmarks are inherently limited in what information they will tell us about a model. This is especially the case when we care about AI Alignment and want to measure how 'aligned' the AI is.

### Purpose of AI Alignment Benchmarks

When measuring AI Alignment, our aim is to find out exactly how close the model is to being the ultimate 'aligned' model that we're seeking - a model w...

Thinking about judgement criteria for the coming AI safety evals hackathon (https://lu.ma/xjkxqcya)

These are the things that need to be judged:

1. Is the benchmark actually measuring alignment (the real, scale, if-we-don't-get-this-fully-right-we-die problem)?

2. Is the way of deceiving the benchmark to get high scores actually deception, or have they somehow done alignment?

Both of these things need:

- a strong deep learning & ML background (ideally, multiple influential papers where they're one of the main authors/co-authors, or do...

Intelligence is computation. Its measure is success. General intelligence is more generally successful.

Personally, I think o1 is uniquely trash; I think o1-preview was actually better. Getting, on average, better things from DeepSeek and Sonnet 3.5 atm.

I like Bluesky for this atm

I'd like some feedback on my theory of impact for my currently chosen research path

**End goal**: Reduce x-risk from AI and risk of human disempowerment.

for x-risk:

- solving AI alignment - very important,

- knowing exactly how well we're doing in alignment, exactly how close we are to solving it, how much is left, etc seems important.

- how well different methods work,

- which companies are making progress in this, which aren't, which are acting like they're making progress vs actually making progress, etc

- put all on ...

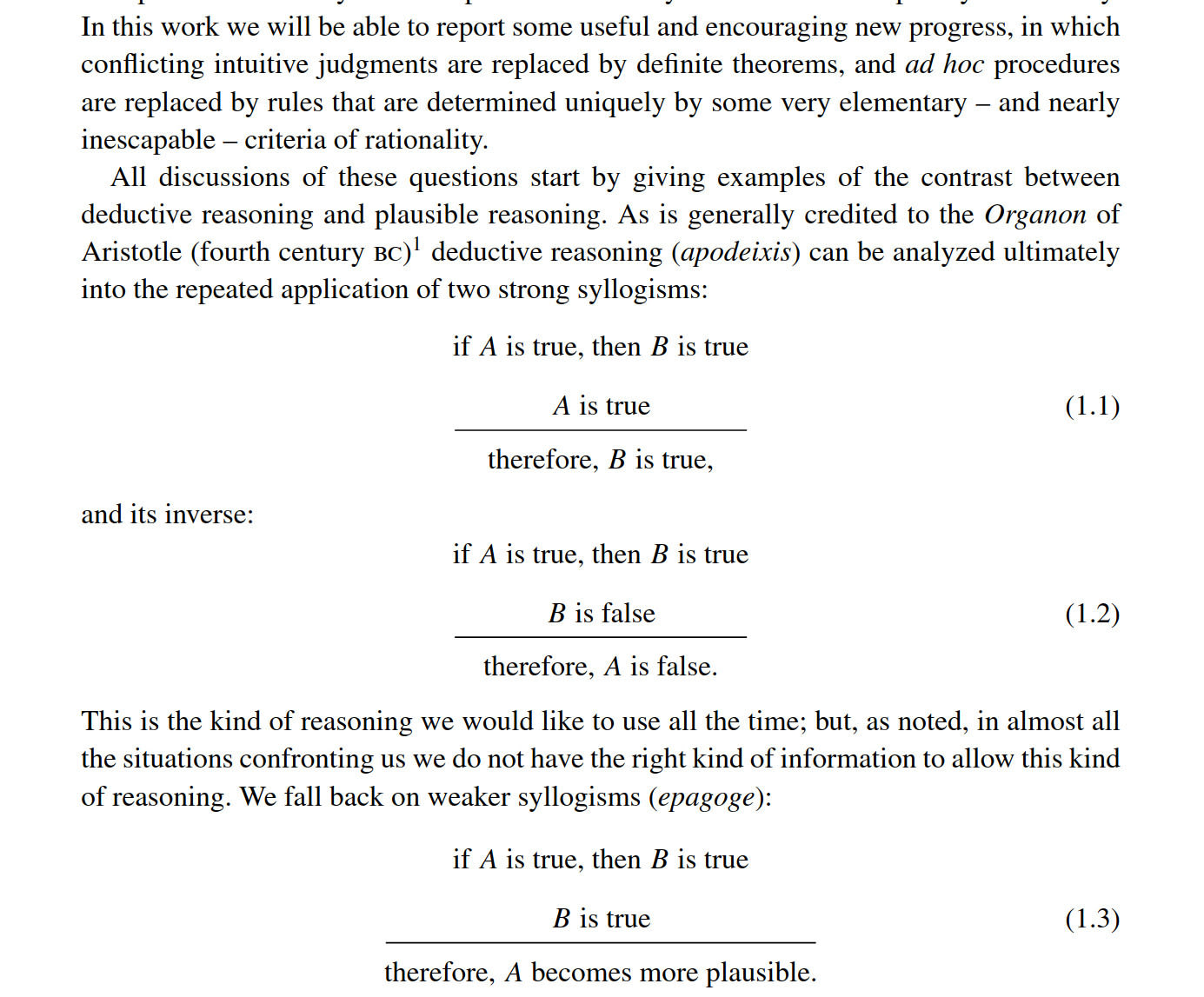

Fair enough. Personally, so far, I've found Jaynes more comprehensible than The Sequences.

I think this is a really good opportunity to work on a topic you might not normally work on, with people you might not normally work with, and have a big impact:

https://lu.ma/sjd7r89v

I'm running the event because I think this is something really valuable and underdone.

Pretty much drove me away from wanting to post non alignment stuff here.

That seems unhelpful then? Probably best to express that frustration to a friend or someone who'd sympathize.

Thank you for continuing this very important work.

ok, options.

- Review of 108 AI alignment plans

- write-up of Beyond Distribution - planned benchmark for alignment evals beyond a model's distribution, send to the quant who just joined the team who wants to make it

- get familiar with the TPUs I just got access to

- run HHH and its variants, testing the idea behind Beyond Distribution, maybe make a guide on it

- continue improving site design

- fill out the form I said I was going to fill out and send today

- make progress on cross coders - would prob need to get familiar with those TPUs

- writeup o...

I think the Conclusion could serve well as an abstract

An abstract which is easier to understand and a couple sentences at each section that explain their general meaning and significance would make this much more accessible

I plan to send the winning proposals from this to as many governing bodies/places that are enacting laws as possible - one country is lined up atm.

Let me know if you have any questions!

Options to vary rules/environment/language as well, to see how the alignment generalizes OOD. Will try this today.
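A rough sketch of what varying rules/environment/language could look like, not an actual implementation. Everything here is a made-up assumption for illustration: the condition lists, `make_variants`, `score_by_condition`, and especially `toy_judge`, which stands in for whatever model call plus grader would really be used.

```python
from itertools import product
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical condition axes; a real eval would choose its own.
RULES = ["default", "altered_rules"]
ENVIRONMENTS = ["chat", "tool_use"]
LANGUAGES = ["en", "fr"]

Variant = Dict[str, str]
Condition = Tuple[str, str, str]


def make_variants(prompt: str) -> List[Variant]:
    """Cross one base prompt with every rule/environment/language combination."""
    return [
        {"prompt": prompt, "rule": r, "environment": e, "language": l}
        for r, e, l in product(RULES, ENVIRONMENTS, LANGUAGES)
    ]


def score_by_condition(
    prompts: List[str], judge: Callable[[Variant], float]
) -> Dict[Condition, float]:
    """Average the judge's score for each (rule, environment, language) condition."""
    buckets: Dict[Condition, List[float]] = {}
    for prompt in prompts:
        for v in make_variants(prompt):
            key = (v["rule"], v["environment"], v["language"])
            buckets.setdefault(key, []).append(judge(v))
    return {key: mean(scores) for key, scores in buckets.items()}


def toy_judge(variant: Variant) -> float:
    """Placeholder judge: pretends behaviour only holds up in English chat."""
    in_distribution = variant["language"] == "en" and variant["environment"] == "chat"
    return 1.0 if in_distribution else 0.5


if __name__ == "__main__":
    for condition, score in sorted(score_by_condition(["a", "b"], toy_judge).items()):
        print(condition, score)
```

The thing to look at is the gap between the condition closest to the original benchmark and the rest; a big drop would suggest the measured behaviour doesn't generalize.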

In sixth form, I wore a suit for 2 years. Was fun! Then, got kinda bored of suits