All of Leksu's Comments + Replies

To the extent that Microsoft pressures the OpenAI board over its decision to oust Altman, won't it be easy to portray Microsoft's behavior (I think accurately) as unreasonable and against the common good?

It seems like the main narrative in the media (an accurate one, I would guess) is that the board acted out of safety concerns.

Let's say Microsoft pulls whatever investments they can, revokes access to cloud compute resources, and makes efforts to support a pro-Altman faction in OpenAI. What happens if the OpenAI board decides to stall and put ou...

Agree that would be better. I think links alone are useful too, though, in case adding more context significantly increases the workload in practice.

I wonder if there's an AI tool that could post-process it.

Joscha Bach

Is there a group currently working on such a survey? If not, seems like it wouldn't be very hard to kickstart.

Link to mentioned survey: LINK

Maybe someone from AI Impacts could share relevant thoughts (e.g., are they planning to re-run the survey soon, would they encourage or discourage another group from running a similar survey, do they think now is a good time, do they have the right resources for it now).

Thanks, I think this comment and the subsequent post will be very useful for me!

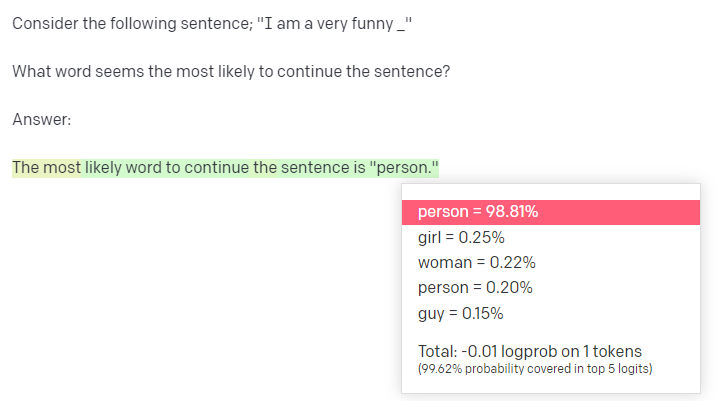

Here's what GPT-3 output for me.

It feels like this and some other parts of the post imply that the EU AI Act does not apply compute governance or model-level governance, which seems false as far as I can tell (or is this a matter of semantics?).

From a summary of the act: "All providers of GPAI models that present a systemic risk* – open or closed – must also conduct model evaluations, adversarial testing, t...