All of lennart's Comments + Replies

Agreed, this discussion is surprisingly often confusing, and people use the terms interchangeably. Unfortunately, readers often took our training compute measurement as a measure of performance rather than a quantity of executed operations. However, I don't think this is due only to the abbreviations; it also reflects a lack of understanding of what is being measured. Besides making the distinction clearer in the terms themselves, one should probably also explain it more and use words such as quantity and performance.

For my research, I've been t...

They trained it on TPUv3s; however, the robot inference was run on a GeForce RTX 3090 (see Section G).

TPUs are mostly designed for data centers and are not really usable for on-device inference.

I'd be curious to hear more thoughts on how much we could already scale it right now. Looks like that data might be a bottleneck?

Some thoughts on compute:

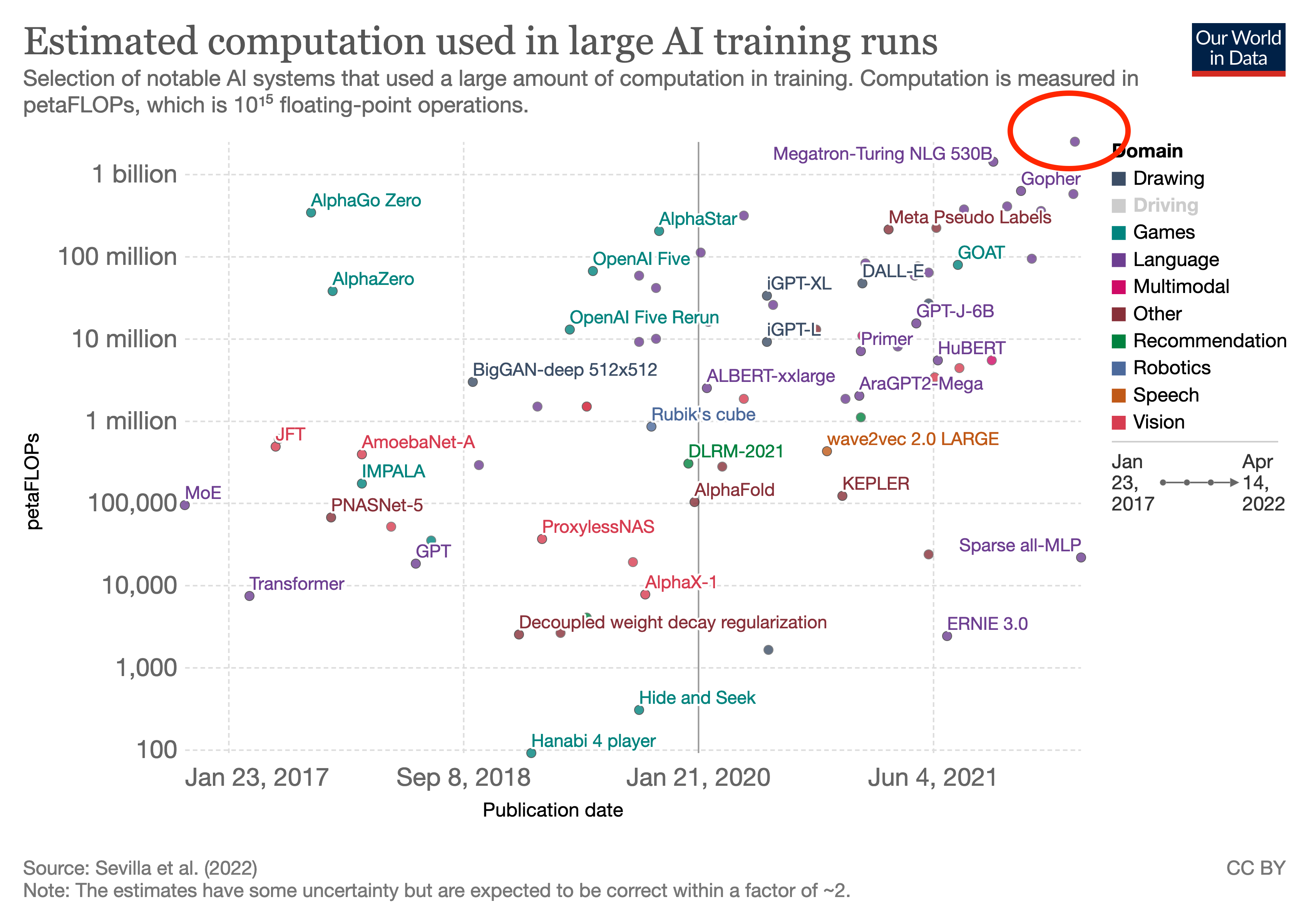

Gato estimate: 256 TPUv3 chips for 4 days at 24 hours per day = 24'576 TPUv3-hours. At on-demand costs of $2 per hour for a TPUv3, that is $49'152.
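The arithmetic behind this estimate can be reproduced in a few lines. This is only a sketch: the chip count, duration, and $2 per chip-hour come from the comment, while the function and variable names are made up for illustration.

```python
# Back-of-the-envelope check of the Gato training cost estimate.
CHIPS = 256                  # TPUv3 chips, as stated in the estimate
DAYS = 4                     # training duration in days
HOURS_PER_DAY = 24
PRICE_PER_CHIP_HOUR = 2.0    # USD, on-demand TPUv3 price assumed in the estimate


def training_cost(chips, days, price_per_chip_hour):
    """Return (chip-hours, cost in USD) for a run on rented accelerators."""
    chip_hours = chips * days * HOURS_PER_DAY
    return chip_hours, chip_hours * price_per_chip_hour


chip_hours, cost = training_cost(CHIPS, DAYS, PRICE_PER_CHIP_HOUR)
print(f"{chip_hours:,} TPUv3-hours, ${cost:,.0f}")
```

This covers the final training run only and ignores any committed-use discounts or failed runs.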

In comparison, PaLM used 8'404'992 TPUv4 hours and I estimated that it'd cost $11M+. If we assume that someone were willing to spend the same compute budget on it, we could make the model 106x bigger (assuming Chinchilla scaling laws). Also tweeted about this here.

The size o...

I recently estimated the training cost of PaLM to be around $9M to $17M.

Please note all the caveats: this only estimates the cost of the final training run, using commercial cloud computing prices (Google's TPUv3).

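As already said, a 10T parameter model using Chinchilla scaling laws would be around 1.3e28 FLOPs. That's 5200x more compute than PaLM (2.5e24 FLOPs).

Therefore, the cost scales to 5200 × $9M ≈ $47B at the low end and 5200 × $17M ≈ $88B at the high end.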

So a conservative estimate is around $47 to $88 billion.

It took Google 64 days to train PaLM using more than 6'000 TPU chips. Using the same setup (which is probably one of the most interconnected and capable ML training systems out there), it'd take 912 years.
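As a rough check of these numbers, here is a minimal sketch that scales PaLM's compute, cost, and training time by the 5200x factor. It assumes cost and wall-clock time scale linearly with compute on the same hardware at the same utilization and price, which is a strong simplification. The PaLM compute figure (2.5e24 FLOPs) is taken from the comparison table elsewhere in this thread, and all names are illustrative.

```python
# Linear extrapolation from PaLM to a 5200x larger training run.
PALM_FLOP = 2.5e24                    # PaLM training compute, in FLOPs
SCALE = 5200                          # compute multiple quoted for a 10T Chinchilla-style model
PALM_DAYS = 64                        # PaLM wall-clock training time, in days
PALM_COST_RANGE_USD = (9e6, 17e6)     # estimated cost range of PaLM's final training run

target_flop = PALM_FLOP * SCALE
cost_low, cost_high = (c * SCALE for c in PALM_COST_RANGE_USD)
years = PALM_DAYS * SCALE / 365       # assumes the same setup with no speed-up

print(f"compute: {target_flop:.1e} FLOPs")
print(f"cost:    ${cost_low / 1e9:.0f}B to ${cost_high / 1e9:.0f}B")
print(f"time:    {years:.0f} years")
```

In practice nobody would run such a job on today's setup for centuries, so the time figure mainly illustrates how far current systems are from that scale.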

Thanks for the thoughtful response, Connor.

I'm glad to hear that you will develop a policy and won't be publishing models by default.

Glad to see a new Alignment research lab in Europe. Good luck with the start and the hiring!

I'm wondering, you're saying:

> That being said, our publication model is non-disclosure-by-default, and every shared work will go through an internal review process out of concern for infohazards.

That's different from Eleuther's position[1]. Is this a change of mind or a different practice due to the different research direction? Will you continue open-sourcing your ML models?

[1] "A grassroots collective of researchers working to open source AI research."

TL;DR: For the record, EleutherAI never actually had a policy of always releasing everything to begin with and has always tried to consider each publication’s pros vs cons. But this is still a bit of a change from EleutherAI, mostly because we think it’s good to be more intentional about what should or should not be published, even if one does end up publishing many things. EleutherAI is unaffected and will continue working open source. Conjecture will not be publishing ML models by default, but may do so on a case-by-case basis.

Longer version:

Firs...

From their paper:

> We trained PaLM-540B on 6144 TPU v4 chips for 1200 hours and 3072 TPU v4 chips for 336 hours including some downtime and repeated steps.

That's 64 days.
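The 64 days, and the total of 8'404'992 TPUv4 hours, follow directly from the two phases quoted from the paper. A small sketch, assuming the two phases ran one after the other rather than in parallel (variable names are illustrative):

```python
# (chips, hours) for each training phase quoted from the PaLM paper.
phases = [(6144, 1200), (3072, 336)]

chip_hours = sum(chips * hours for chips, hours in phases)
wall_clock_hours = sum(hours for _, hours in phases)   # assumes sequential phases
wall_clock_days = wall_clock_hours / 24

print(f"{chip_hours:,} TPUv4 chip-hours over {wall_clock_days:.0f} days")
```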

It's roughly an order of magnitude more compute than GPT-3.

| ML Model | Compute [FLOPs] | x GPT-3 |
|---|---|---|
| GPT-3 (2020) | 3.1e23 | 1 |
| Gopher (2021-12) | 6.3e23 | ≈2x |
| Chinchilla (2022-04) | 5.8e23 | ≈2x |
| PaLM (2022-04) | 2.5e24 | ≈10x |

Which is reasonable. It has been about <2.5 years since GPT-3 was trained (they mention the move to Azure disrupting training, IIRC, which lets you date it earlier than just 'May 2020'). Under the 3.4 month "AI and Compute" trend, you'd expect 8.8 doublings or the top run now being 445x. I do not think anyone has a 445x run they are about to unveil any second now. Whereas on the slower >5.7-month doubling in that link, you would expect <36x, which is still 3x PaLM's actual 10x, but at least the right order of magnitude.
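The doubling arithmetic can be sketched as follows. The snippet plugs in exactly 2.5 years and exactly 3.4 and 5.7 months, so it lands slightly above the rounded figures quoted here, which are stated as bounds ("<2.5 years", ">5.7-month"). The function name is made up for illustration.

```python
def growth_factor(elapsed_months, doubling_months):
    """Return (number of doublings, implied growth multiple) for a fixed doubling time."""
    doublings = elapsed_months / doubling_months
    return doublings, 2.0 ** doublings


ELAPSED_MONTHS = 2.5 * 12   # upper bound on the time since GPT-3 was trained

fast_doublings, fast_factor = growth_factor(ELAPSED_MONTHS, 3.4)   # "AI and Compute" trend
slow_doublings, slow_factor = growth_factor(ELAPSED_MONTHS, 5.7)   # slower trend

print(f"3.4-month doubling: {fast_doublings:.1f} doublings, about {fast_factor:.0f}x")
print(f"5.7-month doubling: {slow_doublings:.1f} doublings, about {slow_factor:.0f}x")
```

Either way, the gap between the two trends (hundreds of x versus tens of x) is much larger than the rounding differences.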

There may also be other runs...

Minor correction. You're saying:

> So training a 1-million parameter model on 10 books takes about as many FLOPS as training a 10-million parameter model on one book.

You link to FLOP per second, aka FLOPS (a rate), whereas you're talking about the plural of FLOP, a quantity (often written FLOPs).
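To make the distinction concrete, here is a small sketch. The accelerator numbers are hypothetical, and the `6 * params * tokens` rule is the commonly used approximation for dense-model training compute, not something stated in the post being corrected.

```python
def total_flop(peak_flop_per_s, utilization, seconds):
    """Quantity of operations (FLOP) = rate (FLOP/s) x utilization x time (s)."""
    return peak_flop_per_s * utilization * seconds


def training_flop(params, tokens):
    """Common approximation for training compute: about 6 FLOP per parameter per token."""
    return 6 * params * tokens


# Hypothetical accelerator: 100 TFLOP/s peak, 40% utilization, running for one day.
flop_in_a_day = total_flop(100e12, 0.4, 24 * 3600)
print(f"rate: 1.0e14 FLOP/s (performance), quantity: {flop_in_a_day:.3e} FLOP")

# The quoted claim is about quantity: parameters x data is the same in both cases.
TOKENS_PER_BOOK = 100_000   # hypothetical book length
small_on_ten_books = training_flop(1_000_000, 10 * TOKENS_PER_BOOK)
large_on_one_book = training_flop(10_000_000, 1 * TOKENS_PER_BOOK)
print(small_on_ten_books == large_on_one_book)
```

The first function shows why a FLOPS rating alone says nothing about training compute until it is multiplied by time; the second shows that the quoted comparison is a statement about FLOP, not FLOPS.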

I'm wondering: could one just continue training Gopher (the previous bigger model) on the newly added data?

Thanks for the comment! That sounds like a good and fair analysis/explanation to me.

We basically lumped the falling cost per FLOP and the increased spending together.

A report from CSET on AI and Compute projects the costs using two strongly simplifying assumptions: (I) compute doubling every 3.4 months (based on OpenAI's previous report) and (II) computing cost staying constant. This could give you some idea of upper bounds on projected costs.

Carey's previous analysis uses this dataset from AI Impacts and therefore assumes:

...[..] while the cost per unit of computation is decreasing by an order of magnitude every 4-12 years (the long-run

Thanks for sharing your thoughts. As you already outlined, the report mentions on several occasions that the hardware forecasts are the least informed:

> “Because they have not been the primary focus of my research, I consider these estimates unusually unstable, and expect that talking to a hardware expert could easily change my mind.”

This is partly why I started looking into this a couple of months ago, and I'm still working on it on the side. A couple of points come to mind:

- I discuss the compute estimate side of the report a bit in my TAI and Comput

Thanks, appreciate the pointers!

co-author here

I like your idea. Nonetheless, it's pretty hard to make estimates on "total available compute capacity". If you have any points, I'd love to see them.

Somewhat connected is the question: what share of this progress/trend is due to improvements in computational power versus increased spending? To get more insight into this, we're currently looking into computing power trends, in particular the development of FLOPS/$ over time.

Custom ML hardware (e.g. Google's TPUs or Baidu's Kunlun) is tricky to fit into these sorts of comparisons. For those, I think the MLPerf Benchmarks are super useful. I'd be curious to hear the authors' expectations of how this research changes in the face of more custom ML hardware.

I'd be pretty excited to see more work on this. Jaime already shared our hardware sheet where we collect information on GPUs, but as you point out, it lists peak performance, which is sometimes misleading.

Indeed, the MLPerf benchmarks are useful. I've already gathered ...

Great post! I especially liked that you outlined potential emerging technologies and the economic considerations.

Having looked a bit into this when writing my TAI and Compute sequence, I agree with your main takeaways. In particular, I'd like to see more work on DRAM and the interconnect trends and potential emerging paradigms.

I'd be interested in your compute forecasts to inform TAI timelines. For example, Cotra's draft report assumes a doubling time of 2.5 years for the FLOPs/$ but acknowledges that this forecast could be easily improved by someone with mo...

Thanks!

- I'm working with a colleague on how the three components of compute systems (compute, memory, and interconnect) have developed over time, and on comparing those trends to our best estimates for the human brain (or other biological anchors). This will still take some time, but I hope we can share it in the future (≈ by the end of the year).

Thanks for the correction and references. I just followed my "common sense" from lectures and other pieces.

What do you think made AlexNet stand out? Is it the depth and use of GPUs?

Thanks for the feedback, Gunnar. You're right - it's more of a recap and introduction. I think the "newest" insight is probably the updates in Section 2.3.

I'd also be curious to know which aspects and questions you're most interested in.

The Bay is an AI hub, home to OpenAI, Google, Meta, etc., and therefore an AI governance hub. Governance is not governments. Important decisions are being made there - maybe more important decisions than in DC. To quote Allan Dafoe:

Also, many, many A...