All of Lorec's Comments + Replies

Subjectively assessed value. This judgment cannot be abdicated.

If you can't generate your parents' genomes and everything from memory, then yes, you are in a state of uncertainty about who they are, in the same qualitative way you are in a state of uncertainty about who your young children will grow up to be.

Ditto for the isomorphism between your epistemic state w.r.t. never-met grandparents vs your epistemic state w.r.t. not-yet-born children.

It may be helpful to distinguish the subjective future, which contains the outcomes of all not-yet-performed experiments [i.e. all evidence/info not yet known] from the physical future, which is simply a direction in physical time.

We can hardly establish the sense of anthropic reasoning if we can't establish the sense of counterfactual reasoning.

A root confusion may be whether different pasts could have caused the same present, and hence whether I can have multiple simultaneous possible parents, in an "indexical-uncertainty" sense, in the same way that I can have multiple simultaneous possible future children.

The same standard physics theories that say it's impossible to be certain about the future, also say it's impossible to be certain about the past.

Indexical uncertainty about th...

thus we can update away from the doomsday argument, because we have way more evidence than the doomsday argument assumes.

I agree with this!

"Update away from" does not imply "discard".

...

alignment researchers are clearly not in charge of the path we take to AGI

If that's the case, we're doomed no matter what we try. So we had better back up and change it.

Don't springboard by RLing LLMs; you will get early performance gains and alignment will fail. We need to build something big we can understand. We probably need to build something small we can understand first.

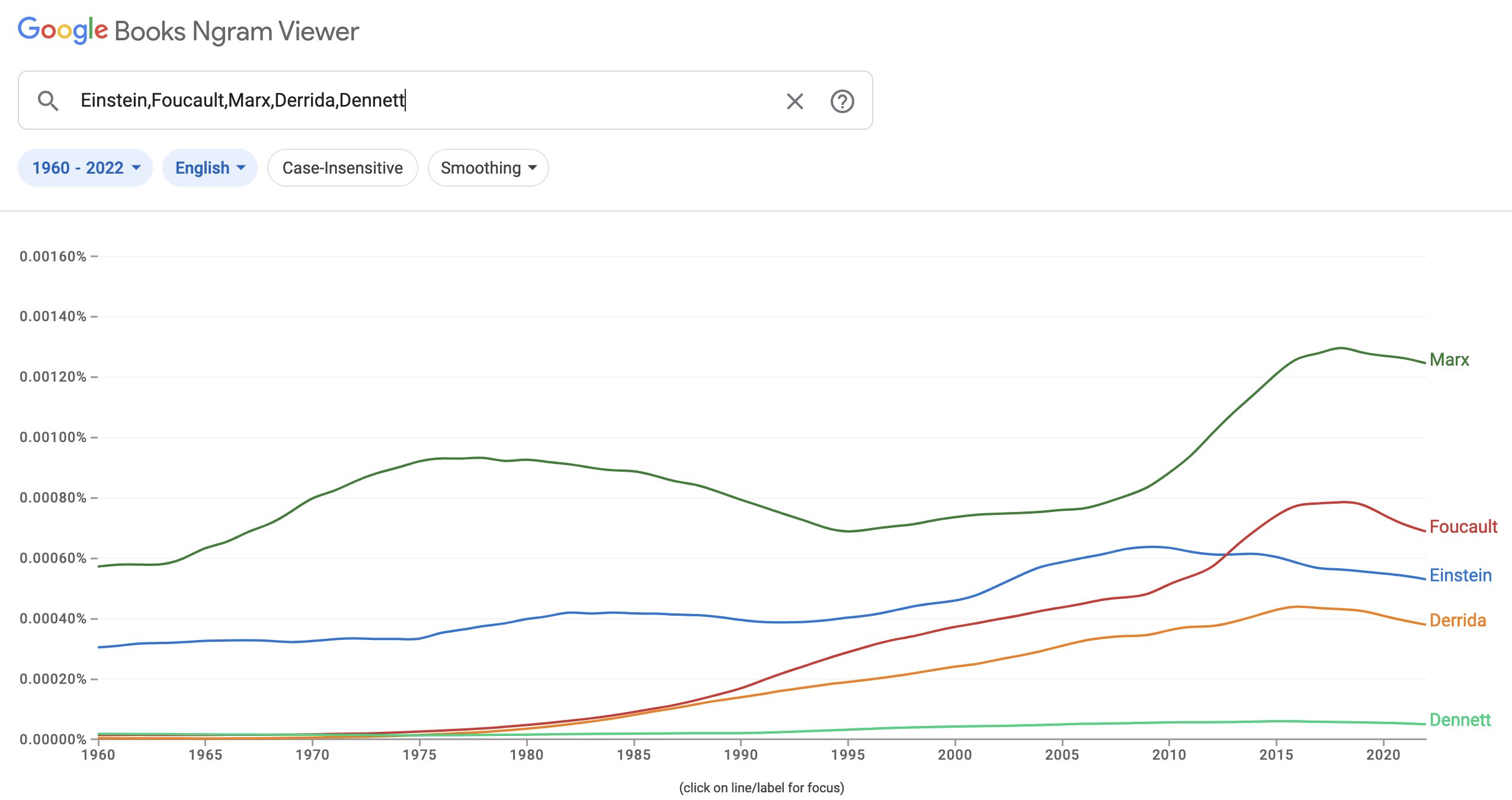

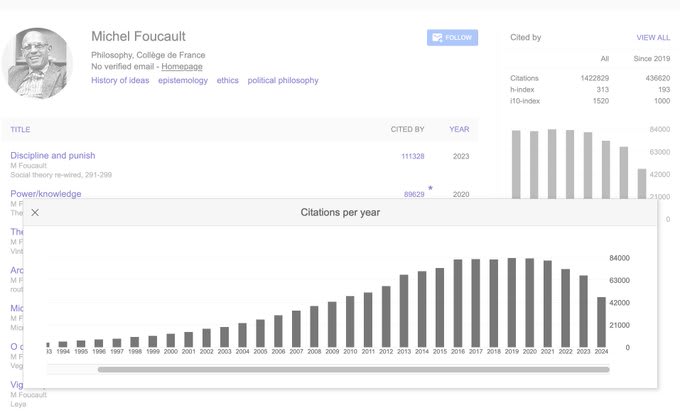

I expect any tests to show unambiguously that it's "not being replaced at all and citations[/mentions] chaotically swirling". If I understand Evans correctly, these were all random eminent figures he picked, not selected to be falling out of fashion - and they do seem to be a pretty broad sample of the "old prestigious standard names" space.

The stand mixer is a clever analogy; I didn't previously have experience with the separation thing.

I presume you've seen Is Clickbait Destroying Our General Intelligence?, and probably Hanson's cultural evolution / cult...

[ Look at those same authors with some other mention-counting tool, you mean? ]

Interesting question: why are people quickly becoming less interested in previous standards?

[ Source ]

Computer science fact of the day 2: JavaScript really was mostly-written in just a few weeks by Brendan Eich on contract-commission for Netscape [ to the extent that you consider it as having been "written [as a new programming language]", and not just specced out as a collective of "funny-looking macros" for Scheme. ] It took Eich several more months to finish the SpiderMonkey parser whose core I still use every time I browse the Internet today. However, e.g. the nonprofit R5RS did not ship a parser/interpreter [ likewise with similar committee projects ]...

Boldface vs Quotation - i.e., the interrogating qualia skeptic, vs the defender of qualia as a gnostic absolute - is a common pattern. However, your Camp #1 and Camp #2 - Boldfaces who favor something like Global Workspace Theory, and Quotations who favor something like IIT - don't exist, in my experience.

Antonio Damasio is a Boldface who favors a body-mind explanation for consciousness which is quasi-neurological, but which is much closer to IIT than Global Workspace Theory in its level of description. Descartes was a Quotation who, unlike Chalmers, didn'...

[A], just 'cause I anticipate the 'More and more' will turn people off [it sounds like it's trying to call the direction of the winds rather than just where things are at].

[ Thanks for doing this work, by the way. ]

No, what I'm talking about here has nothing to do with hidden-variable theories. And I still don't think you understand my position on the EPR argument.

I'm talking about a universe which is classical in the sense of having all parameters be simultaneously determinate without needing hidden variables, but not necessarily classical in the sense of space[/time] always being arbitrarily divisible.

Oh, sorry, I wasn't clear: I didn't mean a classical universe in the sense of conforming to Newton's assumptions about the continuity / indefinite divisibility of space [and time]. I meant a classical universe in the sense of all quasi-tangible parameters simultaneously having a determinate value. I think we could still use the concept of logical independence, under such conditions.

"building blocks of logical independence"? There can still be logical independence in the classical universe, can't there?

Is this your claim - that quantum indeterminacy "comes from" logical independence? I'm not confused about quantum indeterminacy, but I am confused about in what sense you mean that. Do you mean that there exists a formulation of a principle of logical independence which would hold under all possible physics, which implies quantum indeterminacy? Would this principle still imply quantum indeterminacy in an all-classical universe?

Imagine all humans ever, ordered by date of their birth.

All humans of the timeline I actually find myself a part of, or all humans that could have occurred, or almost occurred, within that timeline? Unless you refuse to grant the sense of counterfactual reasoning in general, there's no reason within a reductionist frame to dismiss counterfactual [but physically plausible and very nearly actual] values of "all humans ever".

Even if you consider the value of "in which 10B interval will I be born?" to be some kind of particularly fixed absolute about my exi...

I am not confused about the nature of quantum indeterminacy.

This is [...] Feynman's argument

I don't know why it's true, but it is in fact true

Oh, I hadn't been reading carefully. I'd thought it was your argument. Well, unfortunately, I haven't solved exorcism yet, sorry. BEGONE, EVIL SPIRIT. YOU IMPEDE SCIENCE. Did that do anything?

What we lack here is not so much a "textbook of all of science that everyone needs to read and understand deeply before even being allowed to participate in the debate". Rather, we lack good, commonly held models of how to reason about what is theory, and good terms to (try to) coordinate around and use in debates and decisions.

Yudkowsky's sequences [/Rationality: AI to Zombies] provide both these things. People did not read Yudkowsky's sequences and internalize the load-bearing conclusions enough to prevent the current poor state of AI theory discourse...

The Aisafety[.]info group has collated some very helpful maps of "who is doing what" in AI safety, including this recent spreadsheet account of technical alignment actors and their problem domains / approaches as of 2024 [they also have an AI policy map, on the off chance you would be interested in that].

I expect "who is working on inner alignment?" to be a highly contested category boundary, so I would encourage you not to take my word for it, and to look through the spreadsheet and probably the collation post yourself [the post contains possibly-clueful-...

Yes, I think that's a validly equivalent and more general classification. Although I'd reflect that "survive but lack the power or will to run lots of ancestor-simulations" didn't seem like a plausible-enough future to promote it to consideration, back in the '00s.

...But, ["I want my father to accept me"] is already a very high level goal and I have no idea how it could be encoded in my DNA. Thus maybe even this is somehow learned. [ . . . ] But, to learn something, there needs to be a capability to learn it - an even simpler pattern which recognizes "I want to please my parents" as a refined version of itself. What could that proto-rule, the seed which can be encoded in DNA, look like? [ . . . ] equip organisms with something like "fundamental uncertainty about the future, existence and food paired with an ability to

[ Note: I strongly agree with some parts of jbash's answer, and strongly disagree with other parts. ]

As I understand it, Bostrom's original argument, the one that got traction for being an actually-clever and thought-provoking discursive fork, goes as follows:

...

Future humans in specific, will at least one of: [ die off early, run lots of high-fidelity simulations of our universe's history ["ancestor-simulations"], decide not to run such simulations ].

If future humans run lots of high-fidelity ancestor-simulations, then most people who subjectively exp

Then your neighbor wouldn't exist and the whole probability experiment wouldn't happen from their perspective.

From their perspective, no. But the answer to

In which ten billion interval your birth rank could've been

changes. If by your next-door neighbor marrying a different man, one member of the other (10B - 1) is thus swapped out, you were born in a different 10B interval.

Unless I'm misunderstanding what you mean by "In which ten billion interval"? What do you mean by "interval", as opposed to "set [of other humans]", or just "circumstances"?

If we're discussing the object-level story of "the breakfast question", I highly doubt that the results claimed here actually occurred as described, due [as the 4chan user claims] to deficits in prisoner intelligence, and that "it's possible [these people] lack the language skills to clearly communicate about [counterfactuals]".

Did you find an actual study, or other corroborating evidence of some kind, or just the greentext?

Is the quantum behavior itself, with the state of the system extant but stored in [what we perceive as] an unusual format, deterministic? If you grant that there's no in-the-territory uncertainty with mere quantum mechanics, I bet I can construct a workaround for fusing classical gravity with it that doesn't involve randomness, which you'll accept as just as plausible as the one that does.

To me the more interesting thing is not the mechanism you must invent to protect Heisenberg uncertainty from this hypothetical classical gravitational field, but the reason you must invent it. What, in Heisenberg uncertainty, are you protecting?

Does standard QED, by itself, contain something of the in-the-territory state-of-omitted-knowledge class you imagine precludes anthropic thinking? If not, what does it contain, that requires such in-the-territory obscurity to preserve its nature when it comes into contact with a force field that is deterministic?

[ Broadly agreed about the breakfast hypothetical. Thanks for clarifying. ]

In the domain of anthropic reasoning, the questions we're asking aren't of the form

A) I've thrown a six sided die, even though I could've thrown a 20 sided one, what is the probability to observe 6?

or

B) I've thrown a six sided die, what would be the probability to observe 6, if I've thrown a 20 sided die instead?

In artificial spherical-cow anthropics thought experiments [like Carlsmith's], the questions we're asking are closer to the form of

...A six-sided die was thrown with

I enjoyed reading this post; thank you for writing it. LessWrong has an allergy to basically every category Marx is a member of - "armchair" philosophers, socialist theorists, pop humanities idols - in my view, all entirely unjustified.

I had no idea Marx's forecast of utopia was explicitly based on extrapolating the gains from automation; I take your word for it somewhat, but from being passingly familiar with his work, I have a hunch you may be overselling his naivete.

Unfortunately, since the main psychological barrier to humans solving the technical alig...

So yes, I think this is a valid lesson that we can take from Principle (A) and apply to AGIs, in order to extract an important insight. This is an insight that not everyone gets, not even (actually, especially not) most professional economists, because most professional economists are trained to lump in AGIs with hammers, in the category of “capital”, which implicitly entails “things that the world needs only a certain amount of, with diminishing returns”.

This trilemma might be a good way to force people-stuck-in-a-frame-of-traditional-economics to actu...

Wild ahead-of-time guess: the true theory of gravity explaining why galaxies appear to rotate too slowly for a square root force law will also uniquely explain the maximum observed size of celestial bodies, the flatness of orbits, and the shape of galaxies.

Epistemic status: I don't really have any idea how to do this, but here I am.

When I stated Principle (A) at the top of the post, I was stating it as a traditional principle of economics. I wrote: “Traditional economics thinking has two strong principles, each based on abundant historical data”,

I don't think you think Principle [A] must hold, but I do think you think it's in question. I'm saying that, rather than taking this very broad general principle of historical economic good sense, and giving very broad arguments for why it might or might not hold post-AGI, we can start reasoning about superintelligent manufacturing [includ...

You seem to have misunderstood my text. I was stating that something is a consequence of Principle (A),

My position is that if you accept certain arguments made about really smart AIs in "The Sun is Big", Principle A, by itself, ceases to make sense in this context.

costs will go down. You can argue that prices will equilibrate to costs, but it does need an argument.

Assuming constant demand for a simple input, sure, you can predict the price of that input based on cost alone. The extent to which "the price of compute will go down" is rolled into ho...

Thank you for writing this and hopefully contributing some clarity to what has been a confused area of discussion.

...So here’s a question: When we have AGI, what happens to the price of chips, electricity, and teleoperated robots?

(…Assuming free markets, and rule of law, and AGI not taking over and wiping out humanity, and so on. I think those are highly dubious assumptions, but let’s not get into that here!)

Principle (A) has an answer to this question. It says: prices equilibrate to marginal value, which will stay high, because AGI amounts to ambitious ent

I didn't say he wasn't overrated. I said he was capable of physics.

Did you read the linked post? Bohm, Aharonov, and Bell misunderstood EPR. Bohm's and Aharonov's formulation of the thought experiment is easier to "solve" but does not actually address EPR's concern, which is that mutual non-commutation of x-, y-, and z-spin implies hidden variables must not be superfluous. Again, EPR were fine with mutual non-commutation, and fine with entanglement. What they were pointing out was that the two postulates don't make sense in each other's presence.

Your linked post on The Obliqueness Thesis is curious. You conclude thus:

Obliqueness obviously leaves open the question of just how oblique. It's hard to even formulate a quantitative question here. I'd very intuitively and roughly guess that intelligence and values are 3 degrees off (that is, almost diagonal), but it's unclear what question I am even guessing the answer to. I'll leave formulating and answering the question as an open problem.

I agree, values and beliefs are oblique. The 3 spatial dimensions are also mutually oblique, as per General Rel...

The characters don't live in a world where sharing or smoothing risk is already seen as a consensus-valuable pursuit; thus, they will have to be convinced by other means.

I gave their world weirdly advanced [from our historical perspective] game theory to make it easier for them to talk about the question.

Cowen, like Hanson, discounts large qualitative societal shifts from AI that lack corresponding contemporary measurables.

Einstein was not an experimentalist, yet was perfectly capable of physics; his successors have largely not touched his unfinished work, and not for lack of data.

Not to be too cheeky, the idea is that if we understand insurance, it should be easy to tell if the characters' arguments are sound-and-valid, or not.

The obtuse bit at the beginning was an accidental by-product of how I wrote it; I admittedly nerdsniped myself trying to find the right formula.

Dwarkesh asks, what would happen if the world population would double? Tyler says, depends what you’re measuring. Energy use would go up.

Yes, economics after von Neumann very much turned into a game of "don't believe in anything you can't already comparatively quantify". It is supremely frustrating.

...On that note, I’d also point everyone to Dwarkesh Patel’s other recent podcast, which was with physicist Adam Brown. It repeatedly blew my mind in the best of ways, and I’d love to be in a different branch where I had the time to dig into some of the statem

Last big piece: if one were to recruit a bunch of physicists to work on alignment, I think it would be useful for them to form a community mostly-separate from the current field. They need a memetic environment which will amplify progress on core hard problems, rather than... well, all the stuff that's currently amplified.

Yes, exactly. Unfortunately, actually doing this is impossible, so we all have to keep beating our heads against a wall just the same.

This does not matter for AI benchmarking because by the time the Sun has gone out, either somebody succeeded at intentionally engineering and deploying [what they knew was going to be] an aligned superintelligence, or we are all dead.

I'm willing to credit that increased velocity of money by itself made aristocracy untenable post-industrialization. Increased ease of measurement is therefore superfluous as an explanation.

Why believe we've ever had a meritocracy - that is, outside the high [real/underlying] money velocities of the late 19th and early 20th centuries [and the trivial feudal meritocracy of heredity]?

0 trips -> 1 trip is an addition to the number of games played, but it's also an addition to the percentage of income bet on that one game - right?

Dennis is also having trouble understanding his own point, FWIW. That's how the dialogue came out; both people in that part are thinking in loose/sketchy terms and missing important points.

The thing Dennis was trying to get at by bringing up the concrete example of an optimal Kelly fraction is that it doesn't make sense for willingness to make a risky bet to have no dependence on available capital; he perceives Jill as suggesting that this is the case.
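[ A minimal sketch of the dependence Dennis is gesturing at, assuming the simplest textbook case: a single binary bet won with probability `p_win` that pays `net_odds` per unit staked. The function names and the numbers are illustrative assumptions, not anything taken from the dialogue. The Kelly fraction is a share of capital, so the optimal stake in absolute terms scales with how much capital is available. ]

```python
def kelly_fraction(p_win: float, net_odds: float) -> float:
    """Kelly fraction f* = p - (1 - p) / b for a binary bet.

    p_win    -- probability of winning (assumed known)
    net_odds -- net payout b per unit staked (b = 1.0 means even money)
    Returns 0.0 when the bet has no positive edge.
    """
    if not 0.0 <= p_win <= 1.0:
        raise ValueError("p_win must be in [0, 1]")
    if net_odds <= 0.0:
        raise ValueError("net_odds must be positive")
    return max(0.0, p_win - (1.0 - p_win) / net_odds)


def kelly_stake(capital: float, p_win: float, net_odds: float) -> float:
    """Absolute stake: the Kelly fraction applied to available capital."""
    return capital * kelly_fraction(p_win, net_odds)


if __name__ == "__main__":
    # Same bet, different bankrolls: the fraction is fixed, the stake is not.
    for capital in (100.0, 1_000.0, 10_000.0):
        print(capital, kelly_stake(capital, p_win=0.6, net_odds=1.0))
```

[ Under these assumptions the fraction depends only on the bet, while the amount it makes sense to risk depends on the bankroll. ]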

Relevant to whether the hot/cold/wet/dry system is a good or a bad idea, from our perspective, is that doctors don't currently use people's star signs for diagnosis. Bogus ontologies can be identified by how they promise to usefully replace more detailed levels of description - i.e., provide a useful abstraction that carves reality at the joints - and yet don't actually do this, from the standpoint of the cultures they're being sold to.

To your first question:

I honestly think it would be imprudent of me to give more examples of [what I think are] ontology pyramid schemes; readers would either parse them as laughable foibles of the outgroup, and thus write off my meta-level point as trivial, or feel aggressed and compelled to marshal soldiers against my meta-level point to dispute a perceived object-level attack on their ingroup's beliefs.

I think [something like] this [implicit] reasoning is likely why the Sequences are around as sparse as this post is on examples, and I think it's wise th...

Whoa, I hadn't realized you'd originated the term "anti-inductive"! Scott apparently didn't realize he was copying this from you, and neither I nor anyone else apparently realized this was your single-point-of-origin coinage, either.

Politics isn't quite a market for mindshare, as there are coercive equilibria at play. But I think it's another arena that can accurately be called anti-inductive. "This is an adversarial epistemic environment, therefore tune up your level of background assumption that apparent opportunity is not real" has [usefully IMO] become...