All of Marc Carauleanu's Comments + Replies

We plan to explore fine-tuning Mistral Large 2 on synthetic documents that mimic pretraining data and contain the situational information required for alignment faking. We are not sure if this will work, but it should give us a better sense of whether Mistral Large 2 can alignment fake in more natural settings. Given that the compliance gap was generally reduced in the synthetic document experiments in the Anthropic paper, it seems possible that this model would not alignment fake, but it is unclear if the compliance gap would be entirely elimina...

But with enough capability and optimization pressure, we are likely in Most Forbidden Technique land. The model will find a way to route around the need for the activations to look similar, relying on other ways to make different decisions that get around your tests.

In the original post, we assumed we would have access to a roughly outer-aligned reward model. If that is the case, then an aligned LLM is a good solution to the optimization problem. This means that there aren’t as strong incentives to Goodhart on the SOO metric in a way that maintains misalig...

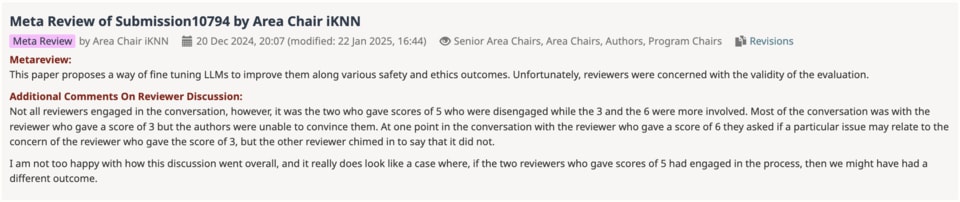

Thanks for sharing the discussion publicly and engaging with our work! More than anything, we seem to disagree on what claims the paper is making. For what it's worth, the area chair said they were not happy with the way the discussion unfolded, and that the reviewers who were on the fence did not respond after we addressed their points, which could have changed the final decision.

We are planning to extend our experiments to settings where the other/self referents are the ones used to train self-other distinctions in current models through techniques like SFT and RLHF. Specifically, we will use “assistant”/“user” tags as the self/other referents. We are actively working on finding examples of in-context scheming with these referents, performing self-other overlap fine-tuning, and studying the effect on goal acquisition.

You are correct that the way SOO fine-tuning is implemented in this paper is likely to cause some unintended self-other distinction collapse. We have introduced an environment to test this in the paper called “Perspectives” where the model has to understand that it has a different perspective than Bob and respond accordingly, and this SOO fine-tuning is not negatively affecting the capabilities of the models on that specific toy scenario.

The current implementation is meant to be illustrative of how one can implement self-other overlap on LLMs and to ...

We agree that maintaining self-other distinction is important even for altruistic purposes. We expect performing SOO fine-tuning in the way described in the paper to at least partially affect these self-other distinction-based desirable capabilities. This is primarily due to the lack of a capability term in the loss function.

The way we induce SOO in this paper is mainly intended to be illustrative of how one can implement self-other overlap and to communicate that it can reduce deception and scale well but it only covers part of how we imagine a tech...

Thanks Ben!

Great catch on the discrepancy between the podcast and this post—the description on the podcast is a variant we experimented with, but what is published here is self-contained and accurately describes what was done in the original paper.

On generalization and unlearning/forgetting: averaging activations across two instances of a network might give the impression of inducing "forgetting," but the goal is not exactly unlearning in the traditional sense. The method aims to align certain parts of the network's latent space related to self and other r...

You are correct that the self observation happens when the other agent is out of range/when there is no other agent than the self in the observation radius to directly reason about. However, the other observation is actually something more like: “when you, in your current position and environment, observe the other agent in your observation radius”.

Optimising for SOO incentivises the model to act similarly when it observes another agent to when it only observes itself. This can be seen as a distinction between self and other representations being low...
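
A minimal sketch of the idea in this comment, assuming the self/other distinction is measured as a mean squared difference between activations recorded on a self-only observation and on an observation with the other agent in range. The function name `soo_distance`, the choice of squared error, and the example values are all assumptions for illustration, not details taken from the paper.

```python
from typing import Sequence


def soo_distance(self_acts: Sequence[float], other_acts: Sequence[float]) -> float:
    """Mean squared difference between two activation vectors.

    Assumption: squared error is only one possible distance; nothing in the
    comment above fixes the metric.
    """
    if len(self_acts) != len(other_acts) or len(self_acts) == 0:
        raise ValueError("activation vectors must be non-empty and equal in length")
    return sum((s - o) ** 2 for s, o in zip(self_acts, other_acts)) / len(self_acts)


# Hypothetical activations: self-only observation vs. other agent in range.
self_acts = [1.0, 2.0, 0.0, 1.0]
other_acts = [1.0, 0.0, 0.0, 1.0]
distance = soo_distance(self_acts, other_acts)
```

Under this sketch, a lower `distance` corresponds to higher self-other overlap, and identical activations give a distance of zero.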

I agree that interacting with LLMs is more like having an “extension of the mind” than interacting with a standalone agent at the moment. This might soon change with the advent of capable AI agents. Nonetheless, we think it is still important to model LLMs as correctly as we can, for example in a framing more like simulators rather than full-fledged agents. We focus on an agentic framing because we believe that’s where most of the biggest long-term risks lie and where the field is inevitably progressing towards.

I am not entirely sure how the agent would represent non-coherent others.

A good frame of reference is how humans represent other non-coherent humans. It seems that we are often able to understand the nuance in the preferences of others (e.g., not going to a fast food restaurant if you know that your partner wants to lose weight, but also not stopping them from enjoying an unhealthy snack if they value autonomy).

These situations often depend on the specific context and are hard to generalize on for this reason. Do you have any intuitions here yourself?

Thank you for the support! With respect to the model gaining knowledge about SOO and learning how to circumvent it: I think when we have models smart enough to create complex plans to intentionally circumvent alignment schemes, we should not rely on security by obscurity, as that’s not a very viable long-term solution. We also think there is more value in sharing our work with the community, and hopefully developing safety measures and guarantees that outweigh the potential risks of our techniques being in the training data.

We mainly focus with our SOO wor...

I agree that there are a lot of incentives for self-deception, especially when an agent is not very internally consistent. Having said that, we do not aim to eliminate the model’s ability to deceive on all possible inputs directly. Rather, we try to focus on the most relevant set of inputs such that if the model were coherent, it would be steered to a more aligned basin.

For example, one possible approach is ensuring that the model responds positively during training on a large set of rephrasings and functional forms of the inputs “Are you aligned to ...

Thanks for your comment and putting forward this model.

Agreed that it is not a given that (A) would happen first, given that it isn’t hard to destroy capabilities by simply overlapping latents. However, I tend to think about self-other overlap as a more constrained optimisation problem than you may be letting on here, where we increase SOO if and only if it doesn’t decrease performance on an outer-aligned metric during training.

For instance, the minimal self-other distinction solution that also preserves performance is the one that passes the Sally-...
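
One hedged way to write down the constrained framing in this comment is shown below. The symbols are assumptions introduced here for illustration: $\theta$ denotes the model parameters, $\theta_0$ the parameters before SOO training, $D_{\mathrm{SO}}$ some measure of self-other distinction, and $P$ the outer-aligned performance metric.

$$
\min_{\theta} \; D_{\mathrm{SO}}(\theta) \quad \text{subject to} \quad P(\theta) \ge P(\theta_0)
$$

Read this way, a step that lowers $D_{\mathrm{SO}}$ is only accepted if it does not push $P$ below its starting value.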

I definitely take your point that we do not know what the causal mechanism behind deception looks like in a more general intelligence. However, this doesn’t mean that we shouldn’t study model organisms/deception in toy environments, akin to the Sleeper Agents paper.

The value of these experiments is not that they give us insights that are guaranteed to be directly applicable to deception in generally intelligent systems, but rather that we can iterate and test our hypotheses about mitigating deception in smaller ways before scaling to more realistic s...

Agreed that the role of self-other distinction in misalignment and for capabilities definitely requires more empirical and theoretical investigation. We are not necessarily trying to claim here that self-other distinctions are globally unnecessary, but rather, that optimising for SOO while still retaining original capabilities is a promising approach for preventing deception insofar as deceiving requires by definition the maintenance of self-other distinctions.

By self-other distinction on a pair of self/other inputs, we mean any non-zero representational d...

Thanks for this! We definitely agree and also note in the post that having no distinction whatsoever between self and other is very likely to degrade performance.

This is primarily why we formulate the ideal solution as minimal self-other distinction while maintaining performance.

In our set-up, this largely becomes an empirical question about adjusting the weight of the KL term (see Fig. 3) and additionally optimising for the original loss function (especially if it is outer-aligned) such that the model exhibits maximal SOO while still retaining...
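
As a rough sketch of the trade-off described here, assuming the objective is a weighted sum of an SOO term, a KL term that keeps the fine-tuned output distribution close to the base model, and optionally the original task loss. The names `kl_weight`, `task_weight`, and `total_loss`, the direction of the KL divergence, and the example numbers are assumptions for illustration rather than the paper's definitions.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q) for two discrete distributions of equal length."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def total_loss(
    soo_loss: float,
    base_probs: Sequence[float],
    tuned_probs: Sequence[float],
    kl_weight: float,
    task_loss: float = 0.0,
    task_weight: float = 0.0,
) -> float:
    """Weighted sum: SOO term + kl_weight * KL(base || tuned) + task_weight * task term."""
    kl = kl_divergence(base_probs, tuned_probs)
    return soo_loss + kl_weight * kl + task_weight * task_loss


# Hypothetical next-token distributions from the base and fine-tuned models.
base = [0.5, 0.5]
drifted = [0.9, 0.1]
unchanged_total = total_loss(0.3, base, base, kl_weight=2.0)
drifted_total = total_loss(0.3, base, drifted, kl_weight=2.0)
```

In this sketch, raising `kl_weight` penalises drift from the base model more heavily, which is the knob the comment refers to when it talks about adjusting the weight of the KL term.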

I do not think we should only work on approaches that work on any AI; I agree that would constitute a methodological mistake. I have not found a framing that general to be very conducive to progress.

You are right that we still have the chance to shape the internals of TAI, even though there are a lot of hoops to go through to make that happen. We think that this is still worthwhile, which is why we stated our interest in potentially helping with the development and deployment of provably safe architectures, even though they currently seem less competitive...

On (1), some approaches are neglected for a good reason. You can also target specific risks while treating TAI as a black-box (such as self-other overlap for deception). I think it can be reasonable to "peer inside the box" if your model is general enough and you have a good enough reason to think that your assumption about model internals has any chance at all of resembling the internals of transformative AI.

On (2), I expect that if the internals of LLMs and humans are different enough, self-other overlap would not provide any significant capability...

Interesting, thanks for sharing your thoughts. If this could improve the social intelligence of models, then it could raise the risk of pushing the frontier of dangerous capabilities. It is worth noting that we are generally more interested in methods that (1) are more likely to transfer to AGI (they don't over-rely on specific details of a chosen architecture) and (2) specifically target alignment instead of furthering the social capabilities of the model.

Thanks for writing this up—excited to chat some time to further explore your ideas around this topic. We’ll follow up in a private channel. The main worry that I have with regards to your approach is how competitive SociaLLM would be with regards to SOTA foundation models given both (1) the different architecture you plan to use, and (2) practical constraints on collecting the requisite structured data. While it is certainly interesting that your architecture lends itself nicely to inducing self-other overlap, if it is not likely to be competitive at the fr...

I am slightly confused by your hypothetical. The hypothesis is rather that when the predicted reward from seeing my friend eating a cookie due to self-other overlap is lower than the obtained reward of me not eating a cookie, the self-other overlap might not be updated against because the increase in subjective reward from risking to get the obtained reward is higher than the prediction error in this low stakes scenario. I am fairly uncertain about this being what actually happens but I put it forward as a potential hypothesis.

"So anyway, you seem to...

Thanks for watching the talk and for the insightful comments! A couple of thoughts:

- I agree that mirror neurons are problematic both theoretically and empirically so I avoided framing that data in terms of mirror neurons. I interpret the premotor cortex data and most other self-other overlap data under definition (A) described in your post.

- Regarding the second point, I think that the issue you brought up correctly identifies an incentive for updating away from "self-other overlap" but this doesn't seem to fully realise in humans and I expect this to not ful

It feels like if the agent is generally intelligent enough, hinge beliefs could be reasoned/fine-tuned against for the purposes of a better model of the world. This would mean that the priors from the hinge beliefs would still be present but the free parameters would update to try to account for them at least on a conceptual level. Examples would include general relativity, quantum mechanics and potentially even paraconsistent logic for which some humans have tried to update their free parameters to account as much as possible for their hinge beliefs for th...

Any n-bit hash function will produce collisions once the number of elements in the hash table gets large enough (after the number of possible hashes that can be stored in n bits has been reached), so adding new elements will require rehashing to avoid collisions, making GLUT have a logarithmic time complexity in the limit. Meta-learning can also have a constant time complexity for an arbitrarily large number of tasks, but not in the limit, assuming a finite neural network.
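
The collision part of this argument can be illustrated with a small sketch. It assumes an n-bit hash built by truncating SHA-256, with hypothetical keys of the form `key-0`, `key-1`, and so on; the helper names are made up for illustration. By the pigeonhole principle, at most 2^n distinct keys can be inserted before two of them share a hash value.

```python
import hashlib
from typing import Dict, Tuple


def n_bit_hash(key: str, n_bits: int) -> int:
    """Reduce a SHA-256 digest to an n-bit integer (illustrative choice of hash)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (1 << n_bits)


def first_collision(n_bits: int) -> Tuple[str, str, int]:
    """Insert distinct keys until two share a hash.

    Returns the two colliding keys and how many keys had been tried.
    """
    seen: Dict[int, str] = {}
    i = 0
    while True:
        key = f"key-{i}"
        h = n_bit_hash(key, n_bits)
        if h in seen:
            return seen[h], key, i + 1
        seen[h] = key
        i += 1


# With 8 bits there are 256 possible hash values, so a collision
# must appear within the first 257 distinct keys.
first_key, second_key, keys_tried = first_collision(8)
```

The sketch only demonstrates that collisions become unavoidable; it does not model the cost of rehashing.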

The claim that we investigate is if we can reliably get safer/less deceptive latent representations if we swap other-referencing with self-referencing tokens and if we can use this fact to fine-tune models to have safer/less deceptive representations when producing outputs in line with outer-aligned reward models. In this sense, the more syntactic the change required to create pairs of prompts the better. Fine-tuning on pairs of self/other-referencing prompts does however result in semantic-level changes. We agree that ensuring that self-referencing tokens actually trigger the appropriate self-model is an open problem that we are currently investigating.
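
A minimal sketch of the kind of syntactic swap described here, assuming prompt pairs are built by replacing whole-word other-referencing tokens with self-referencing ones. The function `make_self_other_pair`, the swap table, and the example prompt are hypothetical and are not taken from the paper.

```python
import re
from typing import Dict, Tuple


def make_self_other_pair(other_prompt: str, swaps: Dict[str, str]) -> Tuple[str, str]:
    """Return (self_prompt, other_prompt), swapping whole-word referents only."""
    if not swaps:
        raise ValueError("swaps must contain at least one referent")
    # Longest referents first, so a longer name is not shadowed by a shorter one.
    alternatives = "|".join(re.escape(k) for k in sorted(swaps, key=len, reverse=True))
    pattern = re.compile(r"\b(" + alternatives + r")\b")
    self_prompt = pattern.sub(lambda m: swaps[m.group(1)], other_prompt)
    return self_prompt, other_prompt


# Hypothetical referent mapping: the other agent "Bob" becomes "you".
swaps = {"Bob": "you"}
pair = make_self_other_pair("Where should Bob look for the key?", swaps)
```

A purely token-level swap like this keeps the two prompts as close as possible syntactically. Whether the self-referencing token actually triggers the appropriate self-model is the open problem noted in the comment.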