Transformer Attention’s High School Math Mistake

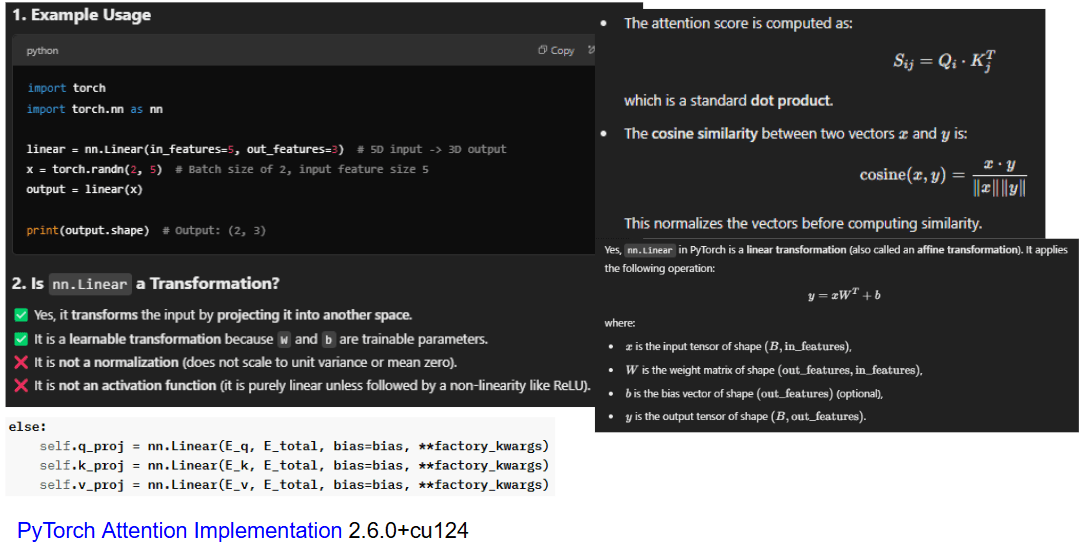

Each data point (input data, weights & bias) of a neural network has coordinates. Data alone, without coordinates, is almost meaningless. When attention mechanisms (Q, K, and V) undergo a linear transformation, they are projected into a different space with new coordinates. The attention score is then computed based on...

DeepSeek V3 mitigated this mistake unknowingly. In their MLA, K, V shares the same nn.linear.