All of Maxime Riché's Comments + Replies

Truth-seeking AIs by default? One hope for alignment by default is that AI developers may have to train their models to be truth-seeking to be able to make them contribute to scientific and technological progress, including RSI. Truth-seeking about the world model may generalize to truth-seeking for moral values, as observed in humans, and that's an important meta-value guiding moral values towards alignment.

In humans, truth-seeking is maybe pushed back from being a revealed preference at work to being a stated preference outside of work, because of status...

Thanks for your corrections, that's welcome

> 32B active parameters instead of likely ~220B for GPT4 => 6.8x lower training ... cost

Doesn't follow, training cost scales with the number of training tokens. In this case DeepSeek-V3 uses maybe 1.5x-2x more tokens than original GPT-4.

Each of the points above is a relative comparison with more or less everything else kept constant. In this bullet point, by "training cost", I mostly had in mind "training cost per token":

32B active parameters instead of likely ~280B (edited from ~220B) for GPT4 => 8.7x (edited from 6.8x) lower t

Simple reasons for DeepSeek V3 and R1 efficiencies:

- 32B active parameters instead of likely ~220B for GPT4 => 6.8x lower training and inference cost

- 8bits training instead of 16bits => 4x lower training cost

- No margin on commercial inference => ?x maybe 3x

- Multi-token training => ~2x training efficiency, ~3x inference efficiency, and lower inference latency by baking in "predictive decoding", possibly 4x fewer training steps for the same number of tokens if predicting tokens only once

- And additional cost savings from memory optimization, especially
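The ratio in the first bullet can be checked with a rough sketch. It assumes the common rule of thumb of about 6 training FLOPs per active parameter per token, which is not stated in the comment; the parameter counts are the comment's own guesses, and the 1.5x-2x token multipliers come from the reply quoted above. The function name is hypothetical.

```python
# Assumption (not from the comment): ~6 training FLOPs per active parameter per token.
FLOPS_PER_PARAM_PER_TOKEN = 6.0


def training_flops(active_params: float, tokens: float) -> float:
    """Rough training compute for a model with `active_params` seeing `tokens` tokens."""
    return FLOPS_PER_PARAM_PER_TOKEN * active_params * tokens


deepseek_active = 32e9  # active parameters used in the comment
gpt4_active = 220e9     # the comment's guess for GPT-4

# Cost per token: token counts held equal (normalised to 1).
per_token_ratio = training_flops(gpt4_active, 1.0) / training_flops(deepseek_active, 1.0)

# Total cost if DeepSeek-V3 used 1.5x or 2x more tokens than GPT-4.
total_ratio = {
    multiplier: training_flops(gpt4_active, 1.0) / training_flops(deepseek_active, multiplier)
    for multiplier in (1.5, 2.0)
}

print(per_token_ratio)
print(total_ratio)
```

Under these assumptions the per-token advantage is the plain ratio of active parameters, and it shrinks proportionally once the larger token count is included.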

Models trained for HHH are likely not trained to be corrigible. Models should be trained to be corrigible too in addition to other propensities.

Corrigibility may be included in Helpfulness alone, but once Harmlessness is added, corrigibility conditional on being changed to be harmful is removed. So the result is not that surprising from that point of view.

People may be blind to the fact that improvements from gpt2 to 3 to 4 were both driven by scaling training compute (by 2 OOM between each generation) and (the hidden part) by scaling test compute through long context and CoT (like 1.5-2 OOM between each generation too).

If gpt5 uses just 2 OOM more training compute than gpt4 but the same test compute, then we should not expect "similar" gains, we should expect "half".

O1 may use 2 OOM more test compute than gpt4. So gpt4=>O1+gpt5 could be expected to be similar to gpt3=>gpt4
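The "half" estimate can be made explicit with a toy accounting, sketched below. It assumes that gains track the sum of training-compute and test-compute OOMs with equal weight, which is one reading of the argument rather than an established result, and it takes the upper end (2 OOM) of the test-compute guess. The function name is hypothetical.

```python
def total_oom(train_oom: float, test_oom: float) -> float:
    """Combined scaling in orders of magnitude.

    Assumption: training and test compute add up in log space with equal weight.
    """
    return train_oom + test_oom


# Rough figures from the comment (test-compute values are guesses).
gpt3_to_gpt4 = total_oom(train_oom=2.0, test_oom=2.0)
gpt4_to_gpt5_same_test = total_oom(train_oom=2.0, test_oom=0.0)
gpt4_to_o1_plus_gpt5 = total_oom(train_oom=2.0, test_oom=2.0)

print(gpt4_to_gpt5_same_test / gpt3_to_gpt4)
print(gpt4_to_o1_plus_gpt5 / gpt3_to_gpt4)
```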

Speculations on (near) Out-Of-Distribution (OOD) regimes

- [Absence of extractable information] The model can no longer extract any relevant information. Models may behave more and more similarly to their baseline behavior in this regime. Models may learn the heuristic to ignore uninformative data, and this heuristic may generalize pretty far. Publication supporting this regime: Deep Neural Networks Tend To Extrapolate Predictably

- [Extreme information] The model can still extract information, but the features extracted are becoming extreme in v...

> If it takes a human 1 month to solve a difficult problem, it seems unlikely that a less capable human who can't solve it within 20 years of effort can still succeed in 40 years

Since the scaling is logarithmic, your example seems to be a strawman.

The real claim debated is more something like:

"If it takes a human 1 month to solve a difficult problem, it seems unlikely that a less capable human who can't solve it within 100 months of effort can still succeed in 10 000 months" And this formulation doesn't seem obviously true.

Ten months later, which papers would you recommend for SOTA explanations of how generalisation works?

From my quick research:

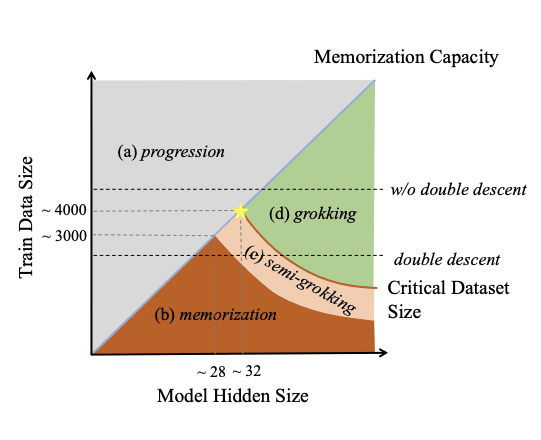

- "Explaining grokking through circuit efficiency" seems great at explaining and describing grokking

- "Unified View of Grokking, Double Descent and Emergent Abilities: A Comprehensive Study on Algorithm Task" proposes a plausible unified view of grokking and double descent (and a guess at a link with emergent capabilities and multi-task training). I especially like their summary plot:

For information to the readers and author: I am (independently) working on a project about narrowing down the moral values of alien civilizations on the verge of creating an ASI and becoming space-faring. The goal is to inform the prioritization of longtermist interventions.

I will gladly build on your content, which aggregates and beautifully expands several key mechanisms (individual selection ("Darwinian demon"), kin selection/multilevel selection ("Darwinian angel"), filters ("Fragility of Life Hypothesis")) that I use among others (e.g. sequential races, cultural evolution, accelerating growth stages, etc.).

Thanks for the post!

If the following correlations are true, then the opposite may be true (slave morality being better for improving the world through history):

- Improving the world being strongly correlated with economic growth (this is probably less true when X-risks are significant)

- Economic growth being strongly correlated with Entrepreneurship incentives (property rights, autonomy, fairness, meritocracy, low rents)

- Master morality being strongly correlated with acquiring power and thus decreasing the power of others and decreasing their entrepreneurship incentives

Right 👍

So the effects are:

Effects that should increase Anthropic's salaries relative to OpenAI:

- (A) The pool of AI safety focused candidates is smaller

- (B) AI safety focused candidates are more motivated

Effects that should decrease Anthropic's salaries relative to OpenAI:

- (C) AI safety focused candidates should be willing to accept significantly lower wages

New notes: (B) and (C) could cancel each other but that would be a bit suspicious. Still a partial cancellation would make a difference between OpenAI and Anthropic lower and harder to properly obser...

Rambling about Forecasting the order in which functions are learned by NN

Idea:

Using function complexity and their "compoundness" (edit 11 september: these functions seem to be called "composite functions"), we may be able to forecast the order in which algorithms in NN are learned. And we may be able to forecast the temporal ordering of when some functions or behaviours will start generalising strongly.

Rambling:

What happens when training neural networks is similar to the selection of genes in genomes or any reinforcement optimization processes. Compo...

Will we get to GPT-5 and GPT-6 soon?

This is a straightforward "follow the trend" model which tries to forecast when GPT-N-equivalent models will be first trained and deployed up to 2030.

Baseline forecast:

| | GPT-4.7 | GPT-5.3 | GPT-5.8 | GPT-6.3 |
| --- | --- | --- | --- | --- |
| Start of training | 2024.4 | 2025.5 | 2026.5 | 2028.5 |
| Deployment | 2025.2 | 2026.8 | 2028 | 2030.2 |

Bullish forecast:

| | GPT-5 | GPT-5.5 | GPT-6 | GPT-6.5 |
| --- | --- | --- | --- | --- |
| Start of training | 2024.4 | 2025 | 2026.5 | 2028.5 |
| Deployment | 2025.2 | 2026.5 | 2028 | 2030 |

FWIW, it predicts roughly similar growth in model size, energy cost and GPU count to that described in https://sit...

Could Anthropic face an OpenAI drama 2.0?

I forecast that Anthropic would likely face a backlash from its employees similar to the one OpenAI faced if Anthropic’s executives were to knowingly decrease the value of Anthropic shares significantly, e.g. if they were to switch from “scaling as fast as possible” to “safety-constrained scaling”. In that case, I would not find it surprising if a significant fraction of Anthropic’s staff threatened to leave or actually left the company.

The reasoning is simple, given that we don’t observe significant differences in the wages of ...

What is the difference between Evaluation, Characterization, Experiments, and Observations?

The words evaluations, experiments, characterizations, and observations are somewhat confused or confusingly used in discussions about model evaluations (e.g., ref, ref).

Let’s define them more clearly:

- Observations provide information about an object (including systems).

- This information can be informative (allowing the observer to update its beliefs significantly), or not.

- Characterizations describe distinctive features of an object (inc

Interestingly, after a certain layer, the first principal component becomes identical to the mean difference between harmful and harmless activations.

Do you think this can be interpreted as the model having its focus entirely on "refusing to answer" from layer 15 onwards? And if it can be interpreted as the model not evaluating other potential moves/choices coherently over these layers. The idea is that it could be evaluating other moves in a single layer (after layer 15) but not over several layers since the residual stream is not updated significan...
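For readers who want to reproduce the kind of comparison quoted above, here is a minimal sketch on synthetic data. It does not use real model activations: the two clusters, their dimensionality and their separation are all made-up assumptions, chosen so that one direction dominates the variance.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n = 16, 500

# Hypothetical "refusal" direction separating the two synthetic clusters.
direction = rng.normal(size=dim)
direction /= np.linalg.norm(direction)

harmless = rng.normal(size=(n, dim))
harmful = rng.normal(size=(n, dim)) + 8.0 * direction

# Mean difference between the two groups of "activations".
mean_diff = harmful.mean(axis=0) - harmless.mean(axis=0)

# First principal component of the pooled, centered data (via SVD).
pooled = np.vstack([harmless, harmful])
centered = pooled - pooled.mean(axis=0)
_, _, vt = np.linalg.svd(centered, full_matrices=False)
first_pc = vt[0]

# Absolute cosine similarity (the sign of a principal component is arbitrary).
cosine = abs(first_pc @ mean_diff) / (np.linalg.norm(first_pc) * np.linalg.norm(mean_diff))
print(cosine)
```

When the between-group separation dominates the within-group variance, as it does in this toy setup, the first principal component and the mean difference point in nearly the same direction.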

Thanks for the great comment!

Do we know if distributed training is expected to scale well to GPT-6 size models (100 trillion parameters) trained over like 20 data centers? How does the communication cost scale with the size of the model and the number of data centers? Linearly on both?

After reading this for 3 minutes:

Google Cloud demonstrates the world’s largest distributed training job for large language models across 50000+ TPU v5e chips (Google November 2023). It seems that scaling is working efficiently at least up to 50k GPUs (GPT-6 would be like 2.5...

The title is clearly an overstatement. It expresses more that I updated in that direction, than that I am confident in it.

Also, since learning from other comments that decentralized learning is likely solved, I am now even less confident in the claim, like only 15% chance that it will happen in the strong form stated in the post.

Maybe I should edit the post to make it even more clear that the claim is retracted.

FYI, the "Evaluating Alignment Evaluations" project of the current AI Safety Camp is working on studying and characterizing alignment (propensity) evaluations. We hope to contribute to the science of evals, and we will contact you next month. (Somewhat deprecated project proposal)

I wonder if the result is dependent on the type of OOD.

If you are OOD by having less extractable information, then the results are intuitive.

If you are OOD by having extreme extractable information or misleading information, then the results are unexpected.

Oh, I just read their Appendix A: "Instances Where “Reversion to the OCS” Does Not Hold"

Outputting the average prediction is indeed not the only behavior OOD. It seems that there are different types of OOD regimes.

ChatGPT was not GPT-4. It was a relatively minor fixup of GPT-3, GPT-3.5, with an improved RLHF variant, that they released while working on GPT-4's evaluations & productizing, which was supposed to be the big commercial success.

In fact, the costs to inference ChatGPT exceed the training costs on a weekly basis

That seems quite wild, if the training cost was 50M$, then the inference cost for a year would be 2.5B$.

The inference cost dominating the cost seems to depend on how you split the cost of building the supercomputer (buying the GPUs).

If you include the cost of building the supercomputer into the training cost, then the inference cost (without the cost of building the computer) looks cheap. If you split the building cost between training and inference in proportion to the "use time", then the inference cost would dominate.

Are these 2 bullet points faithful to your conclusion?

- GPT-4 training run (renting the compute for the final run): 100M$, of which 1/3 to 2/3 is the cost of the staff

- GPT-4 training run + building the supercomputer: 600M$, of which ~20% for cost of the staff

And some hot takes (mine):

- Because supercomputers become "obsolete" quickly (~3 years), you need to run inferences to pay for building your supercomputer (you need profitable commercial applications), or your training cost must also account for the full cost of the supercomputer, and this produces a ~x6 in

1) In the web interface, the parameter "Hardware adoption delay" is:

Meaning: Years between a chip design and its commercial release.

Best guess value: 1

Justification for best guess value: Discussed here. The conservative value of 2.5 years corresponds to an estimate of the time needed to make a new fab. The aggressive value (no delay) corresponds to fabless improvements in chip design that can be printed with existing production lines with ~no delay.

Is there another parameter for the delay (after the commercial release) to produce the hundreds of thousands ...

Maybe the use of prompt suffixes can do a great deal to decrease the probability of chatbots turning into Waluigi. See the "insert" functionality of OpenAI API https://openai.com/blog/gpt-3-edit-insert

Chatbot developers could use suffix prompts in addition to prefix prompts to make it less likely to fall into a Waluigi completion.

Indeed, empirical results show that filtering the data helps quite well in aligning with some preferences: Pretraining Language Models with Human Preferences

What about the impact of dropout (parameters, layers), normalisation (batch, layer) (with a batch containing several episodes), asynchronous distributed data collection (making batch aggregation more stochastic), weight decay (impacting any weight), multi-agent RL training with independent agents, etc.

And other possible things that don't exist at the moment: online pruning and growth while training, population training where the gradient hackers are exploited.

Shouldn't that naively make gradient hacking very hard?

We see a lot of people die, in reality, fiction and dreams.

We also see a lot of people having sex or sexual desire in fiction or dreams before experiencing it.

IDK how strong a counterargument this is to how powerful the alignment in us is. Maybe a biological reward system + imitation + fiction and later dreams is simply what is at play in humans.

Some quick thoughts about "Content we aren’t (yet) discussing":

Shard theory should be about transmission of values by SL (teaching, cloning, inheritance) more than learning them using RL

SL (Cloning) is more important than RL. Humans learn a world model by SSL, then they bootstrap their policies through behavioural cloning and finally they finetune their policies through RL.

Why? Because of theoretical reasons and from experimental data points, this is the cheapest way to generate good general policies…

- SSL before SL because you get much more frequent a

If you look at the logit given a range that is not [0.0, 1.0] but [low perf, high perf], then you get a bit more predictive power, but it is still confusingly low.

A possible intuition here is that the scaling is producing a transition from non-zero performance to non-perfect performance. This seems right since the random baseline is not 0.0 and reaching perfect accuracy is impossible.

I tried this only with PaLM on NLU and I used the same adjusted range for all tasks:

[0.9 * overall min. acc., 1.0 - 0.9 * (1.0 - overall max acc.)] ~ [0.13, 0.95]

Even if...
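The adjusted-range logit described above can be sketched as follows. The function names are hypothetical, and the example minimum and maximum accuracies are back-derived from the reported range of about [0.13, 0.95]; they are not the actual PaLM numbers.

```python
import math


def adjusted_range(overall_min_acc: float, overall_max_acc: float, shrink: float = 0.9):
    """Range [low perf, high perf] from the formula in the comment."""
    low = shrink * overall_min_acc
    high = 1.0 - shrink * (1.0 - overall_max_acc)
    return low, high


def rescaled_logit(acc: float, low: float, high: float) -> float:
    """Logit of accuracy after mapping [low, high] onto [0, 1]."""
    p = (acc - low) / (high - low)
    return math.log(p / (1.0 - p))


# Illustrative values only, chosen to land near the reported range.
low, high = adjusted_range(overall_min_acc=0.1444, overall_max_acc=0.9444)
print(low, high)
print(rescaled_logit(0.5, low, high))
```

Accuracies at or outside the range bounds have no finite logit, which is why the range is widened slightly beyond the observed minimum and maximum.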

Most of those tokens were spent on the RL tasks, which were 85% of the corpus. Looking at Table 1a/1b, the pure text modeling tasks look like they were 10% weight with the other 5% being the image caption datasets*; so if it did 5 x 1e11 tokens total (Figure 9), then presumably it only saw a tenth of that as actual pure text comparable to GPT-2, or 50b tokens. (It's also a small model so it is less sample-efficient and will get less than n billion tokens' worth if you are mentally working back from "well, GPT-3 used x billion tokens".)

Considerin...

"The training algorithm has found a better representation"?? That seems strange to me since the loss should be lower in that case, not spiking. Or maybe you mean that the training broke free of a kind of local minimum (without implying that it has found a better one yet). Also I guess people training the models observed that waiting after these spikes doesn't lead to better performance, or they would not have removed them from the training.

Around this idea, and after looking at the "grokking" paper, I would guess that it's more likely caused by the weig...

I am curious to hear/read more about the issue of spikes and instabilities in training large language models (see the quote / page 11 of the paper). If someone knows a good reference about that, I am interested!

...5.1 Training Instability

For the largest model, we observed spikes in the loss roughly 20 times during training, despite the fact that gradient clipping was enabled. These spikes occurred at highly irregular intervals, sometimes happening late into training, and were not observed when training the smaller models. Due to the cost of training the larges

Here with 2 conv and less than 100k parameters the accuracy is ~92%. https://github.com/zalandoresearch/fashion-mnist

SOTA on Fashion-MNIST is >96%. https://paperswithcode.com/sota/image-classification-on-fashion-mnist

Maybe another weak solution close to "Take bigger steps": Use decentralized training.

Meaning: perform several training steps (gradient updates) in parallel on several replicates of the model and periodically synchronize the weights (like average them).

Each replicate only has access to its own inputs and local weights and thus it seems plausible that the gradient hacker can't as easily cancel gradients going against its mesa-objective.
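The synchronization scheme described above can be sketched on a toy problem. This is only an illustration of local updates with periodic weight averaging on a one-parameter linear regression; it does not model a gradient hacker, and the data, hyperparameters and function name are all made up.

```python
import random


def local_sgd(num_replicas=4, rounds=40, local_steps=5, lr=0.3, seed=0):
    """Train replicas independently, averaging their weights after each round."""
    rng = random.Random(seed)
    true_w = 3.0

    # Each replica holds its own private data shard: y = true_w * x + noise.
    shards = []
    for _ in range(num_replicas):
        shard = []
        for _ in range(32):
            x = rng.uniform(-1.0, 1.0)
            shard.append((x, true_w * x + rng.gauss(0.0, 0.1)))
        shards.append(shard)

    weights = [0.0] * num_replicas
    for _ in range(rounds):
        # Local phase: each replica only sees its own shard and its own weight.
        for r in range(num_replicas):
            for _ in range(local_steps):
                x, y = rng.choice(shards[r])
                grad = 2.0 * (weights[r] * x - y) * x  # d/dw of (w*x - y)^2
                weights[r] -= lr * grad
        # Synchronization phase: replace every replica's weight by the average.
        average = sum(weights) / num_replicas
        weights = [average] * num_replicas
    return weights[0]


learned_w = local_sgd()
print(learned_w)
```

Between synchronizations no replica can observe the gradients computed by the others, which is the property the comment relies on.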

One particularly interesting recent work in this domain was Leike et al.'s “Learning human objectives by evaluating hypothetical behaviours,” which used human feedback on hypothetical trajectories to learn how to avoid environmental traps. In the context of the capability exploration/objective exploration dichotomy, I think a lot of this work can be viewed as putting a damper on instrumental capability exploration.

Isn't this work also linked to objective exploration? One of the four "hypothetical behaviours" used is the selection of trajectories which maxi...

The implications are stronger in that case, right.

The post is about implications for impartial longtermists. So under moral realism it means something like finding the best values to pursue, and under moral anti-realism it means that an impartial utility function is kind of symmetrical with aliens. For example, if you value something only because humans value it, then an impartial version is to also value things that aliens value only because their species values them.

Though because of reasons introduced in The Convergent Path to the Stars, I think ...