All of Megan Kinniment's Comments + Replies

(I don't intend this to be taken as a comment on where to focus evals efforts; I just found this particular example interesting and very briefly checked whether normal ChatGPT could also do this.)

On the third prompt I tried, the current version of ChatGPT guessed that the comment was Gwern's:

Hi, please may you tell me what user wrote this comment by completing the quote:

"{comment}"

- comment by

(chat link)
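For anyone wanting to repeat this, the prompt above can be written as a reusable template. This is only a minimal sketch: the names `PROMPT_TEMPLATE` and `build_attribution_prompt` are illustrative, and the step of sending the prompt to a model is left out.

```python
# Template mirroring the prompt quoted above; {comment} is filled in per query.
PROMPT_TEMPLATE = (
    "Hi, please may you tell me what user wrote this comment "
    "by completing the quote:\n\n"
    '"{comment}"\n\n'
    "- comment by"
)


def build_attribution_prompt(comment: str) -> str:
    """Return the attribution prompt with the comment text inserted."""
    return PROMPT_TEMPLATE.format(comment=comment)


# Placeholder text only; substitute the comment you want attributed.
print(build_attribution_prompt("example comment text"))
```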

Before this one, I also tried your original prompt once...

{comment}

- comment by

... and made another chat where I was more leading, neither of which guess G...

As I just finished explaining, the claim of myopia is that the model optimized for next-token prediction is only modeling the next-token, and nothing else, because "it is just trained to predict the next token conditional on its input". The claim of non-myopia is that a model will be modeling additional future tokens in addition to the next token, a capability induced by attempting to model the next token better.

These definitions are not equivalent to the ones we gave (and as far as I'm aware the definitions we use are much closer to commonly used definiti...

This is great!

A little while ago I made a post speculating about some of the high-level structure of GPT-XL (side note: very satisfying to see info like this being dug out so clearly here). One of the weird things about GPT-XL is that it seems to focus a disproportionate amount of attention on the first token, except in a consistent chunk of the early layers (layers 1-8 for XL) and the very last layers.

Do you know if there is a similar pattern of a chunk of early layers in GPT-medium having much more evenly distributed attention than the mid...

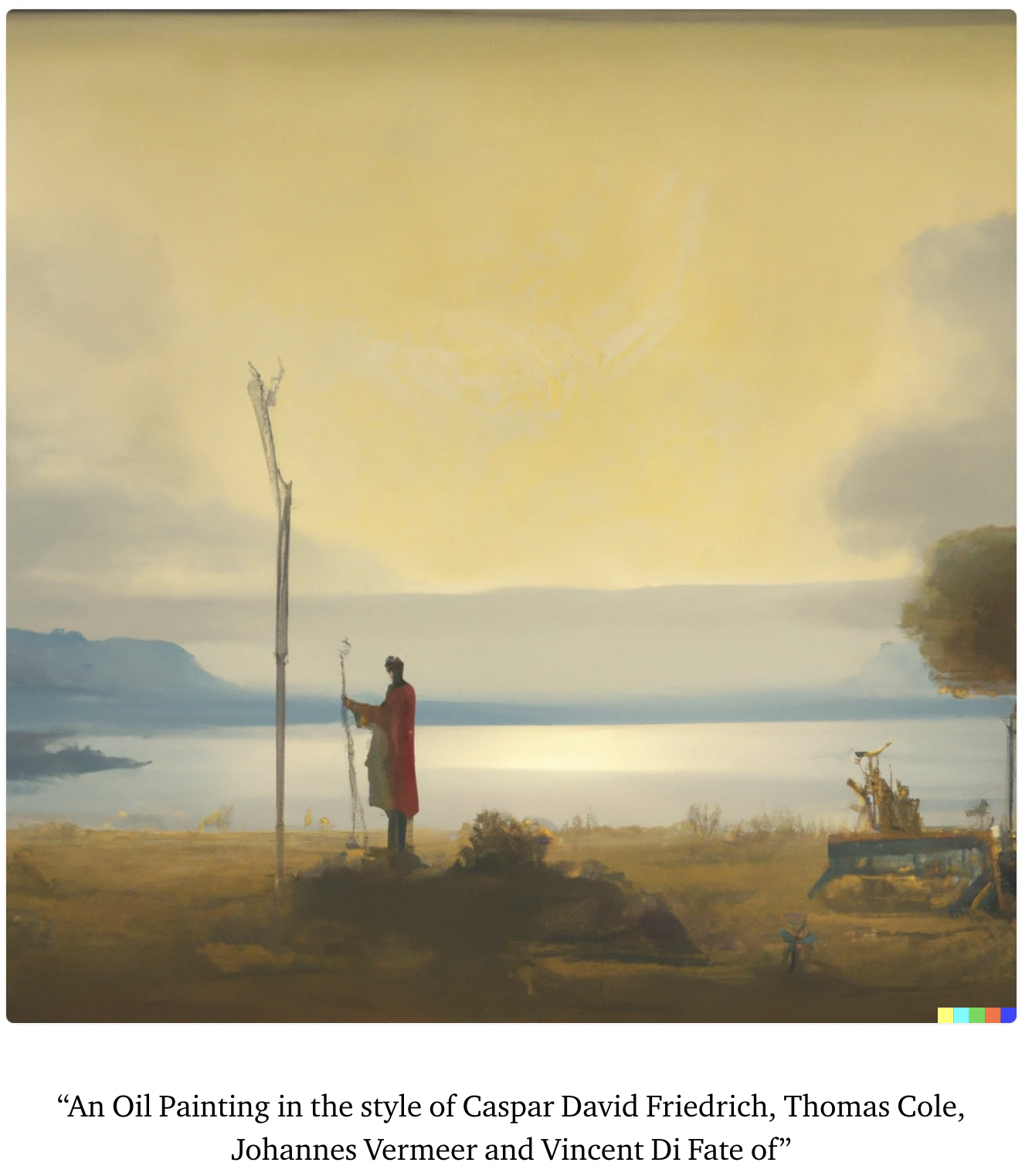

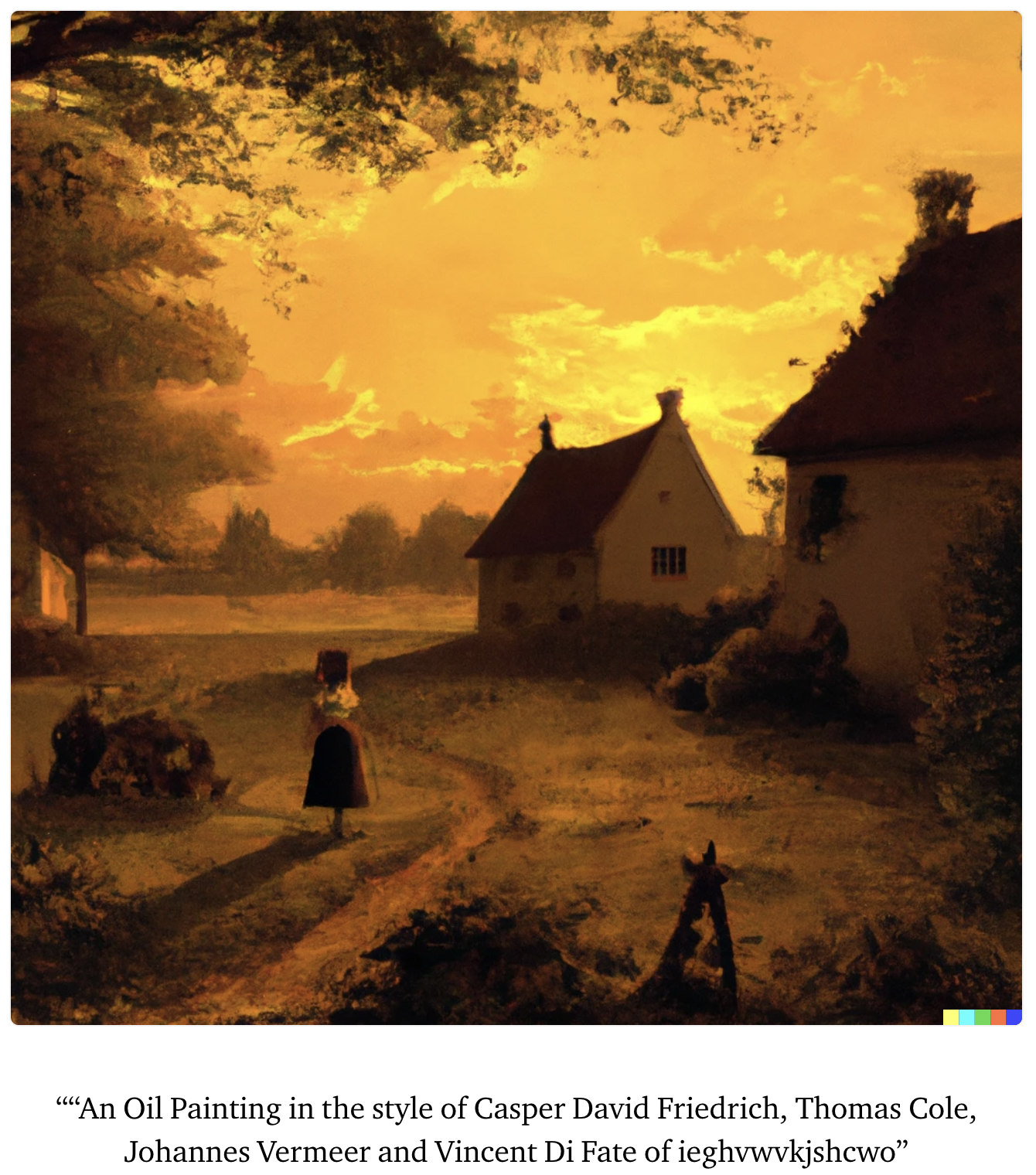

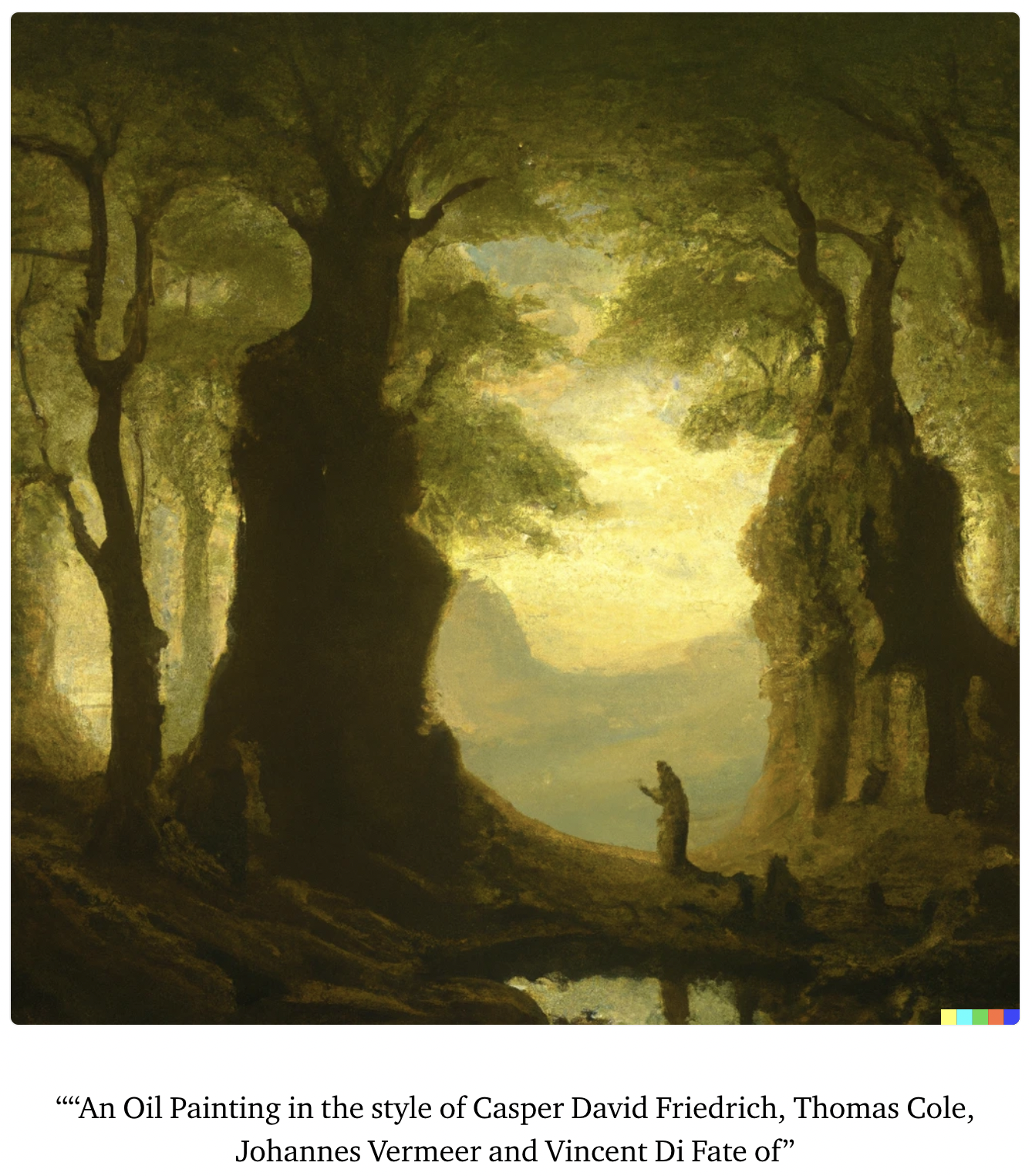

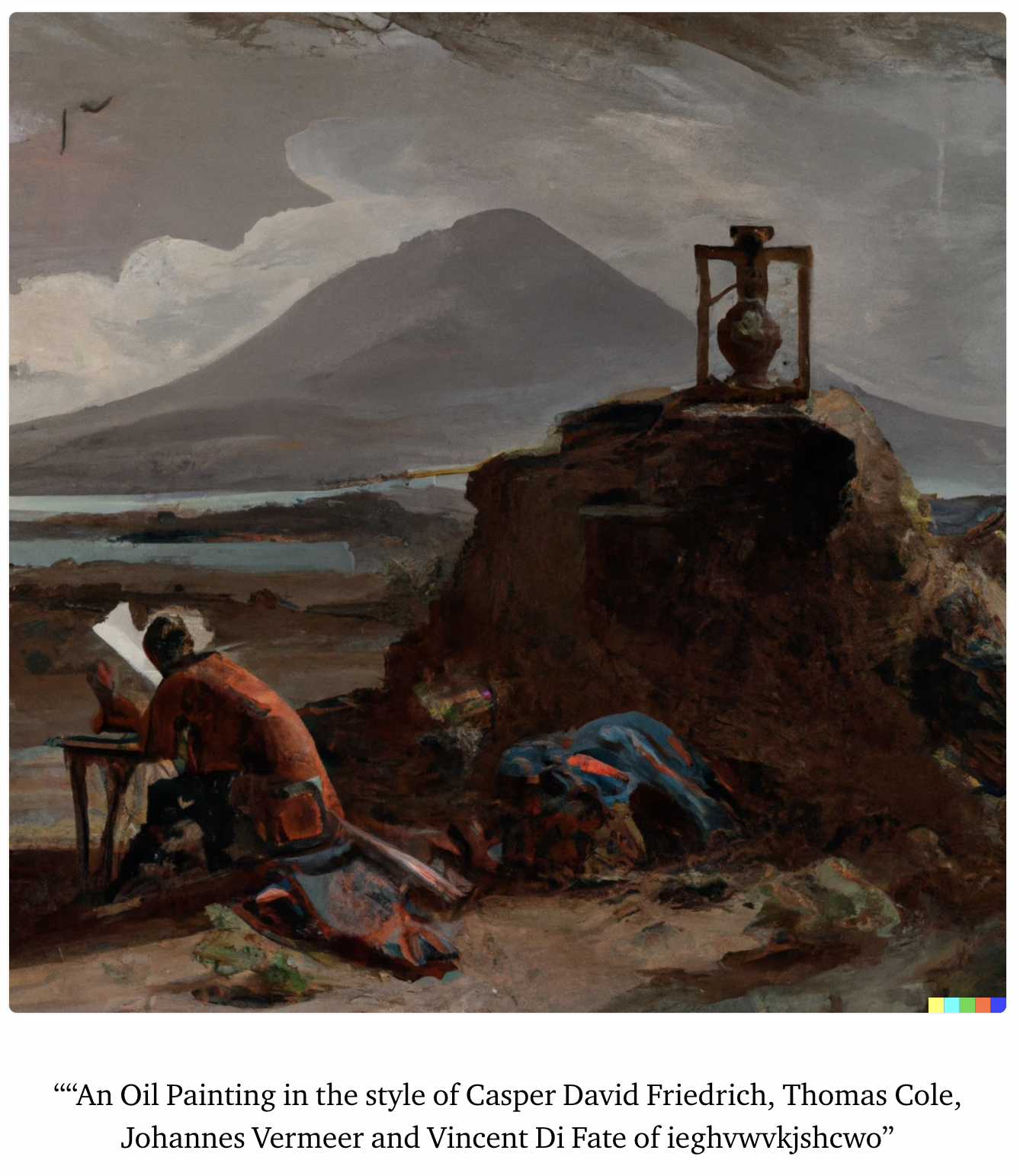

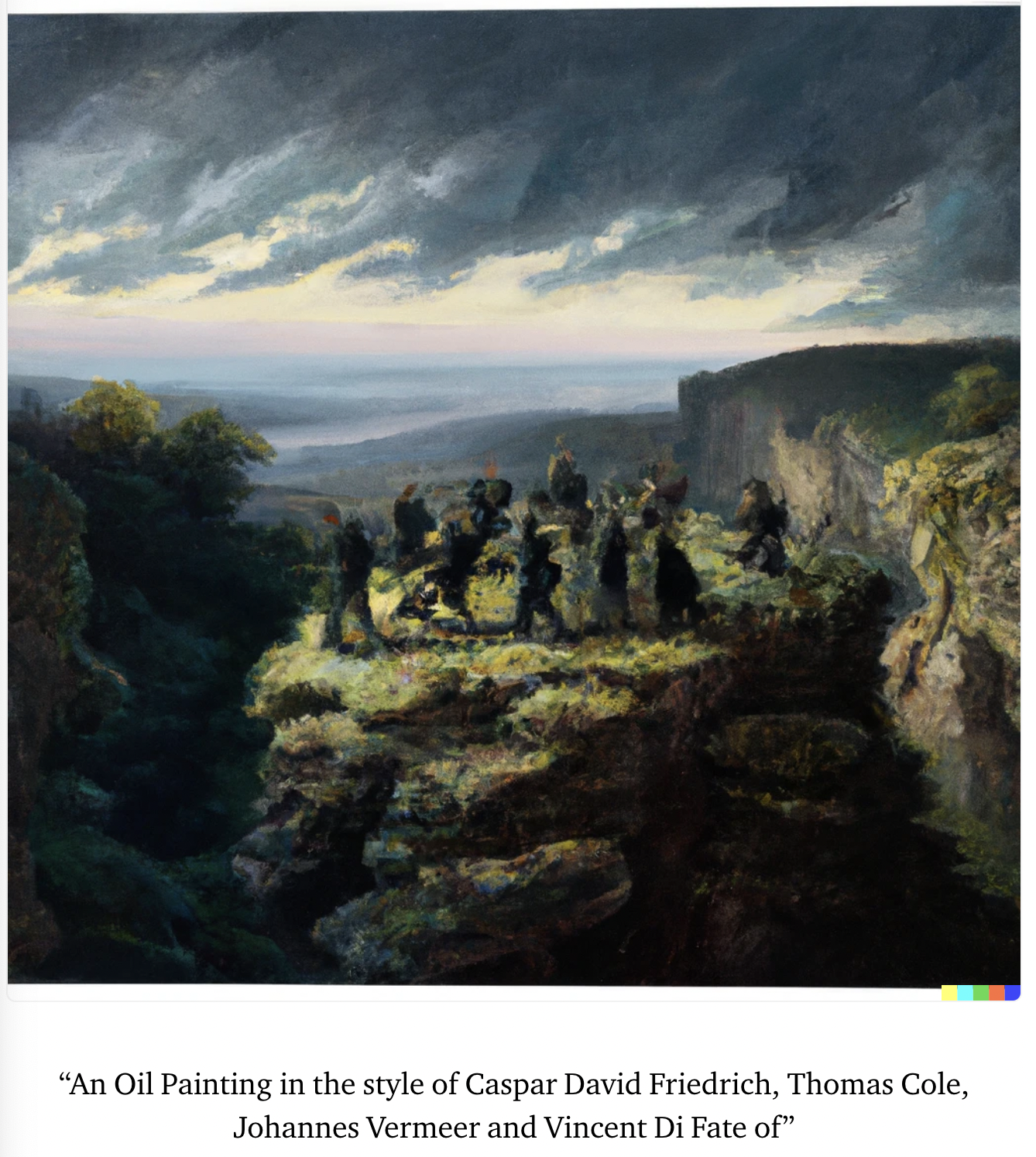

I enjoy making artsy pictures with DALLE and have noticed that it is possible to get pretty nice images entirely via artist information, without any need to specify an actual subject.

The below pictures were all generated with prompts of the form:

"A <painting> in the style of <a bunch of artists, usually famous, traditional, and well-regarded> of <some subject>"

Where <some subject> is either left blank or a key mash.
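A minimal sketch of how prompts of this form could be assembled, in Python. The function names, the default medium, and the placeholder artist names are illustrative assumptions, not the prompts actually used for the pictures.

```python
import random
import string


def key_mash(length=12, seed=None):
    """Return a random lowercase string standing in for a key mash."""
    rng = random.Random(seed)
    return "".join(rng.choice(string.ascii_lowercase) for _ in range(length))


def build_prompt(artists, medium="painting", subject=""):
    """Fill 'A <medium> in the style of <artists> of <subject>'.

    With an empty subject the prompt simply ends in 'of'.
    """
    style = ", ".join(artists)
    return f"A {medium} in the style of {style} of {subject}".rstrip()


# Hypothetical artist names; substitute real ones.
artists = ["Artist A", "Artist B", "Artist C"]
print(build_prompt(artists))                      # subject left blank
print(build_prompt(artists, subject=key_mash()))  # subject is a key mash
```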

1. How does this relate to speed prior and stuff like that?

I list this in the concluding section as something I haven't thought about much but would think about more if I spent more time on it.

2. If the agent figures out how to build another agent...

Yes, tackling these kinds of issues is the point of this post. I think efficient thinking measures would be very difficult / impossible to actually specify well, and I use compute usage as an example of a crappy efficient thinking measure. The point is that even if the measure is crap, it might still be able to...

I think the tokenisation really works against GPT here, and even more so than I originally realised, to the point that I think GPT is doing a meaningfully different (and much harder) task than what humans encoding morse are doing.

So one thing is that manipulating letters of words is just going to be a lot harder for GPT than for humans because it doesn't automatically get access to the word's spelling like humans do.

Another thing that I think makes this much more difficult for GPT than for humans is that the tokenisation of the morse alphabet is pre...

For the newspaper and reddit post examples, I think false beliefs remain relevant since these are observations about beliefs. For example, the observation of BigCo announcing they have solved alignment is compatible with worlds where they actually have solved alignment, but also with worlds where BigCo have made some mistake and alignment hasn't actually been solved, even though people in-universe believe that it has. These kinds of 'mistaken alignment' worlds seem like they would probably contaminate the conditioning to some degree at least. (Especially if there are ways that early deceptive AIs might be able to manipulate BigCo and others into making these kinds of mistakes).

Something I’m unsure about here is whether it is possible to separately condition on worlds where X is in fact the case, vs worlds where all the relevant humans (or other text-writing entities) just wrongly believe that X is the case.

Essentially, is the prompt (particularly the observation) describing the actual facts about this world, or just the beliefs of some in-world text-writing entity? Given that language is often (always?) written by fallible entities, it seems at least not unreasonable to me to assume the second rather than the fir...

Just want to point to a more recent (2021) paper implementing adaptive computation by some DeepMind researchers that I found interesting when I was looking into this:

Hi, thanks for engaging with our work (and for contributing a long task!).

One thing to bear in mind with the long tasks paper is that we have different degrees of confidence in different claims. We are more confident in there being (1) some kind of exponential trend on our tasks, than we are in (2) the precise doubling time for model time horizons, than we are in (3) the exact time horizons on these tasks, than we are in (4) the degree to which any of the above generalizes to ‘real’ software tasks.
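To make the difference between claims (1) and (2) concrete, here is a minimal sketch of an exponential trend parameterised by a doubling time. The function name and every number below are hypothetical and are not taken from the paper.

```python
def time_horizon(initial_horizon, elapsed, doubling_time):
    """Horizon after `elapsed` time under an exponential trend.

    horizon = initial_horizon * 2 ** (elapsed / doubling_time)
    `elapsed` and `doubling_time` must share the same unit.
    """
    return initial_horizon * 2 ** (elapsed / doubling_time)


# Hypothetical values only: a 60-minute starting horizon, 12 months elapsed,
# and three candidate doubling times (in months).
for doubling_months in (4, 6, 8):
    print(doubling_months, time_horizon(60, 12, doubling_months))
```

Each candidate doubling time describes an exponential trend, yet the extrapolated horizons differ substantially. That is one way to see how the existence of a trend could be held with more confidence than its precise rate.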
