All of Mlxa's Comments + Replies

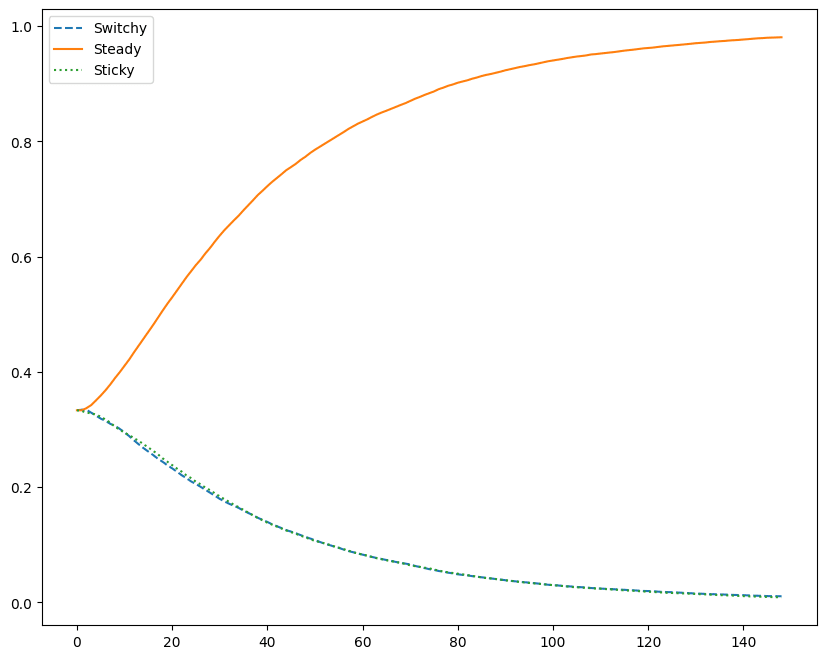

I agree with @James Camacho, at least intuitively, that stickiness in the space of observations is equivalent to switchiness in the space of their prefix XORs and vice versa. Also, I tried to replicate, and didn't observe the mentioned effect, so maybe one of us has a bug in the simulation.

I agree that if a DT is trained on trajectories which sometimes contain unoptimal actions, but the effect size of this mistake is small compared to the inherent randomness of the return, the learned policy will also take such unoptimal action, though with a bit less frequency. (By inherent randomness I mean, in this case, the fact that Player 2 pushes the button randomly and independently of the actions of Player 1)

But where do these trajectories come from? If you take them from another RL algorithm, then the optimization is already there. And if you start...

That example with traders was to show that in the limit these non EU-maximizers actually become EU-maximizers, now with linear utility instead of logaritmic. And in other sections I tried to demonstrate that they are not EU-maximizers for a finite number of agents.

First, in the expression for their utility based on the outcome distribution, you integrate something of the form, a quadratic form, instead of as you do to compute expected utility. By itself it doesn't prove that there is no utility function, because the...

I'm curious about your thoughts on lifting vs endurance training. I thought in terms of general health optimization a combination of them would be better then just lifting