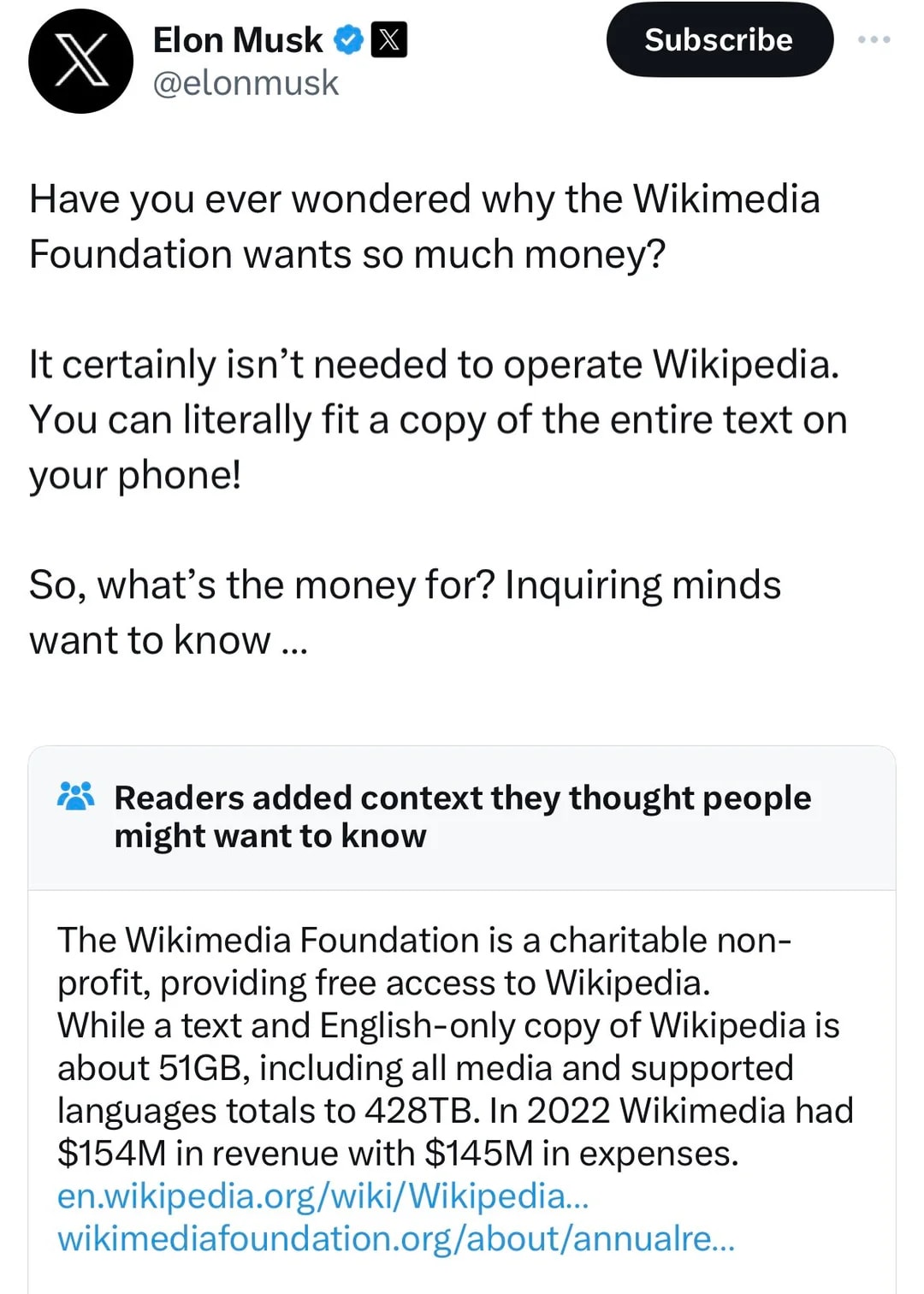

Cyborgism

Thanks to Garrett Baker, David Udell, Alex Gray, Paul Colognese, Akash Wasil, Jacques Thibodeau, Michael Ivanitskiy, Zach Stein-Perlman, and Anish Upadhayay for feedback on drafts, as well as Scott Viteri for our valuable conversations. Various people at Conjecture helped develop the ideas behind this post, especially Connor Leahy and Daniel Clothiaux. Connor coined the term "cyborgism".

(picture thanks to Julia Persson and Dall-E 2)

Executive summary: This post proposes a strategy for safely accelerating alignment research. The plan is to set up human-in-the-loop systems which empower human agency rather than outsource it, and to use those systems to differentially accelerate progress on alignment.

1. Introduction: An explanation of the context and motivation for this agenda.
2. Automated Research Assistants: A discussion of why the paradigm of training AI systems to behave as autonomous agents is both counterproductive and dangerous.
3. Becoming a Cyborg: A proposal for an alternative approach/frame, which focuses on a particular type of human-in-the-loop system I am calling a "cyborg".
4. Failure Modes: An analysis of how this agenda could either fail to help or actively cause harm by accelerating AI research more broadly.
5. Testimony of a Cyborg: A personal account of how Janus uses GPT as a part of their workflow, and how it relates to the cyborgism approach to intelligence augmentation.

Terminology

* GPT: Large language models trained on next-token prediction. Most plans to accelerate research (including this one) revolve around leveraging GPTs specifically. I will mostly be using "GPT" to gesture at the base models which have not been augmented using reinforcement learning.[1]
* Autonomous Agent: An AI system which can be well modeled as having goals or preferences, and deliberately selects actions in order to achieve them (with limited human assistance).
* Capabilities research: Research which directly improves the capabilities of AI systems