All of O O's Comments + Replies

Not just central banks, but also the U.S. going off the gold standard and then fiddling with bond yields to cover up the ensuing inflation, maybe?

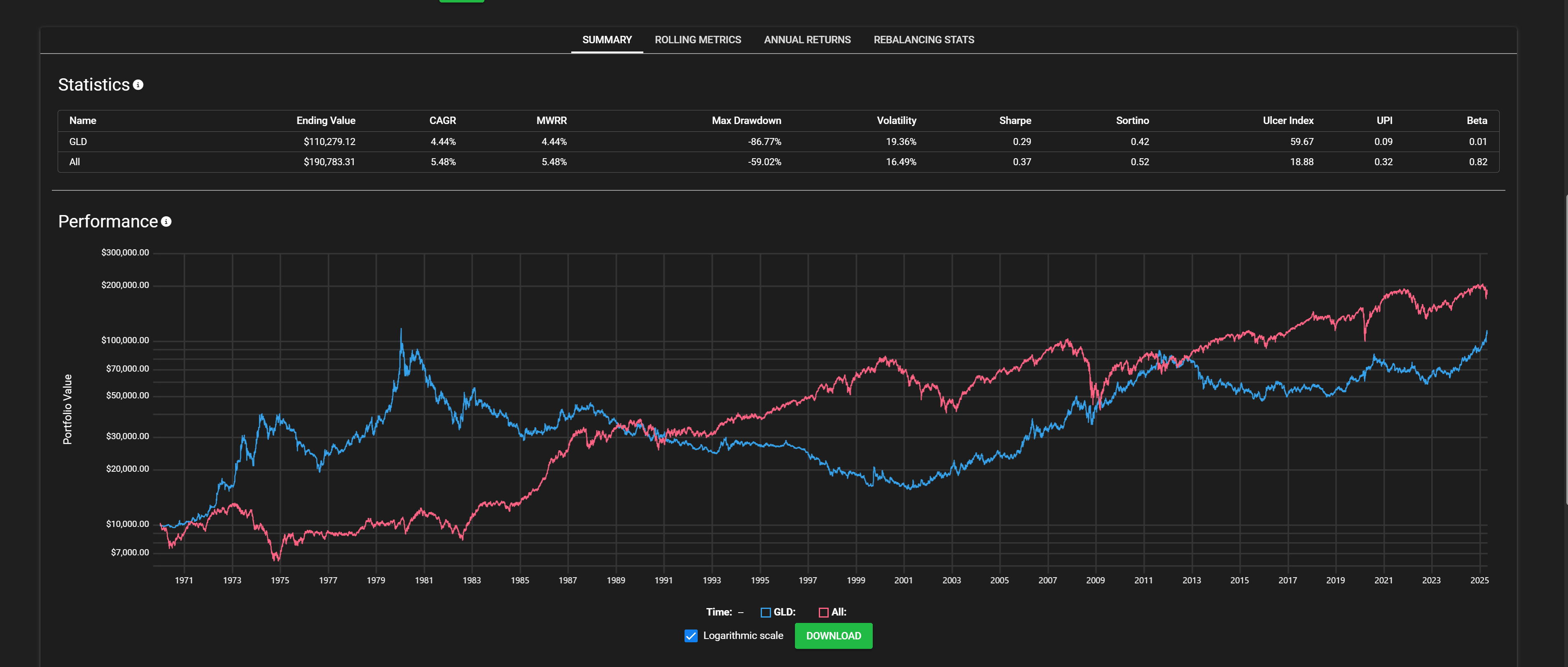

It's quite strange that owning all the world's (public) productive assets has only beaten gold, a largely useless shiny metal, by 1% per year over the last 56 years.
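To put the 1% figure in cumulative terms, here is a rough sketch of the compounding arithmetic. It assumes the gap is a constant 1% geometric excess return each year, which is a simplification; the function name and the constant-gap assumption are mine, not taken from the chart.

```python
def cumulative_outperformance(annual_excess: float, years: int) -> float:
    """Growth factor of one asset relative to another, assuming a
    constant annual excess return (a simplifying assumption)."""
    return (1.0 + annual_excess) ** years


# 1% per year compounded over 56 years
factor = cumulative_outperformance(0.01, 56)
print(f"Relative growth factor: {factor:.2f}x")
```

Under that assumption, world equities end up at roughly 1.75 times the gold position after 56 years, which is a small premium for holding productive assets that long.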

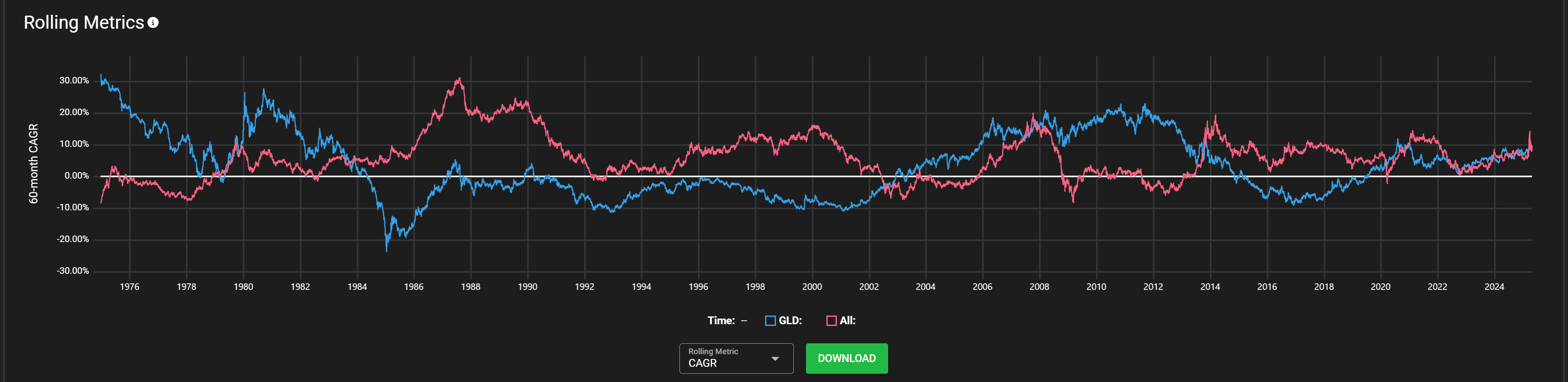

Even if you focus on rolling metrics (this is 5-year rolling returns):

there are lots of long stretches of gold beating world equities, especially in recent times. There are people with the suspicion (myself included) that there hasn't been much material growth in the world over the last 40 or so years compared to before. And that since growth is slowing down, this issue is worse if y...
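For anyone who wants to reproduce a rolling-return comparison like this, a minimal sketch follows. It assumes one price per year, oldest first; the function names are hypothetical and the two price series are synthetic placeholders, not real gold or equity data.

```python
def rolling_annualized_returns(prices, window_years=5):
    """Annualized return over each trailing window of `window_years`.

    `prices` holds one price per year, oldest first (an assumption).
    """
    returns = []
    for i in range(window_years, len(prices)):
        growth = prices[i] / prices[i - window_years]
        returns.append(growth ** (1.0 / window_years) - 1.0)
    return returns


def share_of_windows_ahead(a_prices, b_prices, window_years=5):
    """Fraction of rolling windows in which series A beat series B."""
    a = rolling_annualized_returns(a_prices, window_years)
    b = rolling_annualized_returns(b_prices, window_years)
    wins = sum(1 for x, y in zip(a, b) if x > y)
    return wins / len(a)


# Synthetic placeholder series: steady 10% and 5% annual growth.
series_a = [100 * 1.10 ** k for k in range(8)]
series_b = [100 * 1.05 ** k for k in range(8)]

rolling_a = rolling_annualized_returns(series_a)
share = share_of_windows_ahead(series_a, series_b)
```

Swapping real annual price series in for `series_a` and `series_b` would give the share of 5-year windows in which one asset beat the other.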

I just think they’ll be easy to fool. For example, historically many companies would get political favors (tariff exemptions) by making flashy fake headlines such as promising to spend trillions on factories.

China has no specific animal welfare laws. There are also some Chinese that regard animal welfare as a Western import. Maybe the claim that they have no concept at all is too strong, but it's certainly minimized by previous regimes.

ie

Mao regarded the love for pets and the sympathy for the downtrodden as bourgeois

And China's average meat consumption being lower could just be a reflection of their GDP per capita being lower. I don't know where you got the 14% vegetarian number. I can find 5% online, about the same as the US numbers.

I don't think the Trump admin has the capacity to meaningfully take over an AGI project. Whatever happens, I think the lab leadership will be calling the shots.

Looked up a poll from 2023. Though, maybe that poll is biased by people not voicing their true opinions?

Chinese culture is just less sympathetic in general. China practically has no concept of philanthropy or animal welfare. They are also pretty explicitly ethnonationalist. You don’t hear about these things because the Chinese government has banned dissent and walled off its inhabitants.

However, I think the Hong Kong reunification is going better than I'd expect given the 2019 protests. You'd expect mass social upheaval, but people are just either satisfied or moderately dissatisfied.

Claiming China has no concept of animal welfare is quite extraordinary. This is wrong both in theory and in practice. In theory, Buddhism has always ascribed sentience to animals, long before it was popular in the West. In practice, 14% of the Chinese population is vegetarian (vs. 4.2% in the US) and China's average meat consumption is also lower.

quantum computing, nuclear fusion

I recently interviewed with them, and one of them said they’re hiring a lot of SWEs as they shift to product. Also many of my friends are currently interviewing with them.

I mean some hard evidence is them currently hiring a lot of software engineers for random product-y things. If AGI was close, wouldn't they go all in on research and training?

https://www.windowscentral.com/software-apps/sam-altman-ai-will-make-coders-10x-more-productive-not-replace-them

It sounds like they’re getting pretty bearish on capabilities tho

Yes, the likely outcome of a long tariff regime is that China replaces the U.S. as the hegemon + AI race leader, and they can’t read LessWrong or EA blogs there, so all this work is useless.

These tariffs may get rid of the compute disadvantage China faces (ie Taiwan starts to ignore export controls). We might see China being comfortably ahead in a year or two assuming we don’t see Congress take drastic action to eliminate the president’s tariffing powers.

The costs of capex go way up. It costs a lot more to build datacenters. It will cost a lot more to buy GPUs. It might cost less to buy energy? Lenders will be in poorer shape. AI companies will lose funding. I think it's already quite tenuous, given how little moat AI companies have. Costs are exploding and pretraining scaling seems too diminishing to be worth it. It's also not clear how AI labs will solve the reliability issue (at least to investors).

I also expect Taiwan to start ignoring export controls if our obscenely high tariffs on them remain.

The point is the money or food just won’t get to them. How do you send food to a region in a civil war between 2 dictators?

A lot of them are trapped in corrupt systems that are very costly and have ethics concerns blocking change. We have the money to feed them, but it would take far more money to turn a bunch of African countries into stable democracies. Overthrowing dictatorships might also raise ethics concerns about colonialism.

The easiest solution would just be lots of immigration, but host populations reject that because of our evolutionary peculiarities.

Isn't it a distribution problem? World hunger has almost disappeared however. (The issue is hungrier nations have more kids, so progress is a bit hidden).

I've always wondered, why didn't superpowers apply MAIM to nuclear capabilities in the past?

> Speculative but increasingly plausible, confidentiality-preserving AI verifiers

Such as?

I mean, I don't want to give Big Labs any ideas, but I suspect the reasoning above implies that the o1/DeepSeek-style RL procedures might work a lot better if they can think internally for a long time

I expect gpt 5 to implement this. Based on recent research and how they phrase it.

I thought OpenAI’s deep research uses the full o3?

Why are current LLMs, reasoning models and whatever else still horribly unreliable? I can ask the current best models (o3, Claude, deep research, etc) to do a task to generate lots of code for me using a specific pattern or make a chart with company valuations and it’ll get them mostly wrong.

Is this just a result of labs hill climbing a bunch of impressive sounding benchmarks? I think this should delay timelines a bit. Unless there’s progress on reliability I just can’t perceive.

SWEs won't necessarily be fired even after becoming useless

I'm actually surprised at how eager/willing big tech is to fire SWEs once they're sure they won't be economically valuable. I think a lot of priors for them being stable come from the ZIRP era. Now, these companies have quite frequent layoffs, silent layoffs, and performance firings. Companies becoming leaner will be a good litmus test for a lot of these claims.

https://x.com/arankomatsuzaki/status/1889522974467957033?s=46&t=9y15MIfip4QAOskUiIhvgA

O3 gets IOI Gold. Either we are in a fast takeoff or the "gold" standard benchmarks are a lot less useful than imagined.

I feel like a lot of Manifold is virtue signaling.

Just curious. How do you square the rise in AI stocks taking so long? Many people here thought it was obvious since 2022 and made a ton of money.

Keep in mind the current administration is replacing incompetent bureaucracies with self assembling corporations. The organization is still there, just more competent and under a different name. A government project could just look like telling the labs to create 1 data center, throwing money at them, and cutting red tape for building gas plants.

Seems increasingly likely to me that there will be some kind of national AI project before AGI is achieved as the government is waking up to its potential pretty fast. Unsure what the odds are, but last year, I would have said <10%. Now I think it's between 30% and 60%.

Has anyone done a write up on what the government-led AI project(s) scenario would look like?

It might just be a perception problem. LLMs don't really seem to have a good understanding of a letter being next to another one yet or what a diagonal is. If you look at arc-agi with o3, you see it doing worse as the grid gets larger with humans not having the same drawback.

EDIT: Tried on o1 pro right now. Doesn't seem like a perception problem, but it still could be. I wonder if it's related to being a successful agent. It might not model a sequence of actions on the state of a world properly yet. It's strange that this isn't unlocked with reasoning.

Ah so there could actually be a large compute overhang as it stands?

They did this on the far easier training set though?

An alternative story is they trained until a model was found that could beat not only the training set but many other benchmarks too, implying that there may be some general intelligence factor there. Maybe this is still goodharting on benchmarks but there’s probably truly something there.

No, I believe there is a human in the loop for the above if that’s not clear.

You’ve said it in another comment. But this is probably an “architecture search”.

I guess the training loop for o3 is similar but it would be on the easier training set instead of the far harder test set.

I think there is a third explanation here. The Kaggle model (probably) does well because you can brute force it with a bag of heuristics and gradually iterate by discarding ones that don't work and keeping the ones that do.

I have ~15% probability humanity will invent artificial superintelligence (ASI) by 2030.

The recent announcement of the o3 model has updated me to 95%, with most of the 5% being regulatory slow downs involving unprecedented global cooperation.

Do you have a link to these?

I think a lot of this is wishful thinking from safetyists who want AI development to stop. This may be reductionist, but almost every pause historically can be explained by economics.

Nuclear - war usage is wholly owned by the state and developed to its saturation point (i.e. once you have nukes that can kill all your enemies, there is little reason to develop them more). Energy-wise, supposedly, it was hamstrung by regulation, but in countries like China where development went unfettered, they are still not dominant. This tells me a lot it not being deve...

We still somehow got the steam engine, electricity, cars, etc.

There is an element of international competition to it. If we slack here, China will probably raise armies of robots with unlimited firepower and take over the world. (They constantly show aggression)

The longshoreman strike is only allowed (I think) because, for example, the West Coast did automate and is somehow less efficient than the East Coast.

Oh I guess I was assuming automation of coding would result in a step change in research in every other domain. I know that coding is actually one of the biggest blockers in much of AI research and automation in general.

It might soon become cost effective to write bespoke solutions for a lot of labor jobs for example.

Why would that be the likely case? Are you sure it's likely or are you just catastrophizing?

catastrophic job loss that destroys the global economy?

I expect the US or Chinese government to take control of these systems sooner than later to maintain sovereignty. I also expect there will be some force to counteract the rapid nominal deflation that would happen if there was mass job loss. Every ultra rich person now relies on billions of people buying their products to give their companies the valuation they have.

I don't think people want nominal deflation even if it's real economic growth. This will result in massive printing from the Fed that probably lands in people's pockets (like COVID checks).

While I'm not surprised by the pessimism here, I am surprised at how much of it is focused on personal job loss. I thought there would be more existential dread.

Existential dread doesn't necessarily follow from this specific development if training only works around verifiable tasks and not for everything else, like with chess. Could soon be game-changing for coding and other forms of engineering, without full automation even there and without applying to a lot of other things.

It’s better at questions but subjectively there doesn’t feel like there’s much transfer. It still gets some basic questions wrong.

Why is the built-in assumption for almost every single post on this site that alignment is impossible and we need a 100 year international ban to survive? This does not seem particularly intellectually honest to me. It is very possible no international agreement is needed. Alignment may turn out to be quite tractable.

Yudkowsky has a pinned tweet that states the problem quite well: it's not so much that alignment is necessarily infinitely difficult, but that it certainly doesn't seem anywhere as easy as advancing capabilities, and that's a problem when what matters is whether the first powerful AI is aligned:

Safely aligning a powerful AI will be said to be 'difficult' if that work takes two years longer or 50% more serial time, whichever is less, compared to the work of building a powerful AI without trying to safely align it.

A mere 5% chance that the plane will crash during your flight is consistent with considering this extremely concerning and doing anything in your power to avoid getting on it. "Alignment is impossible" is not necessary for great concern, isn't implied by great concern.

I guess in the real world the rules aren’t harder per se but just less clear and not written down. I think both the rules and tools needed to solve contest math questions at least feel harder than the vast majority of rules and tools human minds deal with. Someone like Terence Tao, who is a master of these, excelled in every subject when he was a kid (iirc).

I think LLMs have a pretty good model of human behavior, so for anything related to human judgement, in theory this isn’t why it’s not doing well.

And where rules are unwritten/unknown (say biology), ar...

O1 probably scales to superhuman reasoning:

O1 given maximal compute solves most AIME questions. (One of the hardest benchmarks in existence). If this isn’t gamed by having the solution somewhere in the corpus then:

- you can make the base model more efficient at thinking
- you can implement the base model more efficiently on hardware
- you can simply wait for hardware to get better
- you can create custom inference chips

Anything wrong with this view? I think agents are unlocked shortly along with or after this too.

I wonder if giving it an example of the intended translated writing style helps.