All of Oskar Mathiasen's Comments + Replies

To the extent that purchases stay the same and we pay the cost domestically, that is indeed a tax paid by producers or consumers. Yes, it lowers their remaining capital, but is probably one of the least distortionary available taxes. In the terms described above, if you used the money to cut income tax rates, you’d probably be ahead.

Taxing something where the supply or demand is fixed is extremely efficient, and the extent to which purchases stay the same is exactly the extent to which supply or demand is inflexible. The economic inefficiency of a tax comes from the changes in behavior induced by the tax. The difference between a tariff and a sales tax is that the tariff induces you to buy domestic products.

Sorry, I see now that I lost half a sentence in the middle. I agree that the notions of early/mid/late game don't map well to real life, and I don't think there is a good way to make them do so. I then (meant to) propose the stages of a 4X game as perhaps mapping more cleanly onto one-shot games.

I think the most natural definitions are that early game is the part you have memorized, end game is where you can compute to the end (still doing pruning), and mid game is the rest.

So, e.g., in Scrabble the end game is where there are no tiles or few enough tiles in the bag that you can think through all (relevant) combinations of bags.

I think perhaps the phases of a 4X game.

Explore: gain information that is relevant for what plan to execute

Expand: Investment phase, you take actions that maximise your growth

Exploit: You slowly start deprioritizing growth as the time remaining grows shorter.

Exterminate: You go for your win condition

The arguments in the Aumann paper in favor of dropping the completeness axiom are that it makes for a better theory of Human/Business/Existent reasoning, not that it makes for a better theory of ideal reasoning.

The paper seems to prove that any partial preference ordering which obeys the other axioms must be representable by a utility function, but that there will be multiple such representatives.

My claim is that either there will be a dutch book, or your actions will be equivalent to the actions you would have taken by following one of those representative...

I don't understand how you are using incompleteness. For example, to me the sentence

"agents can make themselves immune to all possible money-pumps for completeness by acting in accordance with the following policy: ‘if I previously turned down some option X, I will not choose any option that I strictly disprefer to X.’"

Sounds like "agents can avoid all money pumps for completeness by completing their preferences in a random way." Which is true but doesn't seem like much of a challenge to completeness.

Can you explain what behavior is allowed under the f...

It seems to me that FDT has the property that you associate with the "ultimate decision theory".

My understanding is that FDT says that you should follow the policy which is attained by taking the argmax over all policies of the utility from following that policy (only including downstream effects of your policy).

In these easy examples your policy space is your space of committed actions. In which case the above seems to reduce to the "ultimate decision theory" criterion.

The assumptions made here are not time reversible: the macrostate at time t+1 being deterministic given the macrostate at time t does not imply that the macrostate at time t is deterministic given the macrostate at time t+1.

So in this article the direction of time is given through the asymmetry of the evolution of macrostates.
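A minimal sketch of that asymmetry, using a made-up four-state transition table (the states and transitions are purely illustrative assumptions, not taken from the article): every macrostate has exactly one successor, yet some macrostates have more than one possible predecessor.

```python
from collections import defaultdict

# Hypothetical macrostate dynamics: each macrostate has exactly one successor,
# so the forward evolution is deterministic.
STEP = {"A": "C", "B": "C", "C": "D", "D": "D"}


def predecessors(step):
    """Invert the forward map: for each macrostate, collect every state that leads to it."""
    back = defaultdict(set)
    for state, successor in step.items():
        back[successor].add(state)
    return dict(back)


BACK = predecessors(STEP)

# Macrostates whose past is not determined by the present.
ambiguous = {state for state, prev in BACK.items() if len(prev) > 1}
print(ambiguous)
```

Knowing that the system is in "C" now tells you it will be in "D" next, but not whether it came from "A" or "B".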

I think "book of X" can be usefully "translated" as beliefs about X.

The book of truth is not truth, just like the book of night is not night.

I think "book of names" can be read as human categorisation of animals (giving them names). Although other readings do seem plausible.

You might be interested in John Harsanyi on the topic.

He argues that the conclusion achieved in the original position is (average) utilitarianism.

I agree that behind the veil one shouldn't know the time (and thus can't care differently about current vs future humans). This actually causes further problems for Rawls's conception when you project back in time: what if the worst life that will ever be lived has already been lived? Then the maximin principle gives no guidance at all, and in positions of uncertainty it recommends putting all effort into preventing a new minimum from being set.

The concept of the Kolmogorov Sufficient Statistic might be the missing piece. (cf. Elements of Information Theory, section 14.12)

We want the shortest program that describes a sequence of bits. A particularly interpretable type of such programs is "the sequence is in the set X generated by program p, and among those it is the n'th element"

Example "the sequence is in the set of sequences of length 1000 with 104 ones, generated by (insert program here), of which it is the n~10^144'th element".

We therefore define f(String, n) to be the size of the smallest se...
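As a rough check on the length-1000, 104-ones example above, the size of the set and the number of bits needed for the index can be computed directly. This is only a sketch of the two-part description idea; the variable names are my own, and the cost of the program describing the set itself is left out.

```python
import math

n_bits, n_ones = 1000, 104  # the numbers used in the example above

# Number of sequences of length 1000 with exactly 104 ones.
set_size = math.comb(n_bits, n_ones)

# Order of magnitude of the index n within that set.
log10_size = math.log10(set_size)

# Bits needed to single out one element of the set, versus writing
# the sequence out literally (1000 bits).
index_bits = math.ceil(math.log2(set_size))

print(f"set size is about 10^{log10_size:.1f}")
print(f"index needs {index_bits} bits, raw sequence needs {n_bits} bits")
```

The index is much shorter than the raw sequence, which is why "which set it is in, plus which element of the set" can be a good description when the set is cheap to describe.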

Then you violate the accurate beliefs condition. (If the world is in fact a random mixture in proportions which their beliefs track correctly, then FDT will do better when averaging over the mixture.)

I don't think the quoted problem has that structure.

And suppose that the existence of S tends to cause both (i) one-boxing tendencies and (ii) whether there’s money in the opaque box or not when decision-makers face Newcomb problems.

But now suppose that the pathway by which S causes there to be money in the opaque box or not is that another agent looks at S

So S causes one boxing tendencies, and the person putting money in the box looks only at S.

So it seems to be changing the problem to say that the predictor observes your brain/your decision proced...

Can't you make the same argument you make in Schwarz's procreation case by using Parfit's hitchhiker after you have reached the city? In which case I think it's better to use that example, as it avoids Heighn's criticism.

In the case of implausible discontinuities I agree with Heighn that there is no subjunctive dependence.

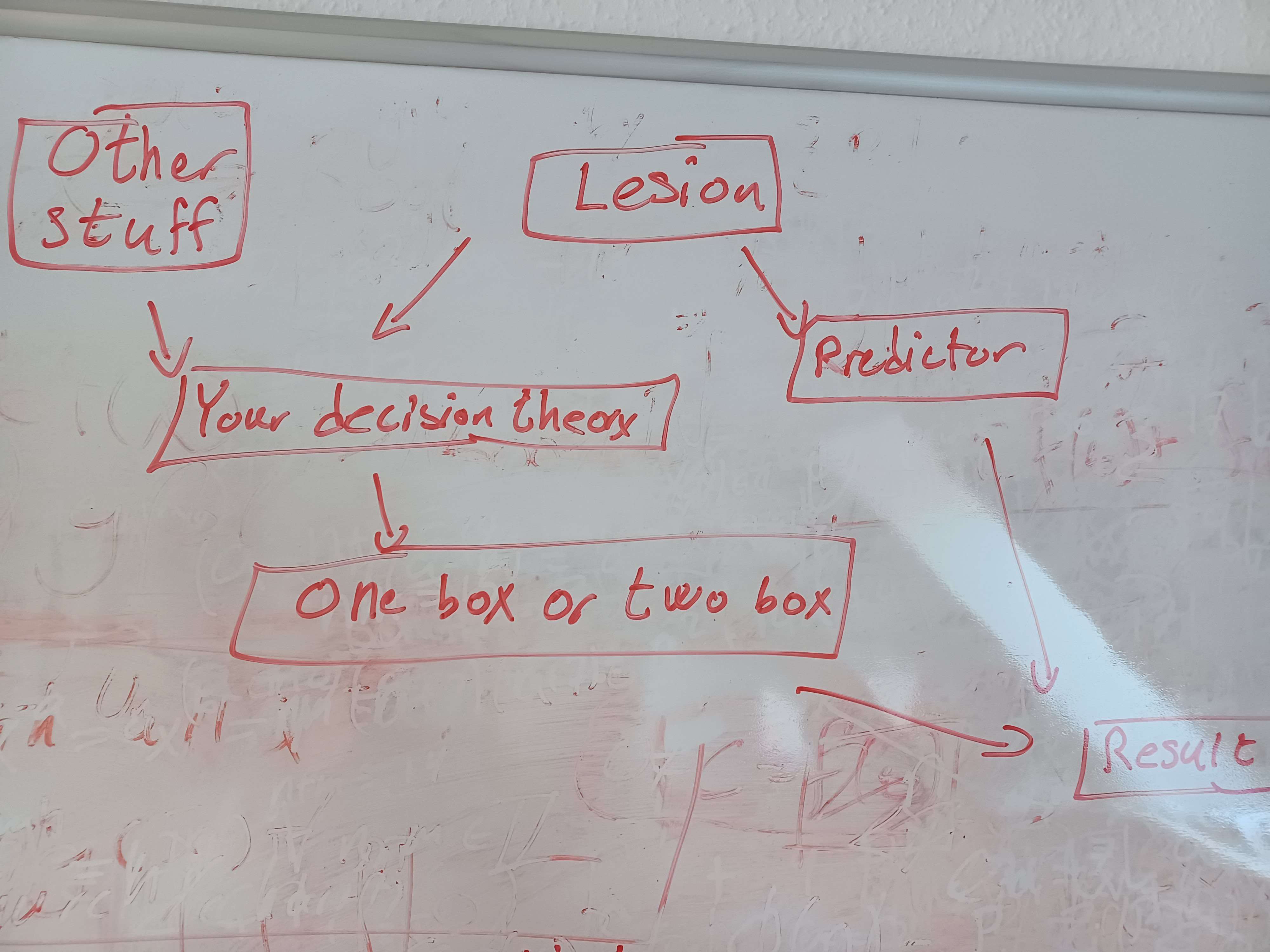

Here is a quick diagram of the causation in the thought experiment as I understand it.

We have an outcome which is completely determined by your decision to one box/two box and the predictor's decision of whether to put money in the one box.

T...

Denmark culled all mink due to worries about a covid strain in mink. It has only recently (January 1 2023) become legal to farm mink in Denmark again.

Logical inductors are actually defined by the logical induction criterion. The market bit is there to prove that it is possible to fulfill the criterion.

There is also the somewhat boring answer that probability can refer to anything which obeys the axioms of probability.

Note that coop is a consumer cooperative not an employee cooperative.

https://en.wikipedia.org/wiki/Consumers%27_co-operative

New report from Denmark called "Focusrapport about Covid-19 related hospitalizations during the Covid-19 pandemic"

https://www.ssi.dk/-/media/cdn/files/fokusrapport-om-covid-19-relaterede-hospitalsindlggelser-under-sars-cov-2-epidemien_06012022_1.pdf?la=da

It is sadly in Danish, so I will give a translation of the main results section and some of the graphs.

Summary of main results:

Theme 1:

* The older a patient is, the greater the likelihood that he or she will have a covid-19-related hospitalization of 12 hours or more.

* The proportion of short ...

Updated numbers from today at: https://files.ssi.dk/covid19/omikron/statusrapport/rapport-omikronvarianten-10122021-ek56

Now with English translations.

Only significant change is that hospitalizations are up to 1.4% (18 cases).

Daily updates should be available here: https://www.ssi.dk/aktuelt/nyheder/2021

Click the newest one and click "læs rapporten her" (read the report here), which takes you to a page where you can download the report for that day.

Repost from wordpress blog

Status report from Denmark

Key numbers: they give numbers of cases by day, and also give cases of omicron as a percentage of other cases.

Of 785 cases in Danish citizens, 76.31% of omicron cases were in double vaxed, 7.13% in triple vaxed, compared to 73.69% double (probably also including triple) vaxed for covid in general. 14.14% unvaxed for omicron, 22.93% in general (over the last 7 days).

Rate of hospitalization is 1.15% for omicron (9 cases), and 1.85% in general.

Everything is probably confounded by age ...

Today's cumulative omicron case count is 398.

Repost from my wordpress comments:

Danish seroprevalence (from https://www.ssi.dk/aktuelt/nyheder/2021/markant-stigning-i-den-fjerde-nationale-praevalensundersoegelse) seems to have been about 50% above cumulative cases (from https://www.worldometers.info/coronavirus/country/denmark/) by May 2020.

Cumulative omicron cases in Denmark per SSI https://www.ssi.dk/aktuelt/nyheder/2021

Dec 3: 18 omicron cases

Dec 5: 183 cases

Dec 6: 261 cases

Oddly this looks linear (80 cases per day), but that is almost certainly random.
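A quick sketch of where the roughly 80 cases per day comes from, using only the three cumulative counts above. The year 2021 is my assumption (taken from the SSI news URL); only the gaps between dates matter for the result.

```python
from datetime import date

# Cumulative omicron cases from the three data points above.
# The year is assumed; only the day gaps affect the calculation.
cumulative = {
    date(2021, 12, 3): 18,
    date(2021, 12, 5): 183,
    date(2021, 12, 6): 261,
}


def daily_rates(series):
    """Average new cases per day between consecutive observations."""
    points = sorted(series.items())
    rates = []
    for (day0, count0), (day1, count1) in zip(points, points[1:]):
        rates.append((count1 - count0) / (day1 - day0).days)
    return rates


print(daily_rates(cumulative))
```

With only two intervals to compare, a near-constant daily increase is weak evidence of linear growth, which fits the caveat that the pattern is almost certainly random.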

The fact that the 2 numbers are equal is not always true, it is randomly true on this day.

Yes.

I agree that the original post keeps going after removing differences in values because they don't remove differences in marginal value, which is what matters.

I am providing an example where properly removing the differences in marginal value results in no trade.

You are using a nonstandard definition of goods. Would you equally object to a market with only blueberries, apples and bananas on the basis that there is only one good available (fruits)?

The example world can be modified easily to use any utility function of the form a·red points + b·yellow points + c·blue points.

Yes.

The comment was meant as a proof by example that you can have no trade in a world with comparative advantages: if everyone has the same marginal value for all products, the result is no trade.

Diminishing marginal returns are indeed enough to make marginal values different between people.

People could trade by making each other's numbers bigger; it's just that it will never be beneficial for both.

Letting people increase others' numbers by decreasing their own number doesn't change the results.
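A small sketch of why no exchange helps both sides under a shared linear utility of the form a·red + b·yellow + c·blue. The weights, the two people, their speeds, and the assumption that time spent on someone else's numbers is time not spent on your own best colour are all illustrative choices of mine, not part of the original example.

```python
from itertools import product

# Hypothetical shared weights (a, b, c): everyone values the colours identically.
WEIGHTS = {"red": 2, "yellow": 1, "blue": 3}


def best_rate(speeds):
    """Utility per unit of time when working on your own best colour."""
    return max(WEIGHTS[c] * speeds[c] for c in WEIGHTS)


def swap_gains(speeds_a, speeds_b, colour_a, colour_b, t_a, t_b):
    """A spends t_a raising B's colour_a number; B spends t_b raising A's colour_b number.

    Returns each person's net gain compared with spending that time on themselves.
    """
    received_by_b = WEIGHTS[colour_a] * speeds_a[colour_a] * t_a
    received_by_a = WEIGHTS[colour_b] * speeds_b[colour_b] * t_b
    gain_a = received_by_a - best_rate(speeds_a) * t_a
    gain_b = received_by_b - best_rate(speeds_b) * t_b
    return gain_a, gain_b


def mutually_beneficial_swaps(speeds_a, speeds_b, max_time=5):
    """Search a grid of swaps for any that leave both people strictly better off."""
    found = []
    for colour_a, colour_b in product(WEIGHTS, repeat=2):
        for t_a, t_b in product(range(max_time + 1), repeat=2):
            gain_a, gain_b = swap_gains(speeds_a, speeds_b, colour_a, colour_b, t_a, t_b)
            if gain_a > 0 and gain_b > 0:
                found.append((colour_a, colour_b, t_a, t_b))
    return found


# Two people with clear comparative advantages in different colours.
alice = {"red": 5, "yellow": 1, "blue": 1}
bob = {"red": 1, "yellow": 1, "blue": 4}

print(mutually_beneficial_swaps(alice, bob))
```

The search comes back empty because whatever one person gains is at most what the other gave up, so the two gains can never both be strictly positive.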

Example world without trade.

Every person is assigned at birth an array of 3 integers: a blue number, a yellow number and a red number. Every person has 3 attributes: the speed at which they can increase a red number (by spending that amount of time counting out loud), the speed at which they can increase a blue number, and the speed at which they can increase a yellow number. They can increase their own numbers or anyone else's. (Note we are not assuming everyone has the same amount of red, blue and yellow points at birth or that they are all equally fast at producing them.) Everyon...

Two of the removed features are removed incompletely.

Differences in preferences. What is important for trade is the marginal preference. So to remove this motivation to trade one must assume the marginal value (both intrinsic and instrumental) to be equal for everyone for all goods, which I think can only happen in some very weird cases (e.g. all goods have no instrumental value and utility is a linear combination of the products).

The difference in peoples productive capacity (which doesn't by itself result in trade (it does when assuming diminishing margina...

Does your intuition still hold in the [Least Convenient Possible World](https://www.lesswrong.com/posts/neQ7eXuaXpiYw7SBy/the-least-convenient-possible-world) where the cost of creating new beings is 0?

At least for the big British schools, this doesn't clearly hold, based on the experience of people I know. Granted, the evidence I have is consistent with them only caring about silver or better.

Also, my impression for the Russian schools was that not speaking Russian was a problem they were happy to work around (which certainly isn't true everywhere).

The Danish team leader (the closest source to these events I have talked to) seems to (personally) believe the cheating allegations in 2010 or at least that the evidence was insufficient.

Also note their non-participation in 2017 and 2018 for reasons not known to me, and in 2020 likely because the event was online.

The comparison to chess is maybe more accurate than you think.

See stuff like:

Beginnings: The first IMO was held in Romania in 1959. It was initially founded for eastern European member countries of the Warsaw Pact, under the USSR bloc of influence, but later other countries participated as well.[2] (source https://en.wikipedia.org/wiki/International_Mathematical_Olympiad)

Also, classic geometry is (to my knowledge) taught more generally in many eastern European countries (and makes up 1/6-1/3 of the IMO).

Also the note about incentives being larger in North Ko...

One possible way to get at the hack of ignoring unlikely possibilities in a reasonable way might be to do something similar to the "typical set" found in information theory. Especially as utility function maximization can be reformulated as relative entropy minimization.

(Epistemic status: my brain saw a possible connection, I have not spent much time on this idea)

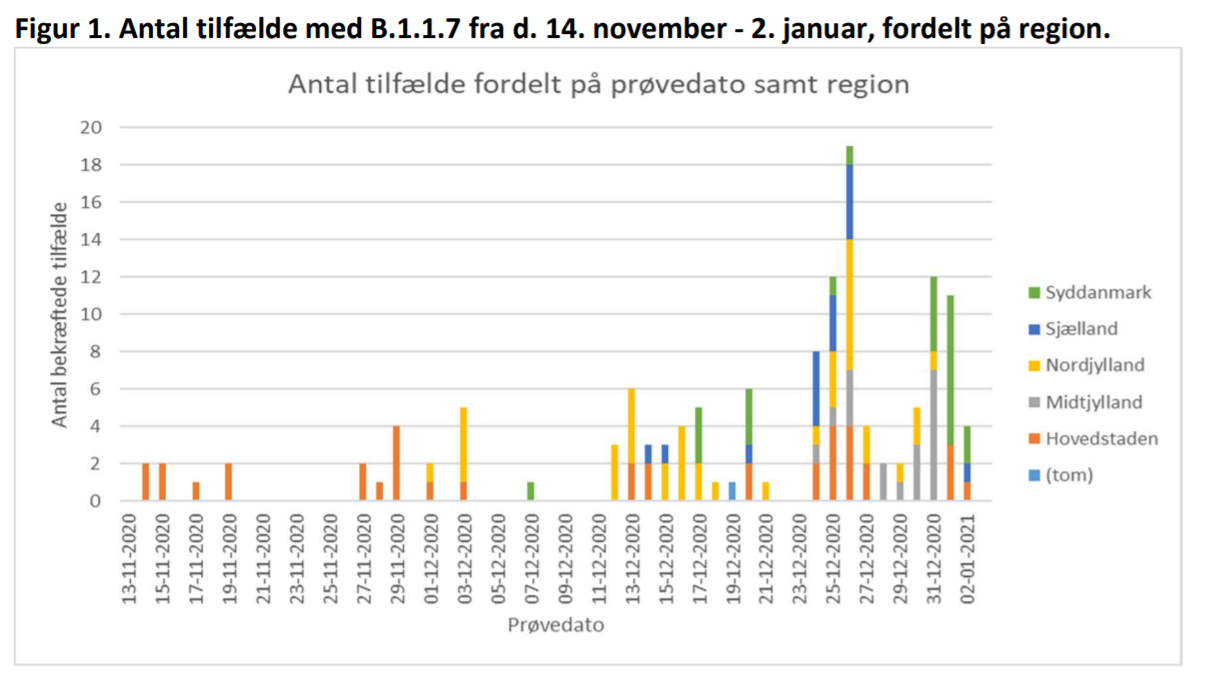

Here is the updated graph

On this one, days with 0 cases are not excluded.

Worth noting that there doesn't seem to be any significant difference in the ages of people who have the new and old variant.

Source https://www.ssi.dk/-/media/cdn/files/notat_engelsk_virusvariant090121.pdf?la=da

Update with new numbers.

In the period from 28-12 to 02-01 we get the following numbers

Positive tests: 14408

sequenced tests: 1261 (8.8%)

B.1.1.7 cases: 36 (2.9%)

Which is slightly slower than a doubling time of a week (a 1.8 multiplier per week with naive extension (I believe the naive method is likely to underestimate)).
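The percentages and the doubling-time remark above can be reproduced with a few lines. This is just arithmetic on the quoted figures; the 1.8 weekly multiplier is taken as given, since the earlier week's numbers it was derived from are not repeated here.

```python
import math

# Figures quoted above for 28-12 to 02-01.
positive_tests = 14408
sequenced = 1261
b117_cases = 36
weekly_multiplier = 1.8  # quoted above, not recomputed here

sequenced_share = sequenced / positive_tests  # fraction of positives that were sequenced
variant_share = b117_cases / sequenced        # fraction of sequenced tests that were B.1.1.7

# Doubling time implied by a constant weekly growth factor.
doubling_time_days = 7 * math.log(2) / math.log(weekly_multiplier)

print(f"sequenced: {sequenced_share:.1%}")
print(f"B.1.1.7 share: {variant_share:.1%}")
print(f"doubling time: {doubling_time_days:.1f} days")
```

A multiplier of exactly 2 per week would give a doubling time of 7 days, so 1.8 per week corresponds to something a bit longer than a week.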

In this alternate universe the old testament is true, so it is a reference to the seventh day of creation where god rested (after having created the world)

The countries that do the most sequencing (and also have a significant number of cases) are Denmark (10.9% of positives are sequenced) and the UK (5.61%).

The Danish data makes me slightly hopeful:

1. the virus has been found in Denmark.

2. But the numbers are surprisingly small: 9 confirmed cases by 20-12.

3. The Danish government has not released statements regarding its effects on vaccines.

3-1. Denmark made such an announcement as soon as any evidence above baseline existed for cluster 5, so the lack of announcement is more significant than one might otherwise think.

4. Case numbers are not going up in Denmark.

Denmark seems to also not be an option.

(and Switzerland is an option twice)

If I had to guess at the motives: the last time a similar game was played (non-publicly), the meta developed to be about self-recognition, so this is likely a rule to avoid that.

The winning strategy was to use some key string to identify yourself, cooperate with yourself, and play 3 otherwise. (Granted, the number of iterations was low, so people might not have moved to non-cooperating strategies enough (something like grim trigger).)

You predict that it is more likely that an AI "that can perform nearly every economically valuable task more cheaply than a human, will have been created" than that one "will write a book without substantial aid, that ends up on the New York Times bestseller list."

This seems weird as the first seems very likely to cause the second.

They seem to forget to first condition on the fact that the threshold must be an integer. This narrows the possibility space from uncountably infinite to countably infinite, meaning they need to do completely different mathematics, which gives the correct result.

Here are some relevant quotes by Eliezer from a discord discussion on dath ilan currency:

... Note that I have skipped parts of the conversation between each paragraph.