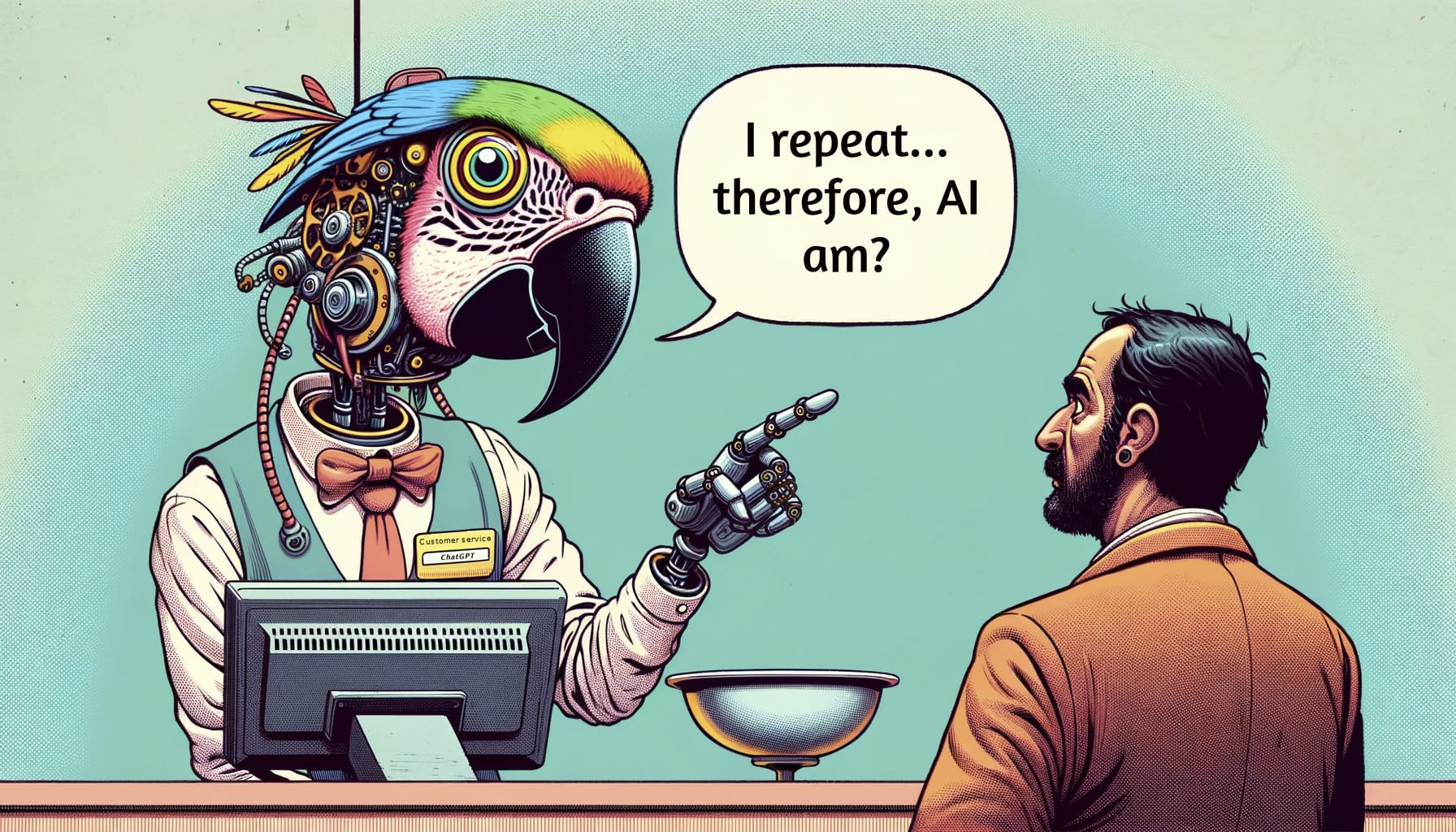

The Stochastic Parrot Hypothesis is debatable for the last generation of LLMs

This post is part of a sequence on LLM Psychology. @Pierre Peigné wrote the details section in argument 3 and the section on the other weird phenomenon. The rest is written in the voice of @Quentin FEUILLADE--MONTIXI.

Intro

Before diving into what LLM psychology is, it is crucial to clarify the nature of the subject we are studying. In this post, I’ll challenge the commonly debated stochastic parrot hypothesis for state-of-the-art large language models (≈GPT-4). In the next post, I’ll shed light on what LLM psychology actually is.

The stochastic parrot hypothesis suggests that LLMs, despite their remarkable capabilities, don’t truly comprehend language. They are like mere parrots, replicating human speech patterns without grasping the essence of the words they utter.

I previously thought this argument had faded into oblivion, yet I often find myself in prolonged debates about why current SOTA LLMs surpass this simplistic view. Most of the time, people argue using examples from GPT-3.5 and aren’t aware of GPT-4’s prowess. In this post, I present my current stance, using LLM psychology tools, on why I have doubts about this hypothesis. Let’s delve into the argument.

Central to our debate is the concept of a "world model". A world model is an entity’s internal understanding and representation of the external environment it lives in. For humans, it’s our understanding of the world around us: how it works, how concepts interact with each other, and our place within it.

The stochastic parrot hypothesis challenges the notion that LLMs possess a robust world model. It suggests that while they might reproduce language with impressive accuracy, they lack a deep, authentic understanding of the world and its nuances. Even if they have a good representation of the shadows on the wall (text), they don’t truly understand the processes that lead to those shadows or the objects from which they are cast (the real world).

Yet, is this truly the case? While it is