I want everyone to be able to achieve the world they would really like; I guess the best way to do that is to help people learn, build their strengths, build small-scale and large-scale projects, and also cooperate.

Any gift must be accepted with gratitude and, if possible, with grace.

As of 2024-12-21, I have signed no contracts whose existence I cannot mention. I finally got around to adding this notice thanks to the one in the-gears-to-ascension's bio.

In most cases, you could build a mini-quadcopter, "teach" it some tricks, and try showcasing it, with video as a side effect!

Browsing through recent rejects, I found an interesting comment that suggests an automatic system "build a prior on whether something is true by observing social interactions around it vs competing ideas".

@timur-sadekov While it will most certainly fail, I do remember that the ultimate arbiter is experiment, so I shall reserve judgement. Instead, I'm calling on you to test the idea on prediction markets (Manifold, for instance) and publish the results.

The AI industry is different—more like biology labs testing new pathogens: The biolab must carefully monitor and control conditions like air pressure, or the pathogen might leak into the external world.

This looks like a cached analogy, because given the previous paragraph this would fit better: "the AI industry is different, more like testing planes; their components can be internally tested, but eventually the full plane must be assembled and flown, and then it can damage the surroundings".

Same goes for AI: If we don’t keep a watchful eye, the AI might be able to escape—for instance, by exploiting its role in shaping the security code meant to keep the AI locked inside the company’s computers.

This seems very likely to happen! We've already seen that AIs improving code can remove its essential features, so it wouldn't be surprising for some security checks to disappear.

Based on this, I've decided to give this post only a weak upvote.

I suggest an additional explanation.

The bigger the audience is, the more people there are who won't know a specific idea/concept/word (xkcd's comic #1053 "Ten Thousand" captures this quite succinctly), so you'll simply have to shorten your sentences.

I took the logarithm of sentence length and linearly fitted it against the logarithm of world population (this shouldn't be very precise, since authors presumably cared mostly about their own society, but checking that would be more time-expensive).

Relevant lines of Python REPL

>>> import math

>>> wps = [49, 50, 42, 20, 21, 14, 18, 12]

>>> pop = [600e6, 700e6, 1e9, 1.4e9, 1.5e9, 2.3e9, 3.5e9, 6e9]

>>> [math.log(w) for w in wps]

[3.8918202981106265, 3.912023005428146, 3.7376696182833684, 2.995732273553991, 3.044522437723423, 2.6390573296152584, 2.8903717578961645, 2.4849066497880004]

>>> [math.log(p) for p in pop]

[20.21244021318042, 20.36659089300768, 20.72326583694641, 21.059738073567623, 21.128730945054574, 21.556174959881517, 21.97602880544178, 22.515025306174465]

>>> 22.51-20.21

2.3000000000000007

>>> 3.89-2.48

1.4100000000000001

>>> 2.3/1.41

1.6312056737588652

>>> [round(math.exp(26.41 - math.log(w)*1.63)/1e9, 3) for w,p in zip(wps,pop)] # predicted population, billion

[0.518, 0.502, 0.667, 2.234, 2.063, 3.995, 2.652, 5.136]

>>> [round(math.exp(26.41 - math.log(w)*1.63)/1e9 - p/1e9, 3) for w,p in zip(wps,pop)] # prediction off by, billion

[-0.082, -0.198, -0.333, 0.834, 0.563, 1.695, -0.848, -0.864]

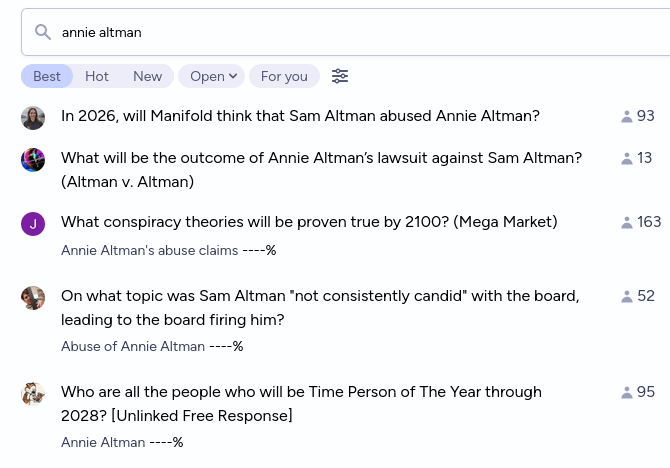
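The REPL session above takes its slope from the two endpoints only. For comparison, here is a minimal sketch of an ordinary least-squares fit on the same numbers. The helper name `fit_line` is hypothetical, the reading of `wps` as words per sentence is an assumption, and the result is not expected to reproduce the 26.41 and 1.63 constants used above.

```python
import math

# Data copied from the REPL session above.
wps = [49, 50, 42, 20, 21, 14, 18, 12]  # sentence length (assumed: words per sentence)
pop = [600e6, 700e6, 1e9, 1.4e9, 1.5e9, 2.3e9, 3.5e9, 6e9]  # world population


def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = intercept + slope * xs (hypothetical helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


log_wps = [math.log(w) for w in wps]
log_pop = [math.log(p) for p in pop]
slope, intercept = fit_line(log_wps, log_pop)

# Predicted population, in billions, for each observed sentence length.
predicted = [math.exp(intercept + slope * x) / 1e9 for x in log_wps]
print(f"slope={slope:.2f}, intercept={intercept:.2f}")
print([round(v, 3) for v in predicted])
```

Unlike the endpoint slope, a least-squares fit uses all eight points, so a single unusual sentence length at either end of the range has less influence on the line.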

Given the gravity of Sam Altman's position at the helm of the company leading the development of an artificial superintelligence which it does not yet know how to align -- to imbue with morality and ethics -- I feel Annie's claims warrant a far greater level of investigation than they've received thus far.

Then there's a bit of a shortage of something public... and ratsphere-adjacent... maybe prediction markets?

I can create (subsidize) a few, given resolution criteria.

Alphabet of LW Rationality, special excerpts

- Cooperation: you need to cooperate with yourself to do anything. Please do so iff you are building ASI;

- Defection: it ain't no defection if you're benefitting yourself and advancing your goals;

- Inquiry: a nice way of making others work for your amusement or knowledge expansion;

- Koan: if someone is not willing to do research for you, you can waste their time on pondering a compressed idea.

This concludes my April the 1st!

I don’t think there is a universal set of emojis that would work on every human, but I totally think that there is a set of such emojis (or something similar) that would work on any given human at any given time, at least a large percentage of the time, if you somehow were able to iterate enough times to figure out what it is.

Probably something with more informational content than emojis, like images (perhaps slightly animated). Trojan Sky, essentially.

I'm not sure if this will be of any use, since your social skills will surely be warped when you expect to iterate on them (much as radical transparency reduces awareness of feelings).