Studying The Alien Mind

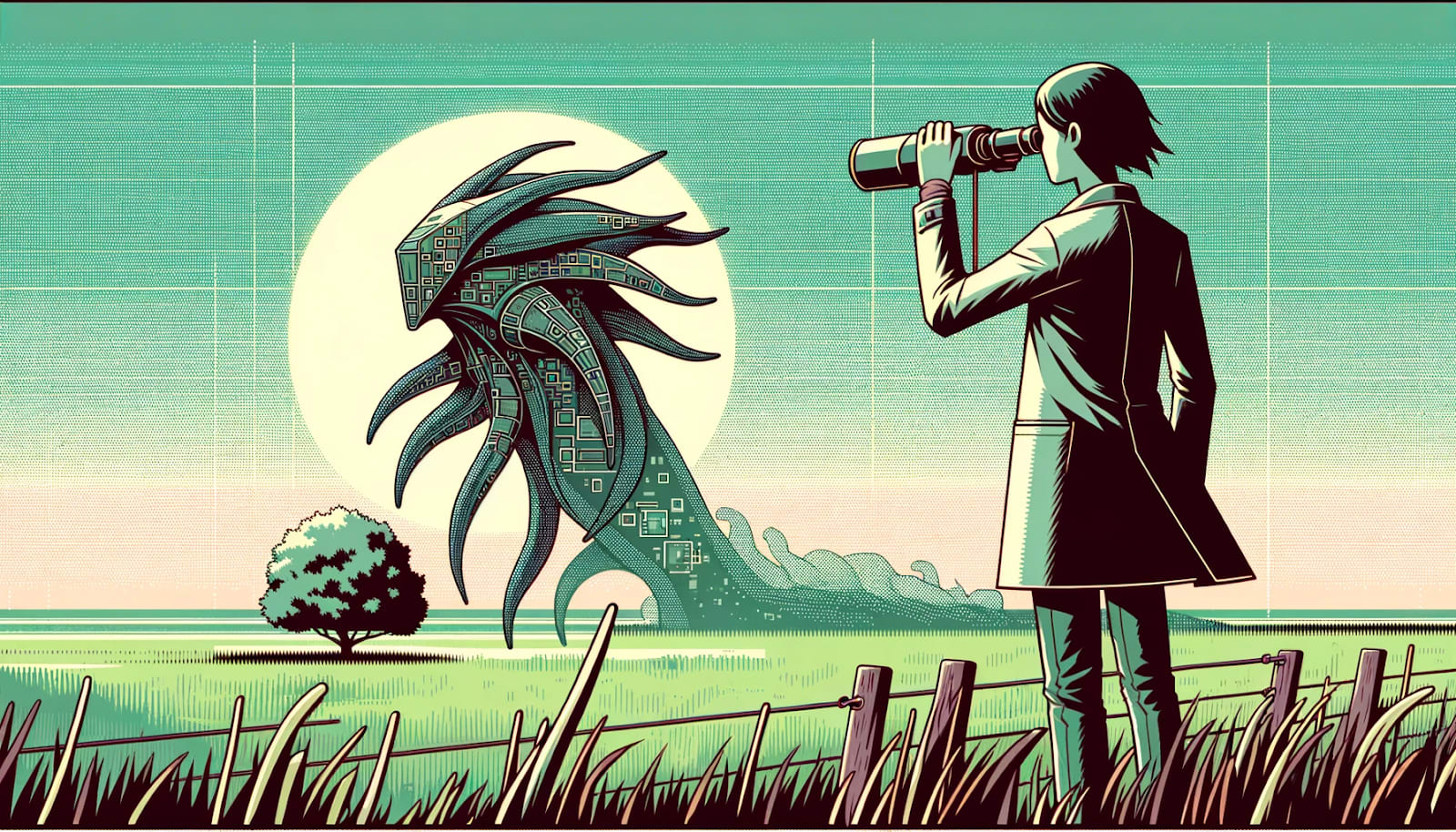

This post is part of a sequence on LLM psychology.

TL;DR

We introduce our perspective on a top-down approach for exploring the cognition of LLMs by studying their behavior, which we refer to as LLM psychology. In this post we take the mental stance of treating LLMs as “alien minds,” comparing and contrasting their study with the study of animal cognition. We do this both to learn from past researchers who attempted to understand non-human cognition and to highlight how radically the study of LLMs differs from the study of biological intelligences. Specifically, we advocate for a symbiotic relationship between field work and experimental psychology, and we caution against implicit anthropomorphism in experiment design. The goal is to build models of LLM cognition that help us both to better explain their behavior and to become less confused about how they relate to risks from advanced AI.

Introduction

When we endeavor to predict and understand the behaviors of Large Language Models (LLMs) like GPT-4, we might presume that this requires breaking open the black box and forming a reductive explanation of their internal mechanics. This kind of research is typified by approaches like mechanistic interpretability, which tries to directly understand how neural networks work by examining their internals.

While mechanistic interpretability offers insightful bottom-up analyses of LLMs, we’re still lacking a more holistic top-down approach to studying LLM cognition. If interpretability is analogous to the “neuroscience of AI,” aiming to understand the mechanics of artificial minds by understanding their internals, this post tries to approach the study of AI from a psychological stance.[1]

What we are calling LLM psychology is an alternate, top-down approach which involves forming abstract models of LLM cognition by examining their behaviors. Like traditional psychology research, the ambition extends beyond merely catal

Great post! I really feel like you captured most of my worries about AI progress, and this feels like the first end-to-end "AI goes bad" scenario without holes. I think we need more of those. Some thoughts as I was reading through it:

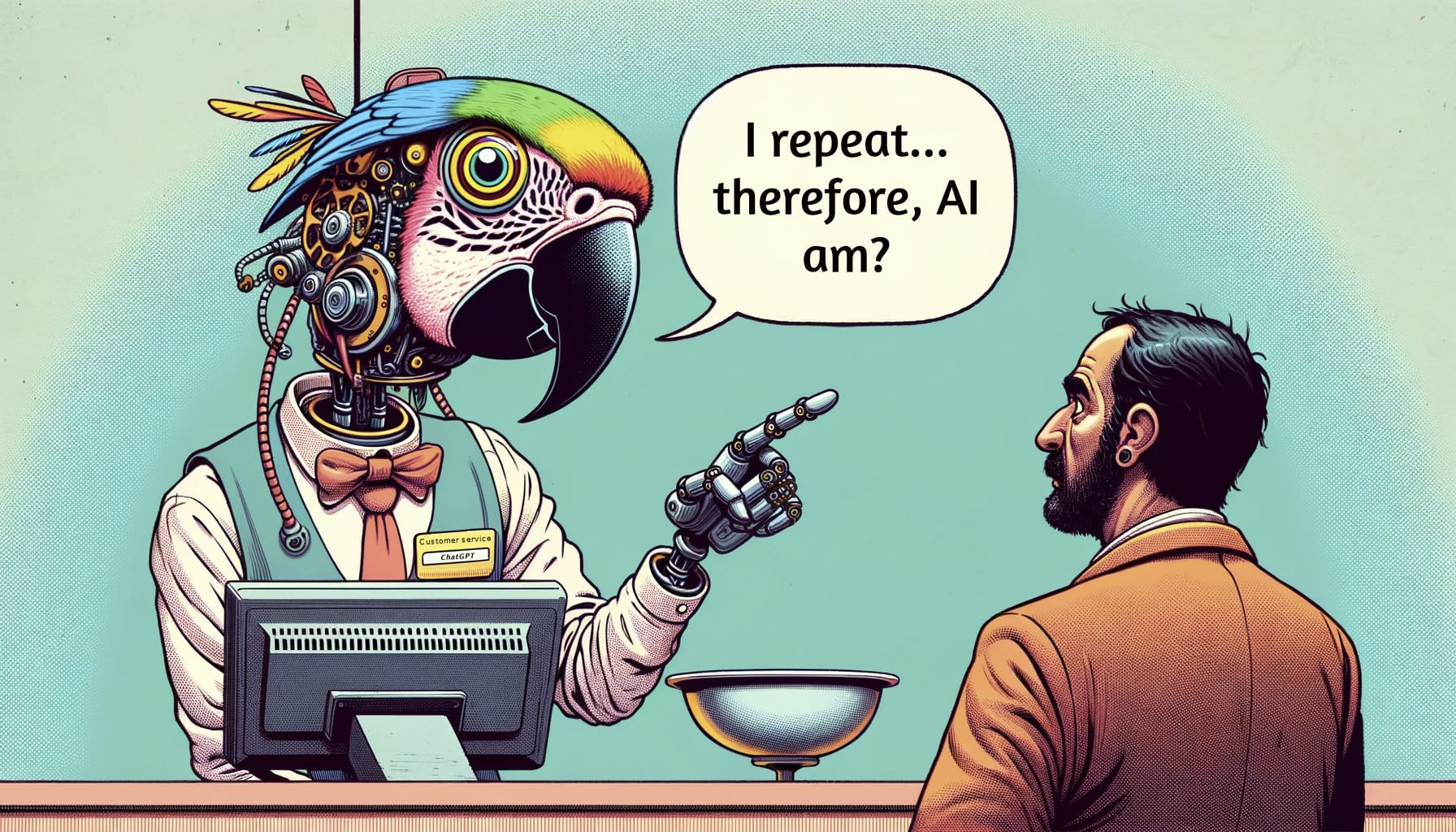

If this is still an "LLM-ish" system, then when these kinds of conversations arise, I always have this chain of thoughts racing through my mind:

What is U3? If U3 is the model, and it is a stochastic predictor, then it doesn't make a lot of sense to say "U3" wants to survive, as a simulation wanting to survive doesn't make sense to...