All of Sjlver's Comments + Replies

Absolutely agree that the diagram omits lots of good parts from your post!

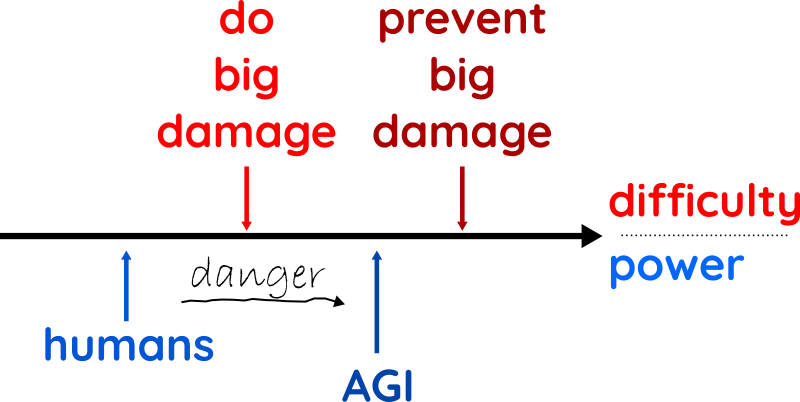

Is "do big damage" above or below human level? It probably depends on some unspecified assumptions, like whether we include groups of humans or just individuals. Either way, the difficulty can't be much higher than human level, since we have examples like nuclear-weapon states that reach it. It can't be much lower, either, since it is lower-bounded by the ability of "callously-power-seeking humans". It lies somewhere in that range.

What I like about the diagram:

- It visualizes the dangerous zone between "do big

I tried to summarize how I understood your post and to systematize it a bit. I hope this is helpful for others, but I also want to say that I really liked the many examples in the post.

The central points that I understood from what you wrote:

- It takes more power to prevent big damage than to do big damage. You give several examples where preventing extinction requires solutions that are illegal or way out of the Overton window, and examples like engineered pandemics that seem easier.

I believe this point is basically correct for a variety of reasons, fro

How does urine testing for iron deficiency work? I was under the impression that one needs to measure blood ferritin levels to get a meaningful result... but I might be wrong?