All of t14n's Comments + Replies

I have Aranet4 CO2 monitors inside my apartment, one near my desk and one in the living room, both at eye level and visible to me at all times when I'm in those spaces. Anecdotally, I find myself thinking "slower" at 900+ ppm, and I can even notice slightly worse thinking at levels as low as 750 ppm.

I find indoor levels below 600 ppm to be ideal, but that's not always possible, depending on whether you have guests, the air quality conditions of the day, etc.

Unfortunately, I live in a space with only one window, so ventilation can be difficult. However, a single well-placed fan facin...

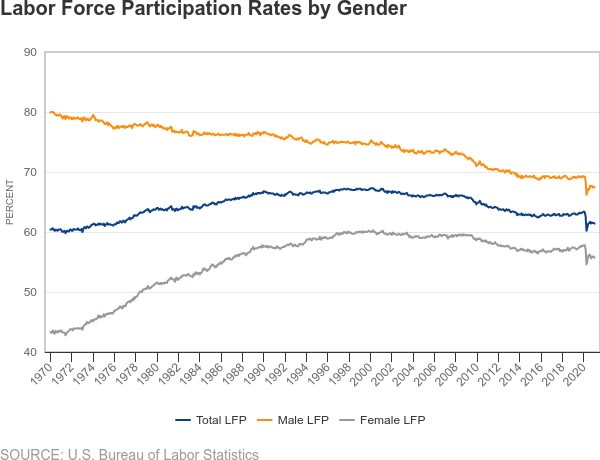

Want to know what AI will do to the labor market? Just look at male labor force participation (LFP) research.

US male LFP has dropped since the 1970s, from ~80% to ~67.5%.

There are a few dynamics driving this, all of which interact with each other, but the primary ones seem to be:

- increased disability [1]

- younger men (e.g., under 35 years old) pursuing education instead of working [2]

- decline in manufacturing and increase in services as a fraction of the economy

- increased female labor force participation [1]

Our economy shifted from labor that required physical toug...

re: public track records

I have a fairly non-assertive, non-confrontational personality, which causes me to default to "safer" strategies (e.g., nod and smile, don't think too hard about what's being said, or at least don't vocalize counterpoints). Perhaps others here can relate. These personality traits show up as "lazy thinking" online: e.g., not posting even when I feel I'm right about X, or not sharing an article or sending a message for fear of looking awkward or revealing a preference that others might disagree with.

I notice that pe...

The skill ceiling across humanity is quite high. I think of super-genius chess players, Terry Tao, etc.

A particular individual's skill ceiling is relatively low (compared to these maximally gifted individuals). Sure, everyone can get better at listening, but there's a substantial chance you have some condition or life experience that makes it harder to develop (hearing disability, physical/mental illness, trauma, being surrounded by people who aren't great communicators themselves, etc.).

I'm reminded of what Samo Burja calls "com...

I'm giving up on working on AI safety in any capacity.

I became convinced around 2018 that working on AI safety was a Good™ and Important™ thing, and I have spent a large portion of my studies and career trying to find a role where I could contribute to it. But after several years of trying to work on both research and engineering problems, it's clear no institutions or organizations need my help.

First: yes, it's clearly a skill issue. If I was a more brilliant engineer or researcher then I'd have found a way to contribute to the field by now.

But also, it seems like the ...

> First: yes, it's clearly a skill issue. If I was a more brilliant engineer or researcher then I'd have found a way to contribute to the field by now.

I am not sure about this. Just because someone will pay you to work on AI safety doesn't mean you won't get stuck down some dead end. Stuart Russell is super brilliant, but I don't think AI safety through probabilistic programming will work.

Thank you for your service!

For what it's worth, I feel that the bar for being a valuable member of the AI safety community is much more attainable than the bar for working in AI safety full-time.

I think the main problem is that society at large doesn't significantly value AI safety research, and hence funding is severely constrained. I'd be surprised if the consideration you describe in your last paragraph plays a significant role.

Morris Chang (founder of TSMC and a titan of semiconductor fabrication) gave a lecture at MIT with an overview of the history of chip design and manufacturing [1]. There's a diagram at ~34:00 that outlines the chip design process and where foundries like TSMC slot into it.

I also recommend skimming Chip War by Chris Miller. It has a very US-centric perspective, but it gives a good overview of the major companies that developed chips from the 1960s to the 1990s, and of the key companies that are relevant to (or bottlenecks in) the manufacturing process circa 2022.

[1] TSMC founder Morris Chang on the evolution of the semiconductor industry

There's "Nothing, Forever" [1] [2], which had a few minutes of fame when it initially launched but declined in popularity after some controversy (a joke about transgenderism generated by GPT-3). It was stopped for a bit, then relaunched after some tweaks to the dialogue generation (perhaps an updated prompt? GPT-3.5? There's no devlog, so I guess we'll never know). There are clips of "season 1" on YouTube from before the updated dialogue generation.

There's also ai_sponge, which was taken down from Twitch and YouTube due to its incredibly racy jokes (e.g....

re: 1b (development likely impossible to keep secret given the scale required)

I'm reminded of Dylan Patel's (SemiAnalysis) comments on a recent episode of the Dwarkesh Podcast, which go something like:

Given the success we've seen in training SOTA models with constrained GPU resources (DeepSeek), I...