All of yanni kyriacos's Comments + Replies

One axis where Capabilities and Safety people pull apart the most, with high consequences, is "asking for forgiveness instead of permission."

1) Safety people need to get out there and start making stuff without waiting for their high-prestige allies to nod first

2) Capabilities people need to consider more seriously that they're building something many people simply do not want

Solving the AGI alignment problem demands a herculean level of ambition, far beyond what we're currently bringing to bear. Dear Reader, grab a pen or open a Google Doc right now and answer these questions:

1. What would you do right now if you became 5x more ambitious?

2. If you believe we all might die soon, why aren't you doing the ambitious thing?

One idea: Create a LinkedIn advertiser account and segment by Industry and/or Job Title and/or Job Function.

SASH isn't official (we're waiting on funding).

Here is TARA :)

https://www.lesswrong.com/posts/tyGxgvvBbrvcrHPJH/apply-to-be-a-ta-for-tara

I think it will take less than 3 years for the equivalent of 1,000,000 people to get laid off.

If transformative AI is defined by its societal impact rather than its technical capabilities (i.e. TAI as a process, not a technology), we already have what is needed. The real question isn't about waiting for GPT-X or capability Y - it's about imagining what happens when current AI is deployed 1000x more widely in just a few years. This presents EXTREMELY different problems to solve from a governance and advocacy perspective.

E.g. 1: compute governance might no longer be a good intervention

E.g. 2: "Pause" can't just be about pausing model development. It should also be about pausing implementation across use cases

Yep

E.g. 50,000 travel agents lose their jobs in 25/26

That's pretty high. What use cases are you imagining as the most likely?

I spent 8 years working in strategy departments for ad agencies. If you're interested in the science behind brand tracking, I recommend you check out the Ehrenberg-Bass Institute's work on Category Entry Points: https://marketingscience.info/research-services/identifying-and-prioritising-category-entry-points/

What is your AI Capabilities Red Line Personal Statement? It should read something like "when AI can do X in Y way, then I think we should be extremely worried / advocate for a Pause*".

I think it would be valuable if people started doing this; people can't feel when they're on an exponential, so it's likely we will have powerful AI creep up on us.

@Greg_Colbourn just posted this and I have an intuition that people are going to read it and say "while it can do Y it still can't do X"

*in the case you think a Pause is ever optimal.

I'd like to see research that uses Jonathan Haidt's Moral Foundations research and the AI risk repository to forecast whether there will soon be a moral backlash / panic against frontier AI models.

Ideas below from Claude:

# Conservative Moral Concerns About Frontier AI Through Haidt's Moral Foundations

## Authority/Respect Foundation

### Immediate Concerns

- AI systems challenging traditional hierarchies of expertise and authority

- Undermining of traditional gatekeepers in media, education, and professional fields

- Potential for AI to make decisions traditiona...

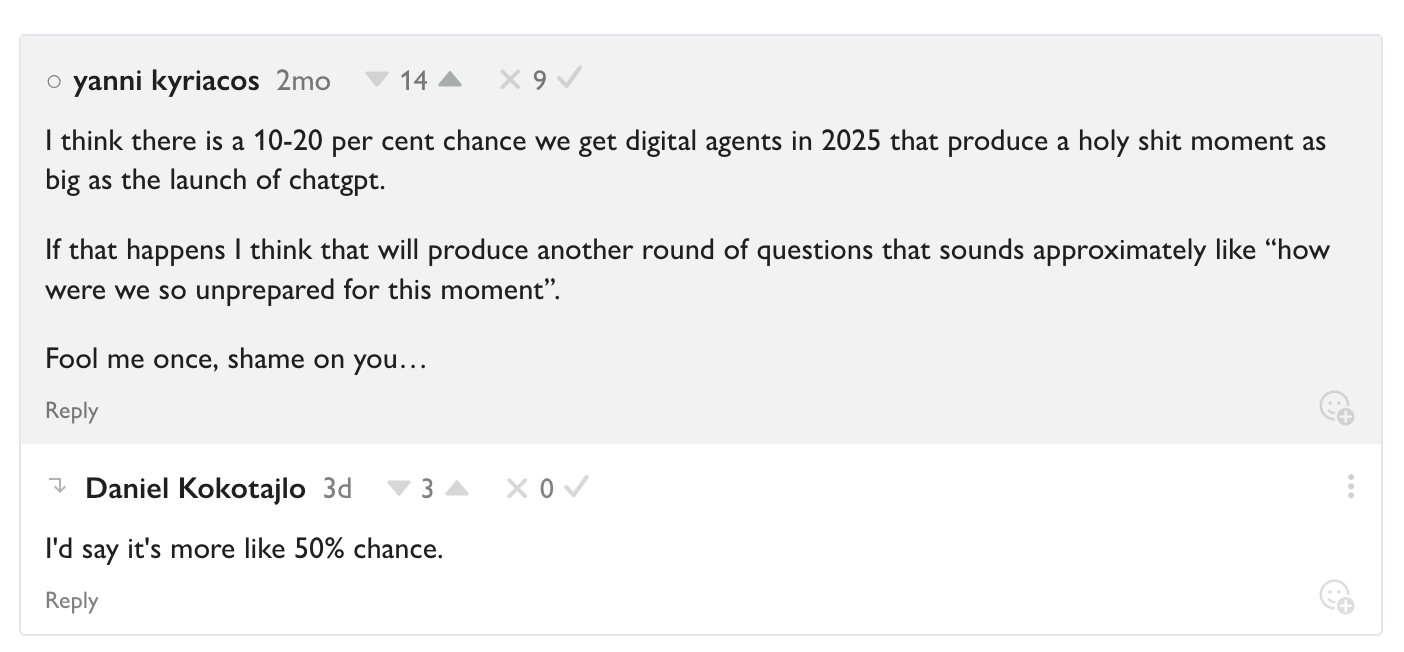

I think there is a 10-20 per cent chance we get digital agents in 2025 that produce a holy shit moment as big as the launch of ChatGPT.

If that happens I think that will produce another round of questions that sound approximately like “how were we so unprepared for this moment”.

Fool me once, shame on you…

I am 90% sure that most AI Safety talent aren't thinking hard enough about Neglectedness. The industry is so nascent that you could look at 10 analogous industries, see what processes or institutions are valuable and missing, and build an organisation around the highest impact one.

The highest impact job ≠ the highest impact opportunity for you!

AI Safety (in the broadest possible sense, i.e. including ethics & bias) is going to be taken very seriously soon by Government decision makers in many countries. But without high quality talent staying in their home countries (i.e. not moving to UK or US), there is a reasonable chance that x/c-risk won’t be considered problems worth trying to solve. X/c-risk sympathisers need seats at the table. IMO local AIS movement builders should be thinking hard about how to keep talent local (this is an extremely hard problem to solve).

Big AIS news imo: “The initial members of the International Network of AI Safety Institutes are Australia, Canada, the European Union, France, Japan, Kenya, the Republic of Korea, Singapore, the United Kingdom, and the United States.”

H/T @shakeel

I beta tested a new movement building format last night: online networking. It seems to have legs.

V quick theory of change:

> problem it solves: not enough people in AIS across Australia (especially) and New Zealand are meeting each other (this is bad for the movement and people's impact).

> we need to brute force serendipity to create collabs.

> this initiative has v low cost

quantitative results:

> I purposefully didn't market it hard because it was a beta. I literally got more people than I hoped for

> 22 RSVPs and 18 attendees

> this s...

Is anyone in the AI Governance-Comms space working on what public outreach should look like if lots of jobs start getting automated in < 3 years?

I point to Travel Agents a lot not to pick on them, but because they're salient and there are lots of them. I think there is a reasonable chance in 3 years that industry loses 50% of its workers (3 million globally).

People are going to start freaking out about this. Which means we're in "December 2019" all over again, and we all remember how bad Government Comms were during COVID.

Now is the time to start ...

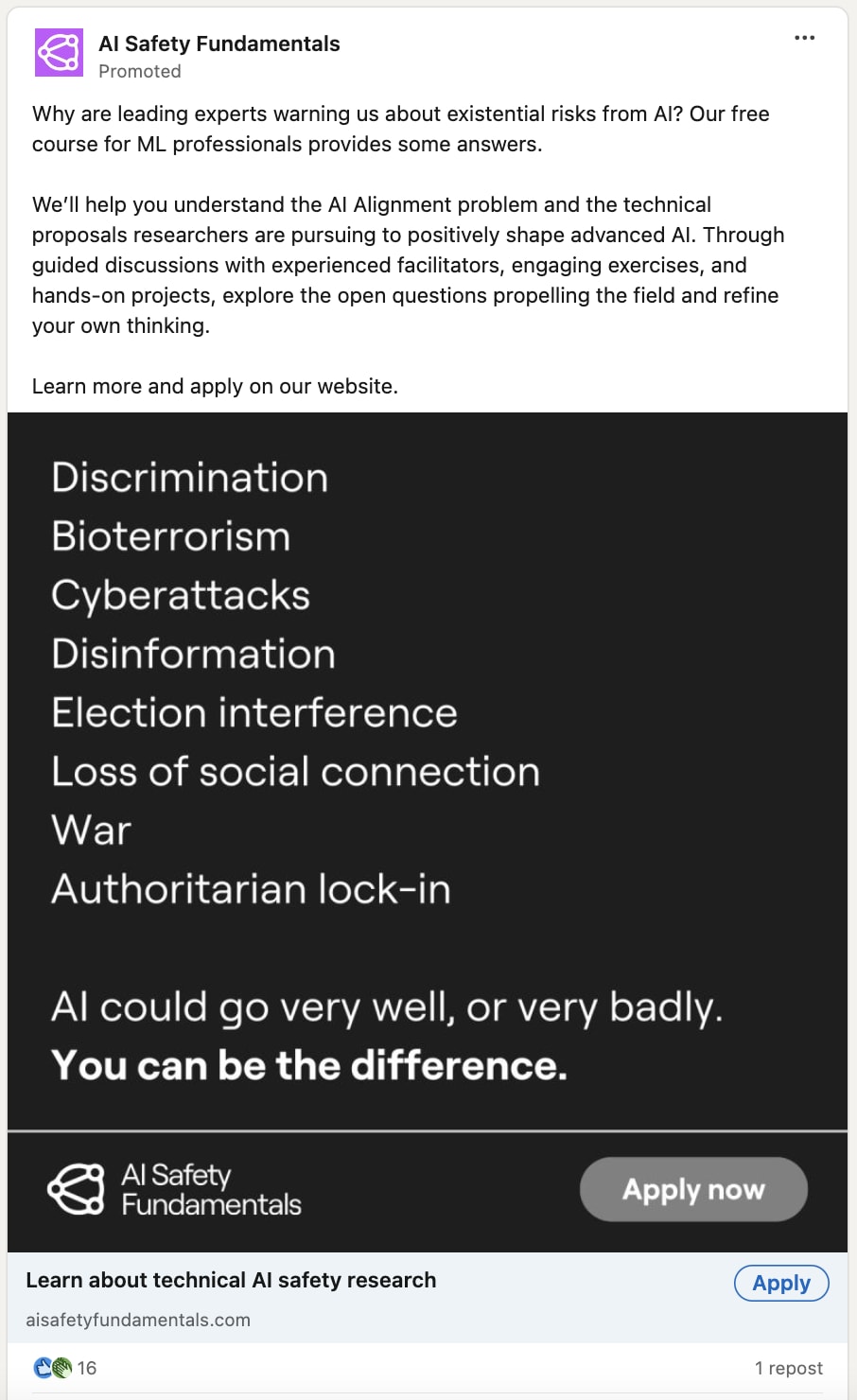

I should have been clearer - I like the fact that they're trying to create a larger tent and (presumably) win ethics people over. There are also many reasons not to like the ad. I would also guess that they have an automated campaign running with several (maybe dozens) of pieces of creative. Without seeing their analytics it would be impossible to know which performs the best, but it wouldn't surprise me if it was this one (lists work).

I really like this ad strategy from BlueDot. 5 stars.

90% of the message is still x / c-risk focussed, but by including "discrimination" and "loss of social connection" they're clearly trying to either (or both);

- create a big tent

- nudge people that are in "ethics" but sympathetic to x / c-risk into x / c-risk

(prediction: 4000 disagreement Karma)

Huh, this is really a surprisingly bad ad. None of the things listed are things that IMO have much to do with why AI is uniquely dangerous (they are basically all misuse-related), and also not really what any of the AI Safety fundamentals curriculum is about (and as such is false advertising). Like, I don't think the AI Safety fundamentals curriculum has anything that will help with election interference or loss of social connection or less likelihood of war or discrimination?

Thanks for the heads up! Should be there now :)

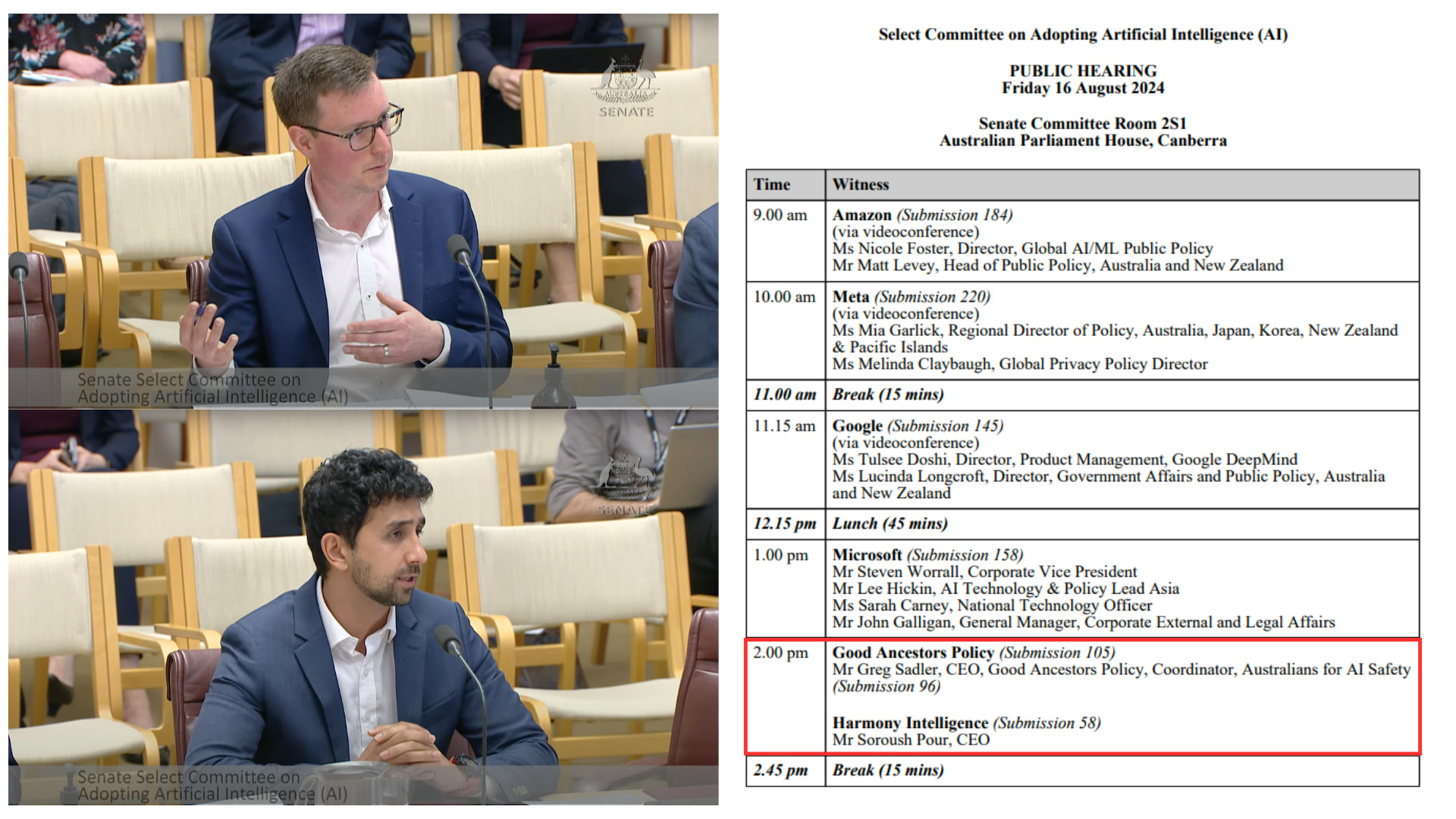

Extremely proud and excited to watch Greg Sadler (CEO of Good Ancestors Policy) and Soroush Pour (Co-Founder of Harmony Intelligence) speak to the Australian Federal Senate Select Committee on Artificial Intelligence. This is a big moment for the local movement. Also, check out who they ran after.

I think how delicately you treat your personal Overton Window should also depend on your timelines.

My instinct as to why people don't find it a compelling argument;

- They don't have short timelines like me, and therefore chuck it out completely

- They are struggling to imagine a hostile public response to 15% unemployment rates

- Copium

I always get downvoted when I suggest that (1) if you have short timelines and (2) you decide to work at a Lab then (3) people might hate you soon and your employment prospects could be damaged.

What is something obvious I'm missing here?

One thing I won't find convincing is someone pointing at the Finance industry post GFC as a comparison.

I believe the scale of unemployment could be much higher. E.g. 5% ->15% unemployment in 3 years.

Thanks for the feedback! I had a feeling this is where I'd land :|

I’d like to quickly skill up on ‘working with people that have a kind of neurodivergence’.

This means;

- understanding their unique challenges

- how I can help them get the best from/for themselves

- but I don’t want to sink more than two hours into this

Can anyone recommend some online content for me please?

Hey thanks for your thoughts! I'm not sure what you mean by "obvious business context", but I'm either meeting friends / family (not business) or colleagues / community members (business).

Is there a third group that I'm missing?

If you're actually talking about the second group, I wonder how much your and their productivity would be improved if you were less lax about it?

It just occurred to me that maybe posting this isn't helpful. And maybe net negative. If I get enough feedback saying it is then I'll happily delete.

I don't know how to say this in a way that won't come off harsh, but I've had meetings with > 100 people in the last 6 months in AI Safety and I've been surprised how poor the industry standard is for people:

- Planning meetings (writing emails that communicate what the meeting will be about, its purpose, my role)

- Turning up to scheduled meetings (like literally, showing up at all)

- Turning up on time

- Turning up prepared (i.e. with informed opinions on what we're going to discuss)

- Turning up with clear agendas (if they've organised the meeting)

- Runn...

About a year and a half ago I listened to Loch Kelly for the first time. I instantly glimpsed the nondual nature of reality. Besides becoming a parent, it is the most important thing that has ever happened to me. The last year and a half has been a process of stabilising this glimpse and it is going extremely well. I relate deeply to the things that Sasha is describing here. Thanks for sharing ❤️

The Australian Federal Government is setting up an "AI Advisory Body" inside the Department of Industry, Science and Resources.

IMO that means Australia has an AISI in:

9 months (~ 30% confidence)

12 months (~ 50%)

18 months (~ 80%)

Something bouncing around my head recently ... I think I agree with the notion that "you can't solve a problem at the level it was created".

A key point here is the difference between "solving" a problem and "minimising its harm".

- Solving a problem = engaging with a problem by going up a level from the one at which it was created

- Minimising its harm = trying to solve it at the level it was created

Why is this important? Because I think EA and AI Safety have historically focussed on (and have their respective strengths in) harm-minimisation.

This applies obviously to the micro. ...

Hi! I think in Sydney we're ~ 3 seats short of critical mass, so I am going to reassess the viability of a community space in 5-6 months :)

It sounds like you're saying you feel quite comfortable with how long you wait, which is nice to hear :)

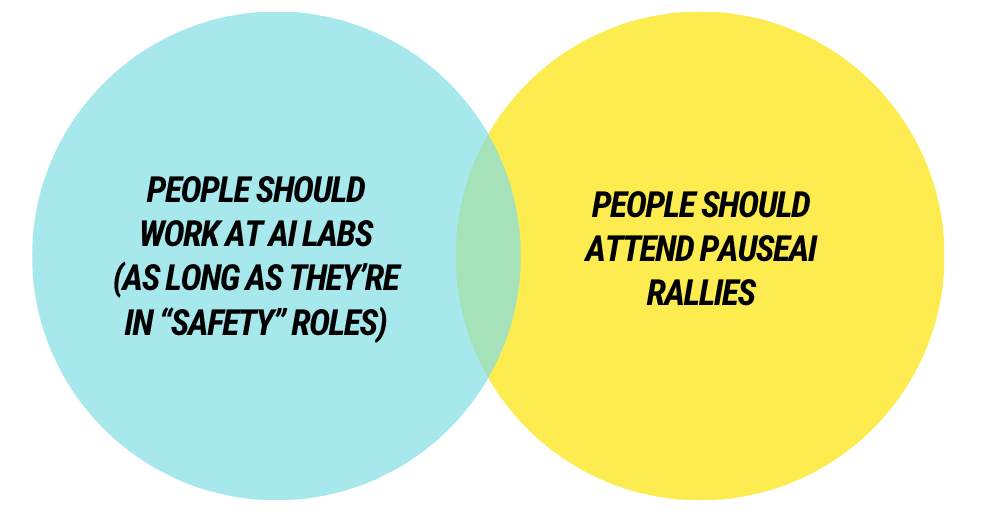

I'm pretty confident that a majority of the population will soon have very negative attitudes towards big AI labs. I'm extremely unsure about what impact this will have on the AI Safety and EA communities (because we work with those labs in all sorts of ways). I think this could increase the likelihood of "Ethics" advocates becoming much more popular, but I don't know if this necessarily increases catastrophic or existential risks.

Not sure if this type of concern has reached the meta yet, but if someone approached me asking for career advice, tossing up whether to apply for a job at a big AI lab, I would let them know that it could negatively affect their career prospects down the track because so many people now perceive such a move as either morally wrong or just plain wrong-headed. And those perceptions might only increase over time. I am not making a claim here beyond saying this should be a career advice consideration.

More thoughts on "80,000 hours should remove OpenAI from the Job Board"

- - - -

A broadly robust heuristic I walk around with in my head is "the more targeted the strategy, the more likely it will miss the target".

Its origin is from when I worked in advertising agencies, where TV ads for car brands all looked the same because they needed to reach millions of people, while email campaigns were highly personalised.

The thing is, if you get hit with a tv ad for a car, worst case scenario it can't have a strongly negative effect because of its generic...

I think an assumption 80k makes is something like "well if our audience thinks incredibly deeply about the Safety problem and what it would be like to work at a lab and the pressures they could be under while there, then we're no longer accountable for how this could go wrong. After all, we provided vast amounts of information on why and how people should do their own research before making such a decision".

The problem is, that is not how most people make decisions. No matter how much rational thinking is promoted, we're first and foremost emotional ...

I was around a few years ago when there were already debates about whether 80k should recommend OpenAI jobs. And that's before any of the fishy stuff leaked out, and they were stacking up cool governance commitments like becoming a capped-profit and having a merge-and-assist-clause.

And, well, it sure seems like a mistake in hindsight how much advertising they got.

A piece of career advice I've given a few times recently to people in AI Safety, which I thought worth repeating here, is that AI Safety is so nascent a field that the following strategy could be worth pursuing:

1. Write your own job description (whatever it is that you're good at / brings joy into your life).

2. Find organisations that you think need that job but don't yet have it. This role should solve a problem they either don't know they have or haven't figured out how to solve.

3. Find the key decision maker and email them. Explain the (their) pro...

When I was ~ 5 I saw a homeless person on the street. I asked my dad where his home was. My dad said "he doesn't have a home". I burst into tears.

I'm 35 now and reading this post makes me want to burst into tears again. I appreciate you writing it though.

I'm pleased to announce the launch of a brand new Facebook group dedicated to AI Safety in Wellington: AI Safety Wellington (AISW). This is your local hub for connecting with others passionate about ensuring a safe and beneficial future with artificial intelligence / reducing x-risk. To kick things off, we're hosting a super casual meetup where you can:

- Meet & Learn: Connect with Wellington's AI Safety community.

- Chat & Collaborate: Discuss career paths, upcoming events, and

AI Safety Monthly Meetup - Brief Impact Analysis

For the past 8 months, we've (AIS ANZ) been running consistent community meetups across 5 cities (Sydney, Melbourne, Brisbane, Wellington and Canberra). Each meetup averages about 10 attendees with about a 50% new-participant rate, driven primarily through LinkedIn and email outreach. I estimate we're driving unique AI Safety-related connections for around $6.

Volunteer Meetup Coordinators organise the bookings, pay for the Food & Beverage (I reimburse them after the fact) and greet attendees. This ini...