Cross-posted from the MIRI blog.

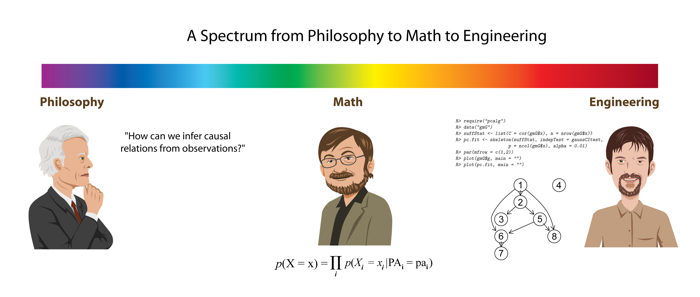

For centuries, philosophers wondered how we could learn what causes what. Some argued it was impossible, or possible only via experiment. Others kept hacking away at the problem, clarifying ideas like counterfactual and probability and correlation by making them more precise and coherent.

Then, in the 1990s, a breakthrough: Judea Pearl and others showed that, in principle, we can sometimes infer causal relations from data even without experiment, via the mathematical machinery of probabilistic graphical models.

Next, engineers used this mathematical insight to write software that can, in seconds, infer causal relations from a data set of observations.

Across the centuries, researchers had toiled away, pushing our understanding of causality from philosophy to math to engineering.

And so it is with Friendly AI research. Current progress on each sub-problem of Friendly AI lies somewhere on a spectrum from philosophy to math to engineering.

We began with some fuzzy philosophical ideas of what we want from a Friendly AI (FAI). We want it to be benevolent and powerful enough to eliminate suffering, protect us from natural catastrophes, help us explore the universe, and otherwise make life awesome. We want FAI to allow for moral progress, rather than immediately reshape the galaxy according to whatever our current values happen to be. We want FAI to remain beneficent even as it rewrites its core algorithms to become smarter and smarter. And so on.

Small pieces of this philosophical puzzle have been broken off and turned into math, e.g. Pearlian causal analysis and Solomonoff induction. Pearl's math has since been used to produce causal inference software that can be run on today's computers, whereas engineers have thus far succeeded in implementing (tractable approximations of) Solomonoff induction only for very limited applications.

Toy versions of two pieces of the "stable self-modification" problem were transformed into math problems in de Blanc (2011) and Yudkowsky & Herreshoff (2013), though this was done to enable further insight via formal analysis, not to assert that these small pieces of the philosophical problem had been solved to the level of math.

Thanks to Patrick LaVictoire and other MIRI workshop participants,1 Douglas Hofstadter's FAI-relevant philosophical idea of "superrationality" seems to have been, for the most part, successfully transformed into math, and a bit of the engineering work has also been done.

I say "seems" because, while humans are fairly skilled at turning math into feats of practical engineering, we seem to be much less skilled at turning philosophy into math, without leaving anything out. For example, some very sophisticated thinkers have claimed that "Solomonoff induction solves the problem of inductive inference," or that "Solomonoff has successfully invented a perfect theory of induction." And indeed, it certainly seems like a truly universal induction procedure. However, it turns out that Solomonoff induction doesn't fully solve the problem of inductive inference, for relatively subtle reasons.2

Unfortunately, philosophical mistakes like this could be fatal when humanity builds the first self-improving AGI (Yudkowsky 2008).3 FAI-relevant philosophical work is, as Nick Bostrom says, "philosophy with a deadline."

1 And before them, Moshe Tennenholtz.

2 Yudkowsky plans to write more about how to improve on Solomonoff induction, later.

3 This is a specific instance of a problem Peter Ludlow described like this: "the technological curve is pulling away from the philosophy curve very rapidly and is about to leave it completely behind."

Guy on the right is Markus Kalisch.

Not sure about the one on the left - outside chance it's Bertrand Russell but probably not.

Give the man some points!

Guy on the right is indeed Markus Kalisch.

The guy on the left is not Bertrand Russell.