I'm delighted that Anthropic has formally committed to our responsible scaling policy. We're also sharing more detail about the Long-Term Benefit Trust, which is our attempt to fine-tune our corporate governance to address the unique challenges and long-term opportunities of transformative AI.

Today, we’re publishing our Responsible Scaling Policy (RSP) – a series of technical and organizational protocols that we’re adopting to help us manage the risks of developing increasingly capable AI systems.

As AI models become more capable, we believe that they will create major economic and social value, but will also present increasingly severe risks. Our RSP focuses on catastrophic risks – those where an AI model directly causes large scale devastation. Such risks can come from deliberate misuse of models (for example use by terrorists or state actors to create bioweapons) or from models that cause destruction by acting autonomously in ways contrary to the intent of their designers.

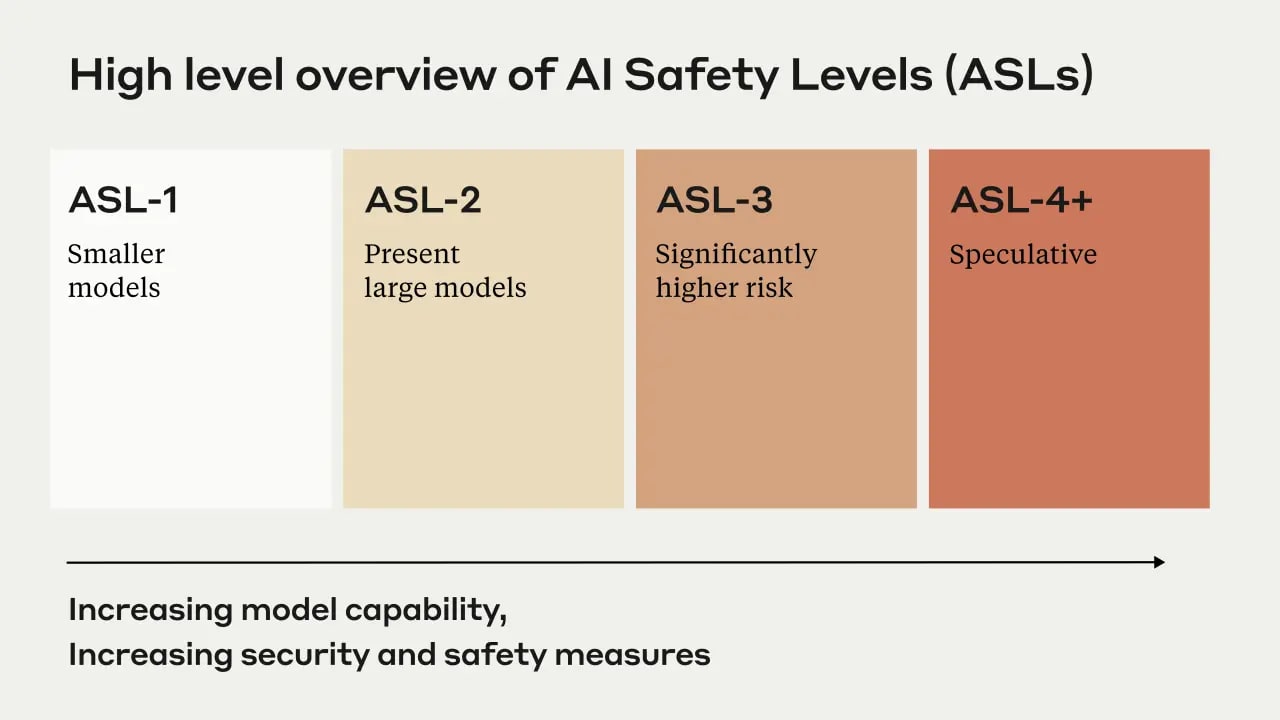

Our RSP defines a framework called AI Safety Levels (ASL) for addressing catastrophic risks, modeled loosely after the US government’s biosafety level (BSL) standards for handling of dangerous biological materials. The basic idea is to require safety, security, and operational standards appropriate to a model’s potential for catastrophic risk, with higher ASL levels requiring increasingly strict demonstrations of safety.

A very abbreviated summary of the ASL system is as follows:

- ASL-1 refers to systems which pose no meaningful catastrophic risk, for example a 2018 LLM or an AI system that only plays chess.

- ASL-2 refers to systems that show early signs of dangerous capabilities – for example ability to give instructions on how to build bioweapons – but where the information is not yet useful due to insufficient reliability or not providing information that e.g. a search engine couldn’t. Current LLMs, including Claude, appear to be ASL-2.

- ASL-3 refers to systems that substantially increase the risk of catastrophic misuse compared to non-AI baselines (e.g. search engines or textbooks) OR that show low-level autonomous capabilities.

- ASL-4 and higher (ASL-5+) is not yet defined as it is too far from present systems, but will likely involve qualitative escalations in catastrophic misuse potential and autonomy.

The definition, criteria, and safety measures for each ASL level are described in detail in the main document, but at a high level, ASL-2 measures represent our current safety and security standards and overlap significantly with our recent White House commitments. ASL-3 measures include stricter standards that will require intense research and engineering effort to comply with in time, such as unusually strong security requirements and a commitment not to deploy ASL-3 models if they show any meaningful catastrophic misuse risk under adversarial testing by world-class red-teamers (this is in contrast to merely a commitment to perform red-teaming). Our ASL-4 measures aren’t yet written (our commitment is to write them before we reach ASL-3), but may require methods of assurance that are unsolved research problems today, such as using interpretability methods to demonstrate mechanistically that a model is unlikely to engage in certain catastrophic behaviors.

We have designed the ASL system to strike a balance between effectively targeting catastrophic risk and incentivising beneficial applications and safety progress. On the one hand, the ASL system implicitly requires us to temporarily pause training of more powerful models if our AI scaling outstrips our ability to comply with the necessary safety procedures. But it does so in a way that directly incentivizes us to solve the necessary safety issues as a way to unlock further scaling, and allows us to use the most powerful models from the previous ASL level as a tool for developing safety features for the next level.[1] If adopted as a standard across frontier labs, we hope this might create a “race to the top” dynamic where competitive incentives are directly channeled into solving safety problems.

From a business perspective, we want to be clear that our RSP will not alter current uses of Claude or disrupt availability of our products. Rather, it should be seen as analogous to the pre-market testing and safety feature design conducted in the automotive or aviation industry, where the goal is to rigorously demonstrate the safety of a product before it is released onto the market, which ultimately benefits customers.

Anthropic’s RSP has been formally approved by its board and changes must be approved by the board following consultations with the Long Term Benefit Trust. In the full document we describe a number of procedural safeguards to ensure the integrity of the evaluation process.

However, we want to emphasize that these commitments are our current best guess, and an early iteration that we will build on. The fast pace and many uncertainties of AI as a field imply that, unlike the relatively stable BSL system, rapid iteration and course correction will almost certainly be necessary.

The full document can be read here. We hope that it provides useful inspiration to policymakers, third party nonprofit organizations, and other companies facing similar deployment decisions.

We thank ARC Evals for their key insights and expertise supporting the development of our RSP commitments, particularly regarding evaluations for autonomous capabilities. We found their expertise in AI risk assessment to be instrumental as we designed our evaluation procedures. We also recognize ARC Evals' leadership in originating and spearheading the development of their broader ARC Responsible Scaling Policy framework, which inspired our approach.. [italics in original]

I'm also including a short excerpt from the Long-Term Benefit Trust post, which I suggest reading in full. You may also wish to (re-)read Anthropic's core views on AI safety as background.

The Anthropic Long-Term Benefit Trust (LTBT, or Trust) is an independent body comprising five Trustees with backgrounds and expertise in AI safety, national security, public policy, and social enterprise. The Trust’s arrangements are designed to insulate the Trustees from financial interest in Anthropic and to grant them sufficient independence to balance the interests of the public alongside the interests of Anthropic’s stockholders. ... the Trust will elect a majority of the board within 4 years. ...

The initial Trustees are:

- Jason Matheny: CEO of the RAND Corporation

- Kanika Bahl: CEO & President of Evidence Action

- Neil Buddy Shah: CEO of the Clinton Health Access Initiative (Chair)

- Paul Christiano: Founder of the Alignment Research Center

- Zach Robinson: Interim CEO of Effective Ventures US

The Anthropic board chose these initial Trustees after a year-long search and interview process to surface individuals who exhibit thoughtfulness, strong character, and a deep understanding of the risks, benefits, and trajectory of AI and its impacts on society. Trustees serve one-year terms and future Trustees will be elected by a vote of the Trustees. We are honored that this founding group of Trustees chose to accept their places on the Trust, and we believe they will provide invaluable insight and judgment.

As a general matter, Anthropic has consistently found that working with frontier AI models is an essential ingredient in developing new methods to mitigate the risk of AI. ↩︎

Anthropic releasing their RSP was an important change in the AI safety landscape. The RSP was likely a substantial catalyst for policies like RSPs—which contain if-then commitments and more generally describe safety procedures—becoming more prominent. In particular, OpenAI now has a beta Preparedness Framework, Google DeepMind has a Frontier Safety Framework but there aren't any concrete publicly-known policies yet, many companies agreed to the Seoul commitments which require making a similar policy, and SB-1047 required safety and security protocols.

However, I think the way Anthropic presented their RSP was misleading in practice (at least misleading to the AI safety community) in that it neither strictly requires pausing nor do I expect Anthropic to pause until they have sufficient safeguards in practice. I discuss why I think pausing until sufficient safeguards are in place is unlikely, at least in timelines as short as Dario's (Dario Amodei is the CEO of Anthropic), in my earlier post.

I also have serious doubts about whether the LTBT will serve as a meaningful check to ensure Anthropic serves the interests of the public. The LTBT has seemingly done very little thus far—appointing only 1 board member despite being able to appoint 3/5 of the board members (a majority) and the LTBT is down to only 3 members. And none of its members have technical expertise related to AI. (The LTBT trustees seem altruistically motivated and seem like they would be thoughtful about questions about how to widely distribute benefits of AI, but this is different from being able to evaluate whether Anthropic is making good decisions with respect to AI safety.)

Additionally, in this article, Anthropic's general counsel Brian Israel seemingly claims that the board probably couldn't fire the CEO (currently Dario) if the board did this despite believing it would greatly reduce profits to shareholders[1]. Almost all of a board's hard power comes from being able to fire the CEO, so if this claim were to be true, that would greatly undermine the ability of the board (and the LTBT which appoints the board) to ensure Anthropic, a public benefit corporation, serves the interests of the public in cases where this conflicts with shareholder interests. In practice, I think this claim which was seemingly made by the general counsel of Anthropic is likely false and, because Anthropic is a public benefit corporation, the board could fire the CEO and win in court even if they openly thought this would massively reduce shareholder value (so long as the board could show they used a reasonable process to consider shareholder interests and decided that the public interest outweighed in this case). Regardless, this article is evidence that the LTBT won't provide a meaningful check on Anthropic in practice.

Misleading communication about the RSP

On the RSP, this post says:

While I think this exact statement might be technically true, people have sometimes interpreted this quote and similar statements as a claim that Anthropic would pause until their safety measures sufficed for more powerful models. I think Anthropic isn't likely to do this; in particular:

Anthropic and Anthropic employees often use similar language to this quote when describing the RSP, potentially contributing to a poor sense of what will happen. My impression is that lots of Anthropic employees just haven't thought about this, and believe that Anthropic will behave much more cautiously than I think is plausible (and more cautiously than I think is prudent given other actors).

Other companies have worse policies and governance

While I focus on Anthropic in this comment, it is worth emphasizing that the policies and governance of other AI companies seem substantially worse. xAI, Meta and DeepSeek have no public safety policies at all, though they have said they will make a policy like this. Google DeepMind has published that they are working on making a frontier safety framework with commitments, but thus far they have just listed potential threat models corresponding to model capabilities and security levels without committing to security for specific capability levels. OpenAI has the beta preparedness framework, but the current security requirements seem inadequate and the required mitigations and assessment process for this is unspecified other than saying that the post-mitigation risk must be medium or below prior to deployment and high or below prior to continued development. I don't expect OpenAI to keep the spirit of this commitment in short timelines. OpenAI, Google DeepMind, xAI, Meta, and DeepSeek all have clearly much worse governance than Anthropic.

What could Anthropic do to address my concerns?

Given these concerns about the RSP and the LTBT, what do I think should happen? First, I'll outline some lower cost measures that seem relatively robust and then I'll outline more expensive measures that don't seem obviously good (at least not obviously good to strongly prioritize) but would be needed to make the situation no longer be problematic.

Lower cost measures:

Unfortunately, these measures aren't straightforwardly independently verifiable based on public knowledge. As far as I know, some of these measures could already be in place.

More expensive measures:

Likely objections and my responses

Here are some relevant objections to my points and my responses:

The article says: "However, even the board members who are selected by the LTBT owe fiduciary obligations to Anthropic's stockholders, Israel says. This nuance means that the board members appointed by the LTBT could probably not pull off an action as drastic as the one taken by OpenAI's board members last November. It's one of the reasons Israel was so confidently able to say, when asked last Thanksgiving, that what happened at OpenAI could never happen at Anthropic. But it also means that the LTBT ultimately has a limited influence on the company: while it will eventually have the power to select and remove a majority of board members, those members will in practice face similar incentives to the rest of the board." This indicates that the board couldn't fire the CEO if they thought this would greatly reduce profits to shareholders though it is somewhat unclear. ↩︎

Making more statements would also be fine! I wouldn't mind if there were just clarifying statements even if the original statement had some problems.

(To try to reduce the incentive for less statements, I criticized other labs for not having policies at all.)