When people complain about LLMs doing nothing more than interpolation, they're mixing up two very different ideas: interpolation as intersecting every point in the training data, and interpolation as predicting behavior in-domain rather than out-of-domain.

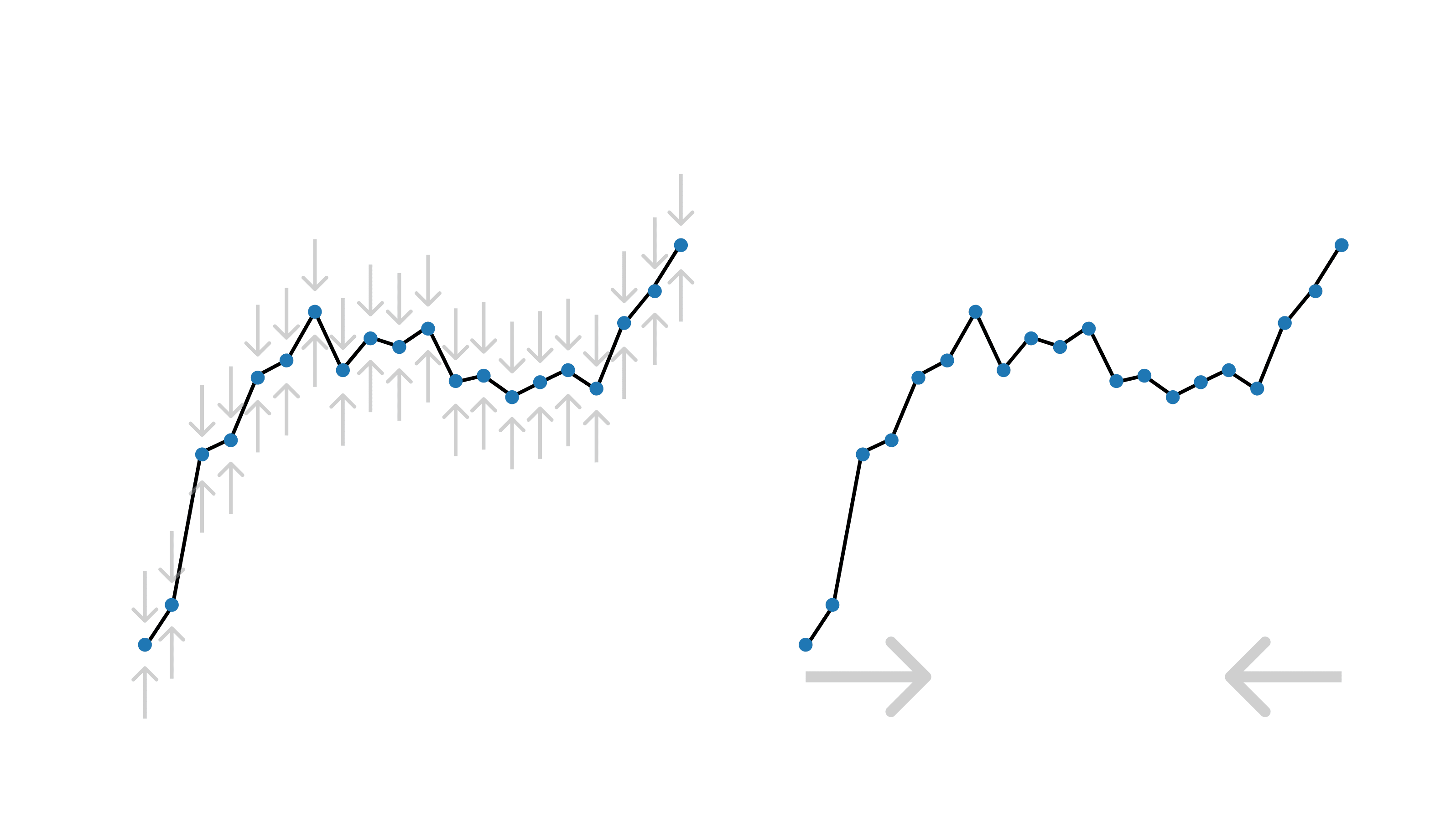

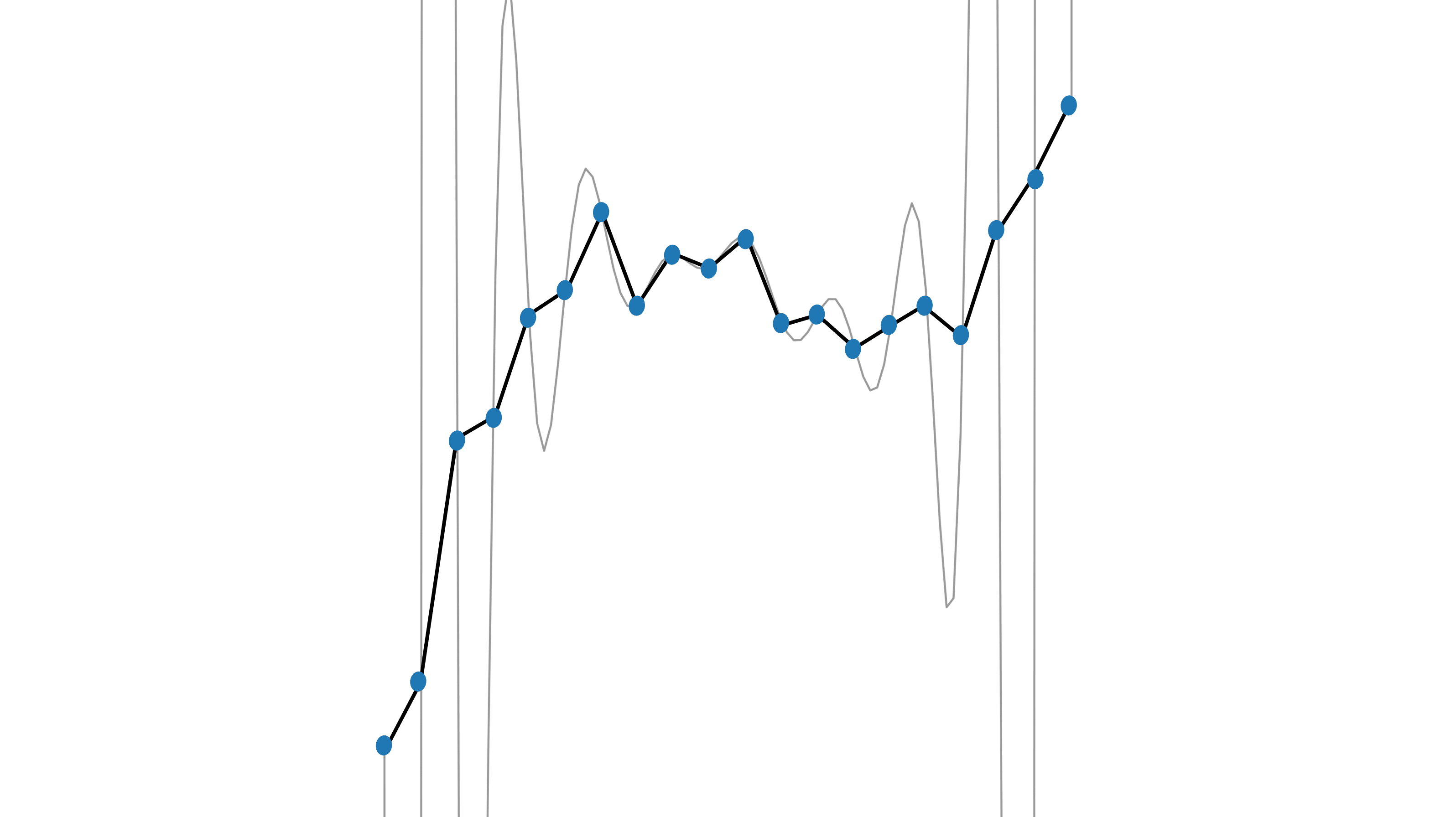

Start with the first sense. Interpolation-as-intersecting isn't inherently good or bad; it's all about how you do it. Just compare polynomial interpolation to piecewise-linear interpolation (the thing that ReLUs do).

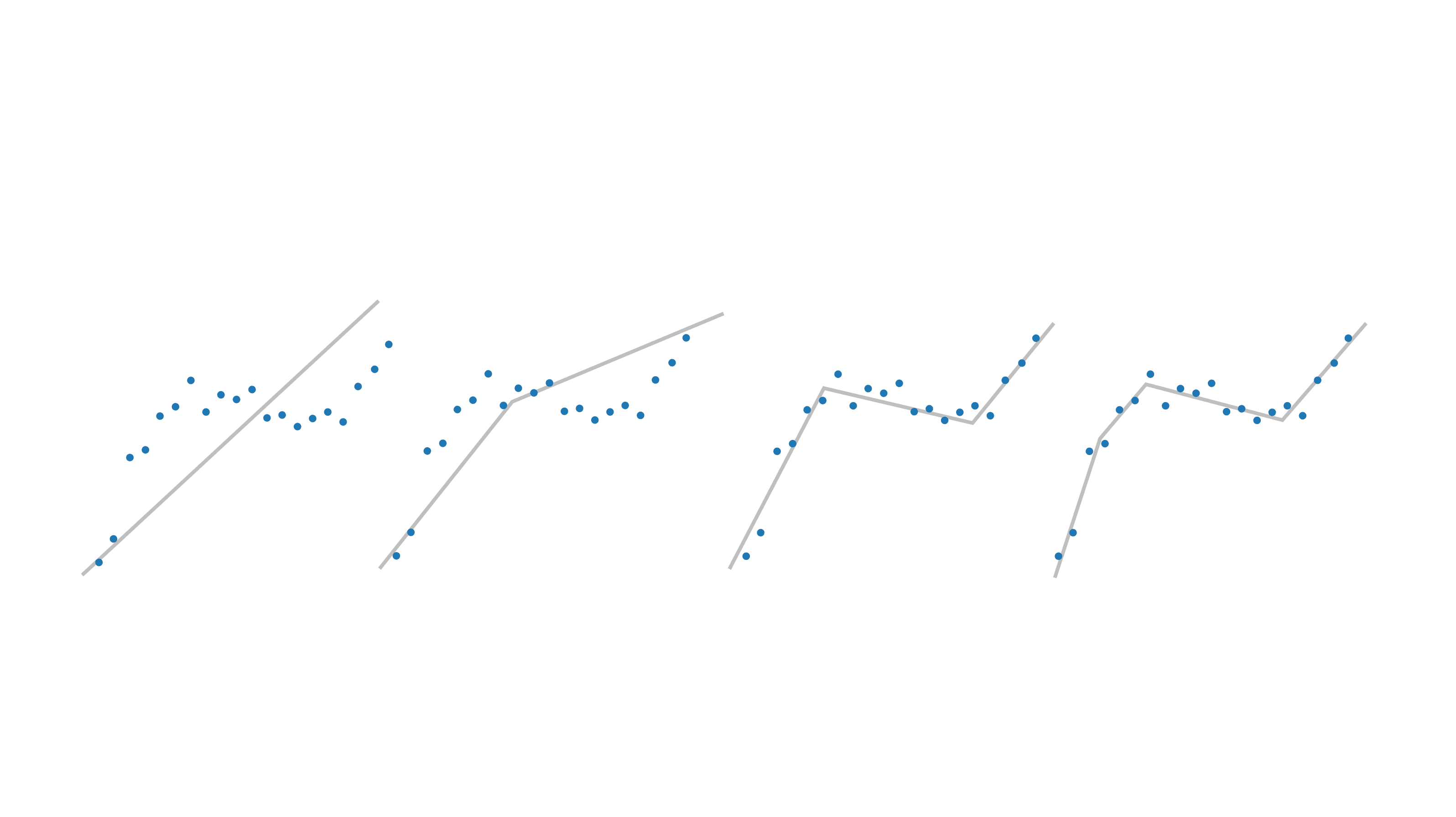

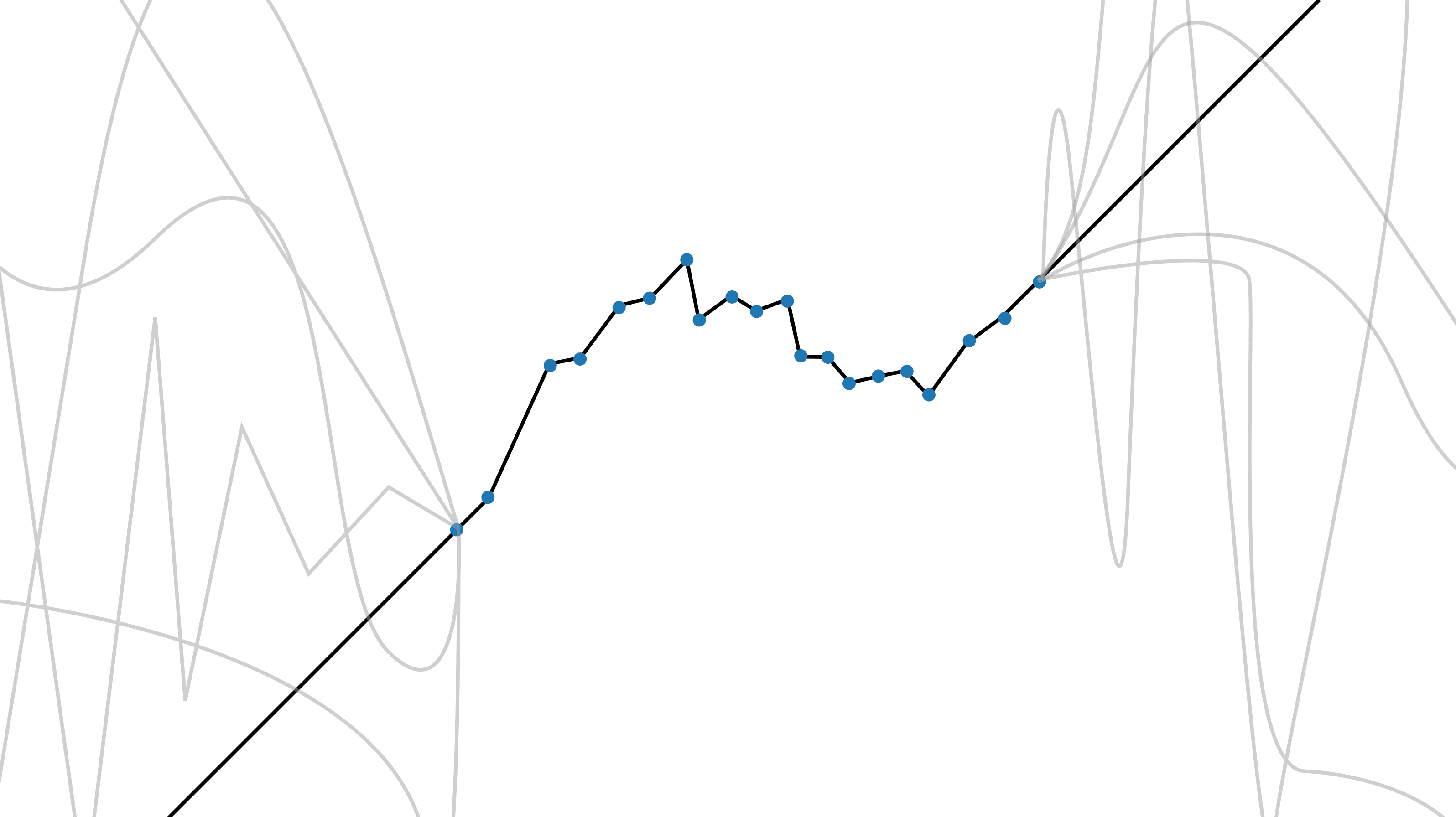
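To make the comparison concrete, here is a minimal sketch in NumPy. The target function (Runge's function), the 11 equally spaced training points, and all variable names are my own assumptions for illustration; they are not taken from the figures above. Both fits pass through every training point, but they behave very differently in between.

```python
import numpy as np
from numpy.polynomial import Polynomial


def runge(x):
    """Runge's function, a standard stress test for polynomial interpolation."""
    return 1.0 / (1.0 + 25.0 * x**2)


# 11 equally spaced "training" points on [-1, 1] (an assumed toy setup).
x_train = np.linspace(-1.0, 1.0, 11)
y_train = runge(x_train)

# A degree n-1 polynomial through n points intersects every one of them.
poly = Polynomial.fit(x_train, y_train, deg=len(x_train) - 1)

# Dense grid between the training points to measure the in-between behavior.
x_test = np.linspace(-1.0, 1.0, 1001)
y_true = runge(x_test)

# Worst-case error of each interpolant on the dense grid.
poly_err = np.max(np.abs(poly(x_test) - y_true))
lin_err = np.max(np.abs(np.interp(x_test, x_train, y_train) - y_true))

print(f"max error, polynomial:       {poly_err:.3f}")
print(f"max error, piecewise-linear: {lin_err:.3f}")
```

The polynomial oscillates wildly near the ends of the interval, while the piecewise-linear fit stays close to the target everywhere. Same training points, same zero training error, very different interpolants.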

Neural networks (NNs) are biased towards fitting simple piecewise-linear functions, which is (locally) the least biased way to interpolate. The simplest function that passes through two points is the straight line.

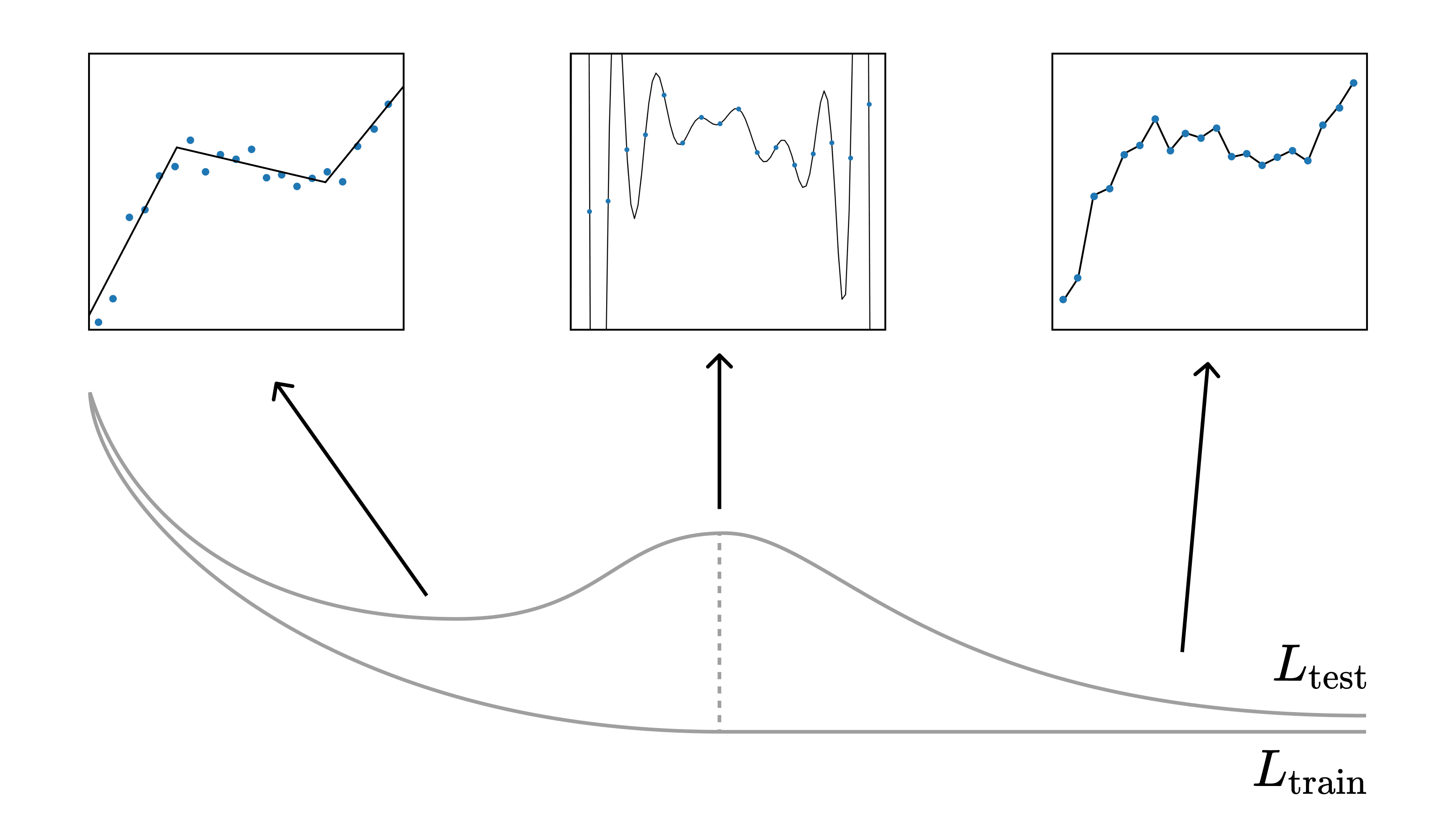
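As a rough illustration of why ReLUs and piecewise-linear interpolation go together, the sketch below hand-builds a one-hidden-layer ReLU network that connects a set of 1-D points with straight lines. The weights are set by hand rather than trained, and the function name `relu_interpolant` and the knot values are hypothetical; this only shows that such a network can represent the interpolant, not that training finds exactly this solution.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def relu_interpolant(x_knots, y_knots):
    """Build a one-hidden-layer ReLU net that linearly interpolates the knots.

    Each hidden unit relu(x - x_i) switches on at one knot; its output weight
    is the change in slope at that knot.
    """
    slopes = np.diff(y_knots) / np.diff(x_knots)
    # First unit carries the first slope; later units carry slope changes.
    weights = np.concatenate(([slopes[0]], np.diff(slopes)))
    biases = x_knots[:-1]  # kink locations (the last knot needs no unit)

    def f(x):
        x = np.asarray(x, dtype=float)
        hidden = relu(x[..., None] - biases)  # shape (..., n_hidden)
        return y_knots[0] + hidden @ weights

    return f


x_knots = np.array([0.0, 1.0, 2.5, 4.0])
y_knots = np.array([1.0, 3.0, 2.0, 2.5])
f = relu_interpolant(x_knots, y_knots)

xs = np.linspace(0.0, 4.0, 201)
print(np.allclose(f(xs), np.interp(xs, x_knots, y_knots)))  # matches np.interp inside the data range
print(f(5.0))  # beyond the last knot the net keeps going with the last slope
```

Inside the data range this network agrees with `np.interp`. Past the last knot it does not flatten out the way `np.interp` does; it continues along the final segment's slope, which is a simple linear extrapolation.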

In reality, we don't even train LLMs long enough to hit that interpolation threshold. In this under-interpolated sweet spot, NNs seem to learn features from coarse to fine with increasing model size. E.g.: https://arxiv.org/abs/1903.03488

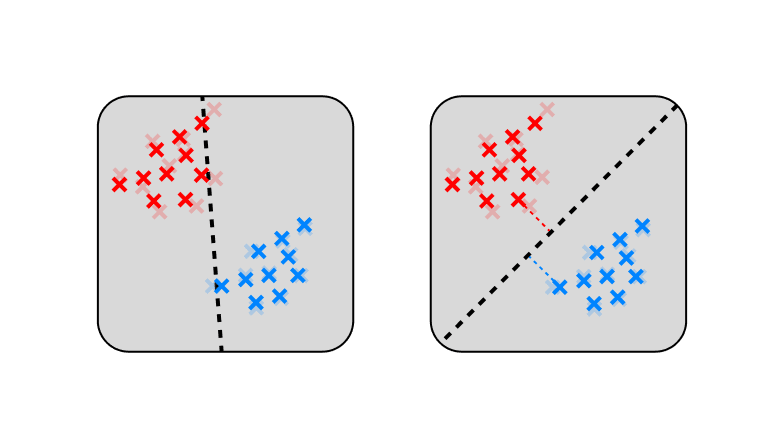

Bonus: this is what's happening with double descent. Test loss goes down, then up, until you reach the interpolation threshold. At this point there's only one interpolating solution, and it's a bad fit. But as you increase model capacity further, you end up with many interpolating solutions, some of which generalize better than others.
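A toy version of this can be sketched with plain least squares instead of a neural network. Everything here is an assumption made for illustration: Gaussian features, a linear ground truth that uses 200 features, a model that only sees the first `p` of them, and the minimum-norm solution (what `np.linalg.lstsq` returns for underdetermined systems) as the way of picking one interpolating solution among many.

```python
import numpy as np

rng = np.random.default_rng(0)
n_train, n_test, n_total = 40, 2000, 200

# Ground truth depends on all n_total features; each model sees only the first p.
beta = rng.normal(size=n_total) / np.sqrt(n_total)
X_train = rng.normal(size=(n_train, n_total))
X_test = rng.normal(size=(n_test, n_total))
y_train = X_train @ beta + 0.1 * rng.normal(size=n_train)
y_test = X_test @ beta

results = {}
for p in [5, 10, 20, 30, 40, 50, 80, 120, 200]:
    A_train, A_test = X_train[:, :p], X_test[:, :p]
    # For p < n_train this is ordinary least squares; for p > n_train there are
    # infinitely many interpolating solutions and lstsq returns the min-norm one.
    w, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    train_mse = np.mean((A_train @ w - y_train) ** 2)
    test_mse = np.mean((A_test @ w - y_test) ** 2)
    results[p] = (train_mse, test_mse)
    print(f"p={p:4d}  train MSE={train_mse:.2e}  test MSE={test_mse:.3f}")
```

The interpolation threshold here is `p = n_train = 40`: below it the model cannot fit the training set exactly, and above it the training error drops to numerical zero. In setups like this the test error usually spikes near the threshold and falls again as `p` grows, though the exact curve depends on the seed and the noise level.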

Meanwhile, with interpolation-not-extrapolation, NNs can and do extrapolate outside the convex hull of training samples. Again, the bias towards simple linear extrapolations is locally the least biased option. There's no beating the polytopes.

Here I've presented the visuals in terms of regression, but the story is pretty similar for classification, where the function being fit is a classification boundary. In this case, there's extra pressure to maximize margins, which further encourages generalization.

The next time you feel like dunking on interpolation, remember that you just don't have the imagination to deal with high-dimensional interpolation. Maybe keep it to yourself and go interpolate somewhere else.