2 Answers sorted by

60

So the quote from Hinton is:

"My favorite example of people doing confabulation is: there's someone called Gary Marcus who criticizes neural nets don't really understand anything, they just pastiche together text they read on the web. Well that's cause he doesn't understand how they work. They don't pastiche together text that they've read on the web, because they're not storing any text. They're storing these weights and generating things. He's just kind of making up how he thinks it works. So actually that's a person doing confabulation."

Then Gary Marcus gives an example,

In my entire life I have never met a human that made up something outlandish like that (e.g, that I, Gary Marcus, own a pet chicken named Henrietta), except as a prank or joke.

One of the most important lessons in cognitive psychology is that any given behavioral pattern (or error) can be caused by many different underlying mechanisms. The mechanisms leading to LLM hallucinations just aren’t the same as me forgetting where I put my car keys. Even the errors themselves are qualitatively different. It’s a shame that Hinton doesn’t get that.

You can of course go simply recreate an LLM for yourself,

It may have taken decades to develop this technique, by trying a lot of things that didn't work, but : https://colab.research.google.com/drive/1JMLa53HDuA-i7ZBmqV7ZnA3c_fvtXnx-?usp=sharing

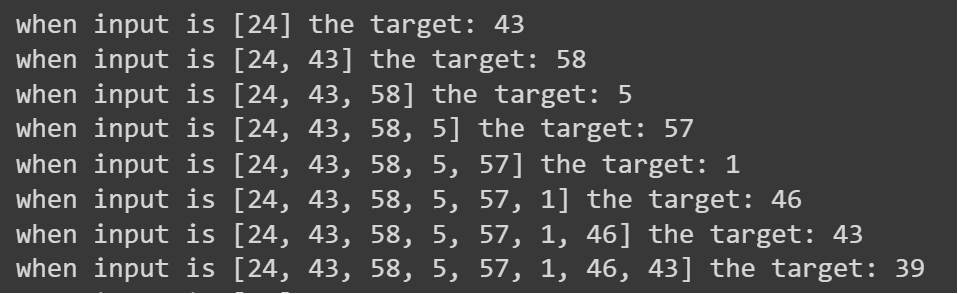

Here's the transformer trying to find weights to memorize the tokens, by starting at each possible place on the token string.

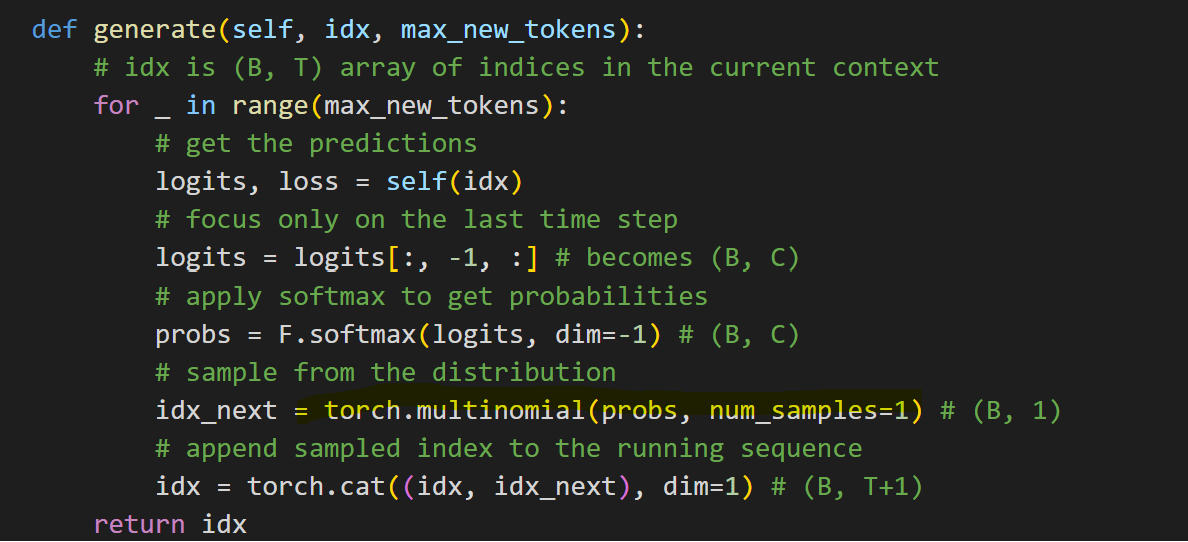

And here's part of the reason it doesn't just repeat exactly what it was given:

That torch.multinomial call says to choose the next token in proportion to the probability that it is the next token from the text strings most similar to the one currently being evaluated.

So:

Am I missing something here? Are these two positions compatible or does one need to be wrong for the other one to be correct? What is the crux between them and what experiment could be devised to test it?

So Hinton claims Gary Marcus doesn't understand how LLMs work. Given how simple they are, that's unlikely to be correct. And Gary Marcus claims that humans don't make wild confabulations. Which is true but not true: a human has additional neural subsystems than simply a "next token guesser".

For example a human can to an extent inspect what they are going to say before they say or write it. Before saying Gary Marcus was "inspired by his pet chicken, Henrietta" a human may temporarily store the next words they plan to say elsewhere in the brain, and evaluate it. "Do I remember seeing the chicken? What memories correspond to it? Do the phonemes sound kinda like HEN-RI-ETTA or was it something else..."

Not only this, but subtler confabulations happen all the time. I personally was confused between the films "Scream" and "Scary Movie", as both occupy the same embeddings space. Apparently I'm not the only one.

For example a human can to an extent inspect what they are going to say before they say or write it. Before saying Gary Marcus was "inspired by his pet chicken, Henrietta" a human may temporarily store the next words they plan to say elsewhere in the brain, and evaluate it.

Transformer-based also internally represent the tokens they are likely to emit in future steps. Demonstrated rigorously in Future Lens: Anticipating Subsequent Tokens from a Single Hidden State, though perhaps the simpler demonstration is simply that LLMs can reliably complete the se...

Thanks for your answer. Would it be fair to say that both of them are oversimplifying the other's position and that they are both, to some extent, right?

20

Obviously LLMs memorize some things, the easy example is that the pretraining dataset of GPT-4 probably contained lots of cryptographically hashed strings which are impossible to infer from the overall patterns of language. Predicting those accurately absolutely requires memorization, there's literally no other way unless the LLM solves an NP-hard problem. Then there are in-between things like Barack Obama's age, which might be possible to infer from other language (a president is probably not 10 yrs old or 230), but within the plausible range, you also just need to memorize it.

Where it gets interesting is when you leave the space of token strings the machine has seen, but you are somewhere in the input space "in between" strings it has seen. That's why this works at all and exhibits any intelligence.

For example if it has seen a whole bunch of patterns like "A->B", and "C->D", if you give input "G" it will complete with "->F".

Or for President ages, what if the president isn't real? https://chat.openai.com/share/3ccdc340-ada5-4471-b114-0b936d1396ad

It started with this video of Hinton taking a jab at Marcus: https://twitter.com/tsarnick/status/1754439023551213845

And here is Marcu's answer:

https://garymarcus.substack.com/p/deconstructing-geoffrey-hintons-weakest

As far as I understand, Gary Marcus argues that LLMs memorize some of their training data, while Hinton argues that no such thing takes place, it's all just patterns of language.

I found these two papers on LLM memorization:

https://arxiv.org/abs/2202.07646 - Quantifying Memorization Across Neural Language Models

https://browse.arxiv.org/abs/2311.17035 - Scalable Extraction of Training Data from (Production) Language Models

Am I missing something here? Are these two positions compatible or does one need to be wrong for the other one to be correct? What is the crux between them and what experiment could be devised to test it?