Imagine a sequence of binary outcomes generated independently and identically by some stochastic process. After observing N outcomes, with n successes, Laplace's Rule of Succession suggests that our confidence in another success should be (n+1)/(N+2). This corresponds to a uniform prior over [0,1] for the underlying probability. But should we really be uniform about probabilities?

I think a uniform prior is wrong for three reasons:

- The uniform prior suggests we should be equally surprised if the underlying probability lies in the interval [0, 0.0001] as in [0.3456, 0.3457]. But this seems wrong. I can think of many process that give probabilities in the first interval — for example, any process that succeeds only in rare edge cases. In contrast, I couldn't list any processes that give probabilities specifically around 0.3456. The uniform prior fails to capture the wide range of log-odds that occur in real-life processes.

- Under the uniform prior, the process is almost surely not deterministic — i.e. there is zero prior likelihood of p being exactly 0.0 or 1.0. This seems wrong. Among probabilistic programs that generate binary outcomes, there are very simple deterministic ones (e.g. "always output 0" or "always output 1"). An appropriate prior should have nonzero prior probability on these simple programs.

- The uniform prior assigns zero likelihood to simple fractions like p=1/2 or p=5/6. This too seems wrong — simple rational probabilities should have higher weight. To fix this, we should mix in the Thomae distribution, which adds a weight (m·n)^(-α) to each fraction m/(m+n) for every pair 1 ≤ m,n ≤ 100.

I propose this mixture distribution:

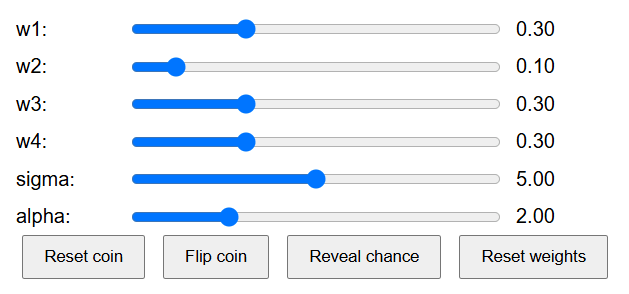

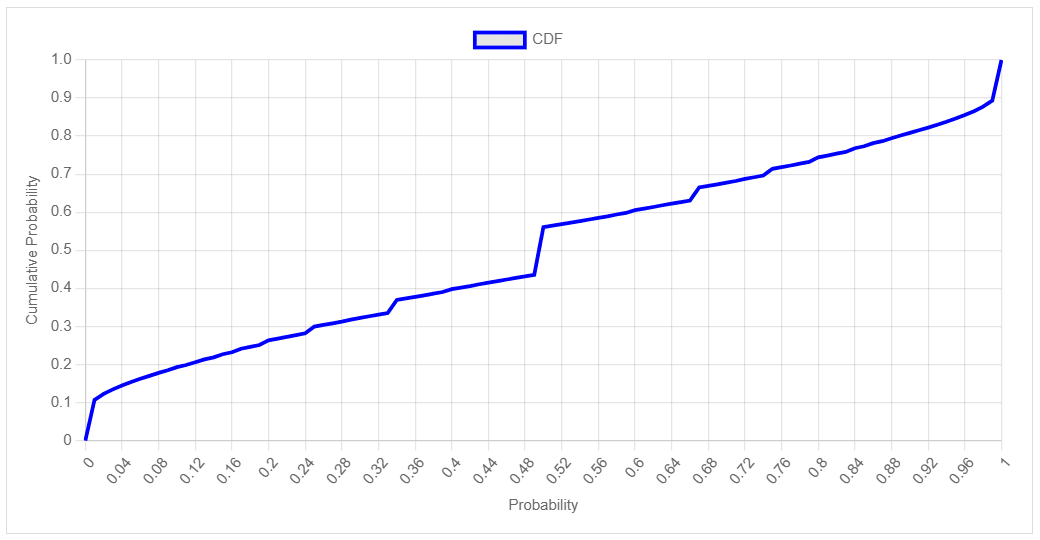

w1 * logistic-normal(0, sigma^2) + w2 * 0.5(dirac(0) + dirac(1)) + w3 * thomae_{100}(α) + w4 * uniform(0,1)

where:

- The first term captures logistic transformations of normal variables (weight w1), resolving the issue that probabilities should be spread across log-odds

- The second term captures deterministic programs (weight w2), allowing for exactly zero and one

- The third term captures rational probabilities with simple fractions (weight w3), giving weight to simple ratios

- The fourth term captures uniform interval (weight w4), corresponding to Laplace's original prior

Ideally, our prior should be a mixture of every possible probabilistic program, weighted by 2^(-K) where K is its Kolmogorov complexity. This would properly capture our preference for simple mechanisms. However, such a distribution is impossible to represent, compute, or apply. Instead, I propose my prior as a tractable distribution that resolves what I think are the most egregious problems with Laplace's law of succession.

Now that I've found the appropriate approximation for the universal prior over binary outcomes, the path to solving induction is clear. First, we'll extend this to pairs of binary outcomes, then triples, and so on. I expect to have sequence of length 10 nailed by Tuesday, and full Solomonoff Induction by Q1 2025.

I've built an interactive demo to explore this distribution. The default parameters (w1=0.3, w2=0.1, w3=0.3, w4=0.3, sigma=5, alpha=2) reflect my intuition about the relative frequency of these different types of programs in practice. This gives a more realistic prior for many real-world scenarios where we're trying to infer the behavior of unknown processes that might be deterministic, fair, or genuinely random in various ways. What do you think? Is there a simple model which serves as a better prior?