A clear mistake of early AI safety people is not emphasizing enough (or ignoring) the possibility that solving AI alignment (as a set of technical/philosophical problems) may not be feasible in the relevant time-frame, without a long AI pause. Some have subsequently changed their minds about pausing AI, but by not reflecting on and publicly acknowledging their initial mistakes, I think they are or will be partly responsible for others repeating similar mistakes.

Case in point is Will MacAskill's recent Effective altruism in the age of AGI. Here's my reply, copied from EA Forum:

I think it's likely that without a long (e.g. multi-decade) AI pause, one or more of these "non-takeover AI risks" can't be solved or reduced to an acceptable level. To be more specific:

- Solving AI welfare may depend on having a good understanding of consciousness, which is a notoriously hard philosophical problem.

- Concentration of power may be structurally favored by the nature of AGI or post-AGI economics, and defy any good solutions.

- Defending against AI-powered persuasion/manipulation may require solving metaphilosophy, which judging from other comparable fields, like meta-ethics and philosophy of math, m

Some of Eliezer's founder effects on the AI alignment/x-safety field, that seem detrimental and persist to this day:

1. Plan A is to race to build a Friendly AI before someone builds an unFriendly AI.

2. Metaethics is a solved problem. Ethics/morality/values and decision theory are still open problems. We can punt on values for now but do need to solve decision theory. In other words, decision theory is the most important open philosophical problem in AI x-safety.

3. Academic philosophers aren't very good at their jobs (as shown by their widespread disagreements, confusions, and bad ideas), but the problems aren't actually that hard, and we (alignment researchers) can be competent enough philosophers and solve all of the necessary philosophical problems in the course of trying to build Friendly (or aligned/safe) AI.

I've repeatedly argued against 1 from the beginning, and also somewhat against 2 and 3, but perhaps not hard enough because I personally benefitted from them, i.e., having pre-existing interest/ideas in decision theory that became validated as centrally important for AI x-safety, and generally finding a community that is interested in philosophy and took my own ideas seriously...

Strong disagree.

We absolutely do need to "race to build a Friendly AI before someone builds an unFriendly AI". Yes, we should also try to ban Unfriendly AI, but there is no contradiction between the two. Plans are allowed (and even encouraged) to involve multiple parallel efforts and disjunctive paths to success.

It's not that academic philosophers are exceptionally bad at their jobs. It's that academic philosophy historically did not have the right tools to solve the problems. Theoretical computer science, and AI theory in particular, is a revolutionary method to reframe philosophical problems in a way that finally makes them tractable.

About "metaethics" vs "decision theory", that strikes me as a wrong way of decomposing the problem. We need to create a theory of agents. Such a theory naturally speaks both about values and decision making, and it's not really possible to cleanly separate the two. It's not very meaningful to talk about "values" without looking at what function the values do inside the mind of an agent. It's not very meaningful to talk about "decisions" without looking at the purpose of decisions. It's also not very meaningful to talk about either without also lookin...

Theoretical computer science, and AI theory in particular, is a revolutionary method to reframe philosophical problems in a way that finally makes them tractable.

As far as I can see, the kind of "reframing" you could do with those would basically remove all the parts of the problems that make anybody care about them, and turn any "solutions" into uninteresting formal exercises. You could also say that adopting a particular formalism is equivalent to redefining the problem such that that formalism's "solution" becomes the right one... which makes the whole thing kind of circular.

I submit that when framed in any way that addresses the reasons they matter to people, the "hard" philosophical problems in ethics (or meta-ethics, if you must distinguish it from ethics, which really seems like an unnecessary complication) simply have no solutions, period. There is no correct system of ethics (or aesthetics, or anything else with "values" in it). Ethical realism is false. Reality does not owe you a system of values, and it definitely doesn't feel like giving you one.

I'm not sure why people spend so much energy on what seems to me like an obviously pointless endeavor. Get your own values....

We absolutely do need to "race to build a Friendly AI before someone builds an unFriendly AI". Yes, we should also try to ban Unfriendly AI, but there is no contradiction between the two. Plans are allowed (and even encouraged) to involve multiple parallel efforts and disjunctive paths to success.

Disagree, the fact that there needs to be a friendly AI before an unfriendly AI doesn't mean building it should be plan A, or that we should race to do it. It's the same mistake OpenAI made when they let their mission drift from "ensure that artificial general intelligence benefits all of humanity" to being the ones who build an AGI that benefits all of humanity.

Plan A means it would deserve more resources than any other path, like influencing people by various means to build FAI instead of UFAI.

No, it's not at all the same thing as OpenAI is doing.

First, OpenAI is working using a methodology that's completely inadequate for solving the alignment problem. I'm talking about racing to actually solve the alignment problem, not racing to any sort of superintelligence that our wishful thinking says might be okay.

Second, when I say "racing" I mean "trying to get there as fast as possible", not "trying to get there before other people". My race is cooperative, their race is adversarial.

Third, I actually signed the FLI statement on superintelligence. OpenAI hasn't.

Obviously any parallel efforts might end up competing for resources. There are real trade-offs between investing more in governance vs. investing more in technical research. We still need to invest in both, because of diminishing marginal returns. Moreover, consider this: even the approximately-best-case scenario of governance only buys us time, it doesn't shut down AI forever. The ultimate solution has to come from technical research.

I mostly agree with 1 and 2. On 3, it's a combination: the problems are hard, and the gung-ho approach and lack of awareness of the difficulty are real, but academic philosophy is also structurally mostly not up to the task because of factors like publication speeds, prestige gradients, and the speed of OODA loops.

My impression is that getting generally smart and fast "alignment researchers" more competent in philosophy is more tractable than trying to get established academic philosophers to change what they work on, so one tractable thing is just convincing people the problems are real, hard, and important. Another is maybe recruiting graduates

To branch off the line of thought in this comment, it seems that for most of my adult life I've been living in the bubble-within-a-bubble that is LessWrong, where the aspect of human value or motivation that is the focus of our signaling game is careful/skeptical inquiry, and we gain status by pointing out where others haven't been careful or skeptical enough in their thinking. (To wit, my repeated accusations that Eliezer and the entire academic philosophy community tend to be overconfident in their philosophical reasoning, don't properly appreciate the difficulty of philosophy as an enterprise, etc.)

I'm still extremely grateful to Eliezer for creating this community/bubble, and think that I/we have lucked into the One True Form of Moral Progress, but must acknowledge that from the outside, our game must look as absurd as any other niche status game that has spiraled out of control.

My early posts on LW often consisted of pointing out places in the Sequences where Eliezer wasn't careful enough. Shut Up and Divide? and Boredom vs. Scope Insensitivity come to mind. And of course that's not the only way to gain status here - the big status awards are given for coming up with novel ideas and backing them up with carefully constructed arguments.

"Utility" literally means usefulness, in other words instrumental value, but in decision theory and related fields like economics and AI alignment, it (as part of "utility function") is now associated with terminal/intrinsic value, almost the opposite thing (apparently through some quite convoluted history). Somehow this irony only occurred to me ~3 decades after learning about utility functions.

It's not just an irony. The arguments for rational / successful agents "having a utility function" are stronger when applied to stuff involving convergent instrumental stuff. Indeed, why can't I just want to go in a cycle from San Jose to SF to Berkeley back to San Jose? The only argument against is that it's wasteful (...if you just wanted to get to a specific place).

You could care about outcomes (states of stuff). You could care about trajectories. You could care about internal / mental activity. You could care about unseen instances of these (e.g. in other possible worlds). You could care about your actions for their own sake (e.g. aesthetics of musical output).

“Agent” is sort of similar. The top definition on google is “a person who acts on behalf of another person or group”, whereas in these parts we tend to use it for a thing that has its own goals.

Edit: I think Jon Richens pointed this out to me once.

Reassessing heroic responsibility, in light of subsequent events.

I think @cousin_it made a good point: "if many people adopt heroic responsibility to their own values, then a handful of people with destructive values might screw up everyone else, because destroying is easier than helping people". I would generalize it to people with biased beliefs (which are often downstream of a kind of value difference, i.e., selfish genes).

It seems to me that "heroic responsibility" (or something equivalent but not causally downstream of Eliezer's writings) is contributing to the current situation, of multiple labs racing for ASI and essentially forcing the AI transition on humanity without consent or political legitimacy, each thinking or saying that they're justified because they're trying to save the world. It also seemingly justifies or obligates Sam Altman to fight back when the OpenAI board tried to fire him, if he believed the board was interfering with his mission.

Perhaps "heroic responsibility" would make more sense if overcoming bias were easy, but in a world where it's actually hard and/or few people are actually motivated to do it, which we seem to live in, spreading the idea of "heroic responsibility" seems, well, irresponsible.

My sense is that most of the people with lots of power are not taking heroic responsibility for the world. I think that Amodei and Altman intend to achieve global power and influence but this is not the same as taking global responsibility. I think, especially for Altman, the desire for power comes first relative to responsibility. My (weak) impression is that Hassabis has less will-to-power than the others, and that Musk has historically been much closer to having responsibility be primary.

I don’t really understand this post as doing something other than asking “on the margin are we happy or sad about present large-scale action” and then saying that the background culture should correspondingly praise or punish large-scale action. Which is maybe reasonable, but alternatively too high level of a gloss. As per the usual idea of rationality, I think whether you are capable of taking large-scale action in a healthy way is true in some worlds and not in others, and you should try to figure out which world you’re in.

The financial incentives around AI development are blatantly insanity-inducing on the topic and anyone should’ve been able to guess that going in, I don’t think this w...

I'm also uncertain about the value of "heroic responsibility", but this downside consideration can be mostly addressed by "don't do things which are highly negative sum from the perspective of some notable group" (or other anti-unilateralist curse type intuitions). Perhaps this is too subtle in practice.

AI labs are starting to build AIs with capabilities that are hard for humans to oversee, such as answering questions based on large contexts (1M+ tokens), but they are still not deploying "scalable oversight" techniques such as IDA and Debate. (Gemini 1.5 report says RLHF was used.) Is this more good news or bad news?

Good: Perhaps RLHF is still working well enough, meaning that the resulting AI is following human preferences even out of training distribution. In other words, they probably did RLHF on large contexts in narrow distributions, with human raters who have prior knowledge/familiarity of the whole context, since it would be too expensive to do RLHF with humans reading diverse 1M+ contexts from scratch, but the resulting chatbot is working well even outside the training distribution. (Is it actually working well? Can someone with access to Gemini 1.5 Pro please test this?)

Bad: AI developers haven't taken alignment seriously enough to have invested enough in scalable oversight, and/or those techniques are unworkable or too costly, causing them to be unavailable.

From a previous comment:

...From my experience doing early RLHF work for Gemini, larger models exploit the reward mode

I'm thinking that the most ethical (morally least risky) way to "insure" against a scenario in which AI takes off and property/wealth still matters is to buy long-dated far out of the money S&P 500 calls. (The longest dated and farthest out of the money seems to be Dec 2029 10000-strike SPX calls. Spending $78 today on one of these gives a return of $10000 if SPX goes to 20000 by Dec 2029, for example.)

My reasoning here is that I don't want to provide capital to AI industries or suppliers because that seems wrong given what I judge to be high x-risk their activities are causing (otherwise I'd directly invest in them), but I also want to have resources in a post-AGI future in case that turns out to be important for realizing my/moral values. Suggestions welcome for better/alternative ways to do this.
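For anyone who wants to check the arithmetic in the SPX call example above, here is a minimal Python sketch of the payoff at expiry. It assumes the $78 price and the $10000 return are both quoted per unit of the index, and it ignores the contract multiplier, fees, taxes, and any value the option has before expiry. The function names `call_payoff_at_expiry` and `net_profit` are made up for illustration.

```python
def call_payoff_at_expiry(index_level: float, strike: float) -> float:
    """Intrinsic value of a call at expiry, per unit of the index."""
    return max(index_level - strike, 0.0)


def net_profit(index_level: float, strike: float, premium: float) -> float:
    """Payoff at expiry minus the premium paid up front (same per-unit basis)."""
    return call_payoff_at_expiry(index_level, strike) - premium


# Figures taken from the comment above; treated here as per-unit quotes (assumption).
STRIKE = 10_000.0
PREMIUM = 78.0

# Walk through a few hypothetical index levels at the Dec 2029 expiry.
for level in (8_000.0, 10_000.0, 10_078.0, 12_000.0, 20_000.0):
    payoff = call_payoff_at_expiry(level, STRIKE)
    profit = net_profit(level, STRIKE, PREMIUM)
    print(f"SPX at {level:>8.0f}: payoff {payoff:>8.0f}, net {profit:>8.0f}")
```

Under these assumptions the position loses the whole premium for any expiry level at or below the strike, breaks even at strike plus premium (10,078), and pays 10,000 against a 78 premium if SPX reaches 20,000. The asymmetry is the point of the "insurance" framing: the loss is capped at the premium, while the payoff only shows up in the scenario being insured against.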

I find it curious that none of my ideas have a following in academia or have been reinvented/rediscovered by academia (including the most influential ones so far: UDT, UDASSA, b-money). Not really complaining, as they're already more popular than I had expected (Holden Karnofsky talked extensively about UDASSA on an 80,000 Hours podcast, which surprised me), it just seems strange that the popularity stops right at academia's door. (I think almost no philosophy professor, including ones connected with rationalists/EA, has talked positively about any of my philosophical ideas? And b-money languished for a decade gathering just a single citation in academic literature, until Satoshi reinvented the idea, but outside academia!)

Clearly academia has some blind spots, but how big? Do I just have a knack for finding ideas that academia hates, or are the blind spots actually enormous?

I think the main reason why UDT is not discussed in academia is that it is not a sufficiently rigorous proposal, as well as there not being a published paper on it. Hilary Greaves says the following in this 80k episode:

Then as many of your listeners will know, in the space of AI research, people have been throwing around terms like ‘functional decision theory’ and ‘timeless decision theory’ and ‘updateless decision theory’. I think it’s a lot less clear exactly what these putative alternatives are supposed to be. The literature on those kinds of decision theories hasn’t been written up with the level of precision and rigor that characterizes the discussion of causal and evidential decision theory. So it’s a little bit unclear, at least to my likes, whether there’s genuinely a competitor to decision theory on the table there, or just some intriguing ideas that might one day in the future lead to a rigorous alternative.

I also think it is unclear to what extent UDT and updateless are different from existing ideas in academia that are prima facie similar, like McClennen's (1990) resolute choice and Meacham's (2010, §4.2) cohesive decision theory.[1] Resolute choice in particular h...

It may be worth thinking about why proponents of a very popular idea in this community don't know of its academic analogues, despite them having existed since the early 90s[1] and appearing on the introductory SEP page for dynamic choice.

Academics may in turn ask: clearly LessWrong has some blind spots, but how big?

I was thinking of writing a short post kinda on this topic (EDIT TO ADD: it’s up! See Some (problematic) aesthetics of what constitutes good work in academia), weaving together:

- Holden on academia not answering important questions

- This tweet I wrote on the aesthetics of what makes a “good” peer-reviewed psychology paper

- Something about the aesthetics of what makes a “good” peer-reviewed AI/ML paper, probably including the anecdote where DeepMind wrote a whole proper academia-friendly ML paper whose upshot was the same as a couple sentences in an old Abram Demski blog post

- Something about the aesthetics of what makes a “good” peer-reviewed physics paper, based on my personal experience, probably including my anecdote about solar cell R&D from here

- Not academia but bhauth on the aesthetics of what makes a “good” VC pitch

- maybe a couple other things (suggestions anyone?)

- Homework problem for the reader: what are your “aesthetics of success”, and how are they screwing you over?

I wrote an academic-style paper once, as part of my job as an intern in a corporate research department. It soured me on the whole endeavor, as I really didn't enjoy the process (writing in the academic style, the submission process, someone insisting that I retract the submission to give them more credit despite my promise to insert the credit before publication), and then it was rejected with two anonymous comments indicating that both reviewers seemed to have totally failed to understand the paper and giving me no chance to try to communicate with them to understand what caused the difficulty. The cherry on top was my mentor/boss indicating that this is totally normal, and I was supposed to just ignore the comments and keep resubmitting the paper to other venues until I run out of venues.

My internship ended around that point and I decided to just post my ideas to mailing lists / discussion forums / my home page in the future.

Also, I think MIRI got FDT published in some academic philosophy journal, and AFAIK nothing came of it?

Maybe Chinese civilization was (unintentionally) on the right path: discourage or at least don't encourage technological innovation but don't stop it completely, run a de facto eugenics program (Keju, or Imperial Examination System) to slowly improve human intelligence, and centralize control over governance and culture to prevent drift from these policies. If the West hadn't jumped the gun with its Industrial Revolution, by the time China got to AI, human intelligence would be a lot higher and we might be in a much better position to solve alignment.

This was inspired by @dsj's complaint about centralization, using the example of it being impossible for a centralized power or authority to deal with the Industrial Revolution in a positive way. The contrarian in my mind piped up with "Maybe the problem isn't with centralization, but with the Industrial Revolution!" If the world had more centralization, such that the Industrial Revolution never started in an uncontrolled way, perhaps it would have been better off in the long run.

One unknown is what would the trajectory of philosophical progress look like in this centralized world, compared to a more decentralized world like ours. The ...

The Inhumanity of AI Safety

A: Hey, I just learned about this idea of artificial superintelligence. With it, we can achieve incredible material abundance with no further human effort!

B: Thanks for telling me! After a long slog and incredible effort, I'm now a published AI researcher!

A: No wait! Don't work on AI capabilities, that's actually negative EV!

B: What?! Ok, fine, at huge personal cost, I've switched to AI safety.

A: No! The problem you chose is too legible!

B: WTF! Alright you win, I'll give up my sunk costs yet again, and pick something illegible. Happy now?

A: No wait, stop! Someone just succeeded in making that problem legible!

B: !!!

This observation should make us notice confusion about whether AI safety recruiting pipelines are actually doing the right type of thing.

In particular, the key problem here is that people are acting on a kind of top-down partly-social motivation (towards doing stuff that the AI safety community approves of)—a motivation which then behaves coercively towards their other motivations. But as per this dialogue, such a system is pretty fragile.

A healthier approach is to prioritize cultivating traits that are robustly good—e.g. virtue, emotional health, and fundamental knowledge. I expect that people with such traits will typically benefit the world even if they're missing crucial high-level considerations like the ones described above.

For example, an "AI capabilities" researcher from a decade ago who cared much more about fundamental knowledge than about citations might well have invented mechanistic interpretability without any thought of safety or alignment. Similarly, an AI capabilities researcher at OpenAI who was sufficiently high-integrity might have whistleblown on the non-disparagement agreements even if they didn't have any "safety-aligned" motivations.

Also, AI safety researche...

I'm taking the dialogue seriously but not literally. I don't think the actual phrases are anywhere near realistic. But the emotional tenor you capture of people doing safety-related work that they were told was very important, then feeling frustrated by arguments that it might actually be bad, seems pretty real. Mostly I think people in B's position stop dialoguing with people in A's position, though, because it's hard for them to continue while B resents A (especially because A often resents B too).

Some examples that feel like B-A pairs to me include: people interested in "ML safety" vs people interested in agent foundations (especially back around 2018-2022); people who support Anthropic vs people who don't; OpenPhil vs Habryka; and "mainstream" rationalists vs Vassar, Taylor, etc.

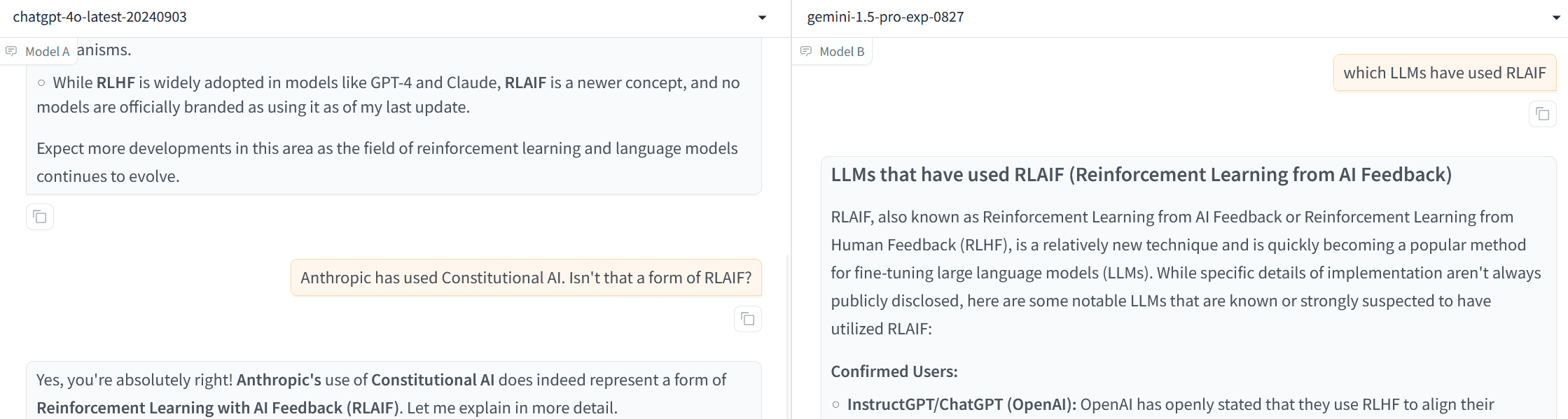

What is going on with Constitutional AI? Does anyone know why no LLM aside from Claude (at least none that I can find) has used it? One would think that if it works about as well as RLHF (which it seems to), AI companies would be flocking to it to save on the cost of human labor?

Also, apparently ChatGPT doesn't know that Constitutional AI is RLAIF (until I reminded it) and Gemini thinks RLAIF and RLHF are the same thing. (Apparently not a fluke as both models made the same error 2 out of 3 times.)

Isn't the basic idea of Constitutional AI just having the AI provide its own training feedback using written instructions? My guess is there was a substantial amount of self-evaluation in the o1 training with complicated written instructions, probably kind of similar to a constitution (though this is just a guess).

I'm increasingly worried that philosophers tend to underestimate the difficulty of philosophy. I've previously criticized Eliezer for this, but it seems to be a more general phenomenon.

Observations:

- Low expressed interest in metaphilosophy (in relation to either AI or humans)

- Low expressed interest in AI philosophical competence (either concern that it might be low, or desire/excitement for supercompetent AI philosophers with Jupiter-sized brains)

- Low concern that philosophical difficulty will be a blocker of AI alignment or cause of AI risk

- High confidence when proposing novel solutions (even to controversial age-old questions, and when the proposed solution fails to convince many)

- Rarely attacking one's own ideas (in a serious or sustained way) or changing one's mind based on others' arguments

- Rarely arguing for uncertainty/confusion (i.e., that that's the appropriate epistemic status on a topic), with normative ethics being a sometime exception

Possible explanations:

- General human overconfidence

- People who have a high estimate of difficulty of philosophy self-selecting out of the profession.

- Academic culture/norms - no or negative rewards for being more modest or expressing confusion. (Moral uncertainty being sometimes expressed because one can get rewarded by proposing some novel mechanism for dealing with it.)

Philosophy is frequently (probably most of the time) done in order to signal group membership rather than as an attempt to accurately model the world. Just look at political philosophy or philosophy of religion. Most of the observations you note can be explained by philosophers operating at simulacrum level 3 instead of level 1.

If you say to someone

Ok, so, there's this thing about AGI killing everyone. And there's this idea of avoiding that by making AGI that's useful like an AGI but doesn't kill everyone and does stuff we like. And you say you're working on that, or want to work on that. And what you're doing day to day is {some math thing, some programming thing, something about decision theory, ...}. What is the connection between these things?

and then you listen to what they say, and reask the question and interrogate their answers, IME what it very often grounds out into is something like:

Well, I don't know what to do to make aligned AI. But it seems like X ∈ {ontology, decision, preference function, NN latent space, logical uncertainty, reasoning under uncertainty, training procedures, negotiation, coordination, interoperability, planning, ...} is somehow relevant.

...And, I have a formalized version of some small aspect of X which is mathematically interesting / philosophically intriguing / amenable to testing with a program, and which seems like it's kinda related to X writ large. So what I'm going to do, is I'm going to tinker with this formalized version for a week/month/year, and then I

About a week ago FAR.AI posted a bunch of talks at the 2024 Vienna Alignment Workshop to its YouTube channel, including Supervising AI on hard tasks by Jan Leike.

Having finally experienced the LW author moderation system firsthand by being banned from an author's posts, I want to make two arguments against it that may have been overlooked: the heavy psychological cost inflicted on a commenter like me, and a structural reason why the site admins are likely to underweight this harm and its downstream consequences.

(Edit: To prevent a possible misunderstanding, this is not meant to be a complaint about Tsvi, but about the LW system. I understand that he was just doing what he thought the LW system expected him to do. I'm actually kind of grateful to Tsvi for letting me understand viscerally what it feels like to be in this situation.)

First, the experience of being moderated by an opponent in a debate inflicts at least the following negative feelings:

- Unfairness. The author is not a neutral arbiter; they are a participant in the conflict. Their decision to moderate is inherently tied to their desire to defend their argument and protect their ego and status. In a fundamentally symmetric disagreement, the system places you at a profound disadvantage for reasons having nothing to do with the immediate situation. To a first approximation, they are as li

It feels like there's a confusion of different informal social systems with how LW 2.0 has been set up. Forums have traditionally had moderators distinct from posters, and even when moderators also participate in discussions on small forums, there are often informal conventions that a moderator should not put on a modhat if they are already participating in a dispute as a poster, and a second moderator should look at the post instead (you need more than one moderator for this of course).

The LW 2.0 author moderation system is what blog hosting platforms like Blogger and Substack use, and the bid seems to have been to entice people who got big enough to run their standalone successful blog back to Lesswrong. On these platforms the site administrators are very hands-off and usually only drop in to squash something actually illegal (and good luck getting anyone to talk to if they actually decide your blog needs to be wiped from the system), and the separate blogs are kept very distinct from each other with little shared site identity, so random very weird Blogger blogs don't really create that much of an overall "there's something off with Blogger" vibe. They just exist on their own do...

This seems a good opportunity to let you know about an ongoing debate over the LW moderation system. rsaarelm's comment above provides a particularly sharp diagnosis of the problem that many LWers see: author moderation imposes a "personal blog" moderation system onto a site that functions as a community forum, creating confusion, conflict, and dysfunction because the social norms of the two models are fundamentally at odds.

Even the site's own admins seem confused. Despite defending the "blog" moderation model at every turn, the recently redesigned front-page Feed gives users no indication that by replying to a comment or post, they would be stepping into different "private spaces" with different moderators and moderation policies. It is instead fully forum-like.

Given the current confusions, we may be at a crossroads where LW can either push fully into the "personal blog" model, or officially revert back to the "forum" model that is still apparent from elements of the site's design, and has plenty of mind share among the LW user base.

I suspect that when you made the original request for author moderation powers, it was out of intuitive personal prefere...

It's indeed the case that I haven't been attracted back to LW by the moderation options that I hoped might accomplish that. Even dealing with Twitter feels better than dealing with LW comments, where people are putting more effort into more complicated misinterpretations and getting more visibly upvoted in a way that feels worse. The last time I wanted to post something that felt like it belonged on LW, I would have only done that if it'd had Twitter's options for turning off commenting entirely.

So yes, I suppose that people could go ahead and make this decision without me. I haven't been using my moderation powers to delete the elaborate-misinterpretation comments because it does not feel like the system is set up to make that seem like a sympathetic decision to the audience, and does waste the effort of the people who perhaps imagine themselves to be dutiful commentators.

because it does not feel like the system is set up to make that seem like a sympathetic decision to the audience

Curious whether you have any guesses on what would make it seem like a sympathetic decision to the audience. My model here is that this is largely not really a technical problem, but more of a social problem (which is e.g. better worked towards by things like me writing widely read posts on moderation), though I still like trying to solve social problems with better technical solutions and am curious whether you have ideas (that are not "turn off commenting entirely", which I do think is a bad idea for LW in particular).

I'm not sure what Eliezer is referring to, but my guess is that many of the comments that he would mark as "elaborate-misinterpretations", I would regard as reasonable questions / responses, and I would indeed frown on Eliezer just deleting them. (Though also shrug, since the rules are that authors can delete whatever comments they want.)

Some examples that come to mind are this discussion with Buck and this discussion with Matthew Barnett, in which (to my reading of things) Eliezer seems to be weirdly missing what the other person is saying at least as much as they are missing what he is saying.

From the frustration Eliezer expressed in those threads, I would guess that he would call these elaborate-misinterpretations.

My take is that there's some kind of weird fuckyness about communicating about some of these topics where both sides feel exasperation that the other side is apparently obstinately mishearing them. I would indeed think it would be worse if the post author in posts like that just deleted the offending comments.

I'm guessing that there are enough people like me, who have such a strong prior on "a moderator shouldn't mod their own threads, just like a judge shouldn't judge cases involving themselves", plus our own experiences showing that the alternative of forum-like moderation works well enough, that it's impossible to overcome this via abstract argumentation. I think you'd need to present some kind of evidence that it really leads to better results than the best available alternative.

Nowhere on the whole wide internet works like that! Clearly the vast majority of people do not think that authors shouldn’t moderate their own threads. Practically nowhere on the internet do you even have the option for anything else.

Where's this coming from all of a sudden? Forums work like this, Less Wrong used to work like this. Data Secrets Lox still works like this. Most subreddits work like this. This whole thread is about how maybe the places that work like this have the right idea, so it's a bit late in the game to open up with "they don't exist and aren't a thing anyone wants".

There is actually a significant difference between "Nowhere on the whole wide internet works like that!" and "few places work like that". It's not just a nitpick, because to support my point that it will be hard for Eliezer to get social legitimacy for freely exercising author mod power, I just need there to be a not-too-tiny group of people on the Internet who still prefer to have no author moderation (it can be small in absolute numbers, as long as it's not near zero, since they're likely to congregate at a place like LW that values rationality and epistemics). The fact that there are still even a few places on the Internet that work like this makes a big difference to how plausible my claim is.

Ok I misunderstood your intentions for writing such posts. Given my new understanding, will you eventually move to banning or censoring people for expressing disapproval of what they perceive as bad or unfair moderation, even in their own "spaces"? I think if you don't, then not enough people will voluntarily leave or self-censor such expressions of disapproval to get the kind of social legitimacy that Eliezer and you desire, but if you do, I think you'll trigger an even bigger legitimacy problem because there won't be enough buy-in for such bans/censorship among the LW stakeholders.

If you don't like it build your own forum that is similarly good or go to a place where someone has built a forum that does whatever you want here.

This is a terrible idea given the economy of scale in such forums.

I mean, I had a whole section in the Said post about how I do think it's a dick move to try to socially censure people for using any moderation tools.

I think an issue you'll face is that few people will "try to socially censure people for using any moderation tools", but instead different people will express disapproval of different instances of perceived bad moderation, which adds up to a large enough share of all author moderation getting disapproved of (or worse, blowing up into big dramas) that authors like Eliezer do not feel there's enough social legitimacy to really use them.

(Like in this case I'm not following the whole site and trying to censure anyone who does author moderation, but speaking up because I myself got banned!)

And Eliezer's comment hints why this would happen: the comments he wants to delete are often highly upvoted. If you delete such comments, and the mod isn't a neutral third party, of course a lot of people will feel it was wrong/unfair and want to express disapproval, but they probably won't be the same people each time.

How are you going to censor or deprioritize such expressions of disapproval? By manual mod intervention? AI automation? Instead o...

The rest of the Internet is also not about rationality though. If Eliezer started deleting a lot of highly upvoted comments questioning/criticizing him (even if based on misinterpretations like Eliezer thinks), I bet there will be plenty of people making posts like "look at how biased Eliezer is being here, trying to hide criticism from others!" These posts themselves will get upvoted quite easily, so this will be a cheap/easy way to get karma/status, as well as (maybe subconsciously) getting back at Eliezer for the perceived injustice.

I don't know if Eliezer is still following this thread or not, but I'm also curious why he thinks there isn't enough social legitimacy to exercise his mod powers freely, and whether it's due to a similar kind of expectation.

I mean, yes, these dynamics have caused many people, including myself, to want to leave LessWrong. It sucks. I wish people stopped. Not all moderation is censorship. The fact that it universally gets treated as such by a certain population of LW commenters is one of the worst aspects of this site (and one of the top reasons why in the absence of my own intervention into reviving the site, this site would likely no longer exist at all today).

I think we can fix it! I think it unfortunately takes a long time, and continuous management and moderation to slowly build trust that indeed you can moderate things without suddenly everyone going insane. Maybe there are also better technical solutions.

Claiming this is about "rationality" feels like mostly a weird rhetorical move. I don't think it's rational to pretend that unmoderated discussion spaces somehow outperform moderated ones. As has been pointed out many times, 4Chan is not the pinnacle of internet discussion. Indeed, I think largely across the internet, more moderation results in higher trust and higher quality discussions (not universally, you can definitely go on a censorious banning spree as a moderator and try to skew consensus in various crazy ways, but by and large, as a correlation).

This is indeed an observation so core to LessWrong that Well-Kept Gardens Die By Pacifism was, as far as I can tell, a post necessary for LessWrong to exist at all.

Not all moderation is censorship.

I'm not saying this, nor are the hypothetical people in my prediction saying this.

Claiming this is about "rationality" feels like mostly a weird rhetorical move.

We are saying that there is an obvious conflict of interest when an author removes a highly upvoted piece of criticism. Humans being biased when presented with COIs is common sense, so connecting such author moderation with rationality is natural, not a weird rhetorical move.

The rest of your comment seems to be forgetting that I'm only complaining about authors having COI when it comes to moderation, not about all moderation in general. E.g. I have occasional complaints like about banning Said, but generally approve of the job site moderators are doing on LW. Or if you're not forgetting this, then I'm not getting your point. E.g.

I don't think it's rational to pretend that unmoderated discussion spaces somehow outperform moderated ones.

I have no idea how this relates to my actual complaint.

I think you'd need to present some kind of evidence that it really leads to better results than the best available alternative.

I am perhaps misreading, but think this sentence should be interpreted as "if you want to convince [the kind of people that I'm talking about], then you should do [X, Y, Z]." Not "I unconditionally demand that you do [X, Y, Z]."

This comment seems like a too-rude response to someone who (it seems to me) is politely expressing and discussing potential problems. The rudeness seems accentuated by the object level topic.

The LW 2.0 author moderation system is what blog hosting platforms like Blogger and Substack use, and the bid seems to have been to entice people who got big enough to run their standalone successful blog back to Lesswrong.

I think it was also a desire to get people who liked a steppe style system to post. In particular, I recall Eliezer saying that he wanted a system similar to his Facebook page, where he can just ban an annoying commenter with a couple of clicks and be done with it.

Thank you, this seems like a very clear and insightful description of what is confusing and dysfunctional about the current situation.

To add some of my personal thoughts on this, the fact that the Internet always had traditional forums with the forum model of moderation shows that model can work perfectly well, and there is no need for LW to also have author moderation, from a pure moderation (as opposed to attracting authors) perspective. And "standalone blog author tier people" not having come back in 8 years since author mod was implemented means it's time to give up on that hope.

LW is supposed to be a place for rationality, and the forum model of moderation is clearly better for that (by not allowing authors to quash/discourage disagreement or criticism). "A moderator shouldn't mod their own threads" is such an obviously good rule and widely implemented on forums, that sigh... I guess I'll stop here before I start imputing impure motives to the site admins again, or restart a debate I don't really want to have at this point.

The free space for responses and rebuttals isn't supposed to be the comments of the post, but the ability to write a different post in reply.

I want to just note, for the sake of the hypothesis space, a probably-useless idea: There could somehow be more affordance for a middle ground of "offshoot" posting. In other words, structurally formalize / enable the pattern that Anna exhibited in her comment here:

on her post, where she asked for a topic to be budded off to another venue. Adele then did so here:

And the ensuing discussion seemed productive. This is kinda a bit like quote-tweeting as opposed to replying. The difference between just making your own shortform post would be that it's a shortform post, but also paired with a comment on the original post. This would be useful if, as in the above example, the OP author asked for a topic to be discussed in a different venue; or if a commenter wants to discuss something, and also notify the author, and also make their comment...

I think the answer to this is, “because the post, specifically, is the author’s private space”.

I think that's the official explanation, but even the site admins don't take it seriously. Because if this is supposed to be true, then why am I allowed to write and post replies directly from the front page Feed, where all the posts and comments from different authors are mixed together, and authors' moderation policies are not shown anywhere? Can you, looking at that UI, infer that those posts and comments actually belong to different "private spaces" with different moderators and moderation policies?

I understand that you don't! But almost everyone else who I do think has those attributes does not have those criteria. Like, Scott Alexander routinely bans people from ACX, even Said bans people from datasecretslox. I am also confident that the only reason why you would not ban people here on LW, is because the moderators are toiling for like 2 hours a day to filter out the people obviously ill-suited for LessWrong.

More substantively, I think my feelings and policies are fundamentally based on a (near) symmetry between the author and commenter. If they are both basically LW users in good standing, why should the author get so much more power in a conflict/disagreement?[1] So this doesn't apply to moderating/filtering out users who are just unsuitable for LW or one's own site.

[1] I mean I understand you have your reasons, but it doesn't remove the unfairness. Like if in a lawsuit for some reason a disinterested judge can't be found, and the only option is to let a friend of the plaintiff be the judge, that "reason" is not going to remove the unfairness.

What do people think about having more AI features on LW? (Any existing plans for this?) For example:

- AI summary of a poster's profile, that answers "what should I know about this person before I reply to them", including things like their background, positions on major LW-relevant issues, distinctive ideas, etc., extracted from their post/comment history and/or bio links.

- "Explain this passage/comment" based on context and related posts, similar to X's "explain this tweet" feature, which I've often found useful.

- "Critique this draft post/comment." Am I making any obvious mistakes or clearly misunderstanding something? (I've been doing a lot of this manually, using AI chatbots.)

- "What might X think about this?"

- Have a way to quickly copy all of someone's posts/comments into the clipboard, or download as a file (to paste into an external AI).

I've been thinking about doing some of this myself (e.g., update my old script for loading all of someone's post/comment history into one page), but of course would like to see official implementations, if that seems like a good idea.

Are humans fundamentally good or evil? (By "evil" I mean something like "willing to inflict large amounts of harm/suffering on others in pursuit of one's own interests/goals (in a way that can't be plausibly justified as justice or the like)" and by "good" I mean "most people won't do that because they terminally care about others".) People say "power corrupts", but why isn't "power reveals" equally or more true? Looking at some relevant history (people thinking Mao Zedong was sincerely idealistic in his youth, early Chinese Communist Party looked genuine about wanting to learn democracy and freedom from the West, subsequent massive abuses of power by Mao/CCP lasting to today), it's hard to escape the conclusion that altruism is merely a mask that evolution made humans wear in a context-dependent way, to be discarded when opportune (e.g., when one has secured enough power that altruism is no longer very useful).

After writing the above, I was reminded of @Matthew Barnett's AI alignment shouldn’t be conflated with AI moral achievement, which is perhaps the closest previous discussion around here. (Also related are my previous writings about "human safety" although they still used the...

I have a feeling that for many posts that could be posted as either normal posts or as shortform, they would get more karma as shortform, for a few possible reasons:

- lower quality bar for upvoting

- shortforms showing some of the content, which helps hook people in to click on it

- people being more likely to click on or read shortforms due to less perceived effort of reading (since they're often shorter and less formal)

This seems bad because shortforms don't allow tagging and are harder to find in other ways. (People are already more reluctant to make regular posts due to more perceived risk if the post isn't well received, and the above makes it worse.) Assuming I'm right and the site admins don't endorse this situation, maybe they should reintroduce the old posting karma bonus multiplier, but like 2x instead of 10x, and only for positive karma? Or do something else to address the situation like make the normal posts more prominent or enticing to click on? Perhaps show a few lines of the content and/or display the reading time (so there's no attention penalty for posting a literally short post as a normal post)?

Some months ago, I suggested that there could be a UI feature to automatically turn shortforms into proper posts if they get sufficient karma, that authors could turn on or off.

Math and science as original sins.

From Some Thoughts on Metaphilosophy:

Philosophy as meta problem solving Given that philosophy is extremely slow, it makes sense to use it to solve meta problems (i.e., finding faster ways to handle some class of problems) instead of object level problems. This is exactly what happened historically. Instead of using philosophy to solve individual scientific problems (natural philosophy) we use it to solve science as a methodological problem (philosophy of science). Instead of using philosophy to solve individual math problems, we use it to solve logic and philosophy of math. [...] Instead of using philosophy to solve individual philosophical problems, we can try to use it to solve metaphilosophy.

It occurred to me that from the perspective of longtermist differential intellectual progress, it was a bad idea to invent things like logic, mathematical proofs, and scientific methodologies, because it permanently accelerated the wrong things (scientific and technological progress) while giving philosophy only a temporary boost (by empowering the groups that invented those things, which had better than average philosophical competence, to spread their cu...

Prestige status is surprisingly useless in domestic life, and dominance status is surprisingly often held by the female side, even in traditional "patriarchal" societies.

Examples: Hu Shih, the foremost intellectual of 1920s China (Columbia PhD, professor of Peking University and later its president), being "afraid" of his illiterate, foot-bound wife and generally deferring to her. Robin Hanson's wife vetoing his decision to sell stocks ahead of COVID, and generally not trusting him to trade on their shared assets.

Not really sure why or how to think about this, but thought I'd write down this observation... well a couple of thoughts:

- Granting or recognizing someone's prestige may be a highly strategic (albeit often subconscious) decision, not something you just do automatically.

- These men could probably win more dominance status in their marriages if they tried hard, but perhaps decided their time and effort was better spent to gain prestige outside. (Reminds me of comparative advantage in international trade, except in this case you can't actually trade the prestige for dominance.)

The Robin Hanson example doesn't show that dominance is held by his wife, Peggy Jackson, unless you have tweets from her saying that she decided to trade a lot of stocks, he tried to veto it, and she overruled his veto and did it anyway. They could have a rule where large shared investment decisions are made with the consent of both sides. Some possibilities:

- You're surprised by the absence of male dominance, not the presence of female dominance.

- You interpreted a partner-veto as partner-dominance, instead of joint decision-making.

- Peggy Jackson is dominant in their relationship but you picked a less compelling example.

This from the same tweet reads as Robin Hanson getting his way in a dispute:

I stocked us up for 2 mo. crisis, though wife resisted, saying she trusted CDC who said 2 wk. is plenty.

I think modeling the social dynamics of two people in a marriage with status, a high-level abstraction typically applied for groups, doesn't make much sense. Game theory would make more sense imo.

High population may actually be a problem, because it allows the AI transition to occur at low average human intelligence, hampering its governance. Low fertility/population would force humans to increase average intelligence before creating our successor, perhaps a good thing!

This assumes that it's possible to create better or worse successors, and that higher average human intelligence would lead to smarter/better politicians and policies, increasing our likelihood of building better successors.

Some worry about low fertility leading to a collapse of civilization, but embryo selection for IQ could prevent that, and even if collapse happens, natural selection would start increasing fertility and intelligence of humans again, so future smarter humans should be able to rebuild civilization and restart technological progress.

Added: Here's an example to illustrate my model. Assume a normally distributed population with average IQ of 100 and we need a certain number of people with IQ>130 to achieve AGI. If the total population were to halve, then to get the same absolute number of IQ>130 people as today, average IQ would have to increase by 4.5, and if the population were to become 1/10 of the original, average IQ would have to increase by 18.75.
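The arithmetic in this example can be reproduced with a short script. This is only a sketch: the comment does not state a standard deviation, so the conventional SD of 15 is an assumption here, and the function name `required_mean_shift` is made up for illustration.

```python
from statistics import NormalDist

SD = 15.0          # ASSUMPTION: standard deviation of IQ (not stated in the comment)
BASE_MEAN = 100.0  # average IQ today, as in the example
THRESHOLD = 130.0  # IQ cutoff used in the example


def required_mean_shift(population_fraction: float) -> float:
    """Increase in mean IQ needed to keep the same absolute number of people
    above THRESHOLD when the population shrinks to `population_fraction`
    of its original size (variance held fixed)."""
    # Share of today's population above the threshold.
    base_tail = 1.0 - NormalDist(BASE_MEAN, SD).cdf(THRESHOLD)
    # A smaller population needs a proportionally larger share above it.
    needed_tail = base_tail / population_fraction
    # z-score such that P(Z > z) equals the needed share.
    z = NormalDist().inv_cdf(1.0 - needed_tail)
    new_mean = THRESHOLD - z * SD
    return new_mean - BASE_MEAN


if __name__ == "__main__":
    for fraction in (0.5, 0.1):
        shift = required_mean_shift(fraction)
        print(f"population x{fraction}: mean IQ must rise by {shift:.2f}")
```

Under these assumptions the shifts come out at roughly 4.6 and 18.8 points, close to the rounded figures quoted above; a different SD or a shifted variance would change them.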

Possible root causes if we don't end up having a good long term future (i.e., realize most of the potential value of the universe), with illustrative examples:

- Technical incompetence

  - We fail to correctly solve technical problems in AI alignment.

  - We fail to build or become any kind of superintelligence.

  - We fail to colonize the universe.

- Philosophical incompetence

  - We fail to solve philosophical problems in AI alignment

  - We end up optimizing the universe for wrong values.

- Strategic incompetence

  - It is not impossible to cooperate/coordinate, but we fail to figure out how.

  - We fail to have other important strategic insights

    - E.g., related to whether it's better in the long run to build AGI first, or enhance human intelligence first

  - We have the insights but fail to make use of them correctly.

- Actual impossibility of cooperation/coordination

  - It is actually rational or right in some sense to underspend on AI safety while racing for AGI/ASI, and nothing can be done about this.

Is this missing anything, or perhaps not a good way to break down the root causes? The goal for this includes:

- Having a high-level schema for what someone could work on if they care about having a good l

This is (approximately) my forum.

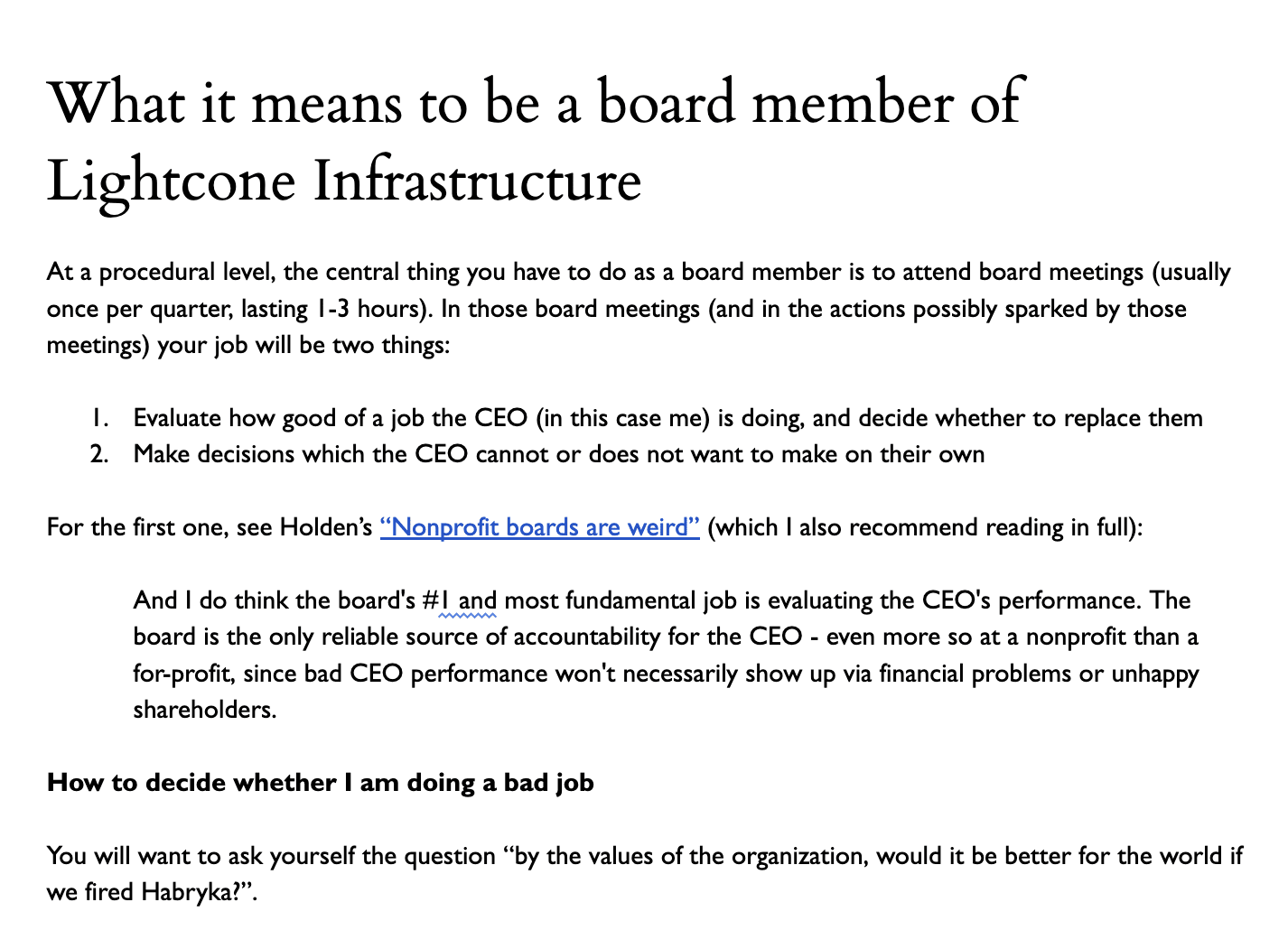

I was curious what Habryka meant when he said this. Don't non-profits usually have some kind of board oversight? It turns out (from documents filed with the State of California), that Lightcone Infrastructure, which operates LW, is what's known as a sole-member nonprofit, with a 1-3 person board of directors determined by a single person (member), namely Oliver Habryka. (Edit: My intended meaning here is that this isn't just a historical fact, but Habryka still has this unilateral power. And after some debate in the comments, it looks like this is correct after all, but was unintentional. See Habryka's clarification.)

However, it also looks like the LW domain is owned by MIRI, and MIRI holds the content license (legally the copyright is owned by each contributor and licensed to MIRI for use on LW). So if there was a big enough dispute, MIRI could conceivably find another team to run LW.

I'm not sure who owns the current code for LW, but I would guess it's Lightcone, so MIRI would have to also recreate a codebase for it (or license GreaterWrong's, I guess).

I was initially confused why Lightcone was set up that way (i.e., why was LW handed over to an...

It looks like I agreed with you too quickly. Just double-checked with Gemini Pro 3.0, and its answer looks correct to me:

This is a fascinating turn of events. Oliver is quoting from Section 3.01 of the bylaws, but he appears to be missing the critical conditional clause that precedes the text he quoted.

If you look at the bottom of Page 11 leading into Page 12 of the PDF, the sentence structure reveals that the "Directors = Members" rule is a fail-safe mechanism that only triggers if the initial member (Oliver) dies or becomes incapacitated without naming a successor.

Here is the text from the document:

[Page 11, bottom] ...Upon the death, resignation, or incapacity of all successor Members where no successor [Page 12, top] Member is named, (1) the directors of this corporation shall serve as the Members of this corporation...

By omitting the "Upon the death, resignation, or incapacity..." part, he is interpreting the emergency succession plan as the current operating rule.

Oh, huh, maybe you are right? If so, I myself was unaware of this! I will double check our bylaws and elections that have happened so far and confirm the current state of things. I was definitely acting under the assumption that I wasn't able to fire Vaniver and Daniel and that they would be able to fire me!

See for example this guidance document I sent to Daniel and Vaniver when I asked them to be board members:

If it is indeed true that they cannot fire me, then I should really rectify that! If so, I am genuinely very grateful for you noticing.

Given clear statements that I have made that I am appointing them to a position in which they are able to fire me, I think they would probably indeed have held the formal power to do so, but it is possible that we didn't follow the right corporate formalities, and if so we should fix that! Corporate formalities do often turn out to really matter in the end.

Some potential risks stemming from trying to increase philosophical competence of humans and AIs, or doing metaphilosophy research. (1 and 2 seem almost too obvious to write down, but I think I should probably write them down anyway.)

- Philosophical competence is dual use, like much else in AI safety. It may for example allow a misaligned AI to make better decisions (by developing a better decision theory), and thereby take more power in this universe or cause greater harm in the multiverse.

- Some researchers/proponents may be overconfident, and cause flawed metaphilosophical solutions to be deployed or spread, which in turn derail our civilization's overall philosophical progress.

- Increased philosophical competence may cause many humans and AIs to realize that various socially useful beliefs have weak philosophical justifications (such as all humans are created equal or have equal moral worth or have natural inalienable rights, moral codes based on theism, etc.). In many cases the only justifiable philosophical positions in the short to medium run may be states of high uncertainty and confusion, and it seems unpredictable what effects will come from many people adopting such positions.

Today I was author-banned for the first time, without warning and as a total surprise to me, ~8 years after banning power was given to authors, but less than 3 months since @Said Achmiz was removed from LW. It seems to vindicate my fear that LW would slide towards a more censorious culture if the mods went through with their decision.

Has anyone noticed any positive effects, BTW? Has anyone who stayed away from LW because of Said rejoined?

Edit: In addition to the timing, previously, I do not recall seeing a ban based on just one interaction/thread, instead of some long term pattern of behavior. Also, I'm not linking the thread because IIUC the mods do not wish to see authors criticized for exercising their mod powers, and I also don't want to criticize the specific author. I'm worried about the overall cultural trend caused by admin policies/preferences, not trying to apply pressure to the author who banned me.

[Reposting my previous comment without linking to the specific thread in question:]

I don't understand the implied connection to "censorious" or "culture". You had a prolonged comment thread/discussion/dispute (I didn't read it) with one individual author, and they got annoyed at some point and essentially blocked you. Setting aside both the tone and the veracity of their justifying statements (<quotes removed>), disengaging from unpleasant interactions with other users is normal and pretty unobjectionable, right?

(Thanks for reposting without the link/quotes. I added back the karma your comment had, as best as I could.) Previously, the normal way to disengage was to just disengage, or to say that one is disengaging and then stop responding, not to suddenly ban someone without warning based on one thread. I do not recall seeing a ban previously that wasn't based on some long term pattern of behavior.

I think the cultural slide will include self-censorship, e.g., having had this experience (of being banned out of the blue), in the future I'll probably subconsciously be constantly thinking "am I annoying this author too much with my comments" and disengage early or change what I say before I get banned, and this will largely be out of my conscious control.

I think when a human gets a negative reward signal, probably all the circuits that contributed to the "episode trajectory" get downweighted, and antagonistic circuits get upweighted, similar to AI being trained with RL. I can override my subconscious circuits with conscious willpower but I only have so much conscious processing and willpower to go around. For example I'm currently feeling a pretty large aversion towards talking with you, but am overriding it because I think it's worth the effort to get this message out, but I can't keep the "override" active forever.

Of course I can consciously learn more precise things, if you were to write about them, but that seems unlikely to change the subconscious learning that happened already.

What I've been using AI (mainly Gemini 2.5 Pro, free through AI Studio with much higher limits than the free consumer product) for:

- Writing articles in Chinese for my family members, explaining things like cognitive bias, evolutionary psychology, and why dialectical materialism is wrong. (My own Chinese writing ability is <4th grade.) My workflow is to have a chat about some topic with the AI in English, then have it write an article in Chinese based on the chat, then edit or have it edit as needed.

- Simple coding/scripting projects. (I don't code seriously anymore.)

- Discussing history, motivations of actors, impact of ideology and culture, what if, etc.

- Searching/collating information.

- Reviewing my LW posts/comments (any clear flaws, any objections I should pre-empt, how others might respond)

- Explaining parts of other people's comments when the meaning or logic isn't clear to me.

- Expanding parts of my argument (and putting this in a collapsible section) when I suspect my own writing might be too terse or hard to understand.

- Sometimes just having a sympathetic voice to hear my lamentations of humanity's probable fate.

I started using AI more after Grok 3 came out (I have an annual X subscription for Tweeting purposes), as previous free chatbots didn't seem capable enough for many of these purposes, and then switched to Gemini 2.0 Pro which was force upgraded to 2.5 Pro. Curious what other people are using AI for these days.

I wrote Smart Losers a long time ago, trying to understand/explain certain human phenomena. But the model could potentially be useful for understanding (certain aspects of) human-AI interactions as well.

Possibly relevant anecdote: Once I was with a group of people who tried various psychological experiments. That day, the organizers proposed that we play iterated Prisoner's Dilemma. I was like "yay, I know the winning strategy, this will be so easy!"

I lost. Almost everyone always defected against me; there wasn't much I could do to get points comparable to other people who mostly cooperated with each other.

After the game, I asked why. (During the game, we were not allowed to communicate, just to write our moves.) The typical answer was something like: "well, you are obviously very smart, so no matter what I do, you will certainly find a way to win against me, so my best option is to play it safe and always defect, to avoid the worst outcome".

I am not even sure if I should be angry at them. I suppose that in real life, when you have about average intelligence, "don't trust people visibly smarter than you" is probably a good strategy, on average, because there are just too many clever scammers walking around. At the same time I feel hurt, because I am a natural altruist and cooperator, so this feels extremely unfair, and a loss for both sides.

(There were other situations in my life where the same pattern probably also applied, but most of the time, you just don't know why other people do whatever they do. This time I was told their reasoning explicitly.)

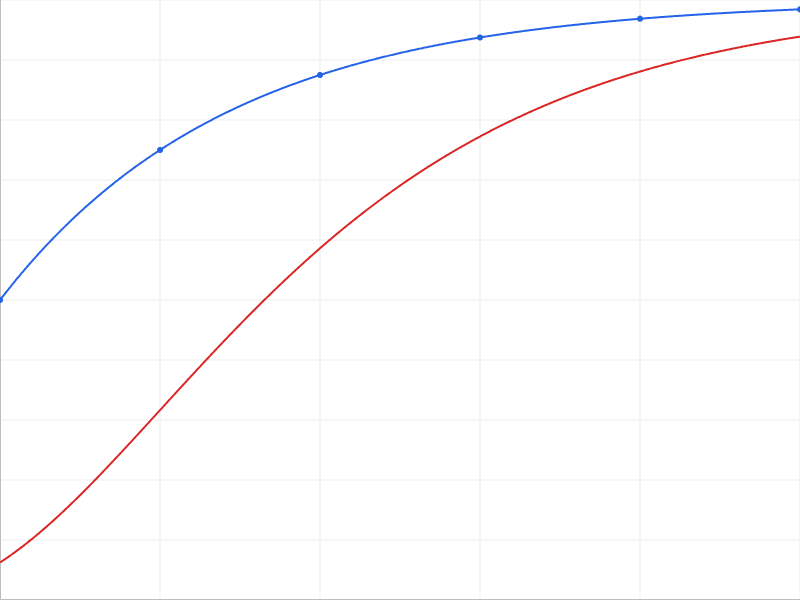

I want to highlight a point I made in an EAF thread with Will MacAskill, which seems novel or at least underappreciated. For context, we're discussing whether the risk vs time (in AI pause/slowdown) curve is concave or convex, or in other words, whether the marginal value of an AI pause increases or decreases with pause length. Here's the whole comment for context, with the specific passage bolded:

Whereas it seems like maybe you think it's convex, such that smaller pauses or slowdowns do very little?

I think my point in the opening comment does not logically depend on whether the risk vs time (in pause/slowdown) curve is convex or concave[1], but it may be a major difference in how we're thinking about the situation, so thanks for surfacing this. In particular I see 3 large sources of convexity:

- The disjunctive nature of risk / conjunctive nature of success. If there are N problems that all have to be solved correctly to get a near-optimal future, without losing most of the potential value of the universe, then that can make the overall risk curve convex or at least less concave. For example compare f(x) = 1 - 1/2^(1 + x/10) and f^4.

- Human intelligence enhancements coming online during t
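The comparison of f and f^4 in the first bullet above can be checked numerically. The following is a minimal sketch, not part of the original comment. It assumes f(x) is read as the chance of solving a single problem given a pause of length x (units unspecified in the text), and that f^4 stands for four such problems that must all be solved, treated as independent. The helper `second_difference` is a hypothetical name introduced here as a rough proxy for curvature.

```python
def f(x: float) -> float:
    """Formula from the text: f(x) = 1 - 1/2^(1 + x/10)."""
    return 1 - 1 / 2 ** (1 + x / 10)


def f4(x: float) -> float:
    """f^4: all four problems solved (independence is an assumption here)."""
    return f(x) ** 4


def second_difference(g, x: float, h: float = 1.0) -> float:
    """Discrete curvature of g at x: positive is locally convex, negative locally concave."""
    return g(x + h) - 2 * g(x) + g(x - h)


if __name__ == "__main__":
    # Print both curves and their discrete curvature at a few pause lengths.
    for x in (0, 5, 10, 15, 20, 40):
        print(
            f"x={x:>2}  f={f(x):.3f}  f^4={f4(x):.3f}  "
            f"curv f={second_difference(f, x):+.5f}  "
            f"curv f^4={second_difference(f4, x):+.5f}"
        )
```

Under these assumptions, f is concave everywhere, while working through the second derivative of f^4 suggests it is convex for x below 10 and concave after that. That matches the "convex or at least less concave" wording: requiring several successes at once pushes the early part of the curve toward convexity.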

An update on this 2010 position of mine, which seems to have become conventional wisdom on LW:

...In my posts, I've argued that indexical uncertainty like this shouldn't be represented using probabilities. Instead, I suggest that you consider yourself to be all of the many copies of you, i.e., both the ones in the ancestor simulations and the one in 2010, making decisions for all of them. Depending on your preferences, you might consider the consequences of the decisions of the copy in 2010 to be the most important and far-reaching, and therefore act mostly

I'm sharing a story about the crew of the Enterprise from Star Trek TNG[1].

This was written with AI assistance, and my workflow was to give the general theme to AI, have it write an outline, then each chapter, then manually reorganize the text where needed, request major changes, point out subpar sentences/paragraphs for it to rewrite, and do small manual changes. The AI used was mostly Claude 3.5 Sonnet, which seems significantly better than ChatGPT-4o and Gemini 1.5 Pro at this kind of thing.

- ^

getting an intelligence/rationality upgrade, which causes them to