Re: Ayahuasca from the ACX survey having effects like:

- “Obliterated my atheism, inverted my world view no longer believe matter is base substrate believe consciousness is, no longer fear death, non duality seems obvious to me now.”

[1]There's a cluster of subcultures that consistently drift toward philosophical idealist metaphysics (consciousness, not matter or math, as fundamental to reality): McKenna-style psychonauts, Silicon Valley Buddhist circles, neo-occultist movements, certain transhumanist branches, quantum consciousness theorists, and various New Age spirituality scenes. While these communities seem superficially different, they share a striking tendency to reject materialism in favor of mind-first metaphysics.

The common factor connecting them? These are all communities where psychedelic use is notably prevalent. This isn't coincidental.

There's a plausible mechanistic explanation: Psychedelics disrupt the Default Mode Network and adjust a bunch of other neural parameters. When these break down, the experience of physical reality (your predictive processing simulation) gets fuzzy and malleable while consciousness remains vivid and present. This creates a powerful intuition that consciousness must be more fundamental than matter: conscious experience is more fundamental/stable than perception of the material world, which many people conflate with the material world itself.

The fun part? This very intuition - that consciousness is primary and matter secondary - is itself being produced by ingesting a chemical which alters physical brain mechanisms. We're watching neural circuitry create metaphysical intuitions in real time.

This suggests something profound about metaphysics itself: Our basic intuitions about what's fundamental to reality (whether materialist OR idealist) might be more about human neural architecture than about ultimate reality. It's like a TV malfunctioning in a way that produces the message "TV isn't real, only signals are real!"

This doesn't definitively prove idealism wrong, but it should make us deeply suspicious of metaphysical intuitions that feel like direct insight - they might just be showing us the structure of our own cognitive machinery.

- ^

Claude-assisted writing; the ideas are mine, and I edited it.

This suggests something profound about metaphysics itself: Our basic intuitions about what's fundamental to reality (whether materialist OR idealist) might be more about human neural architecture than about ultimate reality. It's like a TV malfunctioning in a way that produces the message "TV isn't real, only signals are real!"

In meditation, this is the fundamental insight: the so-called non-dual view. You are neither the fundamental non-self nor the specific self that you believe in; both are empty views, yet that view is itself also empty. That view comes from the co-creation of reality from your own perspective, so why should it be fundamental?

Emptiness is empty, so it can't be true either, and you just kind of fall into the realization that there are only circular or arbitrary properties of experience. Self and non-self are equally true, and living from this experience is wonderfully freeing.

If you view your self as a nested hierarchical controller and you see through it, you conclude that you can't be it, so you cling to what is next most apparent: that you're the entire universe. But that has to be false as well!

This is explored in the later parts of the book Seeing That Frees by Rob Burbea, if anyone's interested.

There's a plausible mechanistic explanation: Psychedelics disrupt the Default Mode Network and adjust a bunch of other neural parameters. When these break down, the experience of physical reality (your predictive processing simulation) gets fuzzy and malleable while consciousness remains vivid and present. This creates a powerful intuition that consciousness must be more fundamental than matter: conscious experience is more fundamental/stable than perception of the material world, which many people conflate with the material world itself.

I think a similar model here is that it disrupts the controller, so you're left with sensory input without a controller, and so you can only be sensory input? I'm uncertain how you use the word consciousness here: do you mean our blob of sensory experience or something else?

Nice! I haven't read a ton about Buddhism; cool that this fits into a known framework.

I'm uncertain how you use the word consciousness here: do you mean our blob of sensory experience or something else?

Yeah, ~subjective experience.

I sent the following offer to discuss metaphysics (lightly edited) to plex. I extend the same offer to anyone else.[1]

(Note: I don't do psychedelics or participate in the mentioned communities[2], and I'm deeply suspicious of intuitions/deep-human-default-assumptions. I notice unquestioned intuitions across views on this, and the primary thing I'd like to do in a discussion is try to get the other person to see theirs.)

Hi, I saw [this shortform] by you

I'm wondering if you'd want to discuss metaphysics itself / let me Socratic-question[3] you. I lean towards the view that "metaphysics is extra-mathematical; it contains non-mathematical-objects, such as phenomenal qualia", but I'd mostly like to see if I can Socratic-question you into noticing incoherence. I'm too uncertain about the true metaphysics to put forth a totalizing theory.

It might help if you've read this post first.

My starting questions:

- Do you believe phenomenal qualia exist? If you do, are they mathematical objects? If you don't, can you watch this short video on a thought experiment?

(Commentary: someone in the comments of the linked post told me that video was what changed their position from 'camp 1' (~illusionist, e.g. "qualia is an intuition from evolution") to 'camp 2' ("qualia is metaphysically fundamental"))

- You mentioned 'matter' and 'math' as candidates for 'fundamental to reality'. My question depends on which is your view.

If you believe matter is fundamental, what do you mean by matter; how does it differ from math?

(Commentary: "matter" seems like an intuitive concept; one has an intuition that reality is "made of something", and calls that "thing" (undefined) "matter", and refers to "matter" without knowing "what it is" (also undefined); there being a word for this intuition prevents them from noticing their lack of knowledge, or the circular self-reference between "matter" and "what the world is made of")

If you believe math is fundamental, what distinguishes this particular mathematical universe from other ones; what qualifies this world as "real", if anything; what 'breathes fire into the equations and creates a world for them to describe'?

(Commentary: one self-consistent position answers "nothing" - that this world is just one of the infinitely many possible mathematical functions / programs. That 'real' secretly means 'the program(s?) we are part of'. Though I observe this position to be rare; most have a strong intuition that there is something which "makes reality real".)

- If you believe math is fundamental, what is math?[4]

- ^

Though I'll likely lose interest if it seems like we're talking past each other / won't resolve any cruxy disagreements.

- ^

(except arguably the qualia research institute's discord server, which might count because it has psychedelics users in it)

- ^

(Questioning with the goal of causing the questioned person to notice specific assumptions or intuitions underlying their beliefs, as a result of trying to generate a coherent answer)

- ^

From an unposted text:

The paradox of recursive explanation applies to metaphysics too

In Explain/Worship/Ignore (~500 words), Eliezer describes an apparent paradox: if you ask for some physical phenomenon to be explained (shown to have a more fundamental cause), then ask the same of the explanation, and so on, the only conceivable outcomes seem paradoxical: infinite recursion, circular self-reference, or a 'special' first cause that does not itself need to be explained.

This is sometimes called the paradox of why. It's usually applied to physics; this text applies it to logic/math-structure and metaphysics/ontology too. In short, you can continually ask "but what is x" for any aspect of logic or ontology.

Here's a hypothetical Socratic dialogue.

Author: "What is reality?"

Interlocutor: "Reality is <complete description of [very large mathematical structure that perfectly reflects the behavior of the universe]>."

Author: "Let's suppose that is true. But this 'math' you just used; what is it?"

Interlocutor: "Mathematics is a program which assigns, based on a few simple rules, 'T' or 'F' to inputs formatted in a certain way."

Author: "I think you just moved the hard part of the question to be one question away: What is a program?"

Interlocutor: "Hmm. I can't define a program in terms of math, because I just did the reverse. Wikipedia says a program is 'a sequence of instructions for a computer to execute'. If I just wrote that, Author would ask what it means for a computer to execute an instruction. What else could I write?

A computer is a part of physics arranged into a localized structure, and for this structure to 'execute an instruction' is for physical law to flow through (operate on, apply to) it, such that the structure's observed behavior matches that of a simpler-than-physics abstract system for transforming inputs to outputs. Unfortunately, I've already defined physics in terms of math, so defining a program in terms of physics would be circular. I think I'm at a dead end."

Author: "Do you want to revise one of your previous definitions?"

Interlocutor: "Maybe I could define math as some more fundamental thing instead of as a certain kind of program. But I just failed to find such a more fundamental thing for 'program'. Let's check Wikipedia again...

WP:Mathematics: 'Mathematics involves the description and manipulation of abstract objects that consist of either abstractions from nature or—in modern mathematics—purely abstract entities that are stipulated to have certain properties, called axioms'

WP:Mathematical_Object: 'Typically, a mathematical object can be a value that can be assigned to a symbol, and therefore can be involved in formulas. Commonly encountered mathematical objects include numbers, expressions, shapes, functions, and sets.'

"I can't use the 'abstractions from nature' part, because I've already defined nature to be mathematical, so that would be circular. Saying math is made of numbers, expressions, symbols, etc. isn't helpful either, though; Author will just ask what those are, metaphysically. Okay, I concede for now. Maybe a commenter will propose such a more-fundamental thing that I'm not aware of, though it would invite the same question."

[End of dialogue]

Alright, I'll try to answer the questions:

- I think qualia are rescuable, in a sense, and my specific view is that they exist as a high-level model.

As for what qualia are, I think they're basically an application of modeling the world in order to control something; thus qualia, broadly speaking, are your self-model.

For my exact views on qualia, the links below are helpful:

https://www.lesswrong.com/posts/FQhtpHFiPacG3KrvD/seth-explains-consciousness#7ncCBPLcCwpRYdXuG

https://www.lesswrong.com/posts/NMwGKTBZ9sTM4Morx/linkpost-a-conceptual-framework-for-consciousness

- My general answer to these questions is probably computation/programs/mathematics, with the caveat that these notions are very general, and thus don't explain anything specific about our world.

I personally agree with this on what counts as real:

If you believe math is fundamental, what distinguishes this particular mathematical universe from other ones; what qualifies this world as "real", if anything; what 'breathes fire into the equations and creates a world for them to describe'?

(Commentary: one self-consistent position answers "nothing" - that this world is just one of the infinitely many possible mathematical functions / programs. That 'real' secretly means 'the program(s?) we are part of'. Though I observe this position to be rare; most have a strong intuition that there is something which "makes reality real".)

What breathes fire into the equations of our specific world is either an infinity of computational resources, or a very large amount of computational resources.

As for what mathematics is, I like the game analogy, where we agree to play a game according to specified rules. Another way to describe mathematics is as a way to generalize over the situations you encounter and abstract away specific details; it is also used to define what something is.

Let's do most of this via the much higher bandwidth medium of voice, but quickly:

- Yes, qualia[1] are real, and are a class of mathematical structure.[2]

- (placeholder for not a question item)

- Matter is a class of math which is ~kinda like our physics.

- Our part of the multiverse probably doesn't have special "exists" tags; probably everything is real (though to get remotely sane answers you need a decreasing reality fluid/caring fluid allocation).

Math, in the sense I'm trying to point to it, is 'Structure'. By which I mean: Well defined seeds/axioms/starting points and precisely specified rules/laws/inference steps for extending those seeds. The quickest way I've seen to get the intuition for what I'm trying to point at with 'structure' is to watch these videos in succession (but it doesn't work for everyone):

- ^

experience/the thing LWers tend to mean, not the most restrictive philosophical sense (#4 on SEP), which has pointlessly high complexity (edit: clarified that this is not the universal philosophical definition, but only one of several meanings, walked back a little on rhetoric)

- ^

possibly maybe even the entire class, though if true most qualia would be very very alien to us and not necessarily morally valuable

Life is Nanomachines

In every leaf of every tree

If you could look, if you could see

You would observe machinery

Unparalleled intricacy

In every bird and flower and bee

Twisting, churning, biochemistry

Sustains all life, including we

Who watch this dance, and know this key

Rationalists try to be well calibrated and have good world models, so we should be great at prediction markets, right?

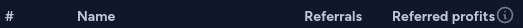

Alas, it looks bad at first glance:

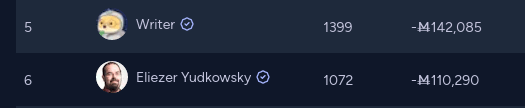

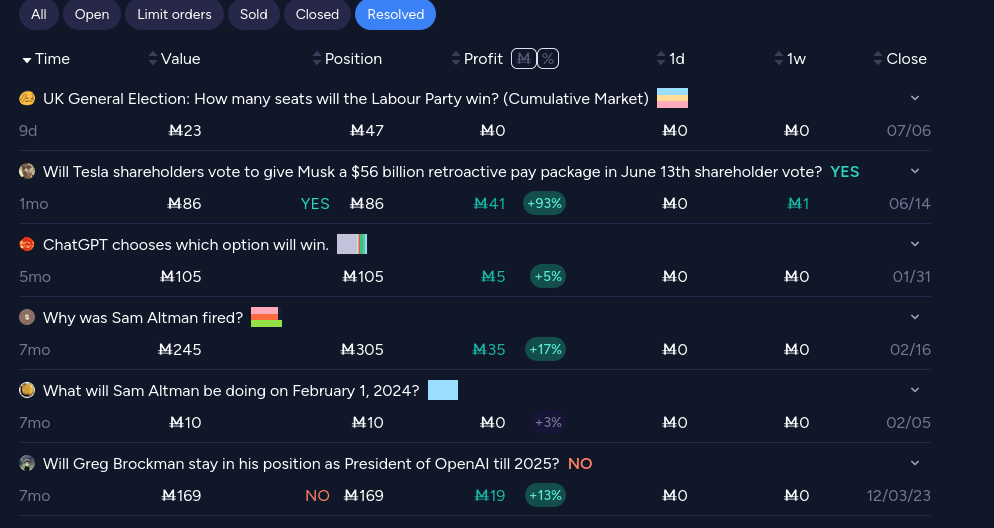

I've got a hopeful guess at why people referred from core rationalist sources seem to be losing so many bets, based on my own scores. My Manifold score looks pretty bad (-M192 overall profit), but there's a fun reason for it: 100% of my resolved bets are either positive or neutral, while all but one of my unresolved bets are negative or neutral.

Here's my full prediction record:

The vast majority of my losses are on things that don't resolve soon and are widely thought to be unlikely (plus a few tiny, not particularly well thought out bets like dropping M15 on LK-99), and I'm certainly losing points there, but my actual track record cashed out in resolutions tells a very different story.

I wonder if there are some clever stats that @James Grugett @Austin Chen or others on the team could do to disentangle these effects, and see what the quality-adjusted bets on critical questions like the AI doom ones would be absent this kind of effect. I'd be excited to see the UI show an extra column on the referrers table with cashed-out predictions only, rather than raw profit. Or, more generally, emphasising cashed-out predictions in the UI more heavily, to mitigate the Keynesian-beauty-contest-style effects of trying to predict distant events.
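A minimal sketch of the "cashed-out predictions only" column, in Python. The `Bet` record and its fields (`market`, `profit`, `resolved`) are hypothetical stand-ins, not Manifold's actual API or data schema; the point is only to report realized profit on resolved markets separately from paper profit on unresolved ones.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Bet:
    """Hypothetical bet record; not Manifold's real schema."""
    market: str
    profit: float   # realized profit if resolved, mark-to-market profit otherwise
    resolved: bool


def split_profit(bets: Iterable[Bet]) -> dict:
    """Separate cashed-out (resolved) profit from paper (unresolved) profit."""
    totals = {"resolved": 0.0, "unresolved": 0.0}
    for bet in bets:
        key = "resolved" if bet.resolved else "unresolved"
        totals[key] += bet.profit
    totals["total"] = totals["resolved"] + totals["unresolved"]
    return totals
```

A referrers table could then show the `"resolved"` figure next to the raw `"total"`, so that a user who is right on resolved markets but underwater on long-dated ones is not scored purely on the latter.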

These datapoints just feel like the result of random fluctuations. Both Writer and Eliezer mostly drove people to participate in the LK-99 stuff, where lots of people were confidently wrong. In general, you can see that basically all the top referrers have negative income:

Among the top 10, Eliezer and Writer are somewhat better than the average (and yaboi is a huge outlier, which I'd guess is explained by them doing something quite different from the other people).

Agree, expanding to the top 9[1] makes it clear they're not unusual in having large negative referral totals. I'd still expect Ratia to be doing better than this, and would guess a bunch of that comes from betting against common positions on doom markets, simulation markets, and other things which won't resolve anytime soon (and betting at times when the prices are not too good, because of correlations in when that group is paying attention).

- ^

Though the rest of the leaderboard seems to be doing much better

The vast majority of my losses are on things that don't resolve soon

The interest rate on Manifold makes such investments not worth it anyway, even if everyone's positions were reasonable by your lights.
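To make the interest-rate point concrete, here is a rough Python sketch comparing the annualized expected return of a long-dated bet with a benchmark rate. All inputs are hypothetical, and it simplifies heavily: it annualizes the expected payout multiple (rather than, say, expected log growth), assumes you buy YES shares that pay 1 on a YES resolution, and ignores fees, loans, and any Manifold-specific interest mechanics.

```python
def annualized_expected_return(price: float, win_prob: float, years: float) -> float:
    """Annualized expected return of buying a YES share at `price` that pays 1
    if YES, given your own probability `win_prob` and `years` until resolution."""
    if not (0 < price < 1) or not (0 <= win_prob <= 1) or years <= 0:
        raise ValueError("need 0 < price < 1, 0 <= win_prob <= 1, years > 0")
    expected_multiple = win_prob / price  # expected payout per unit staked
    return expected_multiple ** (1 / years) - 1


def worth_it(price: float, win_prob: float, years: float, benchmark_rate: float) -> bool:
    """True if the bet's annualized expected return beats `benchmark_rate` per year."""
    return annualized_expected_return(price, win_prob, years) > benchmark_rate
```

For example, buying at 0.50 when you believe 0.60, on a market resolving in 10 years, gives 1.2^(1/10) - 1, or roughly 1.8% per year, which loses to a hypothetical 5% benchmark even though the bet is "right" by your own estimate.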

A couple of months ago I did some research into the impact of quantum computing on cryptocurrencies; the impact seems potentially significant, and a decent number of LWers hold cryptocurrency. I'm not sure if this is the kind of content that's wanted, but I could write up a post on it.

I think I have a draft somewhere, but never finished it. tl;dr: quantum computers let you steal private keys from public keys (so all wallets that have made a send transaction are exposed, since spending reveals the public key). Upgrading can protect wallets whose owners move their coins, but it's going to be messy and slow, and it won't work for lost-key wallets, which hold a pretty huge fraction of the total BTC supply. Once we get capable quantum computers, BTC at least is going to have a very bad time; others will have a moderately bad time depending on how early they upgrade.

Ayahuasca: Informed consent on brain rewriting

(based on anecdotes and general models, not personal experience)

LW supports polls? I'm not seeing it in https://www.lesswrong.com/tag/guide-to-the-lesswrong-editor, unless you mean embedding a Manifold market, which... would work, but adds an extra step between people and voting unless they're already registered on Manifold.

Thinking about some things I may write. If any of them sound interesting to you let me know and I'll probably be much more motivated to create it. If you're up for reviewing drafts and/or having a video call to test ideas that would be even better.

- Memetics mini-sequence (https://www.lesswrong.com/tag/memetics has a few good things, but no introduction to what seems like a very useful set of concepts for world-modelling)

- Book Review: The Meme Machine (focused on general principles and memetic pressures toward altruism)

- Meme-Gene and Meme-Meme co-evolution (focused on memeplexes and memetic defences/filters, could be just a part of the first post if both end up shortish)

- The Memetic Tower of Generate and Test (a set of ideas about the specific memetic processes not present in genetic evolution, inspired by Dennett's tower of generate and test)

- (?) Moloch in the Memes (even if we have an omnibenevolent AI god looking out for sentient well-being, things may get weird in the long term as ideas become more competent replicators/persisters, if the overseer is focusing on the good and freedom of individuals rather than memes. I probably won't actually write this, because I don't have much more than some handwavey ideas about a situation that is really hard to think about.)

- Unusual Artefacts of Communication (some rare and possibly good ways of sharing ideas, e.g. Conversation Menu, CYOA, Arbital Paths, call for ideas. Maybe best as a question with a few pre-written answers?)

Hi, did you ever go anywhere with Conversation Menu? I'm thinking of doing something like this related to AI risk, to quickly get people to the arguments around their initial reaction. If helping with something like this is the kind of thing you had in mind with Conversation Menu, I'd be interested to hear any more thoughts you have on this. (Note: I'm thinking of fading in buttons more than a typical menu.) Thanks!