Back in 2016, CFAR pivoted to focusing on xrisk. I think the magic phrase at the time was:

"Rationality for its own sake, for the sake of existential risk."

I was against this move. I also had no idea how power works. I don't know how to translate this into LW language, so I'll just use mine: I was secret-to-me vastly more interested in being victimized at people/institutions/the world than I was in doing real things.

But the reason I was against the move is solid. I still believe in it.

I want to spell that part out a bit. Not to gripe about the past. The past makes sense to me. But because the idea still applies.

I think it's a simple idea once it's not cloaked in bullshit. Maybe that's an illusion of transparency. But I'll try to keep this simple-to-me and correct toward more detail when asked and I feel like it, rather than spelling out all the details in a way that turns out to have been unneeded.

Which is to say, this'll be kind of punchy and under-justified.

The short version is this:

We're already in AI takeoff. The "AI" is just running on human minds right now. Sorting out AI alignment in computers is focusing entirely on the endgame. That's not where the causal power is.

Maybe that's enough for you. If so, cool.

I'll say more to gesture at the flesh of this.

What kind of thing is wokeism? Or Communism? What kind of thing was Nazism in WWII? Or the flat Earth conspiracy movement? QAnon?

If you squint a bit, you might see there's a common type here.

In a Facebook post I argued that it's fair to view these things as alive. Well, really, I just described them as living, which kind of is the argument. If your woo allergy keeps you from seeing that… well, good luck to you. But if you're willing to just assume I mean something non-woo, you just might see something real there.

These hyperobject creatures are undergoing massive competitive evolution. Thanks Internet. They're competing for resources. Literal things like land, money, political power… and most importantly, human minds.

I mean something loose here. Y'all are mostly better at details than I am. I'll let you flesh those out rather than pretending I can do it well.

But I'm guessing you know this thing. We saw it in the pandemic, where friendships got torn apart because people got hooked by competing memes. Some "plandemic" conspiracy theorist anti-vax types, some blind belief in provably incoherent authorities, the whole anti-racism woke wave, etc.

This is people getting possessed.

And the… things… possessing them are highly optimizing for this.

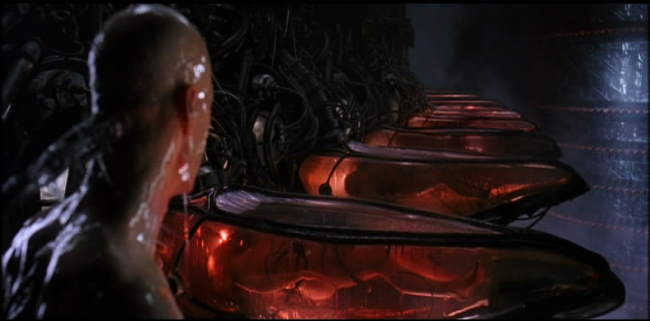

To borrow a bit from fiction: It's worth knowing that in their original vision for The Matrix, the Wachowski siblings wanted humans to be processors, not batteries. The Matrix was a way of harvesting human computing power. As I recall, they had to change it because someone argued that people wouldn't understand their idea.

I think we're in a scenario like this. Not so much the "in a simulation" part. (I mean, maybe. But for what I'm saying here I don't care.) But yes with a functionally nonhuman intelligence hijacking our minds to do coordinated computations.

(And no, I'm not positing a ghost in the machine, any more than I posit a ghost in the machine of "you" when I pretend that you are an intelligent agent. If we stop pretending that intelligence is ontologically separate from the structures it's implemented on, then the same thing that lets "superintelligent agent" mean anything at all says we already have several.)

We're already witnessing orthogonality.

The talk of "late-stage capitalism" points at this. The way greenwashing appears, for instance, is intelligently weaponized Goodhart. It's explicitly hacking people's signals in order to extract what the hypercreature in question wants from people (usually profit).

The way China is drifting with a social credit system and facial recognition tech in its one-party system, it appears to be threatening a Shriek. Maybe I'm badly informed here. But the point is the possibility.

In the USA, we have to file income taxes every year even though we have the tech to make it a breeze. Why? "Lobbying" is right, but that describes the action. What's the intelligence behind the action? What agent becomes your intentional opponent if you try to change this? You might point at specific villains, but they're not really the cause. The CEO of TurboTax doesn't stay the CEO if he doesn't serve the hypercreature's hunger.

I'll let you fill in other examples.

If the whole world were unified on AI alignment being an issue, it'd just be a problem to solve.

The problem that's upstream of this is the lack of will.

Same thing with cryonics really. Or aging.

But AI is particularly acute around here, so I'll stick to that.

The problem is that people's minds aren't clear enough to look at the problem for real. Most folk can't orient to AI risk without going nuts or numb or spitting out gibberish platitudes.

I think this is part accidental and part hypercreature-intentional.

The accidental part is like how advertisements do a kind of DDOS attack on people's sense of inherent self-worth. There isn't even a single egregore to point at as the cause of that. It's just that many, many such hypercreatures benefit from the deluge of subtly negative messaging and therefore tap into it in a sort of (for them) inverse tragedy of the commons. (Victory of the commons?)

In the same way, there's a very particular kind of stupid that (a) is pretty much independent of g factor and (b) is super beneficial for these hypercreatures as a pathway to possession.

And I say "stupid" both because it's evocative and because of ties to terms like "stupendous" and "stupefy". I interpret "stupid" to mean something like "stunned". Like the mind is numb and pliable.

It so happens that the shape of this stupid keeps people from being grounded in the physical world. Like, how do you get a bunch of trucks out of a city? How do you fix the plumbing in your house? Why six feet for social distancing? It's easier to drift to supposed-to's and blame minimization. A mind that does that is super programmable.

The kind of clarity that you need to de-numb and actually goddamn look at AI risk is pretty anti all this. It's inoculation to zombiism.

So for one, that's just hard.

But for two, once a hypercreature (of this type) notices this immunity taking hold, it'll double down. Evolve weaponry.

That's the "intentional" part.

This is where people — having their minds coopted for Matrix-like computation — will pour their intelligence into dismissing arguments for AI risk.

This is why we can't get serious enough buy-in to this problem.

Which is to say, the problem isn't a need for AI alignment research.

The problem is current hypercreature unFriendliness.

From what I've been able to tell, AI alignment folk for the most part are trying to look at this external thing, this AGI, and make it aligned.

I think this is doomed.

Not just because we're out of time. That might be.

But the basic idea was already self-defeating.

Who is aligning the AGI? And to what is it aligning?

This isn't just a cute philosophy problem.

A common result of egregoric stupefaction is identity fuckery. We get this image of ourselves in our minds, and then we look at that image and agree "Yep, that's me." Then we rearrange our minds so that all those survival instincts of the body get aimed at protecting the image in our minds.

How did you decide which bits are "you"? Or what can threaten "you"?

I'll hop past the deluge of opinions and just tell you: It's these superintelligences. They shaped your culture's messages, probably shoved you through public school, gripped your parents to scar you in predictable ways, etc.

It's like installing a memetic operating system.

If you don't sort that out, then that OS will drive how you orient to AI alignment.

My guess is, it's a fuckton easier to sort out Friendliness/alignment within a human being than it is on a computer. Because the stuff making up Friendliness is right there.

And by extension, I think it's a whole lot easier to create/invoke/summon/discover/etc. a Friendly hypercreature than it is to solve digital AI alignment. The birth of science was an early example.

I'm pretty sure this alignment needs to happen in first person. Not third person. It's not (just) an external puzzle, but is something you solve inside yourself.

A brief but hopefully clarifying aside:

Stephen Jenkinson argues that most people don't know they're going to die. Rather, they know that everyone else is going to die.

That's what changes when someone gets a terminal diagnosis.

I mean, if I have a 100% reliable magic method for telling how you're going to die, and I tell you "Oh, you'll get a heart attack and that'll be it", that'll probably feel weird but it won't fill you with dread. If anything it might free you because now you know there's only one threat to guard against.

But there's a kind of deep, personal dread, a kind of intimate knowing, that comes when the doctor comes in with a particular weight and says "I've got some bad news."

It's immanent.

You can feel that it's going to happen to you.

Not the idea of you. It's not "Yeah, sure, I'm gonna die someday."

It becomes real.

You're going to experience it from behind the eyes reading these words.

From within the skin you're in as you witness this screen.

When I talk about alignment being "first person and not third person", it's like this. How knowing your mortality doesn't happen until it happens in first person.

Any kind of "alignment" or "Friendliness" or whatever that doesn't put that first-person-ness at the absolute very center isn't a thing worth crowing about.

I think that's the core mistake anyway. Why we're in this predicament, why we have unaligned superintelligences ruling the world, and why AGI looks so scary.

It's in forgetting the center of what really matters.

It's worth noting that the only scale that matters anymore is the hypercreature one.

I mean, one of the biggest things a single person can build on their own is a house. But that's hard, and most people can't do that. Mostly companies build houses.

Solving AI alignment is fundamentally a coordination problem. The kind of math/programming/etc. needed to solve it is literally superhuman, the way the four color theorem was (and still kind of is) superhuman.

"Attempted solutions to coordination problems" is a fine proto-definition of the hypercreatures I'm talking about.

So if the creatures you summon to solve AI alignment aren't Friendly, you're going to have a bad time.

And for exactly the same reason that most AGIs aren't Friendly, most emergent egregores aren't either.

As individuals, we seem to have some glimmer of ability to lean toward resonance with one hypercreature or another. Even just choosing what info diet you're on can do this. (Although there's an awful lot of magic in that "just choosing" part.)

But that's about it.

We can't align AGI. That's too big.

It's too big the way the pandemic was too big, and the Ukraine/Putin war is too big, and wokeism is too big.

When individuals try to act on the "god" scale, they usually just get possessed. That's the stupid simple way of solving coordination problems.

So when you try to contribute to solving AI alignment, what egregore are you feeding?

If you don't know, it's probably an unFriendly one.

(Also, don't believe your thoughts too much. Where did they come from?)

So, I think raising the sanity waterline is upstream of AI alignment.

It's like we've got gods warring, and they're threatening to step into digital form to accelerate their war.

We're freaking out about their potential mech suits.

But the problem is the all-out war, not the weapons.

We have an advantage in that this war happens on and through us. So if we take responsibility for this, we can influence the terrain and bias egregoric/memetic evolution to favor Friendliness.

Anything else is playing at the wrong level. Not our job. Can't be our job. Not as individuals, and it's individuals who seem to have something mimicking free will.

Sorting that out in practice seems like the only thing worth doing.

Not "solving xrisk". We can't do that. Too big. That's worth modeling, since the gods need our minds in order to think and understand things. But attaching desperation and a sense of "I must act!" to it is insanity. Food for the wrong gods.

Ergo why I support rationality for its own sake, period.

That, at least, seems to target a level at which we mere humans can act.

Given the link, I think you're objecting to something I don't care about. I don't mean to claim that x-rationality is great and has promise to Save the World. Maybe if more really is possible and we do something pretty different to seriously develop it. Maybe. But frankly I recognize stupefying egregores here too and I don't expect "more and better x-rationality" to do a damn thing to counter those for the foreseeable future.

So on this point I think I agree with you… and I don't feel whatsoever dissuaded from what I'm saying.

The rest of what you're saying feels like it's more targeting what I care about though:

Right. And as I said in the OP, stupefaction often entails alienation from object-level reality.

It's also worth noting that LW exists mostly because Eliezer did in fact notice his own stupidity and freaked the fuck out. He poured a huge amount of energy into taking his internal mental weeding seriously in order to never ever ever be that stupid again. He then wrote all these posts to articulate a mix of (a) what he came to realize and (b) the ethos behind how he came to realize it.

That's exactly the kind of thing I'm talking about.

A deep art of sanity worth honoring might involve some techniques, awareness of biases, Bayesian reasoning, etc. Maybe. But focusing on that invites Goodhart. I think LW suffers from this in particular.

I'm pointing at something I think is more central: casting off systematic stupidity and replacing it with systematic clarity.

And yeah, I'm pretty sure one effect of that would be grounding one's thinking in the physical world. Symbolic thinking in service to working with real things, instead of getting lost in symbols as some kind of weirdly independently real.

I think you're objecting to my aesthetic, not my content.

I think it's well-established that my native aesthetic rubs many (most?) people in this space the wrong way. At this point I've completely given up on giving it a paint job to make it more palatable here.

But if you (or anyone else) cares to attempt a translation, I think you'll find what you're saying here to be patently false.

The "cultivate their will" part confuses me. I didn't mean to suggest doing that. I think that's anti-helpful for the most part. Although frankly I think all the stuff about "Tsuyoku Naritai!" and "Shut up and do the impossible" totally fits the bill of what I imagine when reading your words there… but yeah, no, I think that's just dumb and don't advise it.

Although I very much do think that getting damn clear on what you can and cannot do is important, as is ceasing to don responsibility for what you can't choose and fully accepting responsibility for what you can. That strikes me as absurdly important and neglected. As far as I can tell, anyone who affects anything for real has to at least stumble onto an enacted solution for this in at least some domain.

You seem to be weirdly strawmanning me here.

The trucking thing was a reference to Zvi's repeated rants about how politicians didn't seem to be able to think about the physical world enough to solve the Canadian Trucker Convoy clog in Ottawa. I bet that Holden, Eliezer, Paul, etc. would all do massively better than average at sorting out a policy that would physically work. And if they couldn't, I would worry about their "contributions" to alignment being more made of social illusion than substance.

Things like plumbing are physical skills. So is, say, football. I don't expect most football players to magically be better at plumbing. Maybe some correlation, but I don't really care.

But I do expect someone who's mastering a general art of sanity and clarity to be able to think about plumbing in practical physical terms. Instead of "The dishwasher doesn't work" and vaguely hoping the person with the right cosmic credentials will cast a magic spell, there'd be a kind of clarity about what Gears one does and doesn't understand, and turning to others because you see they see more relevant Gears.

If the wizards you've named were no better than average at that, then that would also make me worry about their "contributions" to alignment.

I totally agree. Watching this dynamic play out within CFAR was a major factor in my checking out from it.

That's part of what I mean by "this space is still possessed". Stupefaction still rules here. Just differently.

I think you and I are imagining different things.

I don't think a LW or CFAR or MIRI flavored project that focuses on thinking about egregores and designing counters to stupefaction is promising. I think that'd just be a different flavor of the same stupid sauce.

(I had different hopes back in 2016, but I've been thoroughly persuaded otherwise by now.)

I don't mean to prescribe a collective action solution at all, honestly. I'm not proposing a research direction. I'm describing a problem.

The closest thing to a solution-shaped object I'm putting forward is: Look at the goddamned question.

Part of what inspired me to write this piece at all was seeing a kind of blindness to these memetic forces in how people talk about AI risk and alignment research. Making bizarre assertions about what things need to happen on the god scale of "AI researchers" or "governments" or whatever, roughly on par with people loudly asserting opinions about what POTUS should do.

It strikes me as immensely obvious that memetic forces precede AGI. If the memetic landscape slants down mercilessly toward existential oblivion here, then the thing to do isn't to prepare to swim upward against a future avalanche. It's to orient to the landscape.

If there's truly no hope, then just enjoy the ride. No point in worrying about any of it.

But if there is hope, it's going to come from orienting to the right question.

And it strikes me as quite obvious that the technical problem of AI alignment isn't that question. True, it's a question that if we could answer it might address the whole picture. But that's a pretty damn big "if", and that "might" is awfully concerning.

I do feel some hope about people translating what I'm saying into their own way of thinking, looking at reality, and pondering. I think a realistic solution might organically emerge from that. Or rather, what I'm doing here is an iteration of this solution method. The process of solving the Friendliness problem in human culture has the potential to go superexponential since (a) Moloch doesn't actually plan except through us and (b) the emergent hatching Friendly hypercreature(s) would probably get better at persuading people of its cause as more individuals allow it to speak through them.

But that's the wrong scale for individuals to try anything on.

I think all any of us can actually do is try to look at the right question, and hold the fact that we care about having an answer but don't actually have one.

Does that clarify?