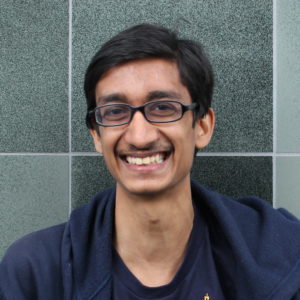

I, along with several AI Impacts researchers, recently talked to Rohin Shah about why he is relatively optimistic about AI systems being developed safely. Rohin Shah is a 5th year PhD student at the Center for Human-Compatible AI (CHAI) at Berkeley, and a prominent member of the Effective Altruism community.

Rohin reported an unusually large (90%) chance that AI systems will be safe without additional intervention. His optimism was largely based on his belief that AI development will be relatively gradual and AI researchers will correct safety issues that come up.

He reported two other beliefs that I found unusual: He thinks that as AI systems get more powerful, they will actually become more interpretable because they will use features that humans also tend to use. He also said that intuitions from AI/ML make him skeptical of claims that evolution baked a lot into the human brain, and he thinks there’s a ~50% chance that we will get AGI within two decades via a broad training process that mimics the way human babies learn.

A full transcript of our conversation, lightly edited for concision and clarity, can be found here.

By Asya Bergal

Thanks for recording this conversation! Some thoughts:

I was pretty surprised to read the above--most of my intuitions about AI come down to repeatedly hearing the point that safety issues are very unpredictable and high variance, and that once a major safety issue happens, it's already too late. The arguments I've seen for this (many years of Eliezer-ian explanations of how hard it is to come out on top against superintelligent agents who care about different things than you) also seem pretty straightforward. And Rohin Shah isn't a stranger to them. So what gives?

Well, look at the summary on top of the full transcript link. Here are some quotes reflecting the point that Rohin is making which is most interesting to me--

From the summary:

and, in more detail, from the transcript:

If I was very convinced of this perspective, I think I'd share Rohin's impression that AI Safety is attainable. This is because I also do not expect highly strategic and agential actions focused on a single long-term goal to be produced by something that "has been given a bunch of heuristics by gradient descent that tend to correlate well with getting high reward and then it just executes those heuristics." To elaborate on some of this with my own perspective:

So I agree that we have a good chance of ensuring that this kind of AI is safe--mainly because I don't think the level of heuristics involved invokes an AI take-off slow enough to clearly indicate safety risks before they become x-risks.

On the other hand, while I agree with Rohin and Hanson's side that there isn't One True Learning Algorithm, there are potentially a multitude of advanced heuristics that approximate extremely agent-y and strategic long-term optimizations. We even have a real-life, human-level example of this. His name is Eliezer Yudkowsky[1]. Moreover, if I got an extra fifty IQ points and a slightly different set of ethics, I wouldn't be surprised if the set of heuristics composing my brain could be an existential threat. I think Rohin would agree with this belief in heuristic kludges that are effectively agential despite not being a One True Algorithm and that, alone, this belief doesn't imply existential risk. If these agent-y heuristics manifest gradually over time, we can easily stop them just by noticing them and turning the AI off before they get refined into something truly dangerous.

However, I don't think that machine-learned heuristics are the only way we can get highly dangerous agent-y heuristics. We've made a lot of mathematical progress on understanding logic, rationality and decision theory and, while machine-learned heuristics may figure out approximately Perfect Reasoning Capabilities just by training, I think it's possible that we can directly hardcode heuristics that do the same thing based on our current understanding of things we associate with Perfect Reasoning Capabilities.

In other words, I think that the dangerously agent-y heuristics which we can develop through gradual machine-learning processes could also be developed by a bunch of mathematicians teaming up and building a kludge that is similarly agent-y right out of the box. The former possibility is something we can mitigate gradually (for instance, by not continuing to build AI once they start doing things that look too agent-y) but the latter seems much more dangerous.

Of course, even if mathematicians could directly kludge some heuristics that can perform long-term strategic planning, implementing such a kludge seems obviously dangerous to me. It also seems rather unnecessary. If we could also just get superintelligent AI that doesn't do scary agent-y stuff by just developing it as a gradual extension of our current machine-learning technology, why would you want to do it the risky and unpredictable way? Maybe it'd be orders of magnitude faster but this doesn't seem worth the trade--especially when you could just directly improve AI-compute capabilities instead.

As of finishing this comment, I think I'm less worried about AI existential risks than I was before.

[1] While this sentence might seem glib, I phrased it the way I did specifically because, while most people display agentic behaviors, most of us aren't that agentic in general. I do not know Eliezer personally but the person who wrote a whole set of sequences on rationality, developed a new decision theory and started up a new research institute focused on saving the world is the best example of an agent-y person I can come up with off the top of my head.

Yeah, that statement is wrong. I was trying to make a more subtle point about how an AI that learns long-term planning on a shorter time-frame is not necessarily going to be able to generalize to longer time-frames (but in the context of superintelligent AIs capable of doing human-level tasks, I do think it will generalize--so that point is kind of irrelevant). I agree with Rohin's response.