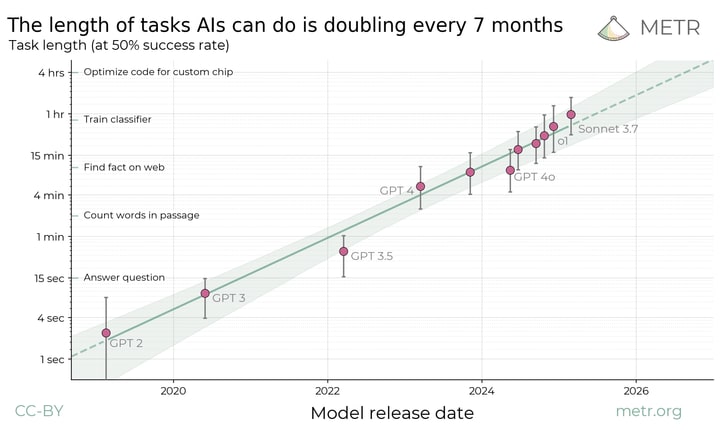

METR's "Measuring AI Ability to Complete Long Tasks" found a Moore's-law-like trend relating (model release date) to (time needed for a human to do a task the model can do).

Here is their rationale for plotting this.

> Current frontier AIs are vastly better than humans at text prediction and knowledge tasks. They outperform experts on most exam-style problems for a fraction of the cost. With some task-specific adaptation, they can also serve as useful tools in many applications. And yet the best AI agents are not currently able to carry out substantive projects by themselves or directly substitute for human labor. They are unable to reliably handle even relatively low-skill, computer-based work like remote executive assistance. It is clear that capabilities are increasing very rapidly in some sense, but it is unclear how this corresponds to real-world impact.

AI alignment research seems to fall into this gap between expertise and real-world impact. The LLMs clearly have enough "expertise" at this point, but doing any kind of good research takes an expert a lot of time, even when it is purely on paper.

It seems therefore that we could use METR's law to predict when AI will be capable of alignment research, or at least when it could substantially help.

My question is: at what time horizon t does "automatically do tasks that humans can do in time t" let us do enough research to solve the alignment problem?
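To make the extrapolation concrete, here is a minimal sketch that assumes the trend is a clean exponential with a fixed doubling time. The function name and both input numbers are placeholders I picked for illustration, not figures taken from this post; plug in METR's actual estimates before reading anything into the output.

```python
import math


def months_until_horizon(current_horizon_hours, target_horizon_hours, doubling_time_months):
    """Months until an exponentially growing time horizon reaches the target.

    Assumes the horizon doubles every `doubling_time_months` with no change in trend.
    Returns 0.0 if the target has already been reached.
    """
    if min(current_horizon_hours, target_horizon_hours, doubling_time_months) <= 0:
        raise ValueError("all inputs must be positive")
    doublings_needed = math.log2(target_horizon_hours / current_horizon_hours)
    return max(0.0, doublings_needed * doubling_time_months)


# Placeholder assumptions, NOT values from this post.
CURRENT_HORIZON_HOURS = 1.0   # hypothetical horizon of today's best model
DOUBLING_TIME_MONTHS = 7.0    # hypothetical doubling time of the trend

if __name__ == "__main__":
    for target_hours in (8.0, 40.0, 160.0):
        months = months_until_horizon(
            CURRENT_HORIZON_HOURS, target_hours, DOUBLING_TIME_MONTHS
        )
        print(f"t = {target_hours:6.0f} human-hours: about {months:.0f} months away")
```

The answer is only logarithmically sensitive to the target t but linearly sensitive to the doubling time, so the uncertainty about the trend continuing matters more than the exact choice of t.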

(Even if you're not a fan of automating alignment, if we do make it to that point we might as well give it a shot!)

This seems very related to what the Benchmarks and Gaps investigation is trying to answer, and it goes into quite a bit more detail and nuance than I'm able to get into here. I don't think there's a publicly accessible full version yet (but I think there will be at some later point).

It is aimed much more at the question "when will we have AIs that can automate work at AGI companies?", which I realize is not really the question you're asking. I don't have a good answer to your specific question because I don't know how hard alignment is, or whether humans could realistically solve it on any time horizon without intelligence enhancement.

However, I tentatively expect safety research speedups to look mostly similar to capabilities research speedups, barring AIs being strategically deceptive and harming safety research.

My median expectation is that time horizons somewhere on the scale of a month (e.g. seeing an involved research project through from start to finish) lead to very substantial research automation at AGI companies (maybe 90% research automation?), though we could already see startling macro-scale speedup effects at the scale of 1-day researchers. At 1-year researchers, things are very likely moving quite fast. I think this translates somewhat faithfully to safety orgs doing any kind of work that can be accelerated by AI agents.
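For a rough sense of how far apart these thresholds are on an exponential trend, here is a small sketch that counts doublings between them. It assumes the horizons are measured in human working time and uses my own round conversions (8-hour days, 21 working days per month, 250 per year); those conversions are assumptions for illustration, not something stated above.

```python
import math

# Assumed conversions to human working hours (illustrative, not from the post).
HOURS_PER_WORK_DAY = 8.0
HORIZON_HOURS = {
    "1 day": HOURS_PER_WORK_DAY,
    "1 month": HOURS_PER_WORK_DAY * 21,   # assumed working days per month
    "1 year": HOURS_PER_WORK_DAY * 250,   # assumed working days per year
}


def doublings_between(shorter_hours, longer_hours):
    """Number of horizon doublings needed to go from one threshold to another."""
    if shorter_hours <= 0 or longer_hours <= 0:
        raise ValueError("horizons must be positive")
    return math.log2(longer_hours / shorter_hours)


if __name__ == "__main__":
    names = list(HORIZON_HOURS)
    for start, end in zip(names, names[1:]):
        n = doublings_between(HORIZON_HOURS[start], HORIZON_HOURS[end])
        print(f"{start} -> {end}: {n:.1f} doublings")
```

Multiplying each count by whatever doubling time you believe gives the calendar gap between the regimes, under the assumption that the trend holds.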