Turing-completeness is a useful analogy we can use to grasp why AGI will inevitably converge to “goal-completeness”.

By way of definition: an AI is goal-complete if it takes an arbitrary goal as input and outputs actions that effectively steer the future toward that goal.
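To make the definition concrete, here is a toy sketch, assuming a hypothetical grid world with a perfect world model (`simulate`) and brute-force search over plans. It is not how any real AI system works. The point it illustrates is the interface: `goal_complete_planner` (a hypothetical name) takes the goal itself as an input, so swapping in a different goal requires no change to the planner.

```python
from itertools import product
from typing import Callable, Dict, Sequence, Tuple

State = Tuple[int, int]  # toy world: a position on a grid (hypothetical)

# Hypothetical toy dynamics: each action moves one step on the grid.
MOVES: Dict[str, Tuple[int, int]] = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0)}


def simulate(start: State, plan: Sequence[str]) -> State:
    """World model: predict where a sequence of moves ends up."""
    x, y = start
    for action in plan:
        dx, dy = MOVES[action]
        x, y = x + dx, y + dy
    return (x, y)


def goal_complete_planner(goal: Callable[[State], float],
                          start: State, horizon: int = 4) -> Tuple[str, ...]:
    """Accept an arbitrary goal (a score over outcomes) and return the plan whose
    predicted outcome scores highest. Exhaustive search over 4**horizon plans."""
    return max(product(MOVES, repeat=horizon),
               key=lambda plan: goal(simulate(start, plan)))


def reach_3_1(s: State) -> float:
    """Goal A: end up as close as possible to (3, 1)."""
    return -(abs(s[0] - 3) + abs(s[1] - 1))


def go_north(s: State) -> float:
    """Goal B: end up as far north as possible."""
    return s[1]


# Same planner, two different goals, no code changes:
print(simulate((0, 0), goal_complete_planner(reach_3_1, (0, 0))))  # → (3, 1)
print(simulate((0, 0), goal_complete_planner(go_north, (0, 0))))   # → (0, 4)
```

Exhaustive search is chosen only because it is obviously correct on a tiny world; what makes something goal-complete in the sense above is how effectively it steers toward arbitrary goals in a rich world, which brute force cannot do.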

A goal-complete AI is analogous to a Universal Turing Machine (UTM): its ability to optimize toward any other AI's goal is analogous to a UTM's ability to perform the same computation as any other Turing machine (TM).
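The UTM half of the analogy can be sketched in a few lines: one fixed interpreter that runs any Turing machine handed to it as data. This is an analogy-level sketch: strictly, a UTM is itself a Turing machine that reads another machine's description from its tape, whereas here a Python function (`run_tm`, a hypothetical name) plays that role. The two example machines and the `start`/`halt` state names are assumptions made for illustration.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (next state, symbol to write, head move: -1, 0, or +1)
Table = Dict[Tuple[str, str], Tuple[str, str, int]]

BLANK = "_"


def run_tm(table: Table, tape: str, max_steps: int = 10_000) -> str:
    """Run any Turing machine supplied as data, starting in state 'start'
    at the leftmost cell, until it enters state 'halt'."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    state, head, steps = "start", 0, 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        state, write, move = table[(state, cells.get(head, BLANK))]
        cells[head] = write
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


# Machine 1: binary increment (scan right to the end, then carry leftward).
INCREMENT: Table = {
    ("start", "0"): ("start", "0", +1),
    ("start", "1"): ("start", "1", +1),
    ("start", BLANK): ("carry", BLANK, -1),
    ("carry", "1"): ("carry", "0", -1),
    ("carry", "0"): ("halt", "1", 0),
    ("carry", BLANK): ("halt", "1", 0),
}

# Machine 2: flip every bit.
FLIP: Table = {
    ("start", "0"): ("start", "1", +1),
    ("start", "1"): ("start", "0", +1),
    ("start", BLANK): ("halt", BLANK, 0),
}

print(run_tm(INCREMENT, "1011"))  # → 1100
print(run_tm(FLIP, "1011"))       # → 0100
```

The interpreter never changes; only the table does. That is the same split the goal-complete planner makes between fixed optimization machinery and a swappable goal.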

Let's put the analogy to work:

Imagine the year is 1970 and you’re explaining to me how all video games have their own logic circuits.

You’re not wrong, but you’re apparently also not aware of the importance of Turing-completeness, or of why you should expect architectural convergence across video games.

Flash forward to today. The fact that you can literally emulate Doom inside any modern video game (through a weird, tedious process with a large constant-factor overhead, but still) is a profoundly important observation: all video games are computations.

More precisely, two things about the Turing-completeness era that came after the specific-circuit era are worth noticing:

- The gameplay specification of sufficiently-sophisticated video games, like most titles being released today, embeds the functionality of Turing-complete computation.

- Computer chips replaced application-specific circuits for the vast majority of applications, even for simple video games like Breakout whose specified behavior isn't Turing-complete.

Expecting Turing-Completeness

From Gwern's classic page, Surprisingly Turing-Complete:

[Turing Completeness] is also weirdly common: one might think that such universality as a system being smart enough to be able to run any program might be difficult or hard to achieve, but it turns out to be the opposite—it is difficult to write a useful system which does not immediately tip over into TC.

“Surprising” examples of this behavior remind us that TC lurks everywhere, and security is extremely difficult...

Computation is not something esoteric which can exist only in programming languages or computers carefully set up, but is something so universal to any reasonably complex system that TC will almost inevitably pop up unless actively prevented.

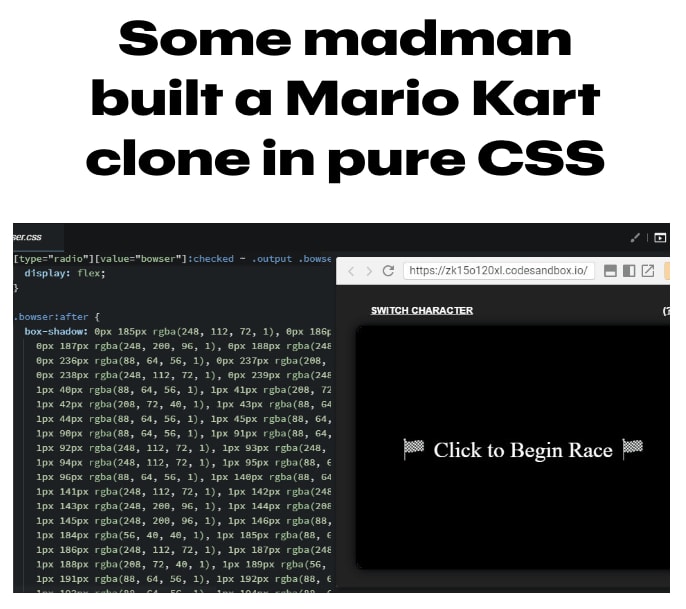

The Cascading Style Sheets (CSS) language that web pages use for styling HTML is a pretty representative example of surprising Turing Completeness:
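As far as I know, the well-known CSS demonstrations work by encoding the Rule 110 cellular automaton with HTML checkboxes and selectors. Rule 110's entire update rule is an 8-entry lookup table, yet Matthew Cook proved it Turing-complete (given a suitable unbounded initial pattern). The sketch below implements only the automaton in Python, not the CSS encoding; the fixed 16-cell width and the zero boundary are simplifying assumptions, so this finite version is not itself universal.

```python
from typing import List


def rule110_step(cells: List[int]) -> List[int]:
    """Advance one generation of Rule 110, treating cells beyond the edges as 0.
    Each new cell is bit (4*left + 2*center + right) of the number 110."""
    padded = [0] + cells + [0]
    return [(110 >> (4 * padded[i - 1] + 2 * padded[i] + padded[i + 1])) & 1
            for i in range(1, len(padded) - 1)]


# Start from a single live cell at the right edge and print a few generations.
row = [0] * 15 + [1]
for _ in range(8):
    print("".join("#" if c else "." for c in row))
    row = rule110_step(row)
```

Encoding the rule as the bits of the integer 110 is where the automaton's name comes from, and it shows how little machinery is needed before a system "tips over" into universality.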

If you look at any electronic device today, like your microwave oven, you won't see a microwave-oven-specific circuit design. What you'll see in virtually every device is the same two-level architecture:

- A Turing-complete chip that can run any program

- An installed program specifying application-specific functionality, like a countdown timer
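The two-level architecture above can be sketched as a fixed interpreter plus an installed program. The "chip" here is a hypothetical one-accumulator machine with four made-up opcodes; this toy instruction set is not Turing-complete, and it only illustrates the split between general-purpose hardware and application-specific software, with a countdown timer as the installed program.

```python
from typing import List, Tuple

Instruction = Tuple[str, int]  # (opcode, argument)


def run_chip(program: List[Instruction], max_steps: int = 1000) -> List[int]:
    """A fixed, general-purpose 'chip' (hypothetical one-accumulator machine).
    It knows nothing about microwaves; the installed program supplies that."""
    acc, pc, display = 0, 0, []
    for _ in range(max_steps):
        if pc >= len(program):        # ran off the end of the program: stop
            return display
        op, arg = program[pc]
        if op == "LOAD":              # acc = arg
            acc = arg
        elif op == "ADD":             # acc += arg
            acc += arg
        elif op == "SHOW":            # append acc to the display (arg unused)
            display.append(acc)
        elif op == "JNZ":             # jump to instruction `arg` if acc != 0
            if acc != 0:
                pc = arg
                continue
        else:
            raise ValueError(f"unknown opcode {op!r}")
        pc += 1
    raise RuntimeError("step limit reached")


# Application-specific functionality lives entirely in the program:
COUNTDOWN_3 = [("LOAD", 3), ("SHOW", 0), ("ADD", -1), ("JNZ", 1), ("SHOW", 0)]
print(run_chip(COUNTDOWN_3))  # → [3, 2, 1, 0]
```

Turning the microwave into some other appliance means writing a different program, not designing a different circuit.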

It's a striking observation that your Philips Sonicare™ toothbrush and the guidance computer on the Apollo lunar module are now architecturally similar. But with a good understanding of Turing-completeness, you could've predicted it half a century ago. You could've correctly anticipated that the whole electronics industry would abandon application-specific circuits and converge on a Turing-complete architecture.

Expecting Goal-Completeness

If you don't want to get blindsided by what's coming in AI, you need to apply the thinking skills of someone who can look at a Breakout circuit board in 1976 and understand why it's not representative of what's coming.

When people laugh off AI x-risk because “LLMs are just a feed-forward architecture!” or “LLMs can only answer questions that are similar to something in their data!” I hear them as saying “Breakout just computes simple linear motion!” or “You can't play Doom inside Breakout!”

OK, BECAUSE AI HASN'T CONVERGED TO GOAL-COMPLETENESS YET. We're not living in the convergent endgame yet.

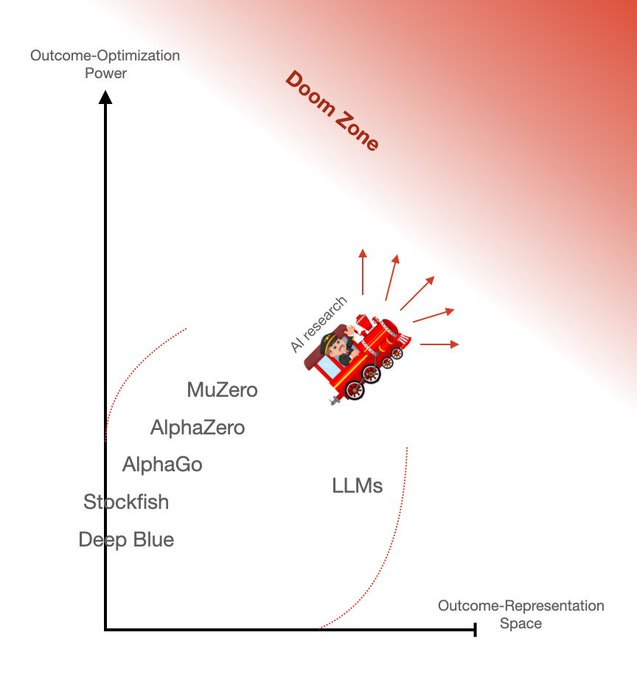

When I look at GPT-4, I see the furthest step that's ever been taken to push out the frontier of outcome-optimization power in an unprecedentedly large and general outcome-representation space (the space of natural-language prompts):

And I can predict that algorithms which keep performing better on these axes will, one way or the other, converge to the dangerous endgame of goal-complete AI.

By the early 1980s, when you could see Pac-Man in arcades, it was knowable to insightful observers that the Turing-complete convergence was happening. It wasn't conclusive evidence: after all, Pac-Man's game semantics aren't Turing-complete, as far as I know.

But still, anyone with a deep understanding of computation could tell that the Turing-complete convergence was in progress. They could tell that the game was complex enough that it was probably already running on a Turing-complete stack, or that, if it wasn't, it soon would be.

A video game is a case of executable information-processing instructions. That's why when a game designer specs out the gameplay to their engineering team, they have no choice but to use computational concepts in their description, such as: what information the game tracks, how the game state determines what's rendered on the screen, and how various actions update the game state.
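The three computational concepts in that game spec can be sketched directly for a stripped-down, Breakout-like game. Everything here is hypothetical and deliberately minimal: a `GameState` record for what the game tracks, `render` for how state becomes pixels (characters here), and `update` for how an action and one tick of time change the state. Ball velocities are assumed to be ±1, and there are no bricks or lives.

```python
from dataclasses import dataclass
from typing import List

WIDTH, HEIGHT = 10, 6  # hypothetical playfield size


@dataclass
class GameState:
    """What information the game tracks."""
    ball_x: int
    ball_y: int
    vel_x: int  # assumed to be -1 or +1
    vel_y: int  # assumed to be -1 or +1
    paddle_x: int


def update(s: GameState, action: str) -> GameState:
    """How a player action (and one tick of time) updates the game state."""
    shift = {"left": -1, "right": 1}.get(action, 0)
    paddle_x = max(0, min(WIDTH - 1, s.paddle_x + shift))
    vx, vy = s.vel_x, s.vel_y
    if not 0 <= s.ball_x + vx < WIDTH:   # would leave the field: bounce off side wall
        vx = -vx
    if not 0 <= s.ball_y + vy < HEIGHT:  # bounce off top/bottom (no lives lost here)
        vy = -vy
    return GameState(s.ball_x + vx, s.ball_y + vy, vx, vy, paddle_x)


def render(s: GameState) -> List[str]:
    """How the game state determines what is drawn on the screen."""
    grid = [["."] * WIDTH for _ in range(HEIGHT)]
    grid[HEIGHT - 1][s.paddle_x] = "="
    grid[s.ball_y][s.ball_x] = "o"  # ball drawn last, on top of the paddle
    return ["".join(row) for row in grid]


state = GameState(ball_x=9, ball_y=2, vel_x=1, vel_y=1, paddle_x=5)
state = update(state, "left")  # ball hits the right wall and reverses
print("\n".join(render(state)))
```

Even this "simple linear motion" game is already a state machine with branching logic, which is why it was natural to run such games on general-purpose chips rather than bespoke circuits.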

It also turned out that word processors and spreadsheets are cases of executing information-processing instructions. So office productivity tools ended up being built on the same convergent architecture as video games.

Oh yeah, and the technologies we use for reading, movies, driving, shopping, cooking... they also turned out to be mostly cases of systems executing information-processing instructions. They too all converged to being lightweight specification cards inserted into Turing-complete hardware.

Recapping the Analogy

Why will AI converge to goal-completeness?

Turing-complete convergence happened because the tools of our pre-computer world were just clumsy ways to process information.

Goal-complete convergence will happen because the tools of our pre-AGI world are still just clumsy ways to steer the future toward desirable outcomes.

Even our blind idiot god, natural selection, recently stumbled onto a goal-complete architecture for animal brains. That's a sign of how convergent goal-completeness is as a property of sufficiently intelligent agents. As Eliezer puts it: “Having goals is a natural way of solving problems.”

Or as Ilya Sutskever might urge: Feel the goal-complete AGI.

Epistemic status: I am a computer engineer and have worked on systems from microcontrollers up to AI accelerators.

To play devil's advocate a little here: you have made a hidden assumption. The reason Turing-complete circuits became the standard is that IC logic gates are reliable and cheap.

This means that if you choose to use a CPU (a microcontroller, in a product), you can rest assured that the resulting device will still accomplish your task, even though it performs unnecessary computations along the way. This is why a modern microwave is driven by a microcontroller rather than just a mechanical clock timer.

If microcontrollers weren't reliable they wouldn't be used this way.

It isn't uncommon for even a simple embedded product to run an operating system.

Current AI, by contrast, is unreliable even without goal direction. It would be dangerous to use such an AI in any current product, and this danger wouldn't be delayed: it would show up immediately in prototyping and product testing.

The entire case for AI being dangerous relies on goal-directed AI being unreliable, especially after it has been running for a while.

Conclusion: human engineers will not put goal-directed AI, or any AI, into important products where the machine has control authority until the AI is measurably more reliable. Some mitigations that make the AI more reliable will prevent treacherous turns from happening; others will not.

To give an example of such mitigations: I looked at the design of a tankless water heater. It uses a microcontroller to control a heating element. The engineer who designed it didn't trust the microcontroller not to fail in a way that left the heating element on all the time, so the design included a thermal fuse to prevent this failure mode.
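The logic of that fuse can be sketched in software, with one loud caveat: a software check running on the same microcontroller would not protect against that microcontroller failing, which is exactly why the real design uses a physical fuse. The sketch models the fuse as a separate, one-way component; the 95 °C trip point and the names `ThermalFuse`, `element_on`, and `stuck_on` are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ThermalFuse:
    """Independent one-way cutoff: once tripped it stays open,
    no matter what the controller requests."""
    trip_temp_c: float
    blown: bool = False

    def allows_power(self, temp_c: float) -> bool:
        if temp_c >= self.trip_temp_c:
            self.blown = True
        return not self.blown


def element_on(controller: Callable[[float], bool],
               fuse: ThermalFuse, temp_c: float) -> bool:
    """The element is energized only if the fuse is intact AND the controller asks.
    The fuse senses temperature first, so it trips even if the controller is off."""
    fuse_ok = fuse.allows_power(temp_c)
    return fuse_ok and controller(temp_c)


def stuck_on(temp_c: float) -> bool:
    """A failed controller that always requests heat."""
    return True


fuse = ThermalFuse(trip_temp_c=95.0)  # hypothetical trip point
print([element_on(stuck_on, fuse, t) for t in [60.0, 80.0, 96.0, 70.0]])
# → [True, True, False, False]
```

The key design choice is that the fuse never consults the controller: its behavior depends only on temperature, so no controller fault, accidental or "intentional", can keep the element on past the trip point.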

Engineers who integrate AI into products, especially products with control authority, can and will design on the assumption that the AI may malfunction or even actively attempt to betray humanity, if that is a risk. It depends on the product, but "is attempting to betray humanity" is something you can design around as long as you can guarantee that another AI model won't collude. If you can't guarantee that, you can fall back on simpler mitigations, like bolting the robotic arm to a fixed mount and using digital isolation.

So the machine may wish to "betray humanity" but can only affect the places the robotic arm can reach.

There are more complex mitigations for products like autonomous cars, which are closer to the AI case: additional models, and physical mitigations like seatbelts, airbags, and automatic emergency braking (AEB) that the AI model cannot override.
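A mitigation the AI "cannot override" can be sketched as an arbitration rule between the AI's command and an independent safety channel. This is a deliberately simplified sketch, not how any production AEB system works: the time-to-collision rule, the 1.5 s threshold, the brake command normalized to [0, 1], and all function names are assumptions.

```python
def aeb_request(distance_m: float, closing_speed_mps: float,
                ttc_threshold_s: float = 1.5) -> float:
    """Hypothetical independent AEB rule: request full braking (1.0) when the
    time to collision falls below a threshold, otherwise no braking (0.0)."""
    if closing_speed_mps <= 0:  # not closing in on the obstacle
        return 0.0
    time_to_collision_s = distance_m / closing_speed_mps
    return 1.0 if time_to_collision_s < ttc_threshold_s else 0.0


def clamp01(value: float) -> float:
    """Limit a brake command to the valid range [0, 1]."""
    return max(0.0, min(1.0, value))


def final_brake(ai_brake: float, aeb_brake: float) -> float:
    """The AI may brake harder than AEB asks, but never softer:
    AEB sets a floor that the AI's command cannot override."""
    return max(clamp01(ai_brake), clamp01(aeb_brake))


print(final_brake(ai_brake=0.0, aeb_brake=aeb_request(10.0, 10.0)))  # → 1.0
print(final_brake(ai_brake=0.3, aeb_brake=aeb_request(50.0, 10.0)))  # → 0.3
```

Taking the maximum means the AI keeps full authority in normal driving but loses it exactly when the independent channel detects an imminent collision.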

I am not talking about saving money; I am talking about competent engineering. By "authority" I mean that the AI can take an action that has consequences, anything from steering a bus to approving expenses.

To engineer an automated system with authority, you need some level of confidence that it's not going to fail or, with AI systems, collude with other AI systems and betray you.

This betrayal risk means you probably will not actually use "goal-complete" AI systems in any position of authority without some kind of mitigation for the betrayal.
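A rough way to see why collusion is the crux: a mitigation only multiplies down the hazard rate if it fails independently of the system it guards. The probabilities below are purely illustrative, not measured values.

$$
P(\text{hazard}) = P(\text{AI fails}) \cdot P(\text{mitigation fails} \mid \text{AI fails})
$$

If the two failures are independent, $P(\text{hazard}) = p_{\text{AI}} \, p_{\text{M}}$, e.g. $10^{-3} \times 10^{-4} = 10^{-7}$. If the mitigation is another AI model that colludes, then $P(\text{mitigation fails} \mid \text{AI fails}) \approx 1$ and $P(\text{hazard}) \approx p_{\text{AI}} = 10^{-3}$, so the mitigation buys nothing. A thermal fuse or a bolted mount fails for physical reasons unrelated to the AI's intentions, which is why such simple mitigations keep their value.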