I’m often asked: “what’s the probability of a really bad outcome from AI?”

There are many different versions of that question, each with a different answer. In this post I’ll try to answer a bunch of them in one place.

Two distinctions

Two distinctions often lead to confusion about what I believe:

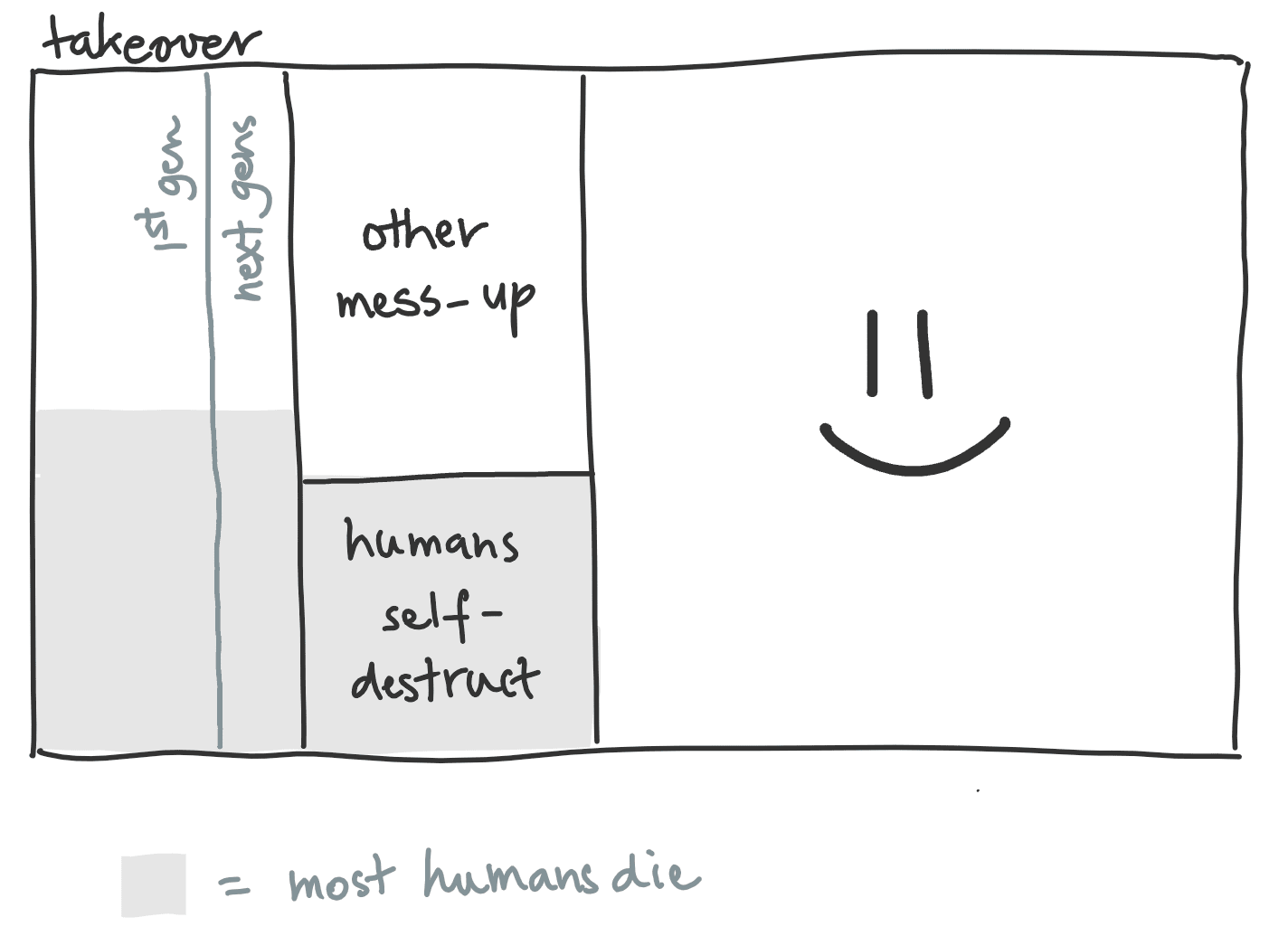

- One distinction is between dying (“extinction risk”) and having a bad future (“existential risk”). I think there’s a good chance of bad futures without extinction, e.g. that AI systems take over but don’t kill everyone.

- An important subcategory of “bad future” is “AI takeover”: an outcome where the world is governed by AI systems, and we weren’t able to build AI systems that share our values or care a lot about helping us. This need not result in humans dying, and it may not even be an objectively terrible future. But it does mean that humanity gave up control over its destiny, and I think in expectation it’s pretty bad.

- A second distinction is between dying now and dying later. I think that there’s a good chance that we don’t die from AI, but that AI and other technologies greatly accelerate the rate of change in the world and so something else kills us shortly afterward. I wouldn’t call this “from AI” but I do think it happens soon in calendar time and I’m not sure the distinction is comforting to most people.

Other caveats

I’ll give my beliefs in terms of probabilities, but these really are just best guesses — the point of numbers is to quantify and communicate what I believe, not to claim I have some kind of calibrated model that spits out these numbers.

Only one of these guesses is even really related to my day job (the 15% probability that AI systems built by humans will take over). For the other questions I’m just a person who’s thought about it a bit in passing. I wouldn’t recommend deferring to the 15%, and I definitely wouldn’t recommend deferring to anything else.

A final source of confusion is that I give different numbers on different days. Sometimes that’s because I’ve considered new evidence, but normally it’s because these numbers are just an imprecise quantification of my belief, which changes from day to day. One day I might say 50%, the next I might say 66%, the next I might say 33%.

I’m giving percentages but you should treat these numbers as having 0.5 significant figures.

My best guesses

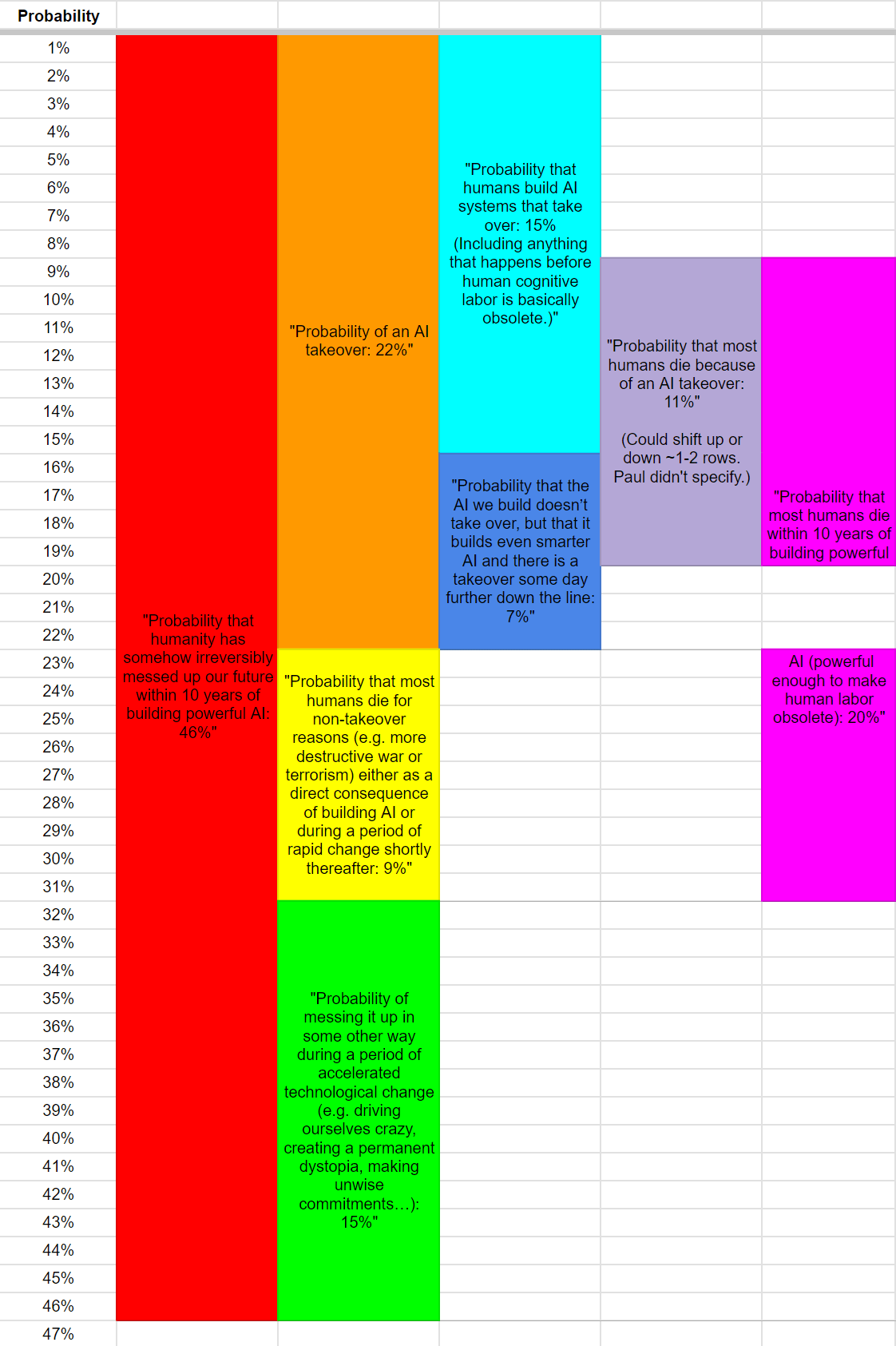

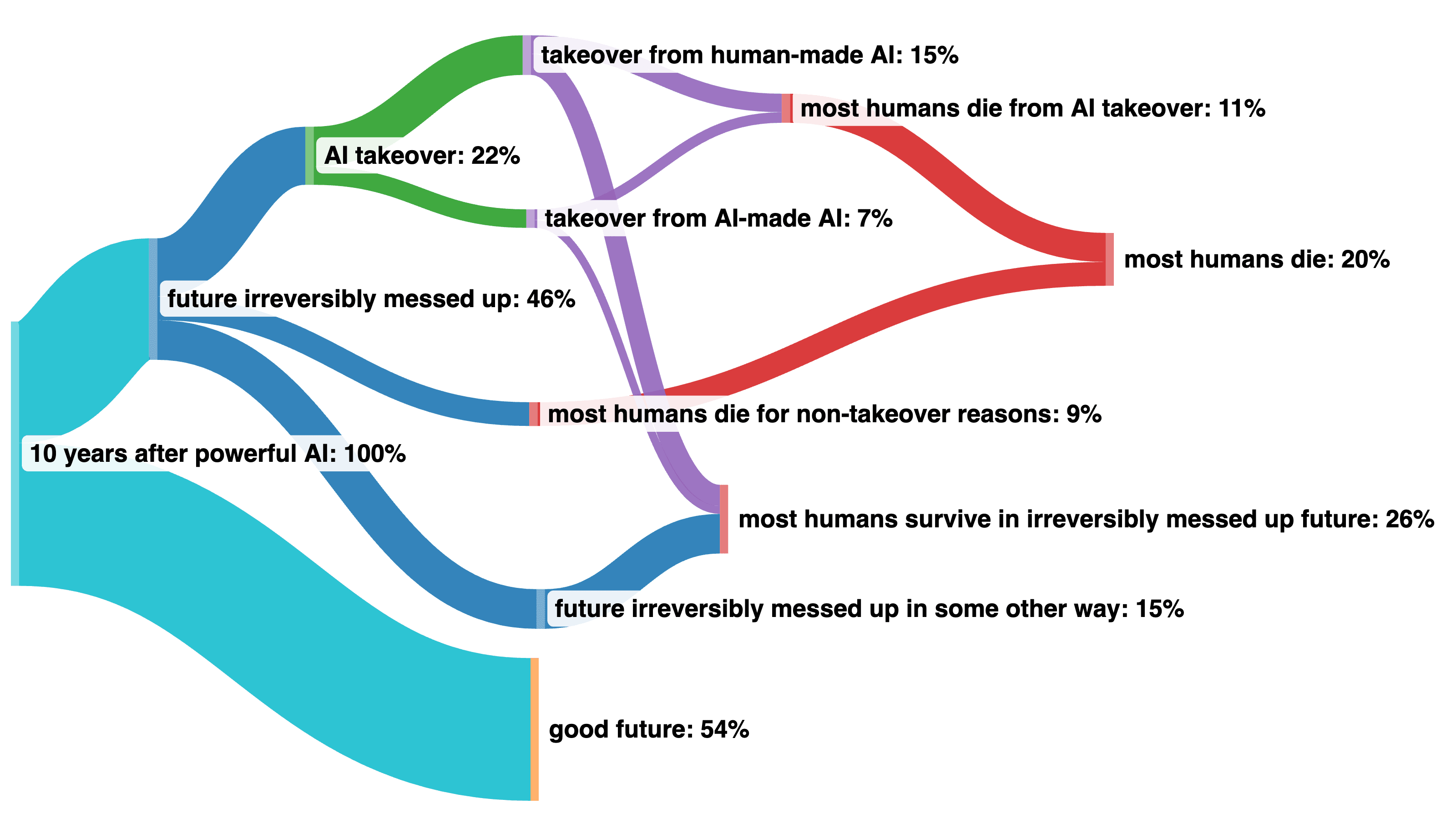

Probability of an AI takeover: 22%

- Probability that humans build AI systems that take over: 15%

(Including anything that happens before human cognitive labor is basically obsolete.)

- Probability that the AI we build doesn’t take over, but that it builds even smarter AI and there is a takeover some day further down the line: 7%

Probability that most humans die within 10 years of building powerful AI (powerful enough to make human labor obsolete): 20%

- Probability that most humans die because of an AI takeover: 11%

- Probability that most humans die for non-takeover reasons (e.g. more destructive war or terrorism) either as a direct consequence of building AI or during a period of rapid change shortly thereafter: 9%

Probability that humanity has somehow irreversibly messed up our future within 10 years of building powerful AI: 46%

- Probability of AI takeover: 22% (see above)

- Additional extinction probability: 9% (see above)

- Probability of messing it up in some other way during a period of accelerated technological change (e.g. driving ourselves crazy, creating a permanent dystopia, making unwise commitments…): 15%
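As a quick arithmetic check, here is a minimal sketch of how the headline numbers above decompose. It assumes the sub-cases under each headline are treated as disjoint, so their probabilities simply add; that is a simplifying assumption rather than a claim about any underlying model. The variable names are made up for illustration, and values are whole-number percentages taken from the list above.

```python
# All values are percentages copied from the list above.
# Assumption: sub-cases under each headline are disjoint, so they add.

# AI takeover
takeover_by_human_built_ai = 15
takeover_by_later_ai = 7
p_takeover = takeover_by_human_built_ai + takeover_by_later_ai

# Most humans die within 10 years of building powerful AI
death_from_takeover = 11
death_non_takeover = 9
p_most_humans_die = death_from_takeover + death_non_takeover

# Humanity irreversibly messes up its future within 10 years
# (the "additional extinction" term reuses the non-takeover death figure)
other_irreversible_mistake = 15
p_messed_up = p_takeover + death_non_takeover + other_irreversible_mistake

print(f"AI takeover: {p_takeover}%")
print(f"Most humans die: {p_most_humans_die}%")
print(f"Irreversibly messed up future: {p_messed_up}%")
```

The sums reproduce the 22%, 20%, and 46% headlines, which should still be read with the same low precision as the inputs.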

Epistemic Status: First read. Moderately endorsed.

I appreciate this post and I think it's generally good for this sort of clarification to be made.

This still seems ambiguous to me. Does "dying" here mean literally everyone? Does it mean "all animals," "all mammals," "all humans," or just "most humans"? If it's all humans dying, do all humans have to be killed by the AI? Or is it permissible that (for example) the AI leaves N people alive, and N is low enough that human extinction follows at the end of these people's natural lifespan?

I think I understand your sentence to mean "literally zero humans exist X years after the deployment of the AI as a direct causal effect of the AI's deployment."

It's possible that this specific distinction is just not a big deal, but I thought it's worth noting.

I think these questions are all still ambiguous, just a little bit less ambiguous.

I gave a probability for "most" humans killed, and I intended P(>50% of humans killed). This is fairly close to my estimate for E[fraction of humans killed].

I think if humans die it is very likely that many non-human animals die as well. I don't have a strong view about the insects and really haven't thought about it.

In the final bullet I implicitly assumed that the probability of most humans dying for non-takeover reasons shortly after building AI was very similar to the probability of human extinction; I was being imprecise, I think that's kind of close to true but am not sure exactly what my view is.