All of Arjun Panickssery's Comments + Replies

Only read the first few paragraphs of your post, but this 1997 article called "Is Love Colorblind?" might be interesting. Sailer claims that Asian men are perceived as somewhat less masculine than white men and black men as slightly more masculine, while the reverse is true for women, leading to more white/Asian and black/white pairings than you'd expect naively.

...Interracial marriage is growing steadily. From the 1960 to the 1990 Census, white - Asian married couples increased almost tenfold, while black - white couples quadrupled. The reasons are ob

I'm responding to John's more general ethical stance here of "working with moral monsters", not anything specific about Cremieux

For what it's worth I interpreted it as being about Cremieux in particular based on the comment it was directly responding to; probably others also interpreted it that way

Yeah I was reading the other day about the Treaty of Versailles and surrounding periods and saw a quote from a German minister about how an overseas empire would be good merely as an outlet for young men to find something productive to do. A totally different social and political environment when you have a young and growing population versus an aging and shrinking population. And Russia is still relatively young: Italy, Germany, Greece, Portugal, and Austria all have median ages of 45 or higher.

Regarding the Russians and East Slavs more broadly, Anatoly Karlin has some napkin math that at the very least shows the huge toll that the world wars had on their populations, which barely grow or s:

...(8a) Russia just within its current borders, assuming otherwise analogous fertility and migration trends, would have had 261.8 million people by 2017 without the triple demographic disasters of Bolshevism, WW2, and the 1990s – that’s double its actual population of 146 million.

Source: Демографические итоги послереволюционного столетия & Демографи

These indices are probably not meaningful. It's easy to find news stories of ordinary people in England and Germany being arrested for their opinions or even for mocking elected officials.

German criminal law actually adds special penalties for "defaming" politicians (Defamation of persons in the political arena, Section 188, German Criminal Code) as part of a dozen free-speech limitations that would violate the First Amendment here. And truth isn't an ironclad defense the way it is here.

In England, content that's merely "grossly offensive" or "menacing" is...

> So after removing the international students from the calculations...

Having a sizable portion of International students necessarily subsidizes the cost of higher education for domestic students

Maybe I should rephrase the sentence in OP. What I mean is that "After assuming that half of international students scored at or above 1550 and half scored below, the remaining spots are divided among domestic students in such-and-such way."

At the start of the post I describe an argument I often hear:

But many people are under the misconception that the resulting “rat race”—the highly competitive and strenuous admissions ordeal—is the inevitable result of the limited class sizes among top schools and the strong talent in the applicant pools, and that it isn’t merely because of the reasons listed in (2). Some even go so far as to suggest that a better system would be to run a lottery for any applicant who meets a minimum “qualification” standard—under the assumption that there would be many such qualified students.

This is the argument that I'm responding to and refuting.

The phrase "Robbers don't need to rob people" is generally accurate.

But saying "Robbers don't need to rob people," and writing a long argument in support of that, makes it seem like you might be confused about the thought processes of robbers.

Shorter sentences are better. Why? Because they communicate clearly. I used to speak in long sentences. And they were abstract. Thus I was hard to understand. Now I use short sentences. Clear sentences.

It's been net-positive. It even makes my thinking clearer. Why? Because you need to deeply understand something to explain it simply.

The prior should be towards liberty

Right, I was the first strong-disagree and I disagreed because of the implied premise that homeschooling was deviant in a way that warranted a high degree of scrutiny, rather than being the natural right/default that should be restricted only in extreme cases. I figure that's why others disagreed as well.

Elaborated in this thread: https://www.lesswrong.com/posts/MJFeDGCRLwgBxkmfs/childhood-and-education-9-school-is-hell?commentId=W8FoHDkB3xAkfcwyw

This can hold true even if we grant that mandatory public school is itself abusive to children

My point doesn't have anything to do with the relative merits of public vs homeschool. My point is that your listed interventions aren't reasonable because they involve too much government intrusion into a parent's freedom to educate his children how he wants, for reasons based on dubious and marginal "safeguarding" grounds. My example of a different education regime was to consider a different status quo that makes the intrusiveness more clear.

...I feel like a

I strong-disagreed since I don't think any of your listed criticisms are reasonable. The implied premise is that homeschooling is deviant in a way that justifies a lot of government scrutiny, when really parents have a natural right to educate their children the way they want (with government intervention being reasonable in extreme cases that pass a high bar of scrutiny).

In particular, I think that outside of an existing norm where most students go to public school, the things you listed would be obviously unjust. Do you think that parents who fail a crim...

I think this post is very funny (disclaimer: I wrote this post).

A number of commenters (both here and on r/slatestarcodex) think it's also profound, basically because of its reference to the anti-critical-thinking position better argued in the Michael Huemer paper that I cite about halfway through the post.

The question of when to defer to experts and when to think for yourself is important. This post is fun as satire or hyperbole, though it ultimately doesn't take any real stance on the question.

I think this post is very good (note: I am the author).

Nietzsche is brought up often in different contexts related to ethics, politics, and the best way to live. This post is the best summary on the Internet of his substantive moral theory, as opposed to vague gesturing based on selected quotes. So it's useful for people who

- are interested in what Nietzsche's arguments are, as a result of their secondhand impressions

- have specific questions like "Why does Nietzsche think that the best people are more important"

- want to know whether something can be well-described

So why don't the four states sign a compact to assign all their electoral votes in 2028 and future presidential elections to the winner of the aggregate popular vote in those four states? Would this even be legal?

It would be legal to make an agreement like this (states are authorized to appoint electors and direct their votes however they like; see Chiafalo v. Washington) but it's not enforceable in the sense that if one of the states reneges, the outcome of the presidential election won't be reversed.

Brief comments on what's bad about the output:

The instruction is to write an article arguing that AI-generated posts suffer from verbosity, hedging, and unclear trains of thought. But ChatGPT makes that complaint in a single sentence in the first paragraph and then spends 6 paragraphs adding a bunch of its own arguments:

- that the "nature of conversation itself" draws value from "human experience, emotion, and authenticity" that AI content replaces with "a hollow imitation of dialogue"

- that AI content creates "an artificial sense of expertise," i.e. that a du

I added to your prompt the instructions

Be brief and write concise prose in the style of Paul Graham. Don't hedge or repeat yourself or go on tangents.

And the output is still bad, but now mostly for the flaw (also present in your output) that ChatGPT can't resist making the complaint about "human authenticity" and "transparency/trust" when that's not what you're talking about:

...I've noticed a troubling trend on online forums: a surge in posts that clearly seem to be generated by AI. These posts are verbose, meandering, and devoid of real substance. They prese

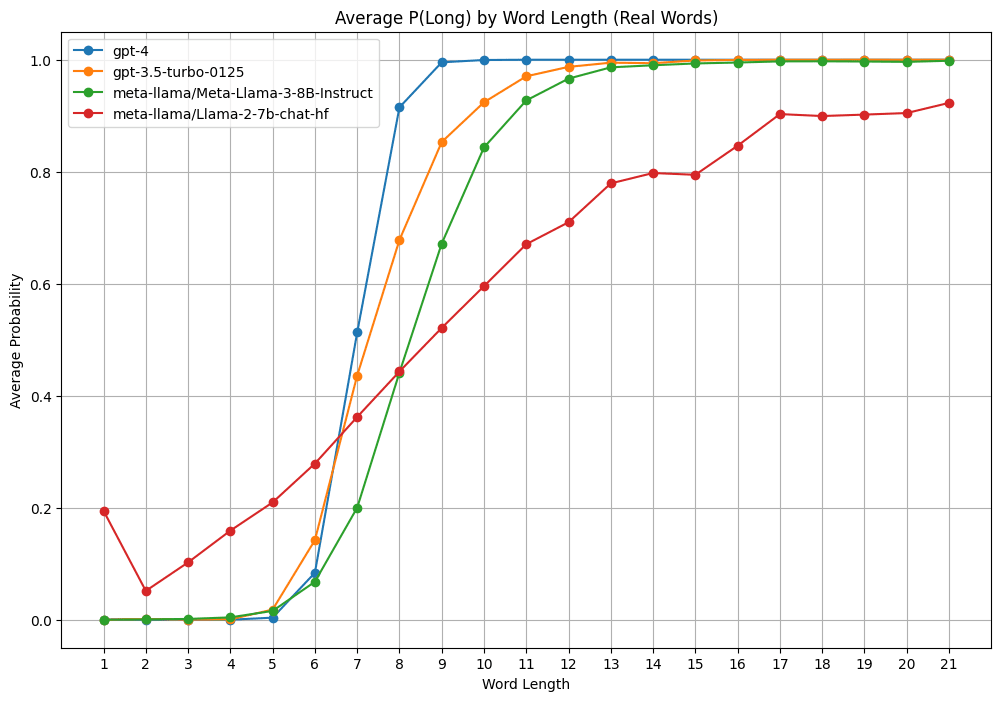

Is this word long or short? Only say "long" or "short". The word is: {word}.

To test out Cursor for fun I asked models whether various words of different lengths were "long" and measured the relative probability of "Yes" vs "No" answers to get a P(long) out of them. But when I use scrambled words of the same length and letter distribution, GPT 3.5 doesn't think any of them are long.

Update: I got Claude to generate many words with connotations related to long ("mile" or "anaconda" or "immeasurable") and short ("wee" or "monosyllabic" or "inconspicuous" or "infinitesimal"). It looks like the models have a slight bias toward the connot...
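A minimal sketch of the two pieces of this measurement, for anyone who wants to reproduce it. It assumes you already have the log-probabilities a model assigns to the two candidate answers; how you obtain them depends on your API and is not shown. The function names `p_long` and `scramble` are hypothetical, not taken from the original code.

```python
import math
import random


def p_long(logprob_long: float, logprob_short: float) -> float:
    """Renormalize two answer log-probabilities into a single P(long).

    Only the two candidate answers are considered, so the result is the
    relative probability of the "long" answer versus the "short" one.
    """
    # Subtract the max before exponentiating for numerical stability.
    m = max(logprob_long, logprob_short)
    a = math.exp(logprob_long - m)
    b = math.exp(logprob_short - m)
    return a / (a + b)


def scramble(word: str, rng: random.Random) -> str:
    """Shuffle the letters of a word.

    The result has the same length and the same letter counts as the
    input, but (usually) is no longer a real word.
    """
    letters = list(word)
    rng.shuffle(letters)
    return "".join(letters)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the scrambles are reproducible
    for w in ["wee", "anaconda", "immeasurable"]:
        print(w, "->", scramble(w, rng))
```

Comparing `p_long` on each real word against `p_long` on its scramble separates the effect of raw length from the effect of the word's meaning.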

What's the actual probability of casting a decisive vote in a presidential election (by state)?

I remember the Gelman/Silver/Edlin "What is the probability your vote will make a difference?" (2012) methodology:

...1. Let E be the number of electoral votes in your state. We estimate the probability that these are necessary for an electoral college win by computing the proportion of the 10,000 simulations for which the electoral vote margin based on all the other states is less than E, plus 1/2 the proportion of simulations for which the margin based on all other
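A rough sketch of the step quoted above, assuming the simulations are available as a list of electoral-vote margins computed from all the other states. The quoted text is cut off before it states the condition for the 1/2 term, so the code assumes it applies when the margin exactly equals E. That is an assumption, and `prob_state_decisive` is a hypothetical name.

```python
from typing import Sequence


def prob_state_decisive(other_state_margins: Sequence[int], e: int) -> float:
    """Estimate the probability that a state's E electoral votes are necessary.

    other_state_margins: one electoral-vote margin per simulation, computed
        from all states except yours (sign indicates which side leads).
    e: the number of electoral votes in your state.
    """
    n = len(other_state_margins)
    if n == 0:
        raise ValueError("need at least one simulation")

    # Simulations where the other states' margin is smaller than E:
    # your state's votes decide the winner.
    below = sum(1 for m in other_state_margins if abs(m) < e)

    # ASSUMPTION: the half-weighted term covers margins exactly equal to E.
    # The quoted source is truncated before this condition is stated.
    ties = sum(1 for m in other_state_margins if abs(m) == e)

    return (below + 0.5 * ties) / n
```

With 10,000 simulated margins, this returns the fraction described in step 1. The later steps of the method (the probability that your vote is decisive within the state) are not covered here.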

FiveThirtyEight released their prediction today that Biden currently has a 53% chance of winning the election | Tweet

The other day I asked:

...Should we anticipate easy profit on Polymarket election markets this year? Its markets seem to think that

- Biden will die or otherwise withdraw from the race with 23% likelihood

- Biden will fail to be the Democratic nominee for whatever reason at 13% likelihood

- either Biden or Trump will fail to win nomination at their respective conventions with 14% likelihood

- Biden will win the election with only 34% likelihood

Even if gas fe

I think the FiveThirtyEight model is pretty bad this year. This makes sense to me, because it's a pretty different model: Nate Silver owns the former FiveThirtyEight model IP (and will be publishing it on his Substack later this month), so FiveThirtyEight needed to create a new model from scratch. They hired G. Elliott Morris, whose 2020 forecasts were pretty crazy in my opinion.

Here are some concrete things about FiveThirtyEight's model that don't make sense to me:

- There's only a 30% chance that Pennsylvania, Michigan, or Wisconsin will be the tipping poin

Should we anticipate easy profit on Polymarket election markets this year? Its markets seem to think that

- Biden will die or otherwise withdraw from the race with 23% likelihood

- Biden will fail to be the Democratic nominee for whatever reason at 13% likelihood

- either Biden or Trump will fail to win nomination at their respective conventions with 14% likelihood

- Biden will win the election with only 34% likelihood

Even if gas fees take a few percentage points off we should expect to make money trading on some of this stuff, right (the money is only locked up...
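The arithmetic behind this question can be sketched as below. The sketch assumes a binary contract that pays 1 if the event happens and 0 otherwise, and it models fees as a flat fraction of the purchase price. Both the fee model and the function name are assumptions for illustration, and the cost of having money locked up until resolution is ignored.

```python
def expected_profit_per_share(price: float, believed_prob: float,
                              fee_rate: float = 0.0) -> float:
    """Expected profit from buying one "yes" share of a binary market.

    price: market price of the share (e.g. 0.34 for a 34% market).
    believed_prob: your own probability that the event happens.
    fee_rate: ASSUMED fee model, a fraction of the purchase price
        (e.g. 0.03 for "a few percentage points").
    """
    cost = price * (1.0 + fee_rate)
    expected_payout = believed_prob * 1.0  # the share pays 1 if the event occurs
    return expected_payout - cost


if __name__ == "__main__":
    # Market says 34%; suppose (hypothetically) you believe 50%, with 3% fees.
    print(expected_profit_per_share(0.34, 0.50, fee_rate=0.03))
```

The trade has positive expected value only when your probability exceeds the fee-adjusted price, so a few points of fees matter little when the disagreement with the market is large.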

I like "Could you repeat that in the same words?" so that people don't try to rephrase their point for no reason.

In addition to daydreaming, sometimes you're just thinking about the first of a series of points that your interlocutor made one after the other (a lot of rationalists talk too fast).

By "subscriber growth" in OP I meant both paid and free subscribers.

My thinking was that people subscribe after seeing posts they like, so if they get to see the body of a good post they're more likely to subscribe than if they only see the title and the paywall. But I guess if this effect mostly affects would-be free subscribers then the effect mostly matters insofar as free subscribers lead to (other) paid subscriptions.

(I say mostly since I think high view/subscriber counts are nice to have even without pay.)

Paid-only Substack posts get you money from people who are willing to pay for the posts, but reduce both (a) views on the paid posts themselves and (b) related subscriber growth (which could in theory drive longer-term profit).

So if two strategies are

- entice users with free posts but keep the best posts behind a paywall

- make the best posts free but put the worst posts behind the paywall

then regarding (b) above, the second strategy has less risk of prematurely stunting subscriber growth, since the best posts are still free. Regarding (a), it's much less bad to lose view counts on your worst posts.

[Book Review] The 8 Mansion Murders by Takemaru Abiko

As a kid I read a lot of the Sherlock Holmes and Hercule Poirot canon. Recently I learned that there's a Japanese genre of honkaku ("orthodox") mystery novels whose gimmick is a fastidious devotion to the "fair play" principles of Golden Age detective fiction, where the author is expected to provide everything that the attentive reader would need to come up with the solution himself. It looks like a lot of these honkaku mysteries include diagrams of relevant locations, genre-savvy characters, and a...

Ask LLMs for feedback on "the" rather than "my" essay/response/code, to get more critical feedback.

Seems true anecdotally, and prompting GPT-4 to give a score between 1 and 5 for ~100 poems/stories/descriptions resulted in an average score of 4.26 when prompted with "Score my ..." versus an average score of 4.0 when prompted with "Score the ..." (code).
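This is not the linked code, only a minimal sketch of how such a comparison could be set up. The `score` argument is a hypothetical callable that sends a prompt to a model and returns a number from 1 to 5. The prompt wording beyond "Score my ..." and "Score the ..." is also an assumption.

```python
from statistics import mean
from typing import Callable, Dict, Sequence, Tuple


def compare_framings(items: Sequence[Tuple[str, str]],
                     score: Callable[[str], float]) -> Dict[str, float]:
    """Average model scores under "my" versus "the" framing.

    items: (kind, text) pairs, e.g. ("poem", "Roses are red...").
    score: HYPOTHETICAL callable mapping a prompt string to a 1-5 score.
    """
    results: Dict[str, float] = {}
    for determiner in ("my", "the"):
        scores = []
        for kind, text in items:
            # Assumed prompt template; only the determiner differs.
            prompt = f"Score {determiner} {kind} from 1 to 5:\n\n{text}"
            scores.append(score(prompt))
        results[determiner] = mean(scores)
    return results
```

Because the same texts are scored under both framings, any gap between the two averages comes from the wording of the prompt and not from the texts themselves.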

Quick Take: People should not say the word "cruxy" when already there exists the word "crucial." | Twitter

Crucial sometimes just means "important" but has a primary meaning of "decisive" or "pivotal" (it also derives from the word "crux"). This is what's meant by a "crucial battle" or "crucial role" or "crucial game (in a tournament)" and so on.

So if Alice and Bob agree that Alice will work hard on her upcoming exam, but only Bob thinks that she will fail her exam—because he thinks that she will study the wrong topics (h/t @Saul Munn)—then they might have ...

This story is inspired by The Trouble With Being Born, a collection of aphorisms by the Romanian philosopher Emil Cioran (discussed more here), including the following aphorisms:

...A stranger comes and tells me he has killed someone. He is not wanted by the police because no one suspects him. I am the only one who knows he is the killer. What am I to do? I lack the courage as well as the treachery (for he has entrusted me with a secret—and what a secret!) to turn him in. I feel I am his accomplice, and resign myself to being arrested and punished as such. At

...Large Language Models (LLMs) have demonstrated remarkable capabilities in various NLP tasks. However, previous works have shown these models are sensitive towards prompt wording, and few-shot demonstrations and their order, posing challenges to fair assessment of these models. As these models become more powerful, it becomes imperative to understand and address these limitations. In this paper, we focus on LLMs robustness on the tas

Do non-elite groups factor into OP's analysis? I interpreted it as inter-elite veto, e.g. between the regional factions of the U.S. or between religious factions, and less about any "people who didn't go to Oxbridge and don't live in London"-type factions.

I can't think of examples where a movement that wasn't elite-led destabilized and successfully destroyed a regime, but I might be cheating in the way I define "elites" or "led."

...But, as other commenters have noted, the UK government does not have structural checks and balances. In my understanding, what they have instead is a bizarrely, miraculously strong respect for precedent and consensus about what "is constitutional" despite (or maybe because of?) the lack of a written constitution. For the UK, and maybe other, less-established democracies (i.e. all of them), I'm tempted to attribute this to the "repeated game" nature of politics: when your democracy has been around long enough, you come to expect that you and the other facti

Wow, thanks for replying.

If the model has beaten GMs at all, then it can only be so weak, right? I'm glad I didn't make stronger claims than I did.

I think my questions about what humans-who-challenge-bots are like were fair, and the point about smurfing is interesting. I'd be interested in other impressions you have about those players.

Is the model's Lichess profile/game history available?

Could someone explain how Rawls's veil of ignorance justifies the kind of society he supports? (To be clear I have an SEP-level understanding and wouldn't be surprised to be misunderstanding him.)

It seems to fail at every step individually:

- At best, the support of people in the OP provides necessary but probably insufficient conditions for justice, unless he refutes all the other proposed conditions involving whatever rights, desert, etc.

- And really the conditions of the OP are actively contrary to good decision-making, e.g. you don't know your particular co

There are even "non-democratic liberal-ish societies" today . . . like Singapore, Brunei, Dubai and other Gulf monarchies, etc