All of Christopher Olah's Comments + Replies

Unfortunately, I don't think a detailed discussion of what we regard as safe to publish would be responsible, but I can share how we operate at a procedural level. We don't consider any research area to be blanket safe to publish. Instead, we consider all releases on a case by case basis, weighing expected safety benefit against capabilities/acceleratory risk. In the case of difficult scenarios, we have a formal infohazard review procedure.

[responded to wrong comment!]

how likely does Anthropic think each is? What is the main evidence currently contributing to that world view?

I wouldn't want to give an "official organizational probability distribution", but I think collectively we average out to something closer to "a uniform prior over possibilities" without that much evidence thus far updating us from there. Basically, there are plausible stories and intuitions pointing in lots of directions, and no real empirical evidence which bears on it thus far.

(Obviously, within the company, there's a wide range of views. Some pe...

The weird thing about a portfolio approach is that the things it makes sense to work on in “optimistic scenarios” often trade off against those you’d want to work on in more “pessimistic scenarios,” and I don't feel like this is really addressed.

Like, if we’re living in an optimistic world where it’s pretty chill to scale up quickly, and things like deception are either pretty obvious or not all that consequential, and alignment is close to default, then sure, pushing frontier models is fine. But if we’re in a world where the problem is nearly impossible, ...

I moderately disagree with this? I think most induction heads are at least primarily induction heads (and this points strongly at the underlying attentional features and circuits), although there may be some superposition going on. (I also think that the evidence you're providing is mostly orthogonal to this argument.)

I think if you're uncomfortable with induction heads, previous token heads (especially in larger models) are an even more crisp example of an attentional feature which appears, at least on casual inspection, to typically be monosematnica...

Can I summarize your concerns as something like "I'm not sure that looking into the behavior of "real" models on narrow distributions is any better research than just training a small toy model on that narrow distribution and interpreting it?" Or perhaps you think it's slightly better, but not considerably?

Between the two, I might actually prefer training a toy model on a narrow distribution! But it depends a lot on exactly how the analysis is done and what lessons one wants to draw from it.

Real language models seem to make extensive use of superpositio...

Regarding the more general question of "how much should interpretability make reference to the data distribution?", here are a few thoughts:

Firstly, I think we should obviously make use of the data distribution to some extent (and much of my work has done so!). If you're trying to reverse engineer a regular computer program, it's extremely useful to have traces of that program running. So too with neural networks!

However, the fundamental thing I care about is understanding whether models will be safe off-distribution, so an understanding which is tied to a...

Thanks for writing this up. It seems like a valuable contribution to our understanding of one-layer transformers. I particularly like your toy example – it's a good demonstration of how more complicated behavior can occur here.

For what it's worth, I understand this behavior as competition between skip-trigrams. We introduce "skip-trigrams" as a way to think of pairs of entries in the OV and QK-circuit matrices. The QK-circuit describes how much the attention head wants to attend to a given token in the attention softmax and implement a particular skip...

I'm curious how you'd define memorisation? To me, I'd actually count this as the model learning features ...

Qualitatively, when I discuss "memorization" in language models, I'm primarily referring to the phenomenon of languages models producing long quotes verbatim if primed with a certain start. I mean it as a more neutral term than overfitting.

Mechanistically, the simplest version I imagine is a feature which activates when the preceding N tokens match a particular pattern, and predicts a specific N+1 token. Such a feature is analogous to the "single ...

In this toy model, is it really the case that the datapoint feature solutions are "more memorizing, less generalizing" than the axis-aligned feature solutions? I don't feel totally convinced of this.

Well, empirically in this setup, (1) does generalize and get a lower test loss than (2). In fact, it's the only version that does better than random. 🙂

But I think what you're maybe saying is that from the neural network's perspective, (2) is a very reasonable hypothesis when T < N, regardless of what is true in this specific setup. And you could...

I feel pretty confused, but my overall view is that many of the routes I currently feel are most promising don't require solving superposition.

It seems quite plausible there might be ways to solve mechanistic interpretability which frame things differently. However, I presently expect that they'll need to do something which is equivalent to solving superposition, even if they don't solve it explicitly. (I don't fully understand your perspective, so it's possible I'm misunderstanding something though!)

To give a concrete example (although this is easie...

Sorry for not noticing this earlier. I'm not very active on LessWrong/AF. In case it's still helpful, a couple of thoughts...

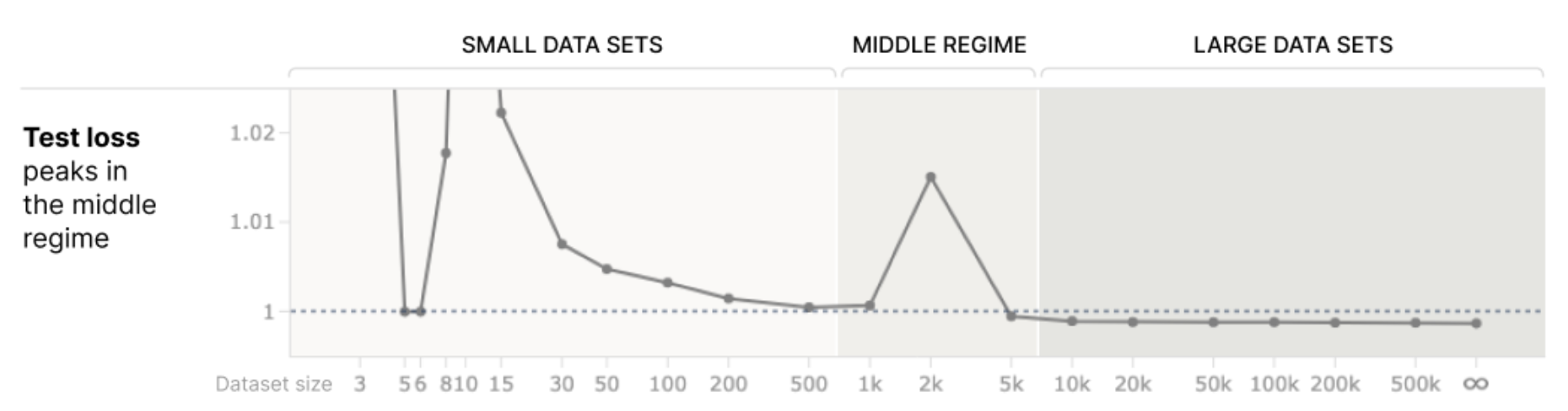

Firstly, I think people shouldn't take this graph too seriously! I made it for a talk in ~2017, I think and even then it was intended as a vague intuition, not something I was confident in. I do occasionally gesture at it as a possible intuition, but it's just a vague idea which may or may not be true.

I do think there's some kind of empirical trend where models in some cases become harder to understand and then easier. For ...

This is a good summary of our results, but just to try to express a bit more clearly why you might care...

I think there are presently two striking facts about overfitting and mechanistic interpretability:

(1) The successes of mechanistic interpretability have thus far tended to focus on circuits which seem to describe clean, generalizing algorithms which one might think of as the "non-overfitting parts of neural networks". We don't really know what "overfitting mechanistically is", and you could imagine a world where it's so fundamentally messy we jus...

See also the Curve Detectors paper for a very narrow example of this (https://distill.pub/2020/circuits/curve-detectors/#dataset-analysis -- a straight line on a log prob plot indicates exponential tails).

I believe the phenomena of neurons often having activation distributions with exponential tails was first informally observed by Brice Menard.

I just stumbled on this post and wanted to note that very closely related ideas are sometimes discussed in interpretability under the names "universality" or "convergent learning": https://distill.pub/2020/circuits/zoom-in/#claim-3

In fact, not only do the same features form across different neural networks, but we actually observe the same circuits as well (eg. https://distill.pub/2020/circuits/frequency-edges/#universality ).

Well, goodness, it's really impressive (and touching) that someone absorbed the content of our paper and made a video with thoughts building on it so quickly! It took me a lot longer to understand these ideas.

I'm trying to not work over the holidays, so I'll restrict myself to a few very quick remarks:

-

There's a bunch of stuff buried in the paper's appendix which you might find interesting, especially the "additional intuition" notes on MLP layers, convolutional-like structure, and bottleneck activations. A lot of it is quite closely related to the thin

Thanks for making that distinction, Steve. I think the reason things might sounds muddled is that many people expect that (1) will drive (2).

Why might one expect (1) to cause (2)? One way to think about it is that, right now, most ML experiments optimistically given 1-2 bits of feedback to the researcher, in the form of whether their loss went up or down from a baseline. If we understand the resulting model, however, that could produce orders of magnitude more meaningful feedback about each experiment. As a concrete example, in InceptionV1, there are a

...Evan, thank you for writing this up! I think this is a pretty accurate description of my present views, and I really appreciate you taking the time to capture and distill them. :)

I’ve signed up for AF and will check comments on this post occasionally. I think some other members of Clarity are planning to so as well. So everyone should feel invited to ask us questions.

One thing I wanted to emphasize is that, to the extent these views seem intellectually novel to members of the alignment community, I think it’s more accurate to attribute the no...

Subscribed! Thanks for the handy feature.

One thing I'd add, in addition to Evan's comments, is that the present ML paradigm and Neural Architecture Search are formidable competitors. It feels like there’s a big gap in effectiveness, where we’d need to make lots of progress for “principled model design” to be competitive with them in a serious way. The gap causes me to believe that we’ll have (and already have had) significant returns on interpretability before we see capabilities acceleration. If it felt like interpretability was accelerating capabilities on the present margin, I’d be a bit more

...I think that’s a fair characterization of my optimism.

I think the classic response to me is “Sure, you’re making progress on understanding vision models, but models with X are different and your approach won’t work!” Some common values of X are not having visual features, recurrence, RL, planning, really large size, and language-based. I think that this is a pretty reasonable concern (more so for some Xs than others). Certainly, one can imagine worlds where this line of work hits a wall and ends up not helping with more powerful systems. However, I would

...I'm curious what's Chris's best guess (or anyone else's) about where to place AlphaGo Zero on that diagram

Without the ability to poke around at AlphaGo -- and a lot of time to invest in doing so -- I can only engage in wild speculation. It seems like it must have abstractions that human Go players don’t have or anticipate. This is true of even vanilla vision models before you invest lots of time in understanding them (I've learned more than I ever needed to about useful features for distinguishing dog species from ImageNet models).

But I’d hope the abstr

...

We certainly think that abrupt changes of safety properties are very possible! See discussion of how the most pessimistic scenarios may seem optimistic until very powerful systems are created in this post, and also our paper on Predictability and Surprise.

With that said, I think we tend to expect a bit of continuity. Empirically, even the "abrupt changes" we observe with respect to model size tend to take place over order-of-magnitude changes in compute. (There are examples of things like the formation of induction heads where qualitative changes in model ... (read more)