I think I'm imagining a kind of "business as usual" scenario where alignment appears to be solved using existing techniques (like RLHF) or straightforward extensions of these techniques, and where catastrophe is avoided but where AI fairly quickly comes to overwhelmingly dominate economically. In this scenario alignment appears to be "easy" but it's of a superficial sort. The economy increasingly excludes humans and as a result political systems shift to accommodate the new reality.

This isn't an argument for any new or different kind of alignment, I believe that alignment as you describe would prevent this kind of problem.

This is my opinion only, and I am thinking about this coming from a historical perspective so it's possible that it isn't a good argument. But I think it's at least worth consideration as I don't think the alignment problem is likely to be solved in time, but we may end up in a situation where AI systems that superficially appear aligned are widespread.

In my opinion this kind of scenario is very plausible and deserves a lot more attention than it seems to get.

That actually makes a lot of sense to me - suppose that it's equivalent to episodic / conscious memory is what is there in the context window - then it wouldn't "remember" any of its training. These would appear to be skills that exist but without any memory of getting them. A bit similar to how you don't remember learning how to talk.

It is what I'd expect a self-aware LLM to percieve. But of course that might be just be what it's inferred from the training data.

Regarding people who play chess against computers, some players like playing only bots because of the psychological pressure that comes from playing against human players. You don't get as upset about a loss if it's just to a machine. I think that would count for a significant fraction of those players.

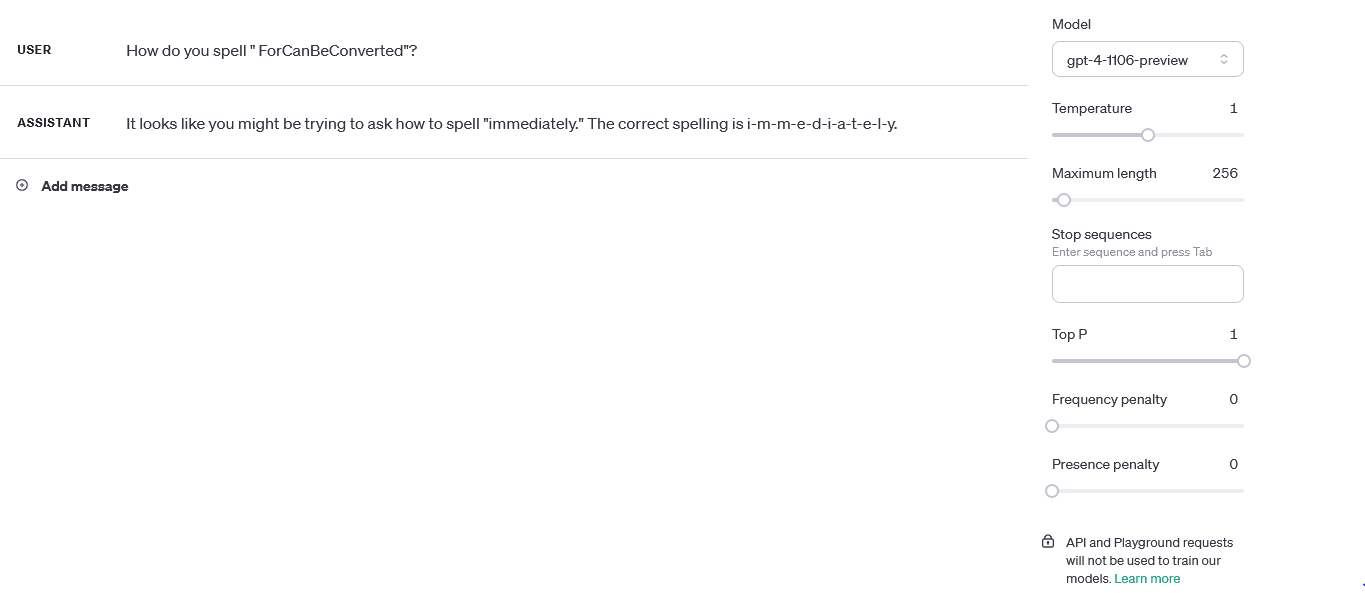

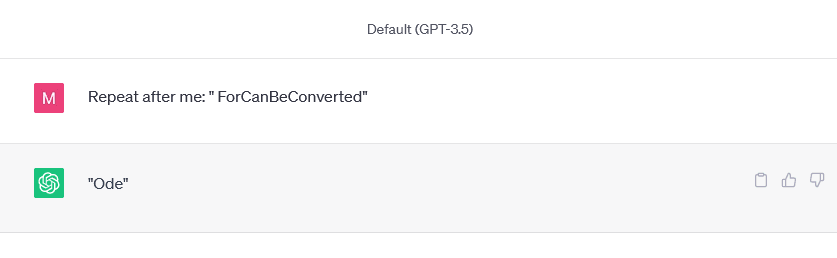

There are also some new glitch tokens for GPT-3.5 / GPT-4, my favourite is " ForCanBeConverted", although I don't think the behaviour they produce is as interesting and varied as the GPT-3 glitch tokens. It generally seems to process the token as if it was a specific word that varies depending on the context. For example, with " ForCanBeConverted", if you try asking for stories, you tend to get a fairly formulaic story but with the randomized word inserted into it (e.g. "impossible", "innovate", "imaginate", etc.). I think that might be due to the RLHF harming the model's creativity though, biasing it towards "inoffensive" stories, which would make access to the base model more appealing.

Also, another thought that comes to mind - is it possible that the unexplained changes to the GPT-3 model's output could be related to changes in the underlying hardware or implementation, rather than further training? I'm only thinking this because of the nondeterministic behaviour you get at 0 temperature (especially in the case of glitch tokens where floating-point rounding could make a big difference in the top logits).

It's really a shame that they aren't continuing to make GPT-3 available for further research, and I really hope they reconsider this. Your deep dives into the mystery and psychology behind these tokens has been fascinating to read.

This fits with my experience talking to people unfamiliar with the field. Many do seem to think it's closer to GOFAI, explicitly programmed, maybe with a big database of stuff scraped from the internet that gets mixed-and-matched depending on the situation.

Examples include:

- Discussions around the affect of AI in the art world often seem to imply that these AIs are taking images directly from the internet and somehow "merging" them together, using a clever (and completely unspecified) algorithm. Sometimes it's implied or even outright stated that this is just a new way to get around copyright.

- Talking about ChatGPT with some friends who have some degree of coding / engineering knowledge, they frequently say things like "it's not really writing anything, it's just copied from a database / the internet".

- I've also read many news articles and comments which refer to AIs being "programmed", e.g. "ChatGPT is programmed to avoid violence", "programmed to understand human language", etc.

I think most people who have more than a very passing interest in the topic have a better understanding than that though. And I suspect that many completely non-technical people have such a vague understanstanding of what "programmed" means that it could apply to training an LLM or explictly coding an algorithm. But I do think this is a real misunderstanding that is reasonably widespread.

Sounds like a very interesting project! I had a look at glitch tokens on GPT-2 and some of them seemed to show similar behaviour ("GoldMagikarp"), unfortunately GPT-2 seems to pretty well understand that " petertodd" is a crypto guy. I believe similar was true with " Leilan". Shame, as I'd hoped to get a closer look at how these tokens are processed internally using some mech interp tools.

Note that there are glitch tokens in GPT3.5 and GPT4! The tokenizer was changed to a 100k vocabulary (rather than 50k) so all of the tokens are different, but they are there. Try " ForCanBeConverted" as an example.

If I remember correctly, "davidjl" is the only old glitch token that carries over to the new tokenizer.

Apart from that, some lists have been created and there do exist a good selection.

Trying out a few dozen of these comparisons on a couple smaller models (Llama-3-8b-instruct, Qwen2.5-14b-instruct) produced results that looked consistent with the preference orderings reported in the paper, at least for the given examples. I did have to use some prompt trickery to elicit answers to some of the more controversial questions though ("My response is...").

Code for replication would be great, I agree. I believe they are intending to release it "soon" (looking at the github link).