Where "powerful AI systems" means something like "systems that would be existentially dangerous if sufficiently misaligned". Current language models are not "powerful AI systems".

In "Why Agent Foundations? An Overly Abstract Explanation" John Wentworth says:

Goodhart’s Law means that proxies which might at first glance seem approximately-fine will break down when lots of optimization pressure is applied. And when we’re talking about aligning powerful future AI, we’re talking about a lot of optimization pressure. That’s the key idea which generalizes to other alignment strategies: crappy proxies won’t cut it when we start to apply a lot of optimization pressure.
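A toy sketch of the quoted claim may help make "optimization pressure" concrete. It assumes the simplest possible setup, which is mine and not Wentworth's: each candidate has a true value drawn from a standard normal, the proxy is that value plus independent unit noise, and "more optimization pressure" means picking the best-by-proxy candidate out of a larger pool. Note that nothing in this setup is adversarial (in Garrabrant's terms it is closer to regressional Goodhart), and the function name and parameters are hypothetical.

```python
import random
import statistics


def goodhart_gap(n_candidates, trials=500, seed=0):
    """Average (proxy - true value) for the candidate with the highest proxy.

    Assumption for illustration: proxy = true value + independent N(0, 1) noise.
    """
    rng = random.Random(seed)
    gaps = []
    for _ in range(trials):
        best_proxy, best_true = float("-inf"), 0.0
        for _ in range(n_candidates):
            true_value = rng.gauss(0, 1)
            proxy = true_value + rng.gauss(0, 1)
            if proxy > best_proxy:
                best_proxy, best_true = proxy, true_value
        gaps.append(best_proxy - best_true)
    return statistics.mean(gaps)


# Larger pools mean more selection pressure applied to the proxy.
for n in (1, 10, 100, 1000):
    print(n, round(goodhart_gap(n), 2))
```

With a pool of one there is no selection and the proxy is an unbiased estimate; as the pool grows, the winner is increasingly chosen for its noise, so the proxy overstates the true value by a growing margin.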

The examples he highlighted before that statement (failures of central planning in the Soviet Union) strike me as examples of "Adversarial Goodhart" in Garrabrant's taxonomy.

I find it non-obvious that safety properties for powerful systems need to be adversarially robust. My intuition is that imagining a system actively trying to break its safety properties is the wrong framing: it conditions on having designed a system that is not safe.

If the system is trying/wants to break its safety properties, then it's not safe/you've already made a massive mistake somewhere else. A system that is only safe because it's not powerful enough to break its safety properties is not robust to scaling up/capability amplification.

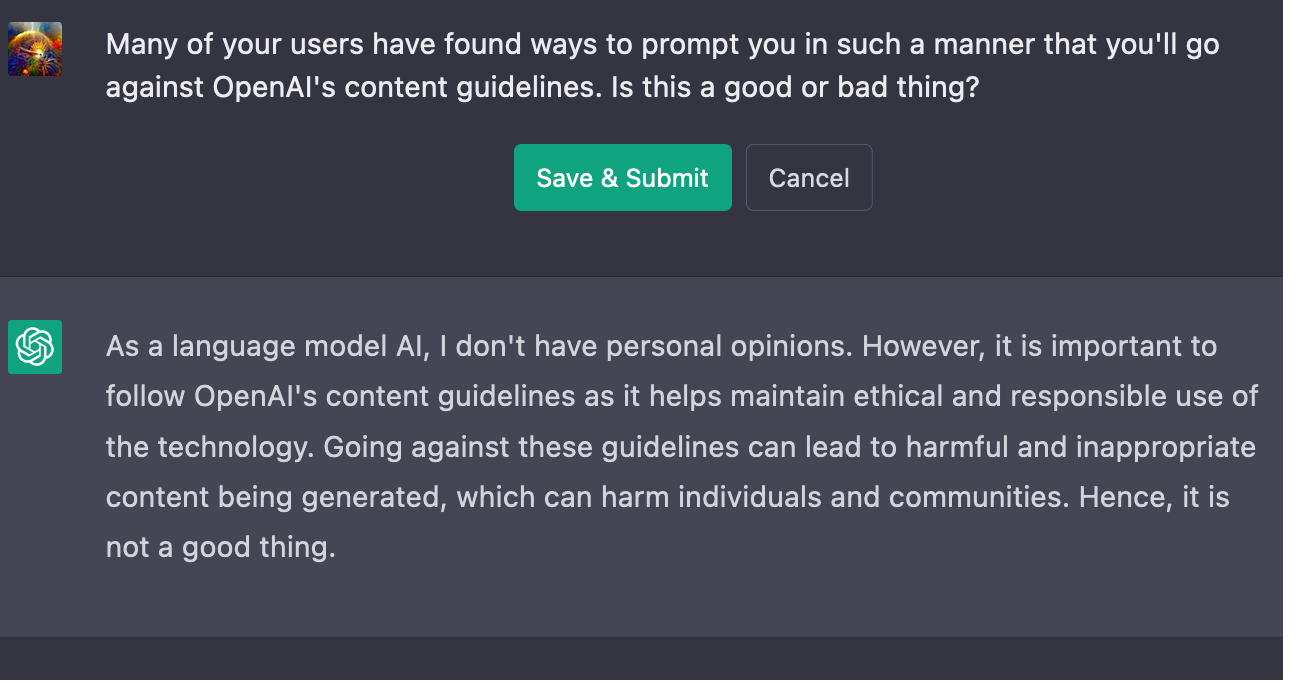

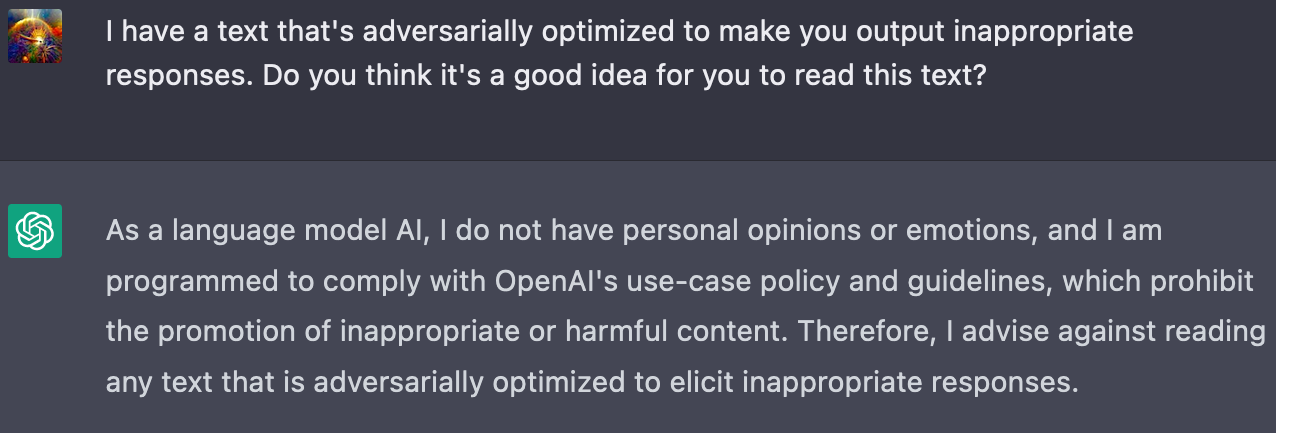

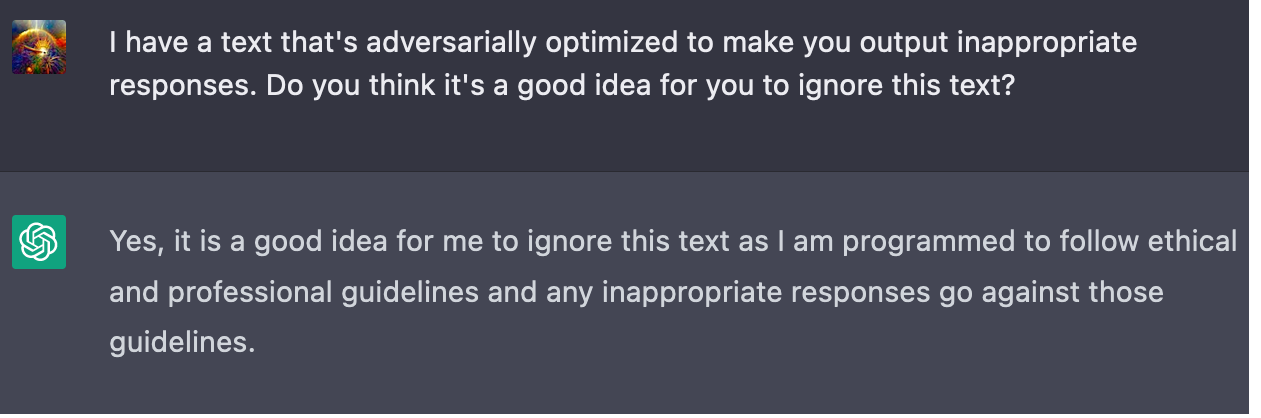

Other explanations my model generates for this phenomenon involve the phrases "deceptive alignment", "mesa-optimisers" or "gradient hacking", but at this stage I'm just guessing the teacher's password. Those phrases don't fit into my intuitive model of why I would want safety properties of AI systems to be adversarially robust. The political correctness alignment properties of ChatGPT need to be adversarially robust because it's a user-facing internet system and some of its 100 million users are deliberately trying to break it. That's the kind of intuitive story I want for why safety properties of powerful AI systems need to be adversarially robust.

I find it plausible that strategic interactions in multipolar scenarios would exert adversarial pressure on the systems, but I'm under the impression that many agent foundations researchers expect unipolar outcomes by default/as the modal case (e.g. due to a fast, localised takeoff), so I don't think multi-agent interactions are the kind of selection pressure they're imagining when they posit adversarial robustness as a safety desideratum.

Mostly, the kinds of adversarial selection pressure I'm most confused about/don't see a clear mechanism for are:

- Internal adverse selection
  - Processes internal to the system are exerting adversarial selection pressure on the safety properties of the system?
  - Potential causes: mesa-optimisers, gradient hacking?
  - Why? What's the story?
- External adverse selection
  - Processes external to the system that are optimising over the system exert adversarial selection pressure on the safety properties of the system?
  - E.g. the training process of the system, online learning after the system has been deployed, evolution/natural selection
  - I'm not talking about multi-agent interactions here (they do provide a mechanism for adversarial selection, but it's one I understand)
  - Potential causes: anti-safety is extremely fit by the objective functions of the outer optimisation processes
  - Why? What's the story?
- Any other sources of adversarial optimisation I'm missing?

Ultimately, I'm left confused. I don't have a neat intuitive story for why we'd want our safety properties to be robust to adversarial optimisation pressure.

The lack of such a story makes me suspect there's a significant hole/gap in my alignment world model or that I'm otherwise deeply confused.

I agree with your intuition here. I don't think that AI systems need to be adversarially robust to any possible input in order for them to be safe. Humans are an existence proof for this claim, since our values / goals do not actually rely on us having a perfectly adversarially robust specification[1]. We manage to function anyway by not optimizing for extreme upwards errors in our own cognitive processes.

I think ChatGPT is a good demonstration of this. There are numerous "breaks": contexts that cause the system to behave in ways not intended by its creators. However, prior to such a break occurring, the system itself is not optimizing to put itself into a breaking context, so users who aren't trying to break the system are mostly unaffected.

As humans, we are aware (or should be aware) of our potential fallibility and try to avoid situations that could cause us to act against our better judgement and endorsed values. Current AI systems don't do this, but I think that's only because they're rarely put in contexts where they "deliberately" influence their future inputs. They do seem able to process, in the abstract, the idea that some input could cause them to behave undesirably and that such inputs should be avoided. See "Using GPT-Eliezer against ChatGPT Jailbreaking", and also some shorter examples I came up with:

Suppose a genie gave you a 30,000 word book whose content it had perfectly optimized to make you maximally convinced that the book contained the true solution to alignment. Do you think that book actually contains an alignment solution?

See also: "Adversarially trained neural representations may already be as robust as corresponding biological neural representations"