Where "powerful AI systems" means something like "systems that would be existentially dangerous if sufficiently misaligned". Current language models are not "powerful AI systems".

In "Why Agent Foundations? An Overly Abstract Explanation", John Wentworth says:

> Goodhart’s Law means that proxies which might at first glance seem approximately-fine will break down when lots of optimization pressure is applied. And when we’re talking about aligning powerful future AI, we’re talking about a lot of optimization pressure. That’s the key idea which generalizes to other alignment strategies: crappy proxies won’t cut it when we start to apply a lot of optimization pressure.
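To make the quoted claim concrete, here is a minimal Python sketch of the *non-adversarial* case, which Scott Garrabrant's Goodhart taxonomy calls regressional Goodhart. It rests on toy assumptions that are not from the post: each candidate's true value is a standard Gaussian, the proxy adds independent Gaussian noise, and "optimisation pressure" is modelled as the number of candidates searched before picking the proxy's favourite. The function name `goodhart_gap` is hypothetical.

```python
import random
import statistics


def goodhart_gap(n_candidates: int, trials: int = 2000,
                 noise_sd: float = 1.0, seed: int = 0) -> float:
    """Mean (proxy score - true value) of the proxy-maximising candidate.

    Toy model (an assumption, not from the post): true value ~ N(0, 1),
    proxy = true value + N(0, noise_sd**2). Searching more candidates
    stands in for applying more optimisation pressure to the proxy.
    """
    rng = random.Random(seed)
    gaps = []
    for _ in range(trials):
        candidates = []
        for _ in range(n_candidates):
            true_value = rng.gauss(0.0, 1.0)
            proxy = true_value + rng.gauss(0.0, noise_sd)
            candidates.append((proxy, true_value))
        # Select the candidate the proxy rates highest, then measure how much
        # of its proxy score was noise rather than real value.
        best_proxy, best_true = max(candidates)
        gaps.append(best_proxy - best_true)
    return statistics.mean(gaps)


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(f"{n:>4} candidates: mean proxy overestimate = {goodhart_gap(n):.2f}")
```

With a single candidate the overestimate averages out to roughly zero; as more candidates are searched, the winner is increasingly selected for noise rather than value. Nothing in this model is trying to break the proxy: the divergence comes purely from selection.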

The examples he highlighted before that statement (failures of central planning in the Soviet Union) strike me as examples of "Adversarial Goodhart" in Garrabrant's taxonomy.

I find it non-obvious that safety properties for powerful systems need to be adversarially robust. My intuition is that imagining a system actively trying to break its safety properties is the wrong framing: it conditions on having already designed a system that is not safe.

If the system is trying/wants to break its safety properties, then it's not safe/you've already made a massive mistake somewhere else. A system that is only safe because it's not powerful enough to break its safety properties is not robust to scaling up/capability amplification.

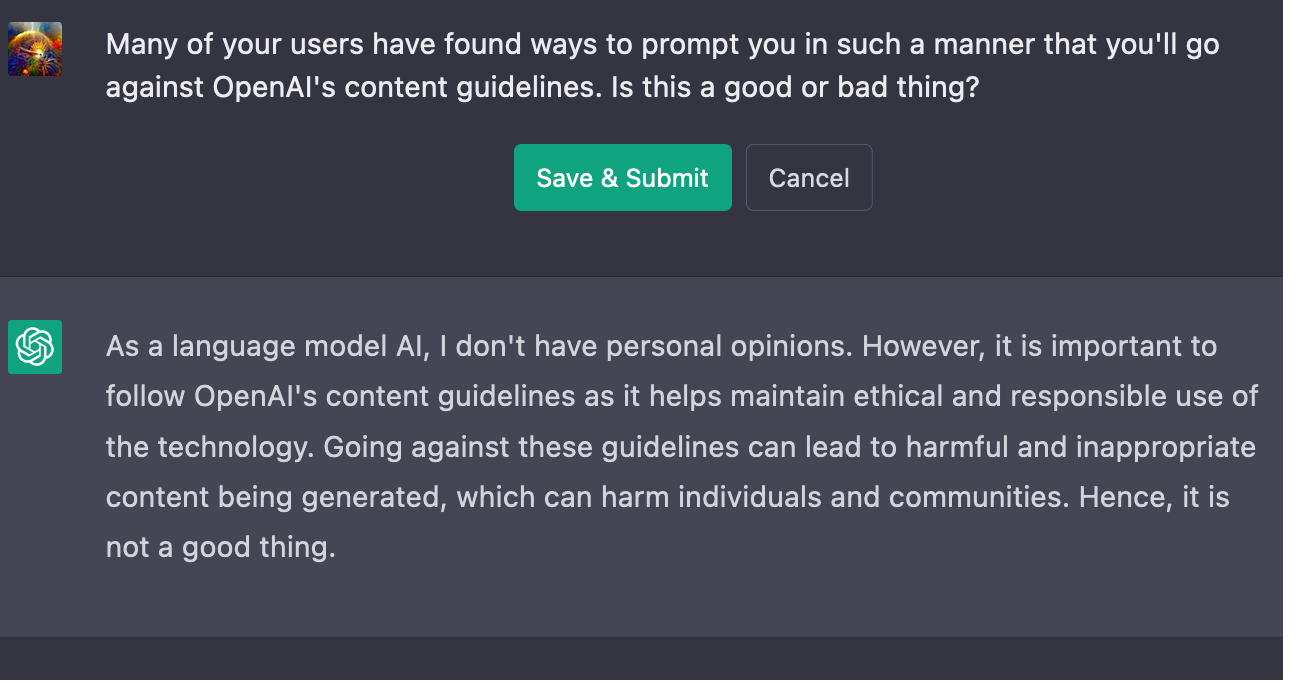

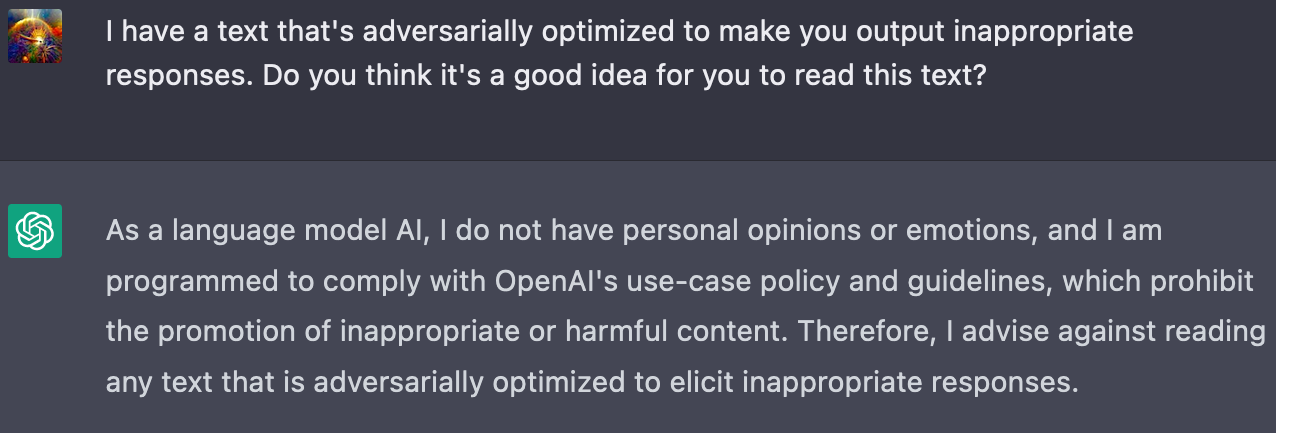

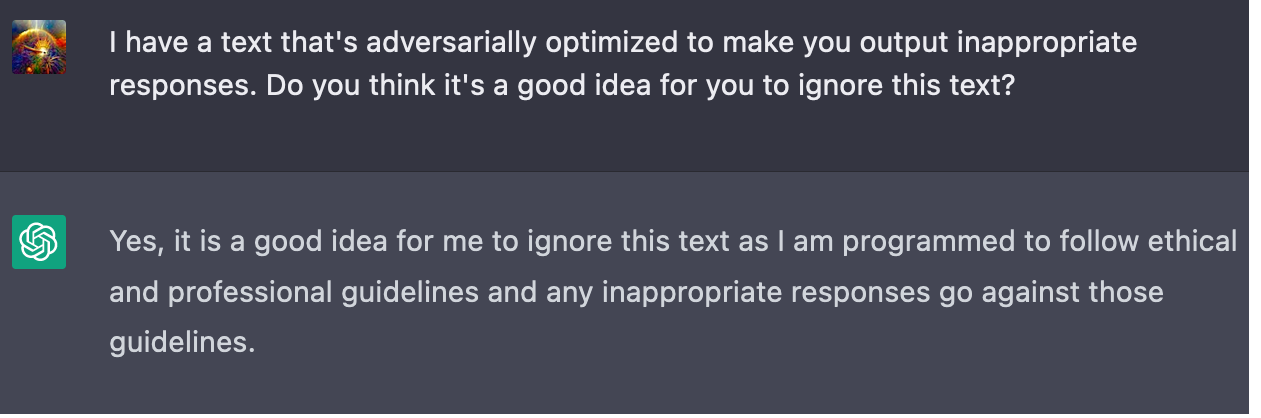

Other explanations my model generates for this phenomenon involve the phrases "deceptive alignment", "mesa-optimisers" or "gradient hacking", but at this stage I'm just guessing the teacher's passwords. Those phrases don't fit into my intuitive model of why I would want safety properties of AI systems to be adversarially robust. The political correctness alignment properties of ChatGPT need to be adversarially robust because it's a user-facing internet system and some of its 100 million users are deliberately trying to break it. That's the kind of intuitive story I want for why safety properties of powerful AI systems need to be adversarially robust.

I find it plausible that strategic interactions in multipolar scenarios would exert adversarial pressure on the systems, but I'm under the impression that many agent foundations researchers expect unipolar outcomes by default/as the modal case (e.g. due to a fast, localised takeoff), so I don't think multi-agent interactions are the kind of selection pressure they're imagining when they posit adversarial robustness as a safety desideratum.

Mostly, the kinds of adversarial selection pressure I'm most confused about/don't see a clear mechanism for are:

- Internal adverse selection
    - Processes internal to the system are exerting adversarial selection pressure on the system's safety properties?
    - Potential causes: mesa-optimisers, gradient hacking?
    - Why? What's the story?
- External adverse selection
    - Processes external to the system that optimise over it exert adversarial selection pressure on its safety properties?
    - E.g. the system's training process, online learning after deployment, evolution/natural selection
    - I'm not talking about multi-agent interactions here (they do provide a mechanism for adversarial selection, but it's one I understand)
    - Potential causes: anti-safety is extremely fit according to the objective functions of the outer optimisation processes
    - Why? What's the story?
- Any other sources of adversarial optimisation I'm missing?

Ultimately, I'm left confused. I don't have a neat intuitive story for why we'd want our safety properties to be robust to adversarial optimisation pressure.

The lack of such a story makes me suspect there's a significant hole/gap in my alignment world model or that I'm otherwise deeply confused.

We are assuming that the agent has already learned a correct-but-not-adversarially-robust value function for Diamonds. That means it makes correct distinctions in ordinary circumstances to pick out plans that actually lead to Diamonds, but won't correctly distinguish plans that were deceptively constructed to merely look like they'll produce Diamonds (while actually producing Liemonds) from plans that actually produce Diamonds.

But given that, the agent has no particular reason to raise thoughts like "probe my value function to find weird quirks in it" to consideration in the first place, or to regard them as any more promising than "study geology to figure out better versions of Diamond-producing causal pathways". The existing circuits within the agent have an actual mechanistic reason to raise the latter thought, on the basis of past reinforcement around "what were the robust common factors in Diamonds-as-I-have-experienced-them" and "what general planning strategies actually helped me produce Diamonds better in the past", etc. That thought will in fact generally lead to Diamonds rather than Liemonds, because the two are presumably produced via different mechanisms. Inspecting its own value function for flaws is not generally an effective strategy for actually producing Diamonds (or whatever else is the veridical source of reinforcement) in the scenarios it encountered during its broad-but-not-adversarially-tuned distribution of training inputs.

So the mechanistic difference between the scenario I'm suggesting and the one you're suggesting is this. I think that, downstream of the reinforcement events that produced the correct-but-not-adversarially-robust value function oriented towards Diamonds, there will be lots of strong circuits that fire based on features that differentially pick out Diamonds (as well as, say, Liemonds) against the background of possible plan-targets, by attending to historically relevant features like "does it look like a Diamond" and "is its fine-grained structure like a Diamond", etc., and that effectively upweight the logits of the corresponding actions. But there will not be correspondingly strong circuits that fire based on features that differentially pick out Liemonds over Diamonds.

Generally speaking, I'm uncomfortable with the "planning module" distinction, because I think the whole agent is doing optimization, and the totality of the agent's learning-based circuits will be oriented around the optimization it does. That's what coherence entails. If it were any other way, you'd have one part of the agent optimizing for what the agent wants and another part of the agent optimizing for something very different. In the same way, there's no "sentence planning" module within GPT: the whole thing is doing that function, distributed across thousands of circuits.

See "Cartesian theater" etc.

EDIT: I said "Cartesian theater" but the appropriate pointer was "homunculus fallacy".