Well. Damn.

As a vocal critic of the whole concept of superposition, this post has changed my mind a lot. An actual mathematical definition that doesn't depend on any fuzzy notions of what is 'human interpretable', and a start on actual algorithms for performing general, useful computation on overcomplete bases of variables.

Everything I've read on superposition before this was pretty much only outlining how you could store and access lots of variables from a linear space with sparse encoding, which isn't exactly a revelation. Every direction is a float, so of course the space can store about float precision to the power of the number of dimensions different states, which you can describe as superposed sparse features if you like. But I didn't need to use that lens to talk about the compression. I could just talk about good old non-overcomplete linear algebra bases instead. The basis vectors in that linear algebra description being the compositional summary variables the sparse inputs got compressed into. If basically all we can do with the 'superposed variables' is make lookup tables of them, there didn't seem to me to be much need for the concept at all to reverse engineer neural networks. Just stick with the summary variables, summarising is what intelligence is all about.

If we can do actual, general computation with the sparse variables? Computations with internal structure that we can't trivially describe just as well using floats forming the non-overcomplete linear basis of a vector space? Well, that would change things.

As you note, there's certainly work left to do here on the error propagation and checking for such algorithms in real networks. But even with this being an early proof of concept, I do now tentatively expect that better-performing implementations of this probably exist. And if such algorithms are possible, they sure do sound potentially extremely useful for an LLM's job.

On my previous superposition-skeptical models, frameworks like the one described in this post are predicted to be basically impossible. Certainly way more cumbersome than this looks. So unless these ideas fall flat when more research is done on the error tolerance, I guess I was wrong. Oops.

Haven't read everything yet, but that seems like excellent work. In particular, I think this general research avenue is extremely well-motivated.

Figuring out how to efficiently implement computations on the substrate of NNs had always seemed like a neglected interpretability approach to me. Intuitively, there are likely some methods of encoding programs into matrix multiplication which are strictly ground-truth better than any other encoding methods. Hence, inasmuch as what the SGD is doing is writing efficient programs on the NN substrate, it is likely doing so by making use of those better methods. And so nailing down the "principles of good programming" on the NN substrate should yield major insights regarding how the naturally-grown NN circuits are shaped as well.

This post seems to be a solid step in that direction!

(I haven't had the chance to read part 3 in detail, and I also haven't checked the proofs except insofar as they seem reasonable on first viewing. Will probably have a lot more thoughts after I've had more time to digest.)

This is very cool work! I like the choice of U-AND task, which seems way more amenable to theoretical study (and is also a much more interesting task) than the absolute value task studied in Anthropic's Toy Model of Superposition (hereafter TMS). It's also nice to study this toy task with asymptotic theoretical analysis as opposed to the standard empirical analysis, thereby allowing you to use a different set of tools than usual.

The most interesting part of the results was the discussion on the universality of universal calculation -- it reminds me of the interpretations of the lottery ticket hypothesis that claim some parts of the network happen to be randomly initialized to have useful features at the start.

Some examples that are likely to be boolean-interpretable are bigram-finding circuits and induction heads. However, it's possible that most computations are continuous rather than boolean[31].

My guess is that most computations are indeed closer to continuous than to boolean. While it's possible to construct boolean interpretations of bigram circuits or induction heads, my impression (having not looked at either in detail on real models) is that neither of these occurs cleanly inside LMs. For example, induction heads demonstrate a wide variety of other behavior, and even on induction-like tasks, often seem to be implementing induction heuristics that involve some degree of semantic content.

Consequently, I'd be especially interested in exploring either the universality of universal calculation, or the extension to arithmetic circuits (or other continuous/more continuous models of computation in superposition).

Some nitpicks:

The post would probably be a lot more readable if it were chunked into 4. The 88 minute read time is pretty scary, and I'd like to comment only on the parts I've read.

Section 2:

Two reasons why this loss function might be principled are

- If there is reason to think of the model as a Gaussian probability model

- If we would like our loss function to be basis independent

A big reason to use MSE as opposed to eps-accuracy in the Anthropic model is for optimization purposes (you can't gradient descent cleanly through eps-accuracy).

Section 5:

4 How relevant are our results to real models?

This should be labeled as section 5.

Appendix to the Appendix:

Here, $f_i$ always denotes the vector.

[..]

with \[\sigma_1\leq n\) with

(TeX compilation failure)

Also, here's a summary I posted in my lab notes:

A few researchers (at Apollo, Cadenza, and IHES) posted this document today (22k words, LW says ~88 minutes).

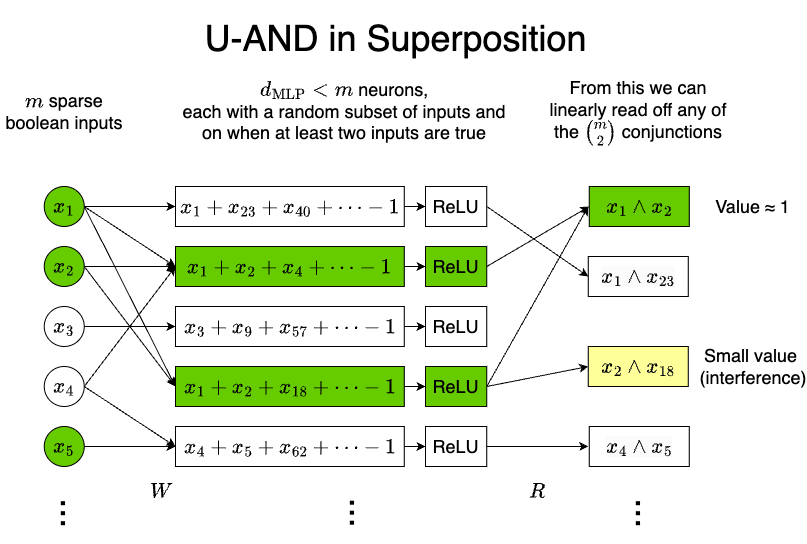

They propose two toy models of computation in superposition.

First, they posit an MLP setting where a single-layer MLP is used to compute the pairwise ANDs of m boolean input variables up to epsilon-accuracy, where the input is sparse (in the sense that l < m are active at once). Notably, in this setup, instead of using O(m^2) neurons to represent each pair of inputs, you can instead use O(polylog(m)) neurons with random inputs, and “read off” the ANDs by adding together all neurons that contain the pair of inputs. They also show that you can extend this to cases where the inputs themselves are in superposition, though you need O(sqrt(m)) neurons. (Also, insofar as real neural networks implement tricks like this, this probably incidentally answers Sam Marks’s XOR puzzle.)

They then consider a setting involving the QK matrix of an attention head, where the task is to attend to a pair of activations in a transformer, where the first activation contains feature i and the second feature j. While the naive construction can only check for d_head bigrams, they provide a construction involving superposition that allows the QK matrix to approximately check for Theta(d_head * d_residual) bigrams (that is, up to ~parameter count; this involves placing the input features in superposition).

If I’m understanding it correctly, these seem like pretty cool constructions, and certainly a massive step up from what the toy models of superposition looked like in the past. In particular, these constructions do not depend on human notions of what a natural “feature” is. In fact, here the dimensions in the MLP are just sums of random subsets of the input; no additional structure needed. Basically, what it shows is that for circuit size reasons, we’re going to get superposition just to get more computation out of the network.

Thanks for the kind feedback!

I'd be especially interested in exploring either the universality of universal calculation

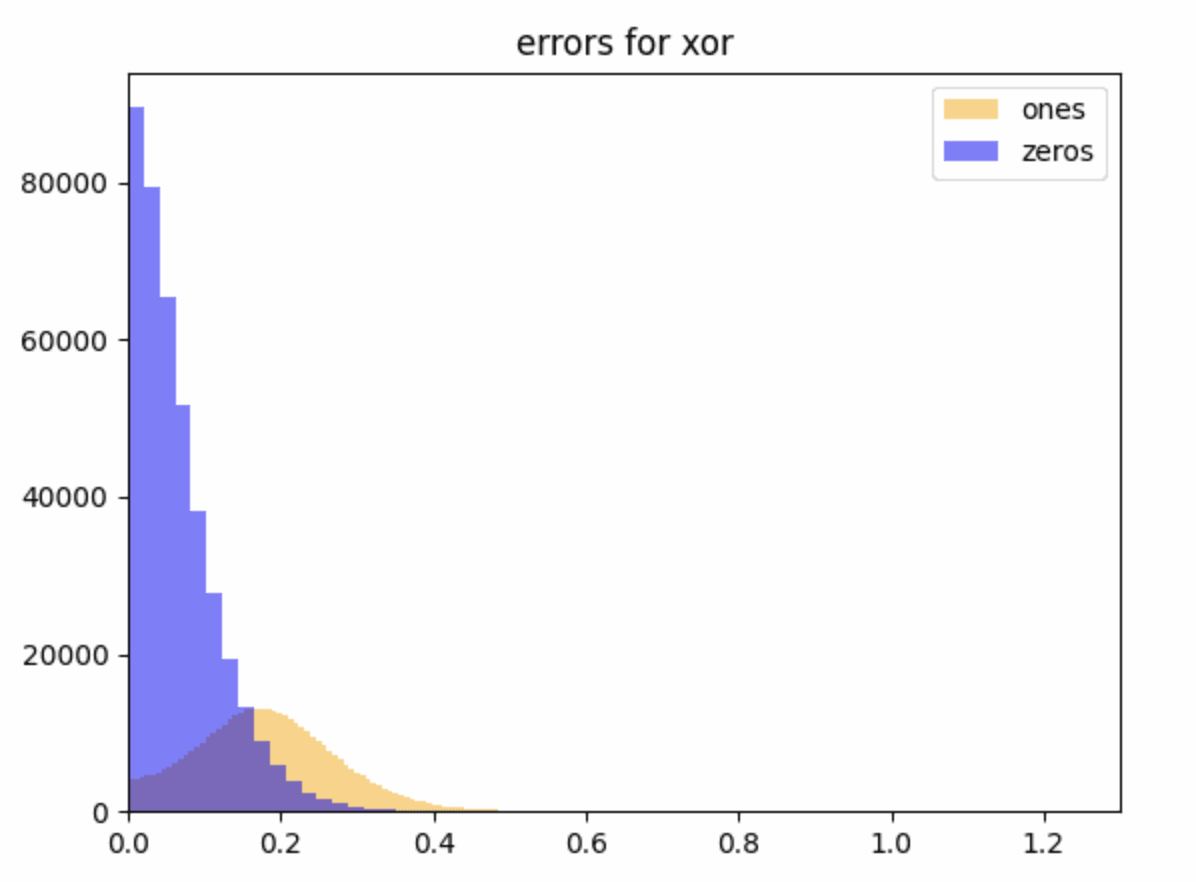

Do you mean the thing we call genericity in the further work section? If so, we have some preliminary theoretical and experimental evidence that genericity of U-AND is true. We trained networks on the U-AND task and the analogous U-XOR task, with a narrow 1-layer MLP and looked at the size of the interference terms after training with a suitable loss function. Then, we reinitialised and froze the first layer of weights and biases, allowing the network only to learn the linear readoff directions, and found that the error terms were comparably small in both cases.

This figure is the size of the errors for (which is pretty small) for readoffs which should be zero in blue and one in yellow (we want all these errors to be close to zero).

This suggests that the AND/XOR directions were -linearly readoffable at initialisation, but the evidence at this stage is weak because we don't have a good sense yet of what a reasonable value of is for considering the task to have been learned correctly: to answer this we want to fiddle around with loss functions and training for longer. For context, an affine readoff (linear + bias) directly on the inputs can read off with , which has an error of . This is larger than all but the largest errors here, and you can’t do anything like this for XOR with affine readoff.

After we did this, Kaarel came up with an argument that networks randomly initialised with weights from a standard Gaussian and zero bias solve U-AND with inputs not in superposition (although it probably can be generalised to the superposition case) for suitable readoffs. To sketch the idea:

Let be the vector of weights from the th input to the neurons. Then consider the linear readoff vector with th component given by:

where is the indicator function. There are 4 free parameters here, which are set by 4 constraints given by requiring that the expectation of this vector dotted with the activation vector has the correct value in the 4 cases . In the limit of large the value of the dot product will be very close to its expectation and we are done. There are a bunch of details to work out here and, as with the experiments, we aren't 100% sure the details all work out, but we wanted to share these new results since you asked.

A big reason to use MSE as opposed to eps-accuracy in the Anthropic model is for optimization purposes (you can't gradient descent cleanly through eps-accuracy).

We've suggested that perhaps it would be more principled to use something like loss for larger than 2, as this is closer to -accuracy. It's worth mentioning that we are currently finding that the best loss function for the task seems to be something like with extra weighting on the target values that should be . We do this to avoid the problem that if the inputs are sparse, then the ANDs are sparse too, and the model can get good loss on (for low ) by sending all inputs to the zero vector. Once we weight the ones appropriately, we find that lower values of may be better for training dynamics.
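To make the failure mode concrete, here is a minimal sketch of one hypothetical form such a reweighted loss could take. The exponent `p=4`, the weight `one_weight`, and the function name are all assumptions for illustration; the exact loss we use is not specified here. It only shows why an unweighted loss is nearly blind to the "send everything to zero" solution when the targets are sparse.

```python
import numpy as np

def weighted_lp_loss(pred, target, p=4, one_weight=1.0):
    """Mean |pred - target|^p, with extra weight on entries whose target is 1.

    p and one_weight are illustrative assumptions, not values from the post.
    """
    weights = np.where(target == 1, one_weight, 1.0)
    return np.mean(weights * np.abs(pred - target) ** p)

# Sparse AND targets: only 1 output in 1000 should be one.
target = np.zeros(1000)
target[0] = 1.0
all_zero = np.zeros_like(target)   # degenerate solution: map every input to zero

unweighted = weighted_lp_loss(all_zero, target)                    # 1/1000
reweighted = weighted_lp_loss(all_zero, target, one_weight=999.0)  # 999/1000
```

Without reweighting, the degenerate solution already scores 0.001; weighting the ones by roughly the inverse of their frequency makes it score close to 1, so it is no longer an attractive optimum.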

or the extension to arithmetic circuits (or other continuous/more continuous models of computation in superposition)

We agree and are keen to look into that!

(TeX compilation failure)

Thanks - fixed.

How are you setting when ? I might be totally misunderstanding something but at - feels like you need to push up towards like 2k to get something reasonable? (and the argument in 1.4 for using clearly doesn't hold here because it's not greater than for this range of values).

So, all our algorithms in the post are hand constructed with their asymptotic efficiency in mind, but without any guarantees that they will perform well at finite . They haven't even really been optimised hard for asymptotic efficiency - we think the important point is in demonstrating that there are algorithms which work in the large limit at all, rather than in finding the best algorithms at any particular or in the limit. Also, all the quantities we talk about are at best up to constant factors which would be important to track for finite . We certainly don't expect that real neural networks implement our constructions with weights that are exactly 0 or 1. Rather, neural networks probably do a messier thing which is (potentially substantially) more efficient, and we are not making predictions about the quantitative sizes of errors at a fixed .

In the experiment in my comment, we randomly initialised a weight matrix with each entry drawn from , and set the bias to zero, and then tried to learn the readoff matrix , in order to test whether U-AND is generic. This is a different setup to the U-AND construction in the post, and I offered a suggestion of readoff vectors for this setup in the comment, although that construction is also asymptotic: for finite and a particular random seed, there are almost definitely choices of readoff vectors that achieve lower error.

FWIW, the average error in this random construction (for fixed compositeness; a different construction would be required for inputs with varying compositeness) is (we think) with a constant that can be found by solving some ugly gaussian integrals but I would guess is less than 10, and the max error is whp, with a constant that involves some even uglier gaussian integrals.

Interesting post, thanks for writing it!

I think that the QK section somewhat under-emphasises the importance of the softmax. My intuition is that models rarely care about as precise a task as counting the number of pairs of matching query-key features at each pair of token positions, and that instead softmax is more of an "argmax-like" function that finds a handful of important token positions (though I have not empirically tested this, and would love to be proven wrong!). This enables much cheaper and more efficient solutions, since you just need the correct answer to be the argmax-ish.

For example, ignoring floating point precision, you can implement a duplicate token head with $d_{\text{head}} = 2$ and arbitrarily high $d_{\text{vocab}}$. If there are $n$ vocab elements, map the $i$th query and key to the point $i/n$ of the way round the unit circle. The dot product is maximised when they are equal.

If you further want the head to look at a resting position unless the duplicate token is there, you can increase , and have a dedicated BOS dimension with a score of , so you only get a higher score for a perfect match. And then make the softmax temperature super low so it's an argmax.
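A minimal sketch of this construction, under stated assumptions: tokens sit on the unit circle (so the token part of the head is 2-dimensional), the BOS position gets a fixed score of `1 - eps` with `eps` chosen here as half the gap between a perfect match and the nearest mismatch, and the temperature is set very low. The BOS dimension is modelled simply by its resulting score rather than as an explicit third coordinate. All names and numbers are illustrative.

```python
import numpy as np

n_vocab = 100
angles = 2 * np.pi * np.arange(n_vocab) / n_vocab
# Token i is mapped to the point i/n of the way round the unit circle.
circle = np.stack([np.cos(angles), np.sin(angles)], axis=1)

# Assumed BOS score: below a perfect match (1), above the best mismatch cos(2*pi/n).
eps = 0.5 * (1 - np.cos(2 * np.pi / n_vocab))

def attention(query_token, key_tokens, temperature=1e-6):
    """Attention weights over [BOS] + key_tokens for a single query token."""
    token_scores = circle[key_tokens] @ circle[query_token]  # cosine of the angle difference
    scores = np.concatenate([[1 - eps], token_scores])       # position 0 is BOS
    z = (scores - scores.max()) / temperature                # low temperature: close to argmax
    w = np.exp(z)
    return w / w.sum()

keys = np.array([5, 17, 42])
dup = attention(17, keys)    # token 17 appears among the keys, at position 2
rest = attention(99, keys)   # token 99 does not appear, so attention rests on BOS
```

The gap between a match and the nearest mismatch shrinks like $1/n^2$, which is where the "ignoring floating point precision" caveat bites for large vocabularies.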

Thanks for the comment!

In more detail:

In our discussion of softmax (buried in part 1 of section 4), we argue that our story makes the most sense precisely when the temperature is very low, in which case we only attend to the key(s) that satisfy the most skip feature-bigrams. Also, when features are very sparse, the number of skip feature bigrams present in one query-key pair is almost always 0 or 1, and we aren't trying to super precisely track whether it's, say, 34 or 35.

I agree that if softmax is just being an argmax, then one implication is that we don't need error terms to be , instead, they can just be somewhat less than 1. However, at least in our general framework, this doesn't help us beyond changing the log factor in the tilde inside ). There still will be some log factor because we require the average error to be to prevent the worst-case error being greater than 1. Also, we may want to be able to accept 'ties' in which a small number of token positions are attended to together. To achieve this (assuming that at most one SFB is present for each QK pair for simplicity) we'd want the variation in the values which should be 1 to be much smaller than the gap between the smallest value which should be 1 and the largest value which should be 0.

A few comments about your toy example:

To tell a general story, I'd like to replace the word 'token' with 'feature' in your construction. In particular, I might want to express what the attention head does using the same features as the MLP. The choice of using tokens in your example is special, because the set of features {this is token 1, this is token 2, ...} are mutually exclusive, but once I allow for the possibility that multiple features can be present (for example if I want to talk in terms of features involved in MLP computation), your construction breaks. To avoid this problem, I want the maximum dot product between f-vectors to be at most 1/(the maximum number of features that can be present at once). If I allow several features to be present at once, this starts to look like an -orthogonal basis again. I guess you could imagine a case where the residual stream is divided into subspaces, and inside each subspace is a set of mutually exclusive features (à la tegum products of TMS). In your picture, there would need to be a 2d subspace allocated to the 'which token' features anyway. This tegum geometry would have to be specifically learned — these orthogonal subspaces do not happen generically, and we don't see a good reason to think that they are likely to be learned by default for reasons not to do with the attention head that uses them, even in the case that there are these sets of mutually exclusive features.

It takes us more than 2 dimensions, but in our framework, it is possible to do a similar construction to yours in dimensions assuming random token vectors (ie without the need for any specific learned structure in the embeddings for this task): simply replace the rescaled projection matrix with where is and is a projection matrix to a -dimensional subspace. Now, with high probability, each vector has a larger dot product with its own projection than another vector's projection (we need to be this large to ensure that projected vectors all have a similar length). Then use the same construction as in our post, and turn the softmax temperature down to zero.

Someone suggested this comment was inscrutable so here's a summary:

I don't think that how argmax-y softmax is being is a crux between us - we think our picture makes the most sense when softmax acts like argmax or top-k so we hope you're right that softmax is argmax-ish. Instead, I think the property that enables your efficient solution is that the set of features 'this token is token (i)' is mutually exclusive, ie. only one of these features can activate on an input at once. That means that in your example you don't have to worry about how to recover feature values when multiple features are present at once. For more general tasks implemented by an attention head, we do need to worry about what happens when multiple features are present at the same time, and then we need the f-vectors to form a nearly orthogonal basis and your construction becomes a special case of ours I think.

Having digested this a bit more, I've got a question regarding the noise terms, particularly for section 1.3 that deals with constructing general programs over sparse superposed variables.

Unfortunately, since the are random vectors, their inner product will have a typical size of . So, on an input which has no features connected to neuron , the preactivation for that neuron will not be zero: it will be a sum of these interference terms, one for each feature that is connected to the neuron. Since the interference terms are uncorrelated and mean zero, they start to cause neurons to fire incorrectly when neurons are connected to each neuron. Since each feature is connected to each neuron with probability this means neurons start to misfire when [13].
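As a quick numerical check of the scale involved: for random unit vectors in $d$ dimensions, the inner product of two distinct vectors has mean zero and standard deviation $1/\sqrt{d}$. The sketch below measures this directly; the sizes `d` and `n` are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n = 256, 200   # illustrative: n random feature vectors in d dimensions

F = rng.standard_normal((n, d))
F /= np.linalg.norm(F, axis=1, keepdims=True)   # normalise to unit length

G = F @ F.T                                     # all pairwise inner products
off_diag = G[~np.eye(n, dtype=bool)]            # keep only distinct pairs
typical = off_diag.std()                        # empirical interference scale
expected = 1 / np.sqrt(d)
```

Note that this measures the spread over pairs for one fixed draw of vectors. Each individual pair keeps its own fixed sign and size once the vectors are chosen.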

It seems to me that the assumption of uncorrelated errors here is rather load-bearing. If you don't get uncorrelated errors over the inputs you actually care about, you are forced to scale back to connecting only features to every neuron, correct? And the same holds for the construction right after this one, and probably most of the other constructions shown here?

And if you only get connected features per neuron, you scale back to only being able to compute arbitrary AND gates per layer, correct?

Now, the reason these errors are 'uncorrelated' is that the features were embedded as random vectors in our layer space. In other words, the distributions over which they are uncorrelated is the distribution of feature embeddings and sets of neurons chosen to connect to particular features. So for any given network, we draw from this distribution only once, when the weights of the network are set, and then we are locked into it.

So this noise will affect particular sets of inputs strongly, systematically, in the same direction every time. If I divide the set of features into two sets, where features in each half are embedded along directions that have a positive inner product with each other[1], I can't connect more than from the same half to the same neuron without making it misfire, right? So if I want to implement a layer that performs ANDs on exactly those features that happen to be embedded within the same set, I can't really do that. Now, for any given embedding, that's maybe only some particular sets of features which might not have much significance to each other. But then the embedding directions of features in later layers depend on what was computed and how in the earlier layers, and the limitations on what I can wire together apply every time.

I am a bit worried that this and similar assumptions about stochasticity here might turn out to prevent you from wiring together the features you need to construct arbitrary programs in superposition, with 'noise' from multiple layers turning out to systematically interact in exactly such a way as to prevent you from computing too much general stuff. Not because I see a gears-level way this could happen right now, but because I think rounding off things to 'noise' that are actually systematic is one of these ways an exciting new theory can often go wrong and see a structure that isn't there, because you are not tracking the parts of the system that you have labeled noise and seeing how the systematics of their interactions constrain the rest of the system.

Like making what seems like a blueprint for a perpetual motion machine because you're neglecting to model some small interactions with the environment that seem like they ought not to affect the energy balance on average, missing how the energy losses/gains in these interactions are correlated with each other such that a gain at one step immediately implies a loss in another.

Aside from looking at error propagation more, maybe a way to resolve this might be to switch over to thinking about one particular set of weights instead of reasoning about the distribution the weights are drawn from?

- ^

E.g. pick some hyperplanes and declare everything on one side of all of them to be the first set.

Thinking the example through a bit further: In a ReLU layer, features are all confined to the positive quadrant. So superposed features computed in a ReLU layer all have positive inner product. So if I send the output of one ReLU layer implementing AND gates in superposition directly to another ReLU layer implementing another ANDs on a subset of the outputs of that previous layer[1], the assumption that input directions are equally likely to have positive and negative inner products is not satisfied.

Maybe you can fix this with bias setoffs somehow? Not sure at the moment. But as currently written, it doesn't seem like I can use the outputs of one layer performing a subset of ANDs as the inputs of another layer performing another subset of ANDs.

EDIT: Talked it through with Jake. Bias setoff can help, but it currently looks to us like you still end up with AND gates that share a variable systematically having positive sign in their inner product. Which might make it difficult to implement a valid general recipe for multi-step computation if you try to work out the details.
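A rough numerical illustration of the concern, assuming the random 0/1 connectivity of the U-AND construction with made-up sizes (`m`, `d`, `p` are hypothetical, and `gate_direction` is a name introduced here). The direction for each AND gate is taken to be the normalised indicator of the neurons that read both of its inputs. Such directions have only non-negative entries, so no pair of them can have a negative inner product, and gates that share an input overlap systematically more.

```python
import numpy as np

rng = np.random.default_rng(0)
m, d, p = 20, 20000, 0.2   # illustrative sizes, not tuned
W = (rng.random((d, m)) < p).astype(float)   # neuron n reads input i with probability p

def gate_direction(i, j):
    """Unit-normalised indicator of the neurons that read both input i and input j."""
    v = W[:, i] * W[:, j]
    return v / np.linalg.norm(v)

shared = gate_direction(0, 1) @ gate_direction(0, 2)     # gates share input 0
disjoint = gate_direction(0, 1) @ gate_direction(2, 3)   # gates share no input
```

In expectation the shared-input overlap is about `p` and the disjoint overlap about `p**2`, so neither is mean zero, unlike the inner products of random signed vectors.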

- ^

A very central use case for a superposed boolean general computer. Otherwise you don't actually get to implement any serial computation.

Really like this post. I had actually come to a very basic version of some of this, but not nearly as in-depth. Essentially it was realizing that ReLU-activated MLPs could be modeled as c-semirings and thus as an information algebra. Some of the very basic concepts of superposition seemed to fall out of that. Would love to relate the work you guys have done to that, as your framework seems to answer some very deep questions.

In general, computing a boolean expression with terms without the signal being drowned out by the noise will require if the noise is correlated, and if the noise is uncorrelated.

Shouldn't the second one be ?

the past token can use information that is not accessible to the token generating the key (as it is in its “future” – this is captured e.g. by the attention mask)

Is this meant to say "last token" instead of "past token"?

This looks really cool! Haven't digested it all yet but I'm especially interested in the QK superposition as I'm working on something similar. I'm wondering what your thoughts are on the number of bigrams being represented by a QK circuit not being bounded by interference but by its interaction with the OV circuit. IIUC it looks like a head can store a surprising number of d_resid bigrams, but since the OV circuit is only a function of the key, then having the same key feature be in a clique with a large number of different query features means the OV-circuit will be unable to differentially copy information based on which bigram is present. I don't think this has been explored outside of toy models from Anthropic though

It's really interesting that (so far it seems) the quadratic activation can achieve the universal AND almost exponentially more efficiently than the ReLU function.

It seems plausible to me that the ReLU activation can achieve the same effect to approximate a quadratic function in a piecewise way. From the construction it seems that each component of the space is the sum of at most 2 random variables, and it seems like when you add a sparse combination of a large number of nearly orthogonal vectors, each component of the output would be approximately normally distributed. So each component could be pretty easily bounded at high probability, and a quadratic function can be approximated within the bounds as a piecewise linear function. I'm not sure how the math on the error bounds works for this, but it sounds plausible to me that the error using the piecewise approximation is low enough to accurately calculate the boolean AND function.
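Here is a minimal sketch of the piecewise idea, under assumptions of my own choosing: the input is bounded in $[-3, 3]$, the knots are uniformly spaced, and the approximation is the standard hinge-based linear interpolant of $x^2$ (not necessarily the form a trained network would find). For booleans, $x^2 = x$, so $x_1 \wedge x_2 = \tfrac{1}{2}\left((x_1 + x_2)^2 - (x_1 + x_2)\right)$, which lets the squared sum be swapped for the ReLU approximation.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def piecewise_square(x, bound=3.0, num_pieces=12):
    """Linear interpolant of x**2 on [-bound, bound], written as a sum of ReLUs."""
    x = np.asarray(x, dtype=float)
    knots = np.linspace(-bound, bound, num_pieces + 1)
    h = knots[1] - knots[0]
    # First linear piece, matching x**2 at the two leftmost knots.
    out = knots[0] ** 2 + (2 * knots[0] + h) * (x - knots[0])
    # Each interior knot raises the slope by 2h, one ReLU per knot.
    for t in knots[1:-1]:
        out = out + 2 * h * relu(x - t)
    return out

def approx_and(x1, x2):
    """AND of two booleans via the piecewise-linear square."""
    s = x1 + x2
    return 0.5 * (piecewise_square(s) - s)

h = 2 * 3.0 / 12                          # knot spacing for the defaults above
xs = np.linspace(-3.0, 3.0, 601)
max_err = np.max(np.abs(piecewise_square(xs) - xs ** 2))   # bounded by h**2 / 4
```

With knot spacing $h$ the interpolation error is at most $h^2/4$, so the number of ReLUs needed grows like the bound divided by the square root of the tolerated error. Whether that cost is compatible with the efficiency of the quadratic construction is exactly the part I haven't worked out.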

Also I wonder if it's possible to find experimental evidence from trained neural networks that they use ReLU to implement the function in a piecewise way like this? Basically, after training a ReLU network to compute the AND of all pairs of inputs, we check whether the network contains repeated implementations of a circuit that flips the sign of negative inputs and increases the output at a higher slope when the input is of large enough magnitude. Though the duplication of inputs before the ReLU and the recombination would be linear operations that get mixed into the linear embedding and linear readout matrices, making it confusing to find which entries correspond to which... Maybe it can be done by testing individual boolean variables at a time. If a trained network is doing this, then it would be evidence that this is how ReLU networks achieve exponential size circuits.

This work is very exciting to me, and I'm curious to hear the authors' thoughts on whether we could verify specific predictions made by this model in real models.

- For example, the proposed U-AND operator - do we expect this to occur in real LLMs, and could we try to find evidence of this by applying mech interp to carefully-chosen toy models?

I have a more detailed write-up on model organisms of superposition here: https://docs.google.com/document/d/1hwI30HNNB2MkOrtEzo7hppG9X7Cn7Xm9a-1LBqcttWc/edit?usp=sharing

Would love to discuss this more!


Author order randomized. Authors contributed roughly equally — see attribution section for details.

Update as of July 2024: we have collaborated with @LawrenceC to expand section 1 of this post into an arXiv paper, which culminates in a formal proof that computation in superposition can be leveraged to emulate sparse boolean circuits of arbitrary depth in small neural networks.

What kind of document is this?

What you have in front of you is so far a rough writeup rather than a clean text. As we realized that our work is currently highly relevant to recent questions posed by interpretability researchers, we put together a lightly edited version of private notes we've written over the last ~4 months. If you'd be interested in writing up a cleaner version, get in touch, or just do it. We're making these notes public before we're done with the project because of some combination of (1) seeing others think along similar lines and wanting to make it less likely that people (including us) spend time duplicating work, (2) providing a frame which we think provides plenty of concrete immediate problems for people to independently work on[1] (3) seeking feedback to decrease the chance we spend a bunch of time on nonsense.

1 minute summary

Superposition is a mechanism that might allow neural networks to represent the values of many more features than they have neurons, provided that those features are present sparsely in the dataset. However, until now, an understanding of how computation can be done in a compressed way directly on these stored features has been limited to a few very specific tasks (for example here). The goal of this post is to lay the groundwork for a picture of how computation in superposition can be done in general. We hope this will enable future research to build interpretability techniques for reverse engineering circuits that are manifestly in superposition.

Our main contributions are:

10 minute summary

Thanks to Nicholas Goldowsky-Dill for producing an early version of this summary/diagrams and generally for being instrumental in distilling this post.

Central to our analysis of MLPs is the Universal-AND (U-AND) problem:

This problem is central to understanding computation in superposition because:

If $m = d_0$ (the dimension of the input space), then we can store the input features using an orthonormal basis such as the neuron basis. A naive solution in this case would be to have one neuron per pair which is active if both inputs are true and 0 otherwise. This requires $\binom{m}{2} = \Theta(d_0^2)$ neurons, and involves no superposition:

On this input $x_1$, $x_2$ and $x_5$ are true, and all other inputs are false.

We can do much better than this, computing all the pairwise ANDs up to a small error with many fewer neurons. To achieve this, we have each neuron care about a random subset of inputs, and we choose the bias such that each neuron is activated when at least two of them are on. This requires $d = \Theta(\mathrm{polylog}(d_0))$ neurons:
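A minimal sketch of this construction in NumPy, under stated assumptions: the connection probability `p`, the sizes `m` and `d`, and the function names are illustrative choices rather than tuned values from the analysis, and the readoff for a pair simply averages the neurons connected to both inputs.

```python
import numpy as np

def build_u_and(m, d, p, rng):
    """Random 0/1 connectivity: neuron n reads input i with probability p."""
    return (rng.random((d, m)) < p).astype(float)

def u_and_readoff(W, x):
    """Estimate AND(x_i, x_j) for all pairs i != j from a single ReLU layer."""
    h = np.maximum(W @ x - 1.0, 0.0)       # bias -1: fires only if >= 2 of its inputs are on
    counts = W.T @ W                        # counts[i, j] = number of neurons reading both i and j
    sums = (W.T * h) @ W                    # summed activation of those neurons
    return sums / np.maximum(counts, 1.0)   # average, guarding against empty pairs

rng = np.random.default_rng(0)
m, d, p = 200, 4000, 0.1    # naive solution would need m*(m-1)/2 = 19900 neurons
W = build_u_and(m, d, p, rng)

x = np.zeros(m)
x[[0, 1]] = 1.0             # only x_0 and x_1 are true
est = u_and_readoff(W, x)   # est[0, 1] is the readoff for x_0 AND x_1
```

With only two inputs on, the readoff for the true pair is exactly 1, while pairs that share one active input pick up an error of roughly `p` from neurons that happen to read the other active input as well. Controlling that interference as more inputs turn on is what the choice of connection probability in the full analysis is for.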

Importantly:

Our analysis of the QK part of an attention head centers on the task of skip feature-bigram checking:

This framing is valuable for understanding the role played by the attention mechanism in superposed computation because:

A nice way to construct $W_{QK}$ is as a sum of terms for each skip feature-bigram, each of which is a rank one matrix equal to the outer product of the two feature vectors in the SFB. In the case that all feature vectors are orthogonal (no superposition) you should be thinking of something like this:

where each of the rank one matrices, when multiplied by a residual stream vector on the right and left, performs a dot product on each side:

$$\vec{a}_s^T W_{QK} \vec{a}_t = \sum_i (\vec{a}_s \cdot \vec{f}_{k_i})(\vec{f}_{q_i} \cdot \vec{a}_t)$$

where $(f_{k_1}, f_{q_1}), \dots, (f_{k_{|B|}}, f_{q_{|B|}})$ are the feature bigrams in $B$ with feature directions $(\vec{f}_{k_i}, \vec{f}_{q_i})$, and $\vec{a}_s$ is a residual stream vector at sequence position $s$. Each of these rank one matrices contributes a value of 1 to the value of $\vec{a}_s^T W_{QK} \vec{a}_t$ if and only if the corresponding SFB is present. Since the matrix cannot be higher rank than $d_{\text{head}}$, typically we can only check for up to $\tilde{\Theta}(d_{\text{head}})$ SFBs this way.
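The orthogonal case can be sketched in a few lines. Everything concrete here is an illustrative assumption: the residual dimension, the use of the standard basis as feature directions, the particular list of SFBs, and the helper name `residual`.

```python
import numpy as np

d_resid = 8
features = np.eye(d_resid)        # orthonormal feature directions (no superposition)
sfbs = [(0, 3), (1, 4), (2, 5)]   # hypothetical (key feature, query feature) pairs in B

# W_QK is the sum over SFBs of the outer product of the two feature vectors.
W_QK = sum(np.outer(features[k], features[q]) for k, q in sfbs)

def residual(active):
    """Residual stream vector with the given features switched on."""
    return features[list(active)].sum(axis=0)

a_s = residual({0, 1, 7})     # features present at position s
a_t = residual({3, 4, 6})     # features present at position t
score = a_s @ W_QK @ a_t      # number of SFBs (k, q) with k present at s and q present at t
```

Here the SFBs (0, 3) and (1, 4) are present and (2, 5) is not, so the score is 2, and the rank of `W_QK` equals the number of SFBs, which is why this version is capped by the head dimension.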

In fact we can check for many more SFBs than this, if we tolerate some small error. The construction is straightforward once we think of WQK as this sum of tensor products: we simply add more rank one matrices to the sum, and then approximate the sum as a rank dhead matrix, using the SVD or even a random projection matrix P. This construction can be easily generalised to the case that the residual stream stores features in superposition (provided we take care to manage the size of the interference terms) in which case WQK can be thought of as being constructed like this:

When multiplied by a residual stream vector on the right and left, this expression is $\vec a_s^T W_{QK}\,\vec a_t=\sum_i(\vec a_s\cdot\vec f_{k_i})(P\vec f_{q_i}\cdot\vec a_t)$

Importantly:

Indeed, there are many open directions for improving our understanding of computation in superposition, and we’d be excited for others to do future research (theoretical and empirical) in this area.

Some theoretical directions include:

Empirical directions include:

Structure of the Post

In Section 1, we define the U-AND task precisely, and then walk through our construction and show that it solves the task. Then we generalise the construction in 2 important ways: in Section 1.3, we modify the construction to compute ANDs of input features which are stored in superposition, allowing us to stack multiple U-AND layers together to simulate a boolean circuit. In Section 1.4 we modify the construction to compute ANDs of more than 2 variables at the same time, allowing us to compute all sufficiently small[4] boolean functions of the inputs with a single MLP. Then in Section 1.5 we explore efficiency gains from replacing the ReLU with a quadratic activation function, and explore the consequences.

In Section 2 we explore a series of questions around how to interpret the maths in Section 1, in the style of FAQs. Each part of Section 2 is standalone and can be skipped, but we think that many of the concepts discussed there are valuable and frequently misunderstood.

In Section 3 we turn to the QK circuit, carefully introducing the skip feature-bigram checking task, and we explain our construction. We also discuss two scenarios that allow for more SFBs to be checked for than the simplest construction would allow.

We discuss the relevance of our constructions to real models in Section 4, and conclude in Section 5 with more discussion on Open Directions.

Notation and Conventions

In this post we make extensive use of Big-O notation and its variants, little $o$, $\Theta$, $\Omega$, $\omega$. See wikipedia for definitions. For example, by saying a function $f(n)$ is $\Theta(g(n))$, we mean that there are constants $c_1,c_2>0$ and a natural number $N$ such that for all $n>N$, we have $c_1g(n)\le f(n)\le c_2g(n)$. We also make use of tilde notation, which means we ignore log factors: by saying a quantity is $\tilde\Theta(f(d))$, we mean that this is true up to a factor that is a polynomial of $\log d$, i.e., that it is asymptotically between $f(d)/\mathrm{polylog}(d)$ and $f(d)\,\mathrm{polylog}(d)$.

1 The Universal AND

We introduce a simple and central component in our framework, which we call the Universal AND component or U-AND for short. We start by introducing the most basic version of the problem this component solves. We then provide our solution to the simplest version of this problem. We later discuss a few generalizations: to inputs which store features in superposition, and to higher numbers of inputs to each AND gate. More elaboration on U-AND — in particular, addressing why we think it’s a good question to ask — is provided in Section 2.

1.1 The U-AND task

The basic boolean Universal AND problem: Given an input vector which stores an orthogonal set of boolean features, compute a vector from which can be linearly read off the value of every pairwise AND of input features, up to a small error. You are allowed to use only a single-layer MLP and the challenge is to make this MLP as narrow as possible.

More precisely: Fix a small parameter $\epsilon>0$ and let $d_0$ and $\ell$ be integers with $d_0\ge\ell$[7]. Let $\vec e_1,\dots,\vec e_{d_0}$ be the standard basis in $\mathbb R^{d_0}$, i.e. $\vec e_i$ is the vector whose $i$th component is 1 and whose other components are 0. Inputs are all at most $\ell$-composite vectors, i.e., for each index set $I\subseteq[d_0]$ with $|I|\le\ell$, we have the input $\vec x_I=\sum_{i\in I}\vec e_i\in\mathbb R^{d_0}$. So, our inputs are in bijection with binary strings that contain at most $\ell$ ones[8]. Our task is to compute all $\binom{d_0}{2}$ pairwise ANDs of these input bits, where the notion of ‘computing’ a property is that of making it linearly represented in the output activation vector $\vec a(\vec x)\in\mathbb R^d$. That is, for each pair of inputs $i,j$, there should be a linear function $r_{i,j}:\mathbb R^d\to\mathbb R$, or more concretely, a vector $\vec r_{i,j}\in\mathbb R^d$, such that $\vec r_{i,j}^T\vec a(\vec x)\approx_\epsilon\mathrm{AND}_{i,j}(\vec x)$. Here, the $\approx_\epsilon$ indicates equality up to an additive error $\epsilon$ and $\mathrm{AND}_{i,j}$ is 1 iff both bits $i$ and $j$ of $\vec x$ are 1. We will drop the subscript $\epsilon$ going forward.

We will provide a construction that computes these $\Theta(d_0^2)$ features with a single $d$-neuron ReLU layer, i.e., a $d\times d_0$ matrix $W$ and a vector $\vec b\in\mathbb R^d$ such that $\vec a(\vec x)=\mathrm{ReLU}(W\vec x+\vec b)$, with $d\ll d_0$. Stacking the readoff vectors $\vec r_{i,j}$ we provide as the rows of a readout matrix $R$, you can also see us as providing a parameter setting solving $\overrightarrow{\mathrm{ANDs}}(\vec x)\approx_\epsilon R(\mathrm{ReLU}(W\vec x+\vec b))$, where $\overrightarrow{\mathrm{ANDs}}(\vec x)$ denotes the vector of all $\binom{d_0}{2}$ pairwise ANDs. But we’d like to stress that we don’t claim a layer of this large size, $\binom{d_0}{2}$, is ever present in any practical neural net we are trying to model. Instead, these features would be read in by a later model component, as the components we present below do (in particular, our U-AND construction with inputs in superposition and our QK circuit).

There is another kind of notion of a set of features having been computed, perhaps one that’s more native to the superposition picture: that of the activation vector (approximately) being a linear combination of vectors corresponding to these properties (we call these vectors f-vectors), with coefficients that are functions of the values of the features. We can also consider a version of the U-AND problem that asks for output vectors which represent the set of all pairwise ANDs in this sense, maybe with the additional requirement that the f-vectors be almost orthogonal. Our U-AND construction solves this problem, too: it computes all pairwise ANDs in both senses. See the appendix for a discussion of how the linear readoff notion of features having been computed, the linear combination notion, and almost orthogonality hang together.

1.2 The U-AND construction

We now present a solution to the U-AND task, computing $\binom{d_0}{2}$ new features with an MLP width that can be much smaller than $\binom{d_0}{2}$. We will go on to show how our solution can be tweaked to compute ANDs of more than 2 features at a time, and to compute ANDs of features which are stored in superposition in the inputs.

To solve the base problem, we present a random construction: $W$ (with shape $d\times d_0$) has iid entries which are 1 with probability $p(d)\ll1$ and 0 otherwise, and each entry in the bias vector is $-1$. We will pin down what $p$ should be later.

We will denote by $S_i$ the set of neurons that are ‘connected’ to the $i$th input, in the sense that a neuron is in $S_i$ if the $i$th entry of the row of $W$ feeding that neuron is 1. $\vec S_i$ denotes the indicator vector of $S_i$: the vector which is 1 for every neuron in $S_i$ and 0 otherwise. So $\vec S_i$ is also the $i$th column of $W$.

Then we claim that for this choice of weight matrix, all the ANDs are approximately linearly represented in the MLP activation space with readoff vectors (and feature vectors, in the sense of Appendix B) given by

$$\vec v(x_i\wedge x_j)=\vec v_{ij}=\frac{\overrightarrow{S_i\cap S_j}}{|S_i\cap S_j|}$$

for all i,j, where we continue our abuse of notation to write Si∩Sj as shorthand for the vector which is an indicator for the intersection set, and |Si∩Sj| is the size of the set.

We preface our explanation of why this works with a technical note. We are going to choose $d$ and $p$ (as functions of $d_0$) so that with high probability, all sets we talk about have size close to their expectation. To do this formally, one first shows that the probability of each individual set having size far from its expectation is smaller than any $1/\mathrm{poly}(d_0)$ using the Chernoff bound (Theorem 4 here), and one follows this by a union bound over all of the only $\mathrm{poly}(d_0)$ many sets to say that with probability $1-o(1)$, none of these events happen. For instance, if a set $S_i\cap S_j$ has expected size $\log^4d_0$, then the probability its size is outside of the range $\log^4d_0\pm\log^3d_0$ is at most $2e^{-\mu\delta^2/3}=2e^{-\log^2d_0/3}=2d_0^{-\log d_0/3}$ (following these notes, we let $\mu$ denote the expectation and $\delta$ denote the number of $\mu$-sized deviations from the expectation, so here $\mu=\log^4d_0$ and $\delta=1/\log d_0$; this bound works for $\delta<1$, which is the case here). Technically, before each construction to follow, we should list our parameters $d,p$ and all the sets we care about (for this first construction, these are the double and triple intersections between the $S_i$), argue as described above that with high probability they all have sizes that only deviate by a factor of $1+o(1)$ from their expected size, and always carry these error terms around in everything we say. We will omit all this in the rest of the U-AND section.

So, ignoring this technicality, let’s argue that the construction above indeed solves the U-AND problem (with high probability). First, note that $|S_i\cap S_j|\sim\mathrm{Bin}(d,p^2)$. We require that $p$ is big enough to ensure that all intersection sets are non-empty with high probability, but subject to that constraint we probably want $p$ to be as small as possible to minimise interference[9]. We'll choose $p=\log^2d_0/\sqrt d$, such that the intersection sets have size $|S_i\cap S_j|\approx\log^4d_0$. We split the check that the readoff works out into a few cases:

So we see that this readoff is indeed the AND of $i$ and $j$ up to error $\epsilon=O(1/\log^2d_0)$.
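The construction can be tried out numerically. The following is a minimal sketch, not the asymptotic regime of the argument above: the sizes `D0`, `D` and the density `P` are small hypothetical values chosen so the example runs quickly, and all function names are illustrative. It draws the random sets, applies the ReLU layer with bias -1, and reads off one AND by averaging over an intersection set.

```python
import random


def build_uand(d0, d, p, seed=0):
    """S[i] = set of neurons connected to input i (the support of column i of W)."""
    rng = random.Random(seed)
    return [{n for n in range(d) if rng.random() < p} for _ in range(d0)]


def activations(S, active, d):
    """ReLU(W x - 1) for the boolean input whose on-features are `active`."""
    pre = [-1] * d
    for i in active:
        for n in S[i]:
            pre[n] += 1
    return [max(0, v) for v in pre]


def read_and(S, acts, i, j):
    """Dot product of the activations with the normalised indicator of S_i ∩ S_j."""
    inter = S[i] & S[j]
    return sum(acts[n] for n in inter) / len(inter)


D0, D, P = 20, 4000, 0.1  # hypothetical sizes; expected intersection size is D * P**2 = 40
S = build_uand(D0, D, P)

both_on = read_and(S, activations(S, {2, 5}, D), 2, 5)   # exactly 1: every neuron sees 2 - 1
one_on = read_and(S, activations(S, {2, 7}, D), 2, 5)    # small: only neurons also in S_7 fire
neither = read_and(S, activations(S, {7, 9}, D), 2, 5)   # small: needs neurons in S_7 ∩ S_9
```

In this sketch the error on the false cases is the fraction of neurons in the intersection set that also belong to another active feature's set, so it shrinks as the density goes down.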

To finish, we note without much proof that everything is also computed in the sense that 'the activation vector is a linear combination of almost orthogonal features' (defined in Appendix B). The activation vector being an approximate linear combination of pairwise intersection indicator vectors with coefficients being given by the ANDs follows from triple intersections being small, as does the almost-orthogonality of these feature vectors.

U-AND allows for arbitrary XORs to be efficiently calculated

A consequence of the precise (up to $\epsilon$) nature of our universal AND is the existence of a universal XOR, in the sense of every XOR of features being computed. In this post by Sam Marks, it is tentatively observed that real-life transformers linearly compute XOR of arbitrary features in the weak sense of being able to read off tokens where XOR of two tokens is true using a linear probe (not necessarily with $\epsilon$ accuracy). This weak readoff behavior for AND would be unsurprising, as the residual stream already has this property (using the readoff vector $\vec f_i+\vec f_j$, which has maximal value if and only if $f_i$ and $f_j$ are both present). However, as Sam Marks observes, it is not possible to read off XOR in this weak way from the residual stream. We can however see that such a universal XOR (indeed, in the strong sense of $\epsilon$-accuracy) can be constructed from our strong (i.e., $\epsilon$-accurate) universal AND. To do so, assume that in addition to the residual stream containing feature vectors $\vec f_i$ and $\vec f_j$, we’ve also already almost orthogonally computed universal AND features $\vec f^{\mathrm{AND}}_{i,j}$ into the residual stream. Then we can weakly (and in fact, $\epsilon$-accurately) read off XOR from this space by taking the dot product with the vector $\vec f^{\mathrm{XOR}}_{i,j}:=\vec f_i+\vec f_j-2\vec f^{\mathrm{AND}}_{i,j}$. Then we see that if we had started with the two-hot pair $\vec f_{i'}+\vec f_{j'}$, the result of this readoff will be, up to a small error $O(\epsilon)$,

$$\begin{cases}0=0-0, & |\{i,j\}\cap\{i',j'\}|=0\ \text{(neither coefficient agrees)}\\ 1=1-0, & |\{i,j\}\cap\{i',j'\}|=1\ \text{(one coefficient agrees)}\\ 0=2-2, & \{i,j\}=\{i',j'\}\ \text{(both coefficients agree)}\end{cases}$$

This gives a theoretical feasibility proof of an efficiently computable universal XOR circuit, something Sam Marks believed to be impossible.
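The three cases can be checked in a few lines. This sketch assumes an idealised residual stream in which the feature directions and the AND feature directions are exactly orthonormal, so every readoff is exact rather than accurate up to ϵ; the dictionary representation and function names are illustrative only.

```python
from itertools import combinations


def residual(active, n):
    """Coefficient of each f_i and each AND feature f_AND_{i,j} in the idealised stream."""
    stream = {("f", i): float(i in active) for i in range(n)}
    for i, j in combinations(range(n), 2):
        stream[("and", i, j)] = float(i in active and j in active)
    return stream


def read_xor(stream, i, j):
    """Dot product with f_i + f_j - 2 f_AND_{i,j}, assuming orthonormal directions."""
    i, j = sorted((i, j))
    return stream[("f", i)] + stream[("f", j)] - 2 * stream[("and", i, j)]


two_hot = residual({0, 1}, n=4)         # the two-hot pair f_0 + f_1
xor_none = read_xor(two_hot, 2, 3)      # neither index matches: 0 - 0
xor_one = read_xor(two_hot, 0, 2)       # one index matches:     1 - 0
xor_both = read_xor(two_hot, 0, 1)      # both indices match:    2 - 2
```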

1.3 Handling inputs in superposition: sparse boolean computers

Any boolean circuit can be written as a sequence of layers executing pairwise ANDs and XORs[11] on the binary entries of a memory vector. Since our U-AND can be used to compute any pairwise ANDs or XORs of features, this suggests that we might be able to emulate any boolean circuit by applying something like U-AND repeatedly. However, since the outputs of U-AND store features in superposition, if we want to pass these outputs as inputs to a subsequent U-AND circuit, we need to work out the details of a U-AND construction that can take in features in superposition. In this section we explore the subtleties of modifying U-AND in this way. In so doing, we construct an example of a circuit which acts entirely in superposition from start to finish — nowhere in the construction are there as many dimensions as features! We consider this to be an interesting result in its own right.

U-AND's ability to compute many boolean functions of input features stored in superposition provides an efficient way to use all the parameters of the neural net to compute (up to a small error) a boolean circuit with a memory vector that is wider than the layers of the NN[12]. We call this emulating a ‘boolean computer’. However, three limitations prevent arbitrary boolean circuits from being computed:

Therefore, the boolean circuits we expect can be emulated in superposition (1) are sparse circuits, (2) have few layers, and (3) have memory vectors which are not larger than the square of the activation space dimension.

Construction details for inputs in superposition

Now we generalize U-AND to the case where input features can be in superposition. With f-vectors $\vec f_1,\dots,\vec f_m\in\mathbb R^{d_0}$, we give each feature a random set of neurons to map to, as before. After coming up with such an assignment, we set the $i$th row of $W$ to be the sum of the f-vectors for features which map to the $i$th neuron. In other words, let $F$ be the $m\times d_0$ matrix with $i$th row given by the components of $\vec f_i$ in the neuron basis:

$$F=\begin{pmatrix}\vec f_1^{\,T}\\ \vdots\\ \vec f_m^{\,T}\end{pmatrix}$$

Now let $\hat W$ be a sparse matrix (with shape $d\times m$) with entries that are iid Bernoulli random variables which are 1 with probability $p(d)\ll1$. Then:

$$W=\hat WF$$

Unfortunately, since the $\vec f_1,\dots,\vec f_m$ are random vectors, their inner products will have a typical size of $1/\sqrt{d_0}$. So, on an input which has no features connected to neuron $i$, the preactivation for that neuron will not be zero: it will be a sum of these interference terms, one for each feature that is connected to the neuron. Since the interference terms are uncorrelated and mean zero, they start to cause neurons to fire incorrectly when $\Theta(d_0)$ features are connected to each neuron. Since each feature is connected to each neuron with probability $p=\log^2d_0/\sqrt d$, this means neurons start to misfire when $m=\tilde\Theta(d_0\sqrt d)$[13]. At this point, the number of pairwise ANDs we have computed is $\binom m2=\tilde\Theta(d_0^2d)$.

This is a problem, if we want to be able to do computation on input vectors storing potentially exponentially many features in superposition, or even if we want to be able to do any sequential boolean computation at all:

Consider an MLP with several layers, all of width dMLP, and assume that each layer is doing a U-AND on the features of the previous layer. Then if the features start without superposition, there are initially dMLP features. After the first U-AND, we have Θ(d2MLP) new features, which is already too many to do a second U-AND on these features!

Therefore, we will have to modify our goal when features are in superposition. That said, we're not completely sure there isn't any modification of the construction that bypasses such small polynomial bounds. But, for example, one can't just naively make $\hat W$ sparser: $p$ can't be taken below $d^{-1/2}$ without intersection sets like $S_i\cap S_j$ becoming empty. When features were not stored in superposition, solving U-AND corresponded to computing $d_0^2$ many new features. Instead of trying to compute all pairwise ANDs of all (potentially exponentially many) input features in superposition, perhaps we should try to compute a reasonably sized subset of these ANDs. In the next section we do just that.

A construction which computes a subset of ANDs of inputs in superposition

Here, we give a way to compute ANDs of up to $d_0d$ particular feature pairs (rather than all $\binom m2$ ANDs) that works even for $m$ that is superpolynomial in $d_0$[14]. (We’ll be ignoring log factors in much of what follows.)

In U-AND, we take $\hat W$ to be a random matrix with iid 0/1 entries which are 1 with probability $p=\log^2d_0/\sqrt d$. If we only need/want to compute a subset of all the pairwise ANDs (let $E$ be the set of all pairs of inputs $\{i,j\}$ for which we want to compute the AND of $i$ and $j$), then whenever $\{i,j\}\in E$, we might want each pair of entries in the same row of columns $i$ and $j$ of the adjacency matrix $\hat W$, i.e., each pair $(\hat W)_{ki}$, $(\hat W)_{kj}$, to be a bit more correlated than an analogous pair in columns $i'$ and $j'$ with $\{i',j'\}\notin E$. Or more precisely, we want to make such pairs of columns $\{i,j\}$ have a surprisingly large intersection for the general density of the matrix. This makes sure that we get some neurons which we can use to read off the AND of $\{i,j\}$, while keeping the general density of $\hat W$ low enough that we don’t cross the density threshold at which a neuron needs to care about too many input features.

One way to do this is to pick a uniformly random set of $\log^4d_0$ neurons for each $\{i,j\}\in E$, and to set the column of $\hat W$ corresponding to input $i$ to be the indicator vector of the union of the sets assigned to gates involving $i$. This way, we can compute up to around $|E|=\tilde\Theta(d_0d)$ pairwise ANDs without having any neuron care about more than $d_0$ input features, which is the requirement from the previous section to prevent neurons misfiring when input f-vectors are random vectors in superposition with typical interference size $\Theta(1/\sqrt{d_0})$.
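A minimal sketch of this assignment follows. To keep it short it models only the sparse connectivity matrix and feeds it boolean inputs directly, so the interference between f-vectors in superposition is not simulated. The gate list `E`, the sizes, and all names are hypothetical.

```python
import random


def build_targeted(pairs, d, gate_size, seed=0):
    """Give each wanted AND gate a random neuron set; column i is the union over i's gates."""
    rng = random.Random(seed)
    gate = {frozenset(pr): set(rng.sample(range(d), gate_size)) for pr in pairs}
    cols = {}
    for pr, neurons in gate.items():
        for i in pr:
            cols.setdefault(i, set()).update(neurons)
    return gate, cols


def activations(cols, active, d):
    """ReLU(W_hat x - 1) on a boolean input (superposition of the inputs is not modelled)."""
    pre = [-1] * d
    for i in active:
        for n in cols.get(i, ()):
            pre[n] += 1
    return [max(0, v) for v in pre]


def read_gate(gate, acts, i, j):
    """Average activation over the neuron set assigned to the gate {i, j}."""
    neurons = gate[frozenset((i, j))]
    return sum(acts[n] for n in neurons) / len(neurons)


def max_features_per_neuron(cols, d):
    """Largest number of input features any single neuron is connected to."""
    load = [0] * d
    for neurons in cols.values():
        for n in neurons:
            load[n] += 1
    return max(load)


D, GATE_SIZE = 200, 8                               # hypothetical sizes
E = [(0, 1), (0, 2), (3, 4), (5, 6), (1, 6)]        # hypothetical set of wanted ANDs
gate, cols = build_targeted(E, D, GATE_SIZE)

and_on = read_gate(gate, activations(cols, {0, 1}, D), 0, 1)
and_off = read_gate(gate, activations(cols, {0}, D), 0, 1)
busiest = max_features_per_neuron(cols, D)
```

The quantity `busiest` is the one the argument above needs to keep below roughly d0 when the inputs are in superposition.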

1.4 ANDs with many inputs: computation of small boolean circuits in a single layer

It is known that any boolean circuit with $k$ inputs can be written as a linear combination of ANDs with up to $k$ inputs (fan-in up to $k$)[15], with a number of terms that is possibly exponential in $k$, which is a substantial caveat. This means that, if we can compute not just pairwise ANDs, but ANDs of all fan-ins up to $k$, then we can write down a ‘universal’ computation that computes (simultaneously, in a linearly-readable sense) all possible circuits that depend on at most $k$ inputs.
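One standard way to obtain such a linear combination is Möbius inversion over subsets of the inputs; the sketch below uses that technique as an illustration and is not taken from the post. The expansion includes the empty AND (a constant term) and fan-in 1 terms (the inputs themselves). Function names are illustrative.

```python
from itertools import combinations, product


def subsets(items):
    """All subsets of `items`, as frozensets."""
    items = list(items)
    for r in range(len(items) + 1):
        for combo in combinations(items, r):
            yield frozenset(combo)


def and_coefficients(f, k):
    """Coefficients c_S with f(x) = sum_S c_S * AND_{i in S} x_i, via Möbius inversion."""
    coeffs = {}
    for s in subsets(range(k)):
        c = sum(
            (-1) ** (len(s) - len(t)) * f(tuple(int(i in t) for i in range(k)))
            for t in subsets(s)
        )
        if c != 0:
            coeffs[s] = c
    return coeffs


def evaluate(coeffs, x):
    """Evaluate the linear combination of ANDs on a boolean input tuple."""
    return sum(c * all(x[i] for i in s) for s, c in coeffs.items())


def xor2(x):
    return x[0] ^ x[1]


def majority3(x):
    return int(sum(x) >= 2)


xor_coeffs = and_coefficients(xor2, 2)        # x0 + x1 - 2 * AND(x0, x1)
maj_coeffs = and_coefficients(majority3, 3)
maj_ok = all(evaluate(maj_coeffs, x) == majority3(x) for x in product((0, 1), repeat=3))
```

For XOR the expansion recovers the same combination used for the universal XOR readoff: the two inputs minus twice their AND.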

The U-AND construction for higher fan-in

We will modify the standard, non-superpositional U-AND construction to allow us to compute all ANDs of a specific fan-in k.

We'll need two modifications:

Now we read off the AND of a set $I$ of input features along the vector $\bigcap_{i\in I}S_i$.

We can straightforwardly simultaneously compute all ANDs of fan-ins ranging from 2 to k by just evenly partitioning the d neurons into k−1 groups — let’s label these 2,3,…,k — and setting the weights into group i and the biases of group i as in the fan-in i U-AND construction.

A clever choice of density can give us all the fan-ins at once

Actually, we can calculate all ANDs of up to some constant fan-in $k$ in a way that feels more symmetric than the option involving a partition above[16] by reusing the fan-in 2 U-AND with (let’s say) $d=d_0$ and a careful choice of $p=\frac{1}{\log^2d_0}$. This choice of $p$ is larger than $\frac{\log^2d_0}{d^{1/k}}$ for any $k$, ensuring that every intersection set is non-empty. Then, one can read off $\mathrm{AND}_{i,j}$ from $S_i\cap S_j$ as usual, but one can also read off $\mathrm{AND}_{i,j,k}$ with the composite vector

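$$-\frac{S_i\cap S_j\cap S_k}{|S_i\cap S_j\cap S_k|}+\frac{S_i\cap S_j}{|S_i\cap S_j|}+\frac{S_i\cap S_k}{|S_i\cap S_k|}+\frac{S_j\cap S_k}{|S_j\cap S_k|}$$

In general, one can read off the AND of an index set $I$ with the vector

$$\sum_{I'\subseteq I\text{ s.t. }|I'|\ge2}(-1)^{|I'|}\,v_{I'}\quad\text{where}\quad v_{I'}=\frac{\bigcap_{i\in I'}S_i}{\left|\bigcap_{i\in I'}S_i\right|}$$

(for $|I|=3$ this is the composite vector above). One can show that this inclusion-exclusion style formula works by noting that if the subset of indices of $I$ which are on is $J$, then the readoff will be approximately $\sum_{I'\subseteq I\text{ s.t. }|I'|\ge2}(-1)^{|I'|}\max(0,|I'\cap J|-1)$. We’ll leave it as an exercise to show that this is 0 if $J\ne I$ and 1 if $J=I$.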
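The exercise can be checked by brute force for small fan-ins. This sketch works with the idealised readoff only: it assumes each normalised intersection vector for a subset I′ returns exactly max(0, |I′ ∩ J| − 1) and ignores the interference from higher-order intersections. It uses the sign (−1)^|I′|, under which the fan-in 3 case has a negative triple term and positive pair terms, matching the composite vector written out above. Function names are illustrative.

```python
from itertools import combinations


def composite_readoff(index_set, on):
    """Idealised inclusion-exclusion readoff of AND over `index_set` when `on` is active."""
    indices = sorted(index_set)
    total = 0
    for size in range(2, len(indices) + 1):
        for sub in combinations(indices, size):
            total += (-1) ** size * max(0, len(set(sub) & on) - 1)
    return total


def check_all(max_fan_in):
    """True if the readoff equals the AND for every fan-in up to max_fan_in and every J."""
    for k in range(2, max_fan_in + 1):
        full = set(range(k))
        for size in range(k + 1):
            for on in combinations(range(k), size):
                if composite_readoff(full, set(on)) != int(set(on) == full):
                    return False
    return True


all_on = composite_readoff({0, 1, 2}, {0, 1, 2})   # -2 + 1 + 1 + 1
two_on = composite_readoff({0, 1, 2}, {0, 1})      # -1 + 1 + 0 + 0
formula_ok = check_all(5)
```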

Extending the targeted superpositional AND to other fan-ins

It is also fairly straightforward to extend the construction for a subset of ANDs when inputs are in superposition to other fan-ins, doing all fan-ins on a common set of neurons. Instead of picking a set for each pair that we need to AND as above, we now pick a set for each larger AND gate that we care about. As in the previous sparse U-AND, each input feature gets sent to the union of the sets for its gates, but this time, we make the weights depend on the fan-in. Letting K denote the max fan-in over all gates, for a fan-in k gate, we set the weight from each input to K/k, and set the bias to −K+1. This way, still with at most about ~Θ(d2) gates, and at least assuming inputs have at most some constant number of features active, we can read the output of a gate off with the indicator vector of its set.
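The choice of weight K/k and bias −K+1 can be sanity-checked directly. This sketch looks at a single neuron that receives input only from the k inputs of its own gate, which is an idealisation (interference from other gates sharing the neuron is ignored). Exact fractions are used to avoid rounding; the function names are illustrative.

```python
from fractions import Fraction


def gate_activation(k, K, n_on):
    """ReLU of the preactivation of a fan-in k gate neuron when n_on of its inputs are on."""
    pre = Fraction(n_on * K, k) - K + 1
    return max(0, pre)


def gates_behave(max_K):
    """True if every fan-in k <= K gate outputs 1 when all k inputs are on and 0 otherwise."""
    for K in range(2, max_K + 1):
        for k in range(2, K + 1):
            if gate_activation(k, K, k) != 1:
                return False
            if any(gate_activation(k, K, n_on) != 0 for n_on in range(k)):
                return False
    return True


weights_ok = gates_behave(8)
example_full = gate_activation(3, 5, 3)     # 3 * (5/3) - 5 + 1 = 1
example_partial = gate_activation(3, 5, 2)  # 2 * (5/3) - 5 + 1 < 0, clipped to 0
```

With k − 1 inputs on, the preactivation is 1 − K/k, which is at most 0 because K ≥ k; that is why a single bias works for all fan-ins on the same neurons.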

1.5 Improved Efficiency with a Quadratic Nonlinearity

It turns out that, if we use quadratic activation functions x↦x2 instead of ReLU's x↦ReLU(x), we can write down a much more efficient universal AND construction. Indeed, the ReLU universal AND we constructed can compute the universal AND of up to ~Θ(d3/2) features in a d-dimensional residual stream. However, in this section we will show that with a quadratic activation, for ℓ-composite vectors, we can compute all pairwise ANDs of up to m=Ω(exp(12ℓϵ2√d))[17] features stored in superposition (this is exponential in √d, so superpolynomial in d(!)) that admit a single-layer universal AND circuit.

The idea of the construction is that, on the large space of features $\mathbb R^m$, the AND of the boolean-valued feature variables $f_i,f_j$ can be written as a quadratic function $q_{i,j}:\{0,1\}^m\to\{0,1\}$; explicitly, $q_{i,j}(f_1,\dots,f_m)=f_i\cdot f_j$. Now if we embed feature space $\mathbb R^m$ into a smaller $\mathbb R^r$ in an $\epsilon$-almost-orthogonal way, it is possible to show that the quadratic function $q_{i,j}$ on $\mathbb R^m$ is well-approximated on sparse vectors by a quadratic function on $\mathbb R^r$ (with error bounded above by $2\epsilon$ on 2-sparse inputs in particular). Now the advantage of using quadratic functions is that any quadratic function on $\mathbb R^r$ can be expressed as a linear read-off of a special quadratic function $Q:\mathbb R^r\to\mathbb R^{r^2}$ given by the composition of a linear function $\mathbb R^r\to\mathbb R^{r^2}$ and a quadratic element-wise activation function on $\mathbb R^{r^2}$, which creates a set of neurons which collectively form a basis for all quadratic functions. Now we can set $d=r^2$ to be the dimension of the residual stream and work with an $r$-dimensional subspace $V$ of the residual stream, taking the almost-orthogonal embedding $\mathbb R^m\to V$. Then the map $V\xrightarrow{Q}\mathbb R^d$ provides the requisite universal AND construction. We make this recipe precise in the following section.

Construction Details

In this section we use slightly different notation to the rest of the post, dropping overarrows for vectors, and we drop the distinction between features and f-vectors.

Let V=Rr be as above. There is a finite-dimensional space of quadratic functions on Rr, with basis qij=xixj of size r2 (such that we can write every quadratic function as a linear combination of these basis functions); alternatively, we can write qij(v)=(v⋅ei)(v⋅ej), for ei,ej the basis vectors. We note that this space is spanned by a set of functions which are squares of linear functions of {xi}:

$$L^{(1)}_i(x_1,\dots,x_r)=x_i,\qquad L^{(2)}_{i,j}(x_1,\dots,x_r)=x_i+x_j,\qquad L^{(3)}_{i,j}(x_1,\dots,x_r)=x_i-x_j$$

The squares of these functions are a valid basis for the space of quadratic functions on $\mathbb R^r$ since $q_{ii}=(L^{(1)}_i)^2$ and for $i\ne j$, we have $q_{ij}=\frac{(L^{(2)}_{i,j})^2-(L^{(3)}_{i,j})^2}{4}$. There are $r$ distinct functions of type (1), and $\binom r2$ functions each of type (2) and (3), for a total of $r^2$ basis functions as before. Thus there exists a single-layer quadratic-activation neural net $Q:x\mapsto y$ from $\mathbb R^r\to\mathbb R^{r^2}$ such that any quadratic function on $\mathbb R^r$ is realizable as a "linear read-off", i.e., given by composing $Q$ with a linear function $\mathbb R^{r^2}\to\mathbb R$. In particular, we have linear "read-off" functions $\Lambda_{ij}:\mathbb R^{r^2}\to\mathbb R$ such that $\Lambda_{ij}(Q(x))=q_{ij}(x)$.
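A small sketch of the map Q and its read-offs, following the three families of linear functions above: each neuron squares one linear form, and a product of two coordinates is recovered as a linear combination of at most two neurons. The dictionary keys and function names are illustrative.

```python
def quadratic_layer(x):
    """Q(x): squares of x_i, x_i + x_j and x_i - x_j, giving r**2 neurons in total."""
    r = len(x)
    out = {("sq", i): x[i] ** 2 for i in range(r)}
    for i in range(r):
        for j in range(i + 1, r):
            out[("plus", i, j)] = (x[i] + x[j]) ** 2
            out[("minus", i, j)] = (x[i] - x[j]) ** 2
    return out


def read_product(acts, i, j):
    """Linear read-off of x_i * x_j from the quadratic layer's activations."""
    if i == j:
        return acts[("sq", i)]
    i, j = min(i, j), max(i, j)
    return (acts[("plus", i, j)] - acts[("minus", i, j)]) / 4


x = [2, -3, 5, 7]
acts = quadratic_layer(x)
n_neurons = len(acts)                 # r + 2 * C(r, 2) = r**2
prod_01 = read_product(acts, 0, 1)    # (1 - 25) / 4
prod_23 = read_product(acts, 2, 3)    # (144 - 4) / 4
square_1 = read_product(acts, 1, 1)
```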

Now suppose that $f_1,\dots,f_m$ is a collection of f-vectors which are $\epsilon$-almost-orthogonal, i.e., such that $|f_i|=1$ for any $i$ and $|f_i\cdot f_j|<\epsilon$ for all $i<j\le m$. Note that (for fixed $\epsilon<1$), there exist such collections with an exponential (in $r$) number of vectors $m$. We can define a new collection of symmetric bilinear functions (i.e., functions in two vectors $v,w\in\mathbb R^r$ which are linear in each input independently and symmetric to switching $v,w$), $\phi_{i,j}$, for a pair of (not necessarily distinct) indices $0<i\le j\le m$, defined by $\phi_{i,j}(v)=(v\cdot f_i)(v\cdot f_j)$ (this is a product of two linear functions, hence quadratic). We will use the following result:

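Proposition 1 Suppose $\phi_{i,j}$ is as above and $0<i'\le j'\le m$ is another pair of (not necessarily distinct) indices associated to feature vectors $v_{i'},v_{j'}$. Then

$$\phi_{i,j}(v_{i'},v_{j'})\begin{cases}=1, & i=i'\text{ and }j=j'\\ \in(-\epsilon,\epsilon), & (i,j)\ne(i',j')\\ \in(-\epsilon^2,\epsilon^2), & \{i,j\}\cap\{i',j'\}=\emptyset\ \text{(i.e., no indices in common)}\end{cases}$$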

This proposition follows immediately from the definition of ϕk,ℓ and the almost orthogonality property. □

Now define the single-valued quadratic function $\phi^{\text{single}}_{i,j}(v):=\frac12\phi_{i,j}(v,v)$, by applying the bilinear form to two copies of the same vector and dividing by 2. Then the proposition above implies that, for two pairs of distinct indices $0<i<j\le m$ and $0<i'<j'\le m$, we have the following behavior on the sum of two features (the superpositional analog of a two-hot vector):

$$\phi^{\text{single}}_{i,j}(v_{i'}+v_{j'})=\frac{\phi_{i,j}(v_{i'},v_{i'})+2\phi_{i,j}(v_{i'},v_{j'})+\phi_{i,j}(v_{j'},v_{j'})}{2}=\phi_{i,j}(v_{i'},v_{j'})+O(\epsilon).$$

The first formula follows from bilinearity (which is equivalent to the statement that the two entries in $\phi_{i,j}$ behave distributively) and the last formula follows from the proposition since we assumed $(i,j)$ are distinct indices, hence cannot match up with a pair of identical indices $(i',i')$ or $(j',j')$. Moreover, the $O(\epsilon)$ term in the formula above is bounded in absolute value by $2\cdot\frac{\epsilon}{2}=\epsilon$.

Combining this formula with Proposition 1, we deduce:

Proposition 2

$$\phi^{\text{single}}_{i,j}(v_{i'}+v_{j'})=\begin{cases}1+O(\epsilon), & i=i'\text{ and }j=j'\\ O(\epsilon), & (i,j)\ne(i',j')\\ O(\epsilon^2), & i\ne i'.\end{cases}$$

Moreover, by the triangle inequality, the linear constants inherent in the O(...) notation are ≤2. □

Corollary $\phi^{\text{single}}_{i,j}(v_{i'}+v_{j'})=\delta_{(i,j),(i',j')}+O(\epsilon)$, where the $\delta$ notation returns 1 when the two pairs of indices are equal and 0 otherwise.

We can now write down the universal AND function by setting $d=r^2$ above. Assume we have $m<\exp\left(\frac{\epsilon^2}{2}r\right)$. This guarantees (with probability approaching 1) that $m$ random vectors in $V\cong\mathbb R^r$ are ($\epsilon$-)almost orthogonal, i.e., have dot products $<\epsilon$. We assume the vectors $v_1,\dots,v_m$ are initially embedded in $V\subset\mathbb R^d$. (Note that we can instead assume they were initially randomly embedded in $\mathbb R^d$, then re-embedded in $\mathbb R^r$ by applying a random projection and rescaling appropriately.) Let $Q:\mathbb R^r\to\mathbb R^{d=r^2}$ be the universal quadratic map as above; we let $q_{ij}:\mathbb R^d\to\mathbb R$ be the quadratic functions as above. Now we claim that $Q$ is a universal AND with respect to the feature vectors $v_1,\dots,v_m$. Note that, since the function $\phi^{\text{single}}_{i,j}(v)$ is quadratic on $\mathbb R^r$, it can be factorized as $\phi^{\text{single}}_{i,j}(x)=\Phi_{i,j}(Q(x))$, for $\Phi_{i,j}$ some linear function on $\mathbb R^{r^2}$[18]. We now see that the linear maps $\Phi_{i,j}$ are valid linear read-offs for ANDs of features: indeed,

$$\Phi_{i,j}(Q(v_{i'}+v_{j'}))=\phi^{\text{single}}_{i,j}(v_{i'}+v_{j'})=\delta_{(i,j),(i',j')}+O(\epsilon)=\mathrm{AND}\left(b^{i',j'}_i,b^{i',j'}_j\right)+O(\epsilon),$$

where $b^{i',j'}$ is the two-hot boolean indicator vector with 1s in positions $i'$ and $j'$. Thus the AND of any two indices $i,j$ can be computed via the readout linear function $\Phi_{i,j}$ on any two-hot input $b^{i',j'}$. Moreover, applying the same argument to a larger sparse sum gives $\Phi_{i,j}\left(Q\left(\sum_{k=1}^mb_kv_k\right)\right)=\mathrm{AND}(b_i,b_j)+O(s^2\epsilon)$, where $s=\sum_{k=1}^mb_k$ is the sparsity[19].
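A numerical sketch of this read-off on random feature vectors follows. It evaluates the quadratic function (v·f_i)(v·f_j) directly instead of routing it through the r² neurons of Q, which is assumed here to be equivalent because any quadratic function is a linear read-off of Q. The dimension `R`, the number of features `M`, and the names are hypothetical.

```python
import math
import random


def random_unit_vectors(m, r, seed=0):
    """m random unit vectors in R^r; in high dimension these are almost orthogonal."""
    rng = random.Random(seed)
    vecs = []
    for _ in range(m):
        v = [rng.gauss(0.0, 1.0) for _ in range(r)]
        norm = math.sqrt(sum(c * c for c in v))
        vecs.append([c / norm for c in v])
    return vecs


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def superpose(feats, active):
    """Sum of the feature vectors of the active features."""
    return [sum(feats[k][c] for k in active) for c in range(len(feats[0]))]


def quadratic_readoff(feats, i, j, v):
    """(v . f_i)(v . f_j), a quadratic function of v."""
    return dot(v, feats[i]) * dot(v, feats[j])


R, M = 900, 8  # hypothetical sizes
feats = random_unit_vectors(M, R)

hit = quadratic_readoff(feats, 0, 1, superpose(feats, [0, 1]))       # close to 1
overlap = quadratic_readoff(feats, 0, 1, superpose(feats, [0, 2]))   # close to 0
disjoint = quadratic_readoff(feats, 0, 1, superpose(feats, [2, 3]))  # closer to 0 still
```

The deviations from 0 and 1 are set by the dot products between distinct feature vectors, which have typical size 1/√R for random unit vectors.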

Scaling and comparison with ReLU activations

It is surprising that the universal AND circuit we wrote down for quadratic activations is so much more expressive than the one we have for ReLU activations, since the conventional wisdom for neural nets is that expressivity does not increase significantly when we replace arbitrary (suitably smooth) activation functions by quadratic ones. We do not know if this is a genuine advantage of quadratic activations over others (one which might indeed be implemented in transformers in some sophisticated way involving attention nonlinearities), or whether there is some yet-unknown reason that (perhaps assuming nice properties of our features) ReLUs can give more expressive universal AND circuits than we have been able to find in the present work. We list this discrepancy as an interesting open problem that follows from our work.

Generalizations

Note that the nonlinear function $Q$ above lets us read off not only the AND of two sparse boolean vectors, but more generally the sum of products of coordinates of any sufficiently sparse linear combination of feature vectors $v_i$ (not necessarily boolean). More generally, if we replace quadratic activations with cubic or higher, we can get cubic expressions, such as the sum of triple ANDs (or, more generally, products of triples of coordinates). A similar effect can be obtained by chaining $l$ sequential levels of quadratic activations to get polynomial nonlinearities with exponent $e=2^l$. Then so long as we can fit $O(r^e)$[20] features in the residual stream in an almost-orthogonal way (corresponding to a basis of monomials of degree $e$ on $r$-dimensional space), we can compute sums of any degree-$e$ monomial over features, and thus any boolean circuit of degree $e$, up to $O(\epsilon)$, where the linear constant implicit in the $O$ depends on the exponent $e$. This implies that for any value $e$, there is a dimension $d$ universal nonlinear map $\mathbb R^d\to\mathbb R^d$ with $\lceil\log_2(e)\rceil$ quadratic activations such that any sparse boolean circuit involving $\le e$ elements is linearly represented (via an appropriate readoff vector). Moreover, keeping $e$ fixed, $d$ grows only as $O(\log(n))^e$. However, the constant associated with the big-O notation might grow quite quickly as the exponent $e$ increases. It would be interesting to analyse this scaling behavior more carefully, but that is outside the scope of the present work.

1.6 Universal Keys: an application of parallel boolean computation

So far, we have used our universal boolean computation picture to show that superpositional computation in a fully-connected neural network can be more efficient (specifically, compute roughly as many logical gates as there are parameters rather than non-superpositional implementations, which are bounded by number of neurons). This does not fully use the universality of our constructions: i.e., we must at every step read a polynomial (at most quadratic) number of features from a vector which can (in either the fan-in-k or quadratic-activation contexts) compute a superpolynomial number of boolean circuits. At the same time, there is a context in transformers where precisely this universality can give a remarkable (specifically, superpolynomial in certain asymptotics) efficiency improvement. Namely, recall that the attention mechanism of a transformer can be understood as a way for the last-token residual stream to read information from past tokens which pass a certain test associated to the query-key component. In our simplified boolean model, we can conceptualize this as follows:

Importantly, there is an information asymmetry between the “past” tokens (which contribute the key) and the last token that implements the linear read-off via query: in generating the boolean relevance function, the last token can use information that is not accessible to the past token generating the key (as that information is in the past token's “future”; this is captured e.g. by the attention mask). One might previously have assumed that in generating a key vector, tokens need to “guess” which specific combinations of key features may be relevant to future tokens, and separately generate some read-off for each; this limits the possible expressivity of choosing the relevance function $g$ to a small (e.g. linear in parameter number) number of possibilities.

However, our discovery of circuits that implement universal calculation suggests a surprising way to resolve this information asymmetry: namely, using a universal calculation, the key can simultaneously compute, in an approximately linearly-readable way, ALL possible simple circuits of up to $O(\log(d_{\text{resid}}))$ inputs. This increases the number of possibilities of the relevance function $g$ to allow all such simple circuits; this can be significantly larger than the number of parameters and asymptotically (for logarithmic fan-ins) will in fact be superpolynomial[21]. As far as we are aware, this presents a qualitative (from a complexity-theoretic point of view) update to the expressivity of the attention mechanism compared to what was known before.

Sam Marks’ discovery of the universal XOR was done in this context: he observed using a probe that it is possible for the last token of a transformer to attend to past tokens that return True as the XOR of an arbitrary pair of features, something that he originally believed was computationally infeasible.

We speculate that this will be noticeable in real-life transformers, and can partially explain the observation that transformers tend to implement more superposition than fully-connected neural networks.

2 U-AND: discussion

We discuss some conceptual matters broadly having to do with whether the formal setup from the previous section captures questions of practical interest. Each of these subsections is standalone, and you needn’t read any to read Section 3.

Aren't the ANDs already kinda linearly represented in the U-AND input?

This subsection refers to the basic U-AND construction from Section 1.1, with inputs not in superposition, but the objection we consider here could also be raised against other U-AND variants. The objection is this: aren’t ANDs already linearly present in the input, so in what sense have we computed them with the U-AND? Indeed, if we take the dot product of a particular 2-hot input with $(\vec{e}_i+\vec{e}_j)/2$, we get 0 if neither the $i$th nor the $j$th feature is present, 1/2 if exactly one of them is present, and 1 if both are present. If we add a bias of −1/4, then without any nonlinearity at all, we get a way to read off pairwise U-AND for ϵ=1/4. The only thing the nonlinearity lets us do is to reduce this “interference” ϵ=1/4 to a smaller ϵ. Why is this important?

In fact, one can show that you can't get more accurate than ϵ=1/4 without a nonlinearity, even with a bias, and ϵ=1/4 is not good enough for any interesting boolean circuit. Here’s an example to illustrate the point:

Suppose that I am interested in the variable $z=\wedge(x_i,x_j)+\wedge(x_k,x_l)$. $z$ takes on a value in $\{0,1,2\}$ depending on whether neither, one, or both of the ANDs are on. The best linear approximation to $z$ is $\tfrac{1}{2}(x_i+x_j+x_k+x_l-1)$, which has completely lost the structure of $z$: it no longer carries any information about which way the 4 variables were paired up in the ANDs.
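The example above can be checked by brute force. The following minimal Python sketch enumerates all boolean inputs on four features and compares the exact z for two different pairings against the linear approximation quoted in the text. The function names and the index convention (0, 1, 2, 3 standing for i, j, k, l) are illustrative choices, not from the post.

```python
from itertools import product

def z_pairing(x, pairs):
    """Exact z: the number of ANDs that are on, for a given pairing of indices."""
    return sum(x[a] & x[b] for a, b in pairs)

def linear_approx(x):
    """The linear approximation from the text: 1/2 * (x_i + x_j + x_k + x_l - 1).

    Note that it takes no pairing argument: it only sees how many features are on.
    """
    return 0.5 * (sum(x) - 1)

pairing_a = [(0, 1), (2, 3)]  # AND(x_i, x_j) + AND(x_k, x_l)
pairing_b = [(0, 2), (1, 3)]  # AND(x_i, x_k) + AND(x_j, x_l)

inputs = list(product([0, 1], repeat=4))

# Inputs on which the two pairings disagree about the exact value of z.
differ = [x for x in inputs if z_pairing(x, pairing_a) != z_pairing(x, pairing_b)]

# Worst-case error of the same linear approximation against each pairing.
max_err_a = max(abs(linear_approx(x) - z_pairing(x, pairing_a)) for x in inputs)
max_err_b = max(abs(linear_approx(x) - z_pairing(x, pairing_b)) for x in inputs)

print("inputs where the pairings disagree:", differ)
print("worst-case linear error:", max_err_a, max_err_b)
```

The two pairings give different values of z on some inputs, yet the linear expression is identical for both, so no linear read-off of this form can tell them apart.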

In general, computing a boolean expression with k terms without the signal being drowned out by the noise will require $\epsilon<1/k$ if the noise is correlated, and $\epsilon<1/k^2$ if the noise is uncorrelated. In other words, noise reduction matters! The precision provided by ϵ-accuracy allows us to go from only recording ANDs to executing more general circuits in an efficient or universal way. Indeed, linear combinations of linear combinations just give more linear combinations – the noise reduction is the difference between being able to express any boolean function and being unable to express anything nonlinear at all. The XOR construction (given above) is another example that can be expressed as a linear combination involving the U-AND and would not work without the nonlinearity.
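To make the XOR remark concrete, here is a small Python sketch. It assumes the XOR is built with the standard identity XOR(a, b) = a + b − 2·AND(a, b); we have not checked that this matches the construction referred to above in every detail. It compares what happens when the AND is replaced by the purely linear ϵ=1/4 read-off versus an exact AND.

```python
from itertools import product

def and_linear(xi, xj):
    """Purely linear AND estimate: dot product with (e_i + e_j)/2 plus a bias of -1/4."""
    return 0.5 * (xi + xj) - 0.25

def xor_from_and(xi, xj, and_value):
    """XOR as a linear combination of the inputs and an AND value (assumed identity)."""
    return xi + xj - 2 * and_value

# XOR computed from the linear AND estimate, for every pair of boolean inputs.
xor_linear = {(xi, xj): xor_from_and(xi, xj, and_linear(xi, xj))
              for xi, xj in product([0, 1], repeat=2)}

# XOR computed from an exact (noise-free) AND.
xor_exact = {(xi, xj): xor_from_and(xi, xj, xi & xj)
             for xi, xj in product([0, 1], repeat=2)}

print("XOR from linear AND:", xor_linear)
print("XOR from exact AND: ", xor_exact)
```

With the linear estimate, the input-dependent terms cancel and the "XOR" is the same constant on every input, which is the sense in which linear combinations of linear combinations cannot express anything nonlinear.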

Aren’t the ANDs already kinda nonlinearly represented in the U-AND input?

This subsection refers to the basic U-AND construction from Section 1.1, with inputs not in superposition, but the objection we consider here could also be raised against other U-AND variants. While one cannot read off the ANDs linearly before the ReLU, except with a large error, one could certainly read them off with a more expressive model class on the activations. In particular, one can easily read $\mathrm{AND}_{i,j}$ off with a ReLU probe, by which we mean $\mathrm{ReLU}(r^{T}x+b)$, with $r=e_i+e_j$ and $b=-1$. We think there’s some truth to this: we agree that if something can be read off with such a probe, it’s indeed at least almost already there. And if we allowed multi-layer probes, the ANDs would be present already when we only have some pre-input variables (that our input variables are themselves nonlinear functions of). To explore a limit in ridiculousness: if we take stuff to be computed if it is recoverable by a probe that has the architecture of GPT-3 minus the embed and unembed and followed by a projection on the last activation vector of the last position residual stream, then anything that is linearly accessible in the last layer of GPT-3 is already ‘computed’ in the tuple of input embeddings. And to take a broader perspective: any variable ever computed by a deterministic neural net is in fact a function of the input, and is thus already ‘there in the input’ in an information-theoretic sense (anything computed by the neural net has zero conditional entropy given the input). The information about the values of the ANDs is sort of always there, but we should think of it as not having been computed initially, and as having been computed later[22].
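The ReLU probe described above is easy to verify exhaustively on a small input. The sketch below is illustrative only: it uses a 5-feature boolean input that is not in superposition, and the helper names are ours.

```python
from itertools import combinations, product

def relu(v):
    return max(0.0, v)

def relu_probe_and(x, i, j):
    """ReLU probe with r = e_i + e_j and b = -1, so r^T x + b = x[i] + x[j] - 1."""
    return relu(x[i] + x[j] - 1.0)

n_features = 5  # illustrative size
mismatches = [
    (x, i, j)
    for x in product([0, 1], repeat=n_features)
    for i, j in combinations(range(n_features), 2)
    if relu_probe_and(x, i, j) != (x[i] & x[j])
]

print("probe disagrees with AND on", len(mismatches), "cases")
```

The pre-activation takes values in {−1, 0, 1}, and the ReLU maps these to {0, 0, 1}, which is exactly the AND with no residual error.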

Anyway, while taking something to be computed when it is affinely accessible seems natural when considering reading that information into future MLPs, we do not have an incredibly strong case that it’s the right notion. However, it seems likely to us that once one fixes some specific notion of stuff having been computed, then either exactly our U-AND construction or some minor variation on it would still compute a large number of new features (with more expressive readoffs, these would just be more complex properties — in our case, boolean functions of the inputs involving more gates). In fact, maybe instead of having a notion of stuff having been computed, we should have a notion of stuff having been computed for a particular model component, i.e. having been represented such that a particular kind of model component can access it to ‘use it as an input’. In the case of transformers, maybe the set of properties that have been computed as far as MLPs can tell is different than the set of properties that have been computed as far as attention heads (or maybe the QK circuit and OV circuit separately) can tell. So, we’re very sympathetic to considering alternative notions of stuff having been computed, but we doubt U-AND would become much less interesting given some alternative reasonable such notion.

You might think all this points to something like it being weird to have such a discrete notion of stuff having been computed vs. not at all, and that we should instead see models as ‘more continuously cleaning up representations’ rather than performing computation. We don’t at present know of a good quantitative notion of ‘representation cleanliness’, so we can’t tell you that our U-AND makes amount x of representation cleanliness progress and that x is large compared to some default. Still, it seems intuitively plausible to us that it makes a good deal of such progress. A place where linear read-offs are clearly qualitatively important and better than nonlinear read-offs is in application to the attention mechanism of a transformer.

Does our U-AND construction really demonstrate MLP superposition?

This subsection refers to the basic U-AND construction from Section 1.1, with inputs not in superposition, but the objection we consider here could also be raised against other U-AND variants. One could try to tell a story that interprets our U-AND construction in terms of the neuron basis: we can also describe the U-AND as approximately computing a family of functions, each of which records whether at least two features are present out of a particular subset of features[23]. Why should we see the construction as computing outputs into superposition, instead of seeing it as computing these different outputs on the neurons? Perhaps the 'natural' units for understanding the NN are these functions, as unintuitive as they may seem to a human.

In fact, there is a sense in which if one describes the sampled construction in the most natural way it can be described in the superposition picture, one needs to spend more bits than if one describes it in the most natural way it can be described in this neuron picture. In the neuron picture, one needs to specify a subset of size $\tilde{\Theta}(d_0/\sqrt{d})$ for each neuron, which takes $d\log_2\binom{d_0}{\tilde{\Theta}(d_0/\sqrt{d})}\le\tilde{\Theta}(d_0^2/\sqrt{d})$ bits to specify. In the superpositional picture, one needs to specify $\binom{d_0}{2}$ subsets of size $\tilde{\Theta}(1)$, which takes about $\tilde{\Theta}(d_0^2)$ bits to specify[24]. If, let’s say, $d=d_0$, then from the point of view of saving bits when representing such constructions, we might even prefer to see them in a non-superpositional manner!
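For a feel of the magnitudes, here is a back-of-the-envelope Python sketch of the two bit counts. It makes simplifying assumptions that are ours, not the post's: all polylogarithmic factors hidden in the tilde notation are dropped, each neuron reads exactly round(d0/√d) inputs, and each pairwise AND is tied to a single neuron (the `neurons_per_and` parameter is hypothetical).

```python
import math

def neuron_picture_bits(d0, d):
    """Bits to name, for each of the d neurons, which ~d0/sqrt(d) inputs feed into it."""
    subset_size = round(d0 / math.sqrt(d))  # assumption: polylog factors dropped
    return d * math.log2(math.comb(d0, subset_size))

def superposition_picture_bits(d0, d, neurons_per_and=1):
    """Bits to name, for each of the C(d0, 2) pairwise ANDs, a small set of neurons."""
    # assumption: each AND is associated with `neurons_per_and` of the d neurons
    return math.comb(d0, 2) * math.log2(math.comb(d, neurons_per_and))

d0 = d = 1024  # illustrative sizes with d = d0
neuron_bits = neuron_picture_bits(d0, d)
superposition_bits = superposition_picture_bits(d0, d)
print(f"neuron picture:        ~{neuron_bits:,.0f} bits")
print(f"superposition picture: ~{superposition_bits:,.0f} bits")
```

Under these assumptions the neuron-basis description comes out shorter at d = d0, in line with the comparison in the text; the exact numbers should not be read as more than an order-of-magnitude illustration.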

We can imagine cases (of something that looks like this U-AND showing up in a model) in which we’d agree with this counterargument. For any fixed U-AND construction, we could imagine a setup where for each neuron, the inputs feeding into it form some natural family — slightly more precisely, that whether two elements of this family are present is a very natural property to track. In fact, we could imagine a case where we perform future computation that is best seen as being about these properties computed by the neurons — for instance, our output of the neural net might just be the sum of the activations of these neurons. For instance, perhaps this makes sense because having two elements of one of these families present is necessary and sufficient for an image to be that of a dog. In such a case, we agree it would be silly to think of the output as a linear combination of pairwise AND features.

However, we think there are plausible contexts in which such a circuit would show up in which it seems intuitively right to see the output as a sparse sum of pairwise ANDs: when the families tracked by particular neurons do not seem at all natural and/or when it is reasonable to see future model components as taking these pairwise AND features as inputs. Conditional on thinking that superposition is generic, it seems fairly reasonable to think that these latter contexts would be generic.

Is universal calculation generic?

The construction of the universal AND circuit in the “quadratic nonlinearity” section above can be shown to be stable to perturbations; a large family of suitably “random” circuits in this paradigm contain all AND computations in a linearly-readable way. This updates us to suspect that at least some of our universal calculation picture might be generic: i.e., that a random neural net, or a random net within some mild set of conditions (that we can’t yet make precise), is sufficiently expressive to (weakly) compute any small circuit. Thus linear probe experiments such as Sam Marks’ identification of the “universal XOR” in a transformer may be explainable as a consequence of sufficiently complex, “random-looking” networks. This means that the correct framing for what happens in a neural net executing superposition might not be that the MLP learns to encode universal calculation (such as the U-AND circuit), but rather that such circuits exist by default, and what the neural network needs to learn is, rather, a readoff vector for the circuit that needs to be executed. While we think that this would change much of the story (in particular, the question of “memorization” vs. “generalization” of a subset of such boolean circuit features would be moot if general computation generically exists), this would not change the core fact that such universal calculation is possible, and therefore likely to be learned by a network executing (or partially executing) superposition. In fact, such an update would make it more likely that such circuits can be utilized by the computational scheme, and would make it even more likely that such a scheme would be learned by default.
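As a toy illustration of "the circuit exists by default and only the read-off needs to be found", the NumPy sketch below uses an untrained random layer with a quadratic activation and reads pairwise ANDs off it linearly. Everything specific here is an assumption made for illustration rather than the construction from the post: i.i.d. standard normal weights, inputs not in superposition, the sizes `d0` and `d`, and the closed-form read-off vector (which relies on the Gaussian moment identity stated in its docstring).

```python
from itertools import combinations, product

import numpy as np

rng = np.random.default_rng(0)
d0, d = 6, 50_000                 # number of input features, hidden width (illustrative)
W = rng.standard_normal((d, d0))  # random, untrained first-layer weights

def hidden(x):
    """Quadratic-activation layer: each neuron squares a random projection of x."""
    return (W @ x) ** 2

def readoff_vector(i, j):
    """Hypothetical linear read-off for AND(x_i, x_j), with i != j.

    For i.i.d. standard normal weights, E[w_i * w_j * (w . x)^2] = 2 * x_i * x_j,
    so averaging w_i * w_j * hidden over neurons and halving estimates x_i * x_j.
    """
    return W[:, i] * W[:, j] / (2 * d)

# Sparse boolean inputs: at most 3 of the d0 features are on.
inputs = [np.array(bits, dtype=float)
          for bits in product([0, 1], repeat=d0) if sum(bits) <= 3]

max_err = 0.0
for x in inputs:
    h = hidden(x)
    for i, j in combinations(range(d0), 2):
        estimate = readoff_vector(i, j) @ h
        max_err = max(max_err, abs(estimate - x[i] * x[j]))

print(f"worst read-off error over all pairs and sparse inputs: {max_err:.3f}")
```

The layer itself is never trained; only the read-off direction depends on which AND we want. The error shrinks as the width grows and increases with the number of active features, so this says nothing yet about the regime with inputs in superposition.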

We hope to do a series of experiments to check whether this is the case: whether a random network in a particular class executes universal computation by default. If we find this is the case, we plan to train a network to learn an appropriate read-off vector starting from a suitably random MLP circuit, and, separately, to check whether existing neural networks take advantage of such structure (i.e., have features – e.g. found by dictionary learning methods – which linearly read off the results of such circuits). We think this would be particularly productive in the attention mechanism (in the context of “universal key” generation, as explained above).

What are the implications of using ϵ-accuracy? How does this compare to behavior found by minimizing some loss function?

A specific question here is:

The answer is that sometimes they are not going to be the same. In particular, our algorithm may not be given a low loss by MSE. Nevertheless, we think that ϵ-accuracy is a better thing to study for understanding superposition than MSE or other commonly considered loss functions (cross entropy would be much less wise than either!). This point is worth addressing properly, because it has implications for how we think about superposition and how we interpret results from the toy models of superposition paper and from sparse autoencoders, both of which typically use MSE.

For our U-AND task, we ask for a construction $\vec{f}(\vec{x})$ that approximately equals a 1-hot target vector $\vec{y}$, with each coordinate allowed to differ from its target value by at most ϵ. A loss function which would correspond to this task would look like a cube well with vertical sides (the inside of the region $L_\infty(\vec{f}(\vec{x}),\vec{y})<\epsilon$). This non-differentiable loss function would be useless for training. Let’s compare this choice to alternatives and defend it.
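To see how the ϵ-accuracy criterion and MSE can rank outputs differently, consider the following minimal Python sketch. The two candidate outputs, the dimension, and ϵ=0.25 are made-up illustrative values, and the function names are ours.

```python
def linf_error(f, y):
    """Largest coordinate-wise deviation between output f and target y."""
    return max(abs(a - b) for a, b in zip(f, y))

def mse(f, y):
    """Mean squared error between output f and target y."""
    return sum((a - b) ** 2 for a, b in zip(f, y)) / len(y)

def is_eps_accurate(f, y, eps):
    """The 'cube well' criterion: every coordinate is within eps of its target."""
    return linf_error(f, y) < eps

n, eps = 100, 0.25
target = [1.0] + [0.0] * (n - 1)     # 1-hot target

# Output A: small interference (0.1) spread over every coordinate.
spread_noise = [t + 0.1 for t in target]

# Output B: exact everywhere except the one coordinate that matters, off by 0.6.
one_big_miss = [0.4] + [0.0] * (n - 1)

for name, out in [("spread_noise", spread_noise), ("one_big_miss", one_big_miss)]:
    print(name, "eps-accurate:", is_eps_accurate(out, target, eps),
          "MSE:", round(mse(out, target), 4))
```

Here the output with a little interference everywhere is ϵ-accurate but has the higher MSE, while the output that gets the one active coordinate badly wrong has the lower MSE but fails ϵ-accuracy. This is the kind of disagreement between the two criteria discussed above.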

If we know that our target is always a 1-hot vector, then maybe we should have a softmax at the end of the network and use cross-entropy loss. We purposefully avoid this, because we are trying to construct a toy model of the computation that happens in intermediate layers of a deep neural network, taking one activation vector to a subsequent activation vector. In the process there is typically no softmax involved. Also, we want to be able to handle datapoints in which more than 1 AND is present at a time: the task is not to choose which AND is present, but *which of the ANDs* are present.