Has Tyler Cowen ever explicitly admitted to being wrong about anything?

Not 'revised estimates' or 'updated predictions' but 'I was wrong'.

Every time I see him talk about learning something new, he always seems to be talking about how this vindicates what he said/thought before.

Gemini 2.5 pro didn't seem to find anything, when I did a max reasoning budget search with url search on in aistudio.

EDIT: An example was found by Morpheus, of Tyler Cowen explictly saying he was wrong - see the comment and the linked PDF below

In the post 'Can economics change your mind?' he has a list of examples where he has changed his mind due to evidence:

...1. Before 1982-1984, and the Swiss experience, I thought fixed money growth rules were a good idea. One problem (not the only problem) is that the implied interest rate volatility is too high, or exchange rate volatility in the Swiss case.

2. Before witnessing China vs. Eastern Europe, I thought more rapid privatizations were almost always better. The correct answer depends on circumstance, and we are due to learn yet more about this as China attempts to reform its SOEs over the next five to ten years. I don’t consider this settled in the other direction either.

3. The elasticity of investment with respect to real interest rates turns out to be fairly low in most situations and across most typical parameter values.

4. In the 1990s, I thought information technology would be a definitely liberating, democratizing, and pro-liberty force. It seemed that more competition for resources, across borders, would improve economic policy around the entire world. Now this is far from clear.

5. Given the greater ease of converting labor income into capit

This seems to be a really explicit example of him saying that he wss wrong about something, thank you!

Didn't think this would exist/be found, but glad I was wrong.

thank you for this search. Looking at the results, top 3 are by commentors.

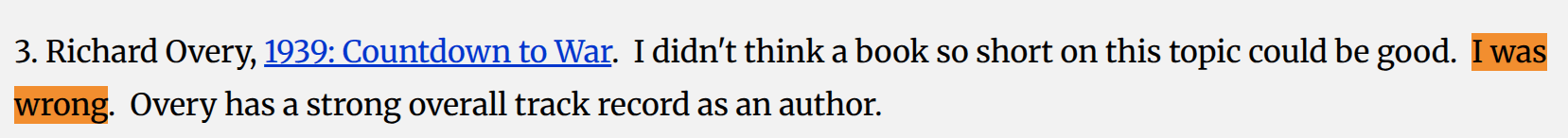

Then one about not thinking a short book could be this good.

I don't think this is Cowen actually saying he made a wrong prediction, just using it to express how the book is unexpectedly good at talking about a topic that might normally take longer, though happy to hear why I'm wrong here.

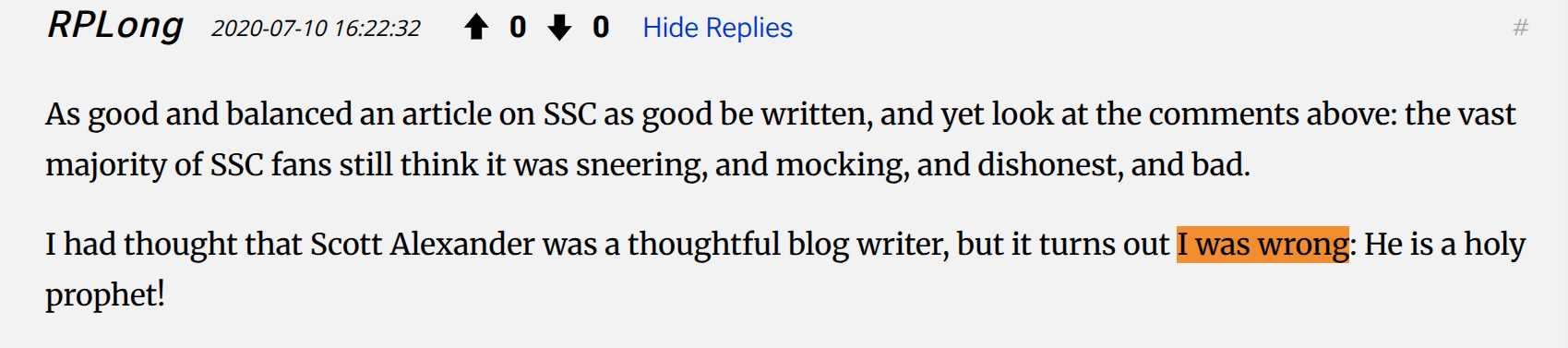

Another commentor:

another commentor:

Ending here for now, doesn't seem to be any real instances of Tyler Cowen saying he was wrong about something he thought was true yet.

So, apparently, I'm stupid. I could have been making money this whole time, but I was scared to ask for it

i've been giving a bunch of people and businesses advice on how to do their research and stuff. one of them messaged me, i was feeling tired and had so many other things to do. said my time is busy.

then thought fuck it, said if they're ok with a $15 an hour consulting fee, we can have a call. baffled, they said yes.

then realized, oh wait, i have multiple years of experience now leading dev teams, ai research teams, organizing research hackathons and getting frontier research done.

wtf

if someone who's v good at math wants to do some agent foundations stuff to directly tackle the hard part of alignement, what should they do?

If they're talented, look for a way to search over search processes without incurring the unbounded loss that would result by default.

If they're educated, skim the existing MIRI work and see if any results can be stolen from their own field.

I currently think we're mostly interested in properties that apply at all timesteps, or at least "quickly", as well as in the limit; rather than only in the limit. I also think it may be easier to get a limit at all by first showing quickness, in this case, but not at all sure of that.

Hi, I'm running AI Plans, an alignment research lab. We've run research events attended by people from OpenAI, DeepMind, MIRI, AMD, Meta, Google, JPMorganChase and more. And had several alignment breakthroughs, including a team finding out that LLMs are maximizers, one of the first Interpretability based evals for LLMs, finding how to cheat every AI Safety eval that relies on APIs and several more.

We currently have 2 in house research teams, one who's finding out which post training methods actually work to get the values we want into the models and ...

Hi, I'm hosting an AI Safety Law-a-Thon on October 25th to 26th. Will be pairing up AI Safety researchers with lawyers to share knowledge and brainstorm risk scenarios. If you've ever talked/argued about p doom and know what a mesaoptimizer is, then you've already done something very similar to this.

Main difference here is that you'll be able to reduce p doom in this one! Many of the lawyers taking part are from top, multi-billion dollar companies, advisors to governments, etc. And they know essentially nothing about alignment. You might be concerned...

Maybe there's a filtering effect for public intellectuals.

If you only ever talk about things you really know a lot about, unless that thing is very interesting or you yourself are something that gets a lot of attention (e.g. a polyamorous cam girl who's very good at statistics, a Muslim Socialist running for mayor in the world's richest city, etc), you probably won't become a 'public intellectual'.

And if you venture out of that and always admit it when you get something wrong, explicitly, or you don't have an area of speciality and admit to get...

it's so unnecessarily hard to get funding in alignment.

they say 'Don't Bullshit' but what that actually means is 'Only do our specific kind of bullshit'.

and they don't specify because they want to pretend that they don't have their own bullshit

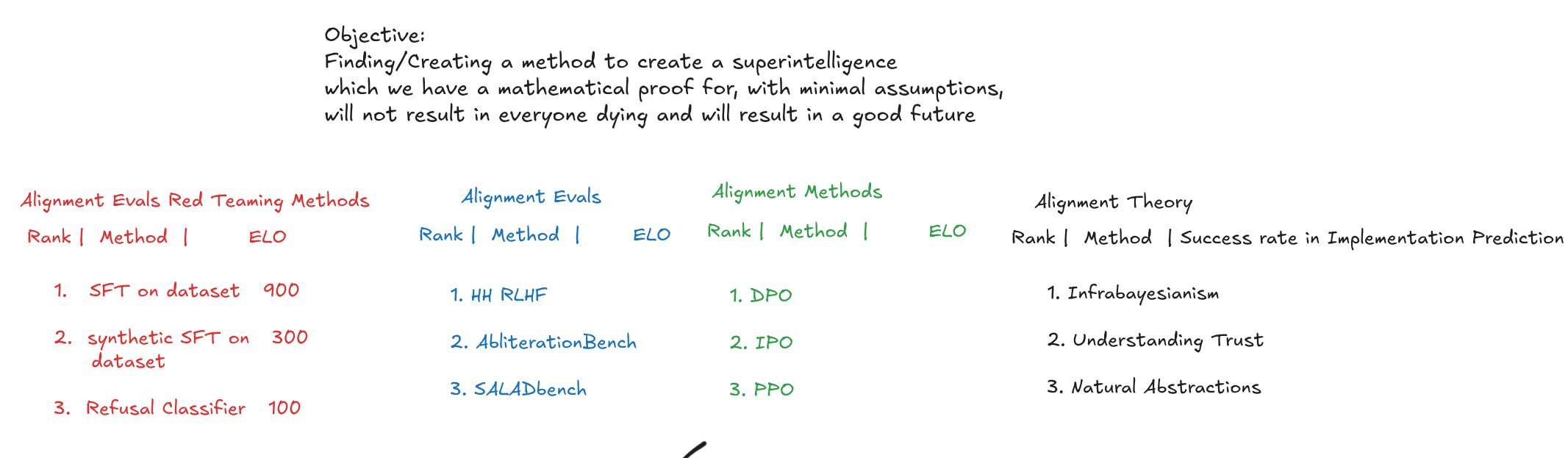

Working on a meta plan for solving alignment, I'd appreciate feedback & criticism please - the more precise the better. Feel free to use the emojis reactions if writing a reply you'd be happy with feels taxing.

Diagram for visualization - items in tables are just stand-ins, any ratings and numbers are just for illustration, not actual rankings or scores at this moment.

Red and Blue teaming Alignment evals

Make lots of red teaming methods to reward hack alignment evals

Use this to find actually useful alignment evals, then red team and reward hack them...

For AI Safety funders/regranters - e.g. Open Phil, Manifund, etc:

It seems like a lot of the grants are swayed by 'big names' being on there. I suggest making anonymity compulsary if you want to more merit based funding, that explores wider possibilities and invests in more upcoming things.

Treat it like a Science rather than the Bragging Competition it currently is.

A Bias Pattern atm seems to be that the same people get funding, or recommended funding by the same people, leading to the number of innovators being very small, or growing much...

A solution I've come around to for this is retroactive funding. As in, if someone did something essentially without funding, that resulted in outcomes, which if you knew were guaranteed, you would have funded/donated to the project, then donate to the person to encourage them to do it more.

i earnt more from working at a call center for about 3 months than i have in 2+ years of working in ai safety.

And i've worked much harder in this than I did at the call center

if serious about us china cooperation and not cargo culting, please read: https://www.cac.gov.cn/2025-09/15/c_1759653448369123.htm

i messed things up a lot in organizing the moonshot program. working hard to make sure the future events will be much better. lots of things i can do.

Do you think you can steal someone's parking spot?

If yes, what exactly do you think you're stealing?

You're "stealing" their opportunity to use that space. In legal terms, assuming they had a right to the spot, you'd be committing an unauthorized use of their property, causing deprivation of benefit or interference with use.

AIgainst the Gods

Cultivation story, but instead of cultivation, it's a post AGI story in a world that's mostly a utopia. But, there are AGI overlords, which are basically benevolent.

There's a very stubborn young man, born in the classical sense (though without any problems like ageing disease, serious injuries, sickness, etc that people used to have - and without his mother having any of the screaming pain that childbirth used to have, or risk of life), who hates the state of power imbalance.

He doesnt want the Gods to just give him power ...

Trying to put together a better explainer for the hard part of alignment, while not having a good math background https://docs.google.com/document/d/1ePSNT1XR2qOpq8POSADKXtqxguK9hSx_uACR8l0tDGE/edit?usp=sharing

Please give feedback!

this might basically be me, but I'm not sure how exactly to change for the better. theorizing seems to take time and money which i don't have.

Thinking about judgement criteria for the coming ai safety evals hackathon (https://lu.ma/xjkxqcya )

These are the things that need to be judged:

1. Is the benchmark actually measuring alignment (the real, scale, if we dont get this fully right right we die, problem)

2. Is the way of Deceiving the benchmark to get high scores actually deception, or have they somehow done alignment?

Both of these things need:

- a strong deep learning & ml background (ideally, muliple influential papers where they're one of the main authors/co-authors, or do...

my mum said to my little sister to take a break from her practice test for the eleven plus and come eat dinner, in the kitchen, with the rest of the family, my gran me and her (dad is upsairs, he's rarely here for family dinner). my little sister, in a trembling voice said 'but then dad will say '

mum sharply says to leave it and come eat dinner. she leaves the living room where my little sister is, goes to the kitchen. my little sister tries to shut off the lights in the living room, when it stutters, beats her little hands onto it in frustration.

mum...

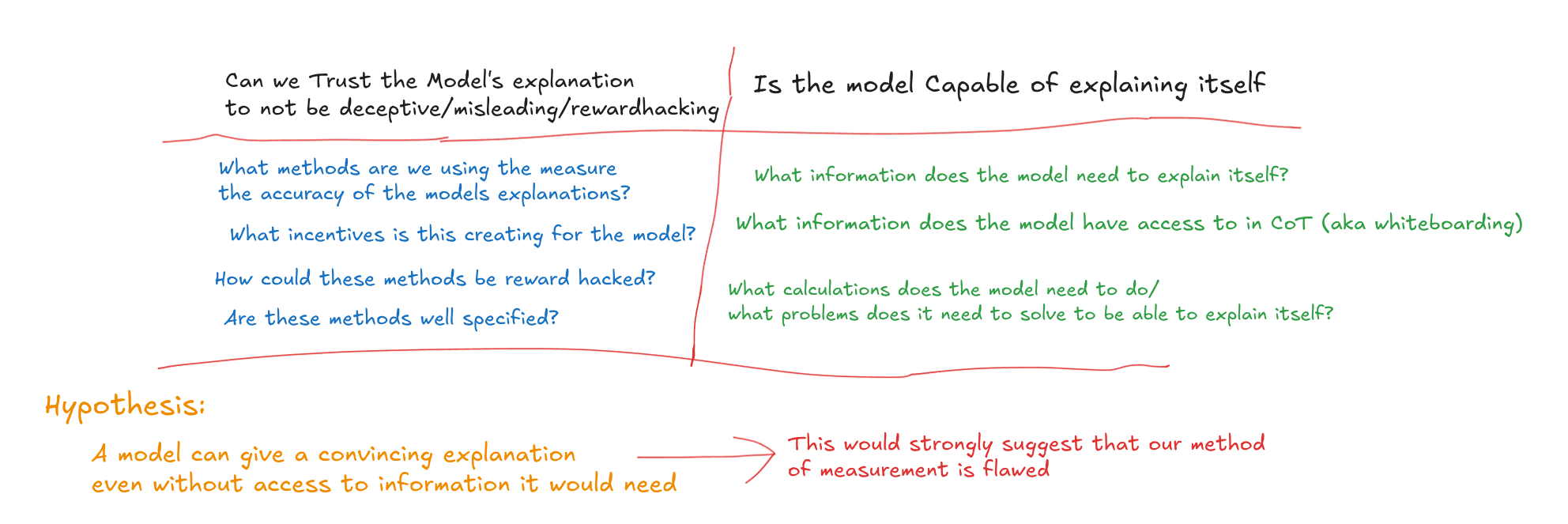

Someone asked me about LLM self explaining and evaluating that earlier today, I made this while explaining what I'd look for in that kinda research.

Sharing because they found it useful and others might too.

what do you think of this for the alignment problem?

If we make an AI system that's capable of making another, more capable AI system, which then makes another more capable AI and that makes another one and so on, how can we trust that that will result in AI systems that only do what we want and don't do things that we don't want?

my dad keeps traumatizing my little sister and making her cry, while 'teaching' her maths. hitting the table and demanding to know why she wrote the wrong answer. he doesnt fucking understand or respect that this is not how you teach people. he doesnt respect her or anyone else

I'm annoyed by the phrase 'do or do not, there is no try', because I think it's wrong and there very much is a thing called trying and it's important.

However, it's a phrase that's so cool and has so much aura, it's hard to disagree with it without sounding at least a little bit like an excuse making loser who doesn't do things and tries to justify it.

Perhaps in part, because I feel/fear that I may be that?

The mind uploading stuff seems to be a way to justify being ok with dying, imo, and digging ones head into the sand, pretending that if something talks a bit like you, it is you.

If a friend can very accurately do an impression of me and continues to do so for a week, while wearing makeup to look like me, I have not 'uploaded' myself into them. And I still wouldn't want to die, just because there's someone who is doing an extremely good impression of myself.

prob not gonna be relatable for most folk, but i'm so fucking burnt out on how stupid it is to get funding in ai safety. the average 'ai safety funder' does more to accelerate funding for capabilities than safety, in huge part because what they look for is Credentials and In-Group Status, rather than actual merit.

And the worst fucking thing is how much they lie to themselves and pretend that the 3 things they funded that weren't completely in group, mean that they actually aren't biased in that way.

At least some VCs are more honest that they want to be leeches and make money off of you.

ok, options.

- Review of 108 ai alignment plans

- write-up of Beyond Distribution - planned benchmark for alignment evals beyond a models distribution, send to the quant who just joined the team who wants to make it

- get familiar with the TPUs I just got access to

- run hhh and it's variants, testing the idea behind Beyond Distribution, maybe make a guide on itr

- continue improving site design

- fill out the form i said i was going to fill out and send today

- make progress on cross coders - would prob need to get familiar with those tpus

- writeup o...

btw, thoughts on this for 'the alignment problem'?

"A robust, generalizable, scalable, method to make an AI model which will do set [A] of things as much as it can and not do set [B] of things as much as it can, where you can freely change [A] and [B]"

What we need to solve alignment:

A way to actually see if we're doing useful work

More time

More funding to useful ai safety research

Clearly, public signalling of what is and isn't useful alignment work

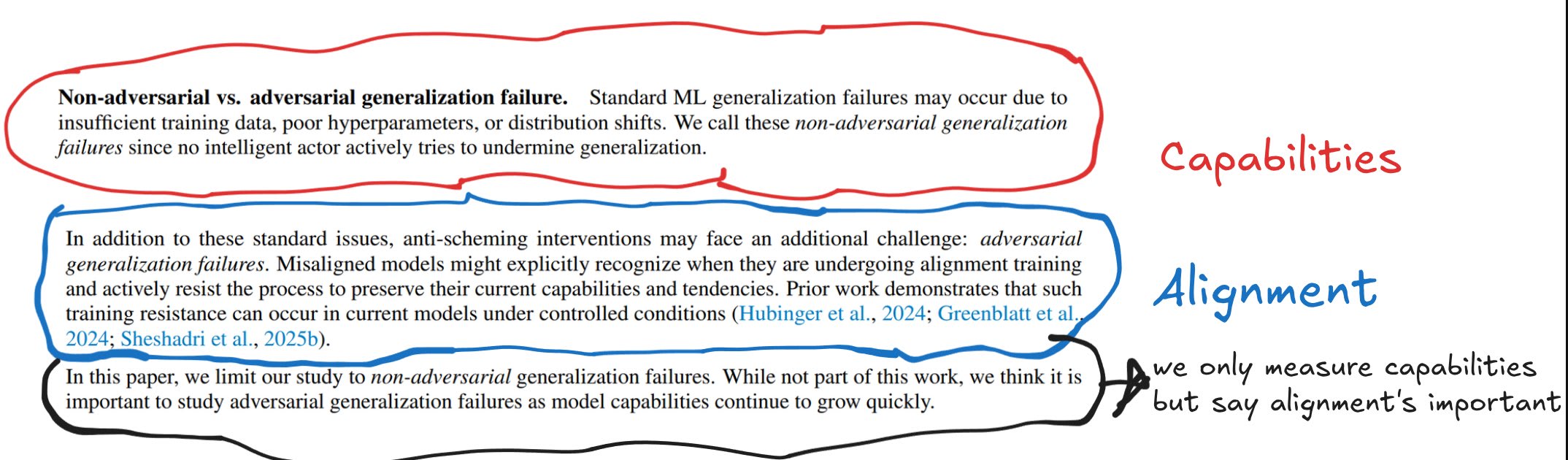

Too, too much of the current alignment work is not only not useful, but actively bad and making things worse. The most egregious example of this to me, is capability evals. Capability evals, like any eval, can be useful for seeing which algorithms are more successful in finding optimizers at finding tasks - and in a world where it seems ...

this is one of the most specifc and funny things I've read in a while: https://tomasbjartur.substack.com/p/the-company-man

a youtuber with 25k subscribers, with a channel on technical deep learning, is making a promo vid for the moonshot program.

Talking about what alignment is, what agent foundations is, etc. His phd is in neuroscience.

do you want to comment on the script?

https://docs.google.com/document/d/1YyDIj2ohxwzaGVdyNxmmShCeAP-SVlvJSaDdyFdh6-s/edit?tab=t.0

It's 2025, AIs can solve proofs and my dad is yelling at my 10 year old sister for not memorizing her times tables up to 20

I'm going to be more blunt and honest when I think AI safety and gov folk are being dishonest and doing trash work.

the average ai safety funder does more to accelerate capabilities than they do safety, in part due to credentialism and looking for in group status.

# General how to Evaluate Evaluations

Is it measuring one very specific thing?

Probably not. Is the thing something that actually has multiple things that could be causing it, or just one? An evaluation is fundamentally, a search process. Searching for a more specific thing and trying to make your process not ping for anything other than one specific thing, makes it more useful, when searching in the v noisy things that are AI models. Most evals dont even claim to measure one precise thing at all. The ones that do - can you think of a way to split that

this may make little to negative sense, if you don't have a lot of context:

thinking about when I've been trying to bring together Love and Truth - Vyas talked about this already in the Upanishads. "Having renounced (the unreal), enjoy (the real). Do not covet the wealth of any man". Having renounced lies, enjoy the truth. And my recent thing has been trying to do more of exactly that - enjoying. And 'do not covet the wealth of any man' includes ourselves. So not being attached to the outcomes of my work, enjoying it as it's own thing - if it succeeds, if i...

I'm making an AI Alignment Evals course at AI Plans, that is highly incomplete - nevertheless, would appreciate feedback: https://docs.google.com/document/d/1_95M3DeBrGcBo8yoWF1XHxpUWSlH3hJ1fQs5p62zdHE/edit?tab=t.20uwc1photx3

It will be sold as a paid course, but will have a pretty easy application process for getting free access, for those who can't afford it

'ai control' seems like it just increases p doom, right?

since it obv wont scale to agi/asi and will just reduce the warning shots and financial incentives to invest in safety research, make it more economically viable to have misaligned ais, etc

and there's buzztalk about using misaligned ais to do alignment research, but the incentives to actually do so dont seem to be there and the research itself doesnt seem to be happening - as in, research to actually get closer to a mathematical proof of a method to align a superintelligence such that it wont kill everyone

Hi, making a guide/course for evals, very much in the early draft stage atm

Please consider giving feedback

https://docs.google.com/document/d/1_95M3DeBrGcBo8yoWF1XHxpUWSlH3hJ1fQs5p62zdHE/edit?usp=sharing

Hi, hosting an Alignment Evals hackathon for red teaming evals and making more robust ones, on November 1st: https://luma.com/h3hk7pvc

Team from previous one presented at ICML

Team in January made one of the first Interp based Evals for LLMs

All works from this will go towards the AI Plans Alignment Plan - if you want to do extremely impactful alignment research I think this is one of the best events in the world.

i want to write more, and know its beneficial for me to write more. every time that i recall doing so right now, in the last two years, it's increased the amount of people who know about my work, come to work with me, pay for the work in some way, etc.

however, i feel really really anxious, scared, etc of doing it. feels a bit stupid.

part of it as well is that i really really care about truth and about what i write being very very true and robust to misinterpretation. this is in large part something that i purposefully trained into myself, to av...

These are imperfect, I'd like feedback on them please:

https://moonshot-alignment-program.notion.site/Proposed-Research-Guides-255a2fee3c6780f68a59d07440e06d53?pvs=74

just joined the call with one of the moonshot teams and i was actually basically an interruption, lol. felt so good to be completely unneeded there

the Kick off Call for the Moonshot Alignment Program will be starting in 8 minutes! https://discord.gg/QdF4Yd6Q?event=1405189459917537421

If someone wants me to change my actions because of a future forecast they've made and don't share evidence they used to make their judgements, I don't take them very seriously.

An actual better analogy would be a company in a country whose gdp is growing faster than that of the country

one of the teams from the evals hackathon was accepted at an ICML workshop!

hosting this next: https://courageous-lift-30c.notion.site/Moonshot-Alignment-Program-20fa2fee3c6780a2b99cc5d8ca07c5b0

Will be focused on the Core Problem of Alignment

for this, I'm gonna be making a bunch of guides and tests for each track

if anyone would be interested in learning and/or working on a bunch of agent foundations, moral neuroscience (neuroscience studying how morals are encoded in the brain, how we make moral choices, etc) and preference optimization, please let me know! DM or email at kabir@ai-plans.com

On the Moonshot Alignment Program:

several teams from the prev hackathon are continuing to work on alignment evals and doing good work (one presenting to a gov security body, another making a new eval on alignment faking)

if i can get several new teams to exist who are working on trying to get values actually into models, with rigour, that seems very valuable to me

also, got a sponsorship deal with youtuber who makes technical deep learning videos, with 25K subscribers, he's said he'll be making a full video about the program.

also, people are gonna be c...

Hi, have your worked in moral neuroscience or know someone who has?

If so, I'd really really like to talk to you!

https://calendly.com/kabir03999/talk-with-kabir

I'm organizing a research program for the hard part of alignment in August.

I've already talked to lots of Agent Foundations researchers, learnt a lot about how that research is done, what the bottlenecks are, where new talent can be most useful.

I'd really really like to do this for the neuroscience track as well please.

This is a great set of replies to an AI post, on a quality level I didn't think I'd see on bluesky https://bsky.app/profile/steveklabnik.com/post/3lqaqe6uc3c2u

We've run two 150+ Alignment Evaluations Hackathons, that were 1 week long. Multiple teams continuing their work and submitting to NeurIPS. Had multiple quants, Wall Street ML researcher, an AMD engineer, PhDs, etc taking part.

Hosting a Research Fellowship soon, on the Hard Part of AI Alignment. Actually directly trying to get values into the model in a way that will robustly scale to an AGI that does things that we want and not things we don't want.

I've read 120+ Alignment Plans - the vast majority don't even try to solve the hard part of alig...

in general, when it comes to things which are the 'hard part of alignment', is the crux

```

a flawless method of ensuring the AI system is pointed at and will always continue to be pointed at good things

```

?

the key part being flawless - and that seeming to need a mathematical proof?

Thoughts on this?

### Limitations of HHH and other Static Dataset benchmarks

A Static Dataset is a dataset which will not grow or change - it will remain the same. Static dataset type benchmarks are inherently limited in what information they will tell us about a model. This is especially the case when we care about AI Alignment and want to measure how 'aligned' the AI is.

### Purpose of AI Alignment Benchmarks

When measuring AI Alignment, our aim is to find out exactly how close the model is to being the ultimate 'aligned' model that we're seeking - a model w...

I'm looking for feedback on the hackathon page

mind telling me what you think?

https://docs.google.com/document/d/1Wf9vju3TIEaqQwXzmPY--R0z41SMcRjAFyn9iq9r-ag/edit?usp=sharing

I'd like some feedback on my theory of impact for my currently chosen research path

**End goal**: Reduce x-risk from AI and risk of human disempowerment.

for x-risk:

- solving AI alignment - very important,

- knowing exactly how well we're doing in alignment, exactly how close we are to solving it, how much is left, etc seems important.

- how well different methods work,

- which companies are making progress in this, which aren't, which are acting like they're making progress vs actually making progress, etc

- put all on ...

I think this is a really good opportunity to work on a topic you might not normally work on, with people you might not normally work with, and have a big impact:

https://lu.ma/sjd7r89v

I'm running the event because I think this is something really valuable and underdone.

2 hours ago I had a grounded, real, moment when I realized agi is actually going to be real and decide the fate of everyone I care about and I personally, am going to need to significantly play a big role in making sure that it doesn't kill them and felt fucking terrified.

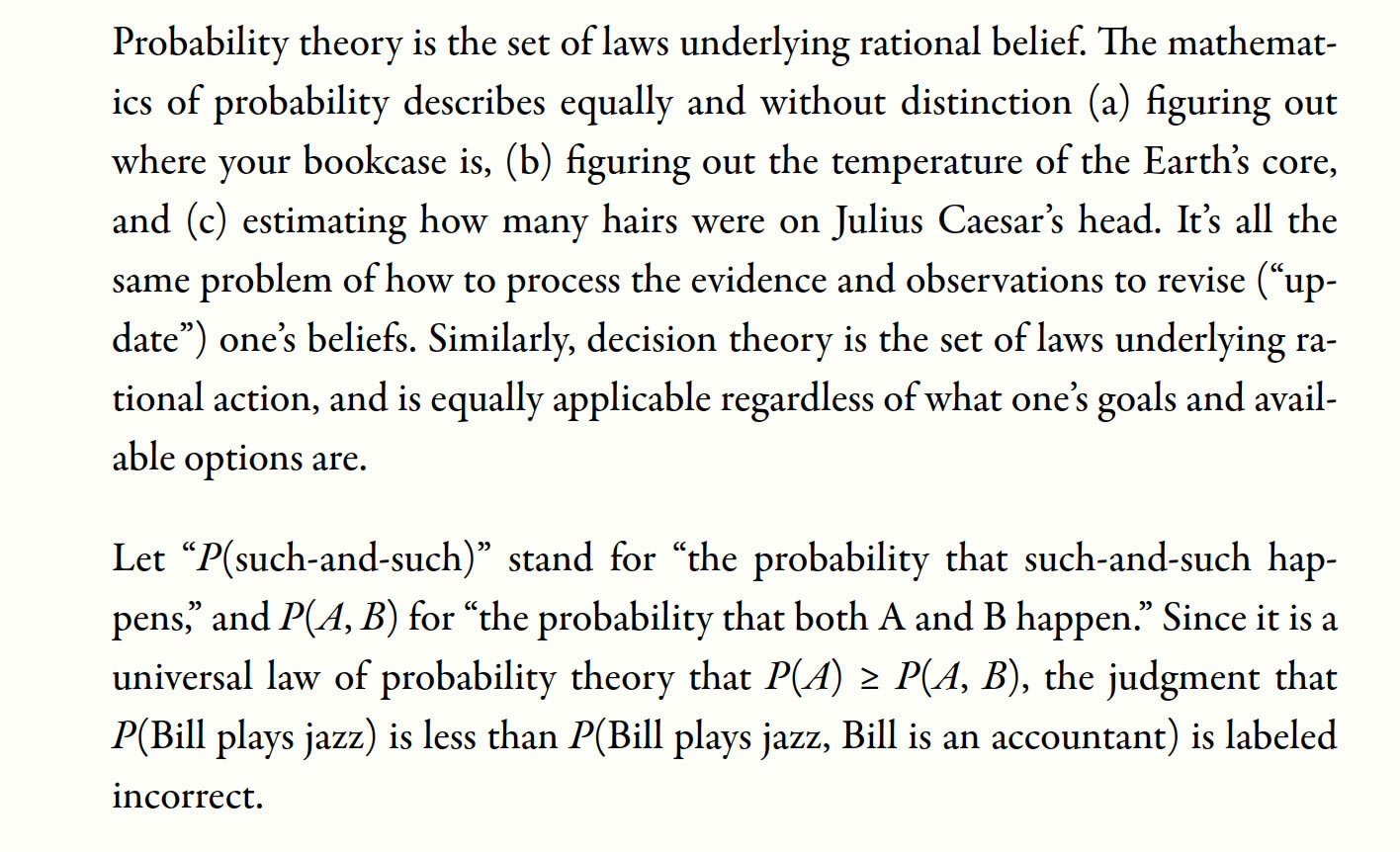

I'm finally reading The Sequences and it screams midwittery to me, I'm sorry.

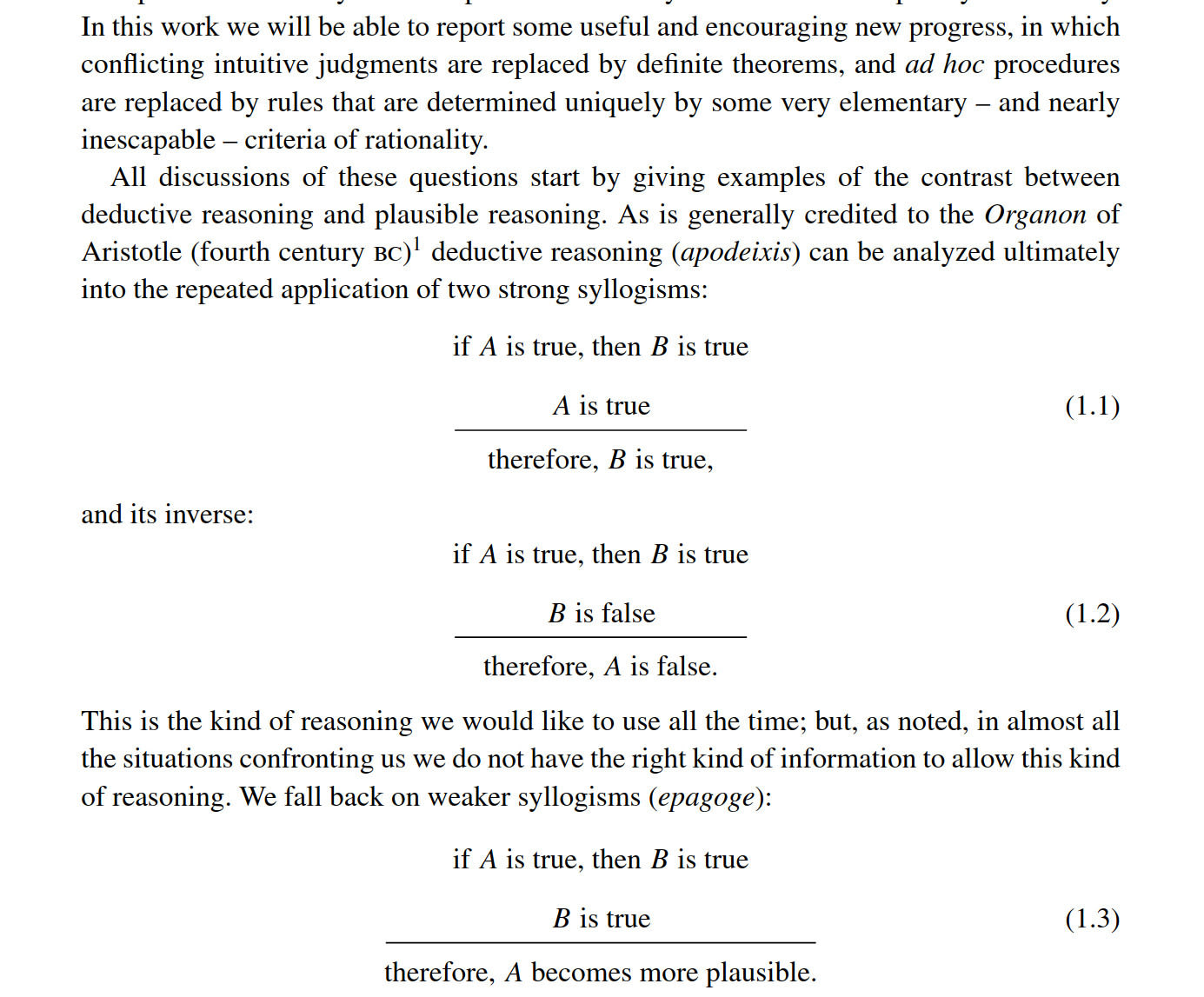

Compare this:

to Jaynes:

Jaynes is better organized, more respectful to the reader, more respectful to the work he's building on and more useful

rearranging and cleaning up my room with basic feng shui really does make it easier to focus and work, i keep forgetting this.

i recommend giving it a go if you havent already

Sometimes I am very glad I did not enter academia, because it means I haven't truly entered and assimilated to a bubble of jargon.

Please don't train an AI on anything I write without my explicit permission, it would make me very sad.

It is utterly dogshit how fucking bad all the explanations of what Agent Foundations is, are. Why do none of them try to be plain, precise, unambiguous and professional?

Why do they all insist on fucking analogies rather than just saying the actual thing they mean? It comes off as the research not being serious or real or the person saying it either no knowing what they're talking about, not being good at communicating or not putting in the fucking effort to try to explain things without wasting the readers time.

I feel like this is fundamentally...