At the beginning of last year, I noticed that neuroscience is very confused about what suffering is. This bothered me quite a lot, because I couldn't see how Utilitarianism or even Consequentialism could ever be coherent without a coherent understanding of suffering.

The following is a simple model that has helped me think through some issues and clarified my intuitions a bit. I am posting it both to share what I feel is a useful tool and to invite criticism.

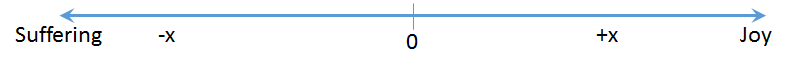

"Utilitarianism" (a term I will use here to cover all applicable formulations of Utilitarianism) assumes a space of subjective states that looks like a number line.

Suffering is bad. Joy is good. They are negatives of each other, and straightforwardly additive.

This has always struck me as nonsense, not at all reflective of my inner experience, and thus a terrible basis for extrapolating ethics over populations.

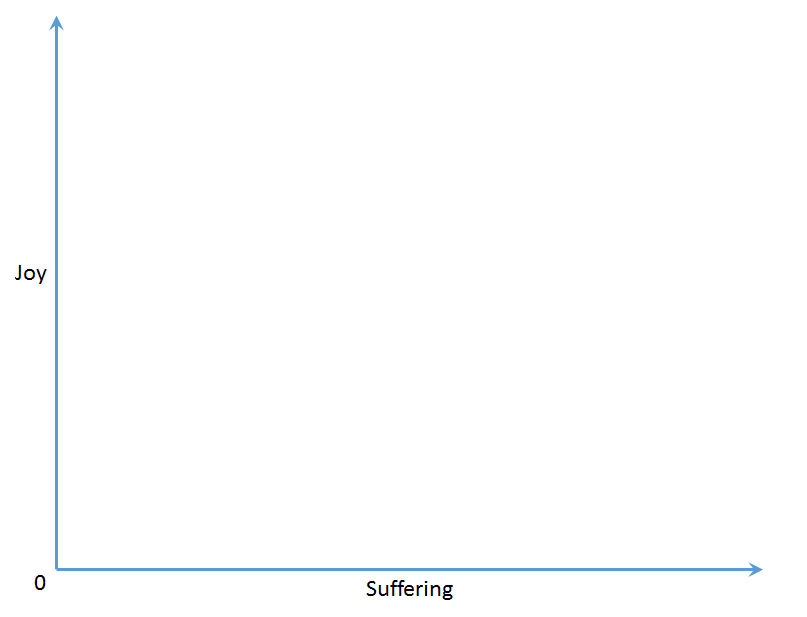

It feels more natural to treat Joy and Suffering as two axes. There may or may not be possible mappings between them depending on context. It does not seem natural to assume they are fully orthogonal, but there is also no reason to assume any particular mapping. There is particularly no reason to assume the mapping is anything like "+1 suffering is identical to -1 joy". There is extra-super-no-reason to assume that there's some natural definition of a vector magnitude of joy-suffering that is equivalent to "utility".

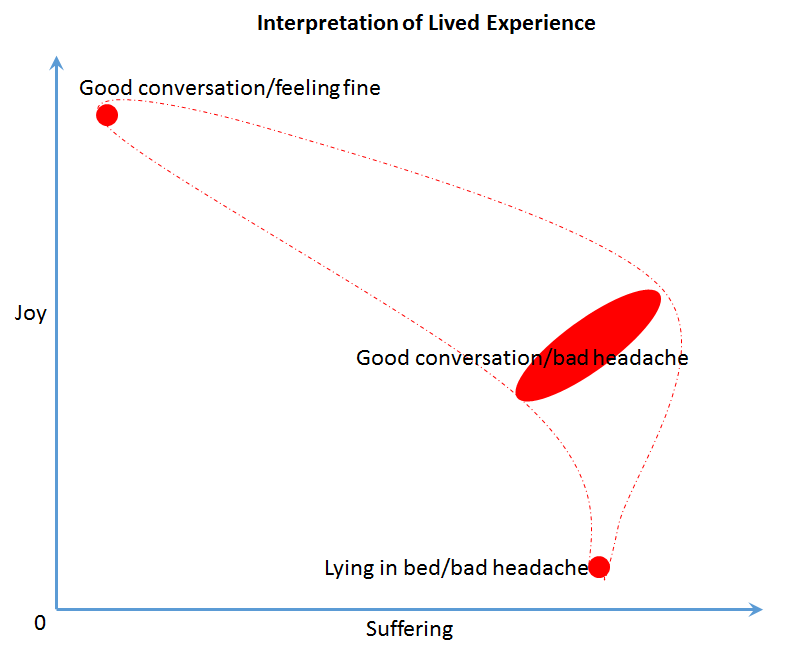

If you're having a nice conversation with an old friend you haven't seen in a while and you're feeling good, it's likely that your subjective sense of suffering is quite low and your subjective sense of joy is quite high.

If you have a bad headache and you're lying in bed trying to rest, your subjective sense of suffering is pretty high, and your level of joy is quite low.

What interests me is the "mixed" scenario, where your friend is only in town briefly, and you happen to have a headache. You're happy to be able to visit with them, so there's a definite quality of joy to your subjectivity. You wish you didn't have a headache, but the headache doesn't somehow negate the joy in being able to talk to them. It's possible that forcing yourself to talk to them, rather than lie in bed, is making the headache worse, thus increasing the "suffering" component of your experience, but this is in a very important sense worth it because of the joy premium.

It's also entirely possible that the conversation is so good that you become distracted from the headache and your subjective experience reflects less suffering than if you were lying in bed resting! Suffering and joy interact complexly and in context-dependent ways! Thus there emerges something like an envelope of possible states, as depicted by the dashed red region.

Could you collapse those axes onto a number line, somehow? Yeah, I guess. Why would you? Why would you throw out all the resolution you gain by viewing joy and suffering as separate but interrelated quantities? Why would you throw all this out in favor of some lossy definition of "utility"?
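To make the loss of resolution concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the `State` type, the made-up numbers, and especially the `collapse` function, which hard-codes the "+1 suffering is identical to -1 joy" mapping that I argued above there is no reason to assume.

```python
from typing import NamedTuple


class State(NamedTuple):
    """A point in the two-axis space (hypothetical units, illustrative only)."""
    joy: float
    suffering: float


def collapse(state: State) -> float:
    """Project a state onto a number line, ASSUMING +1 suffering == -1 joy."""
    return state.joy - state.suffering


# Made-up values loosely following the scenarios above.
friend_visit = State(joy=8.0, suffering=1.0)
headache_in_bed = State(joy=1.0, suffering=7.0)
friend_visit_with_headache = State(joy=8.0, suffering=7.0)
# Hypothetical extra scenario: a dull but painless afternoon.
dull_afternoon = State(joy=1.0, suffering=0.0)

for name, state in [
    ("friend visit", friend_visit),
    ("headache in bed", headache_in_bed),
    ("friend visit with headache", friend_visit_with_headache),
    ("dull afternoon", dull_afternoon),
]:
    print(f"{name}: {state} -> utility {collapse(state)}")
```

Under this particular collapse, the mixed scenario and the dull afternoon land on the same number even though they are very different experiences. A different collapse function would merge different pairs of states, but any single number has to merge some of them.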

If your answer is, "So I can perform interpersonal utilitarian calculations," that's a terrible answer.

Let's briefly look at a few popular Utilitarian problems. I don't propose that my simple model really solves anything. It's more that I find it to be an interesting framework that sometimes clarifies intuitions.

My intuitions have never been satisfied that I should choose Torture over Dust Specks. In the two-axis model, Dust Specks is essentially a slight, transient increase along the Suffering axis, multiplied by an astronomical number. The Dust Speck "intervention" can happen to any person at any point in the Joy/Suffering field. They can be skydiving, they can be doing paperwork, they can be undergoing a different kind of torture already. The Dust Speck always induces a slight, transient increase in suffering, relative to where they started out.

Torture is the long-term imposition of a "ceiling" on joy, and a "floor" on suffering, for an individual. You are confining a person's subjectivity to that narrow, horrible lower-right-hand envelope of subjectivity-space. I would argue that this feels morally distinct, in some important sense, from just inducing a large number of transient suffering-impulses.
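One rough way to express the difference between the two interventions is as two different kinds of function on a Joy/Suffering state. This is only a sketch under my own assumptions: the `State` type, the bump size, and the ceiling and floor values are all invented for illustration, and the sketch ignores duration entirely.

```python
from typing import NamedTuple


class State(NamedTuple):
    """A point in Joy/Suffering space (hypothetical units)."""
    joy: float
    suffering: float


def dust_speck(state: State, bump: float = 0.125) -> State:
    """A slight increase in suffering, relative to wherever the person started."""
    return State(state.joy, state.suffering + bump)


def torture(state: State, joy_ceiling: float = 0.5,
            suffering_floor: float = 9.0) -> State:
    """Confine the state: joy is capped from above, suffering is held from below."""
    return State(min(state.joy, joy_ceiling),
                 max(state.suffering, suffering_floor))


# Made-up starting points.
skydiving = State(joy=9.0, suffering=1.0)
paperwork = State(joy=2.0, suffering=2.0)

for start in (skydiving, paperwork):
    print(start, "-> speck:", dust_speck(start), "-> torture:", torture(start))
```

In this toy version the dust speck is a small shift that preserves where each person was, while torture maps both starting points into the same corner of the space. That structural difference is what a single summed number does not record.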

"But Matt, couldn't you just reduce all this to vector-sums of Suffering/Joy and collapse the problem to the typical Utilitarian formulation?" Yeah, you could, I guess. But I feel like you lose something important when you do that blindly. You throw out the intuition that 50 years of torture is different from 3^3^3 dust specks. And what's the point of an ethics that throws out important intuitions?

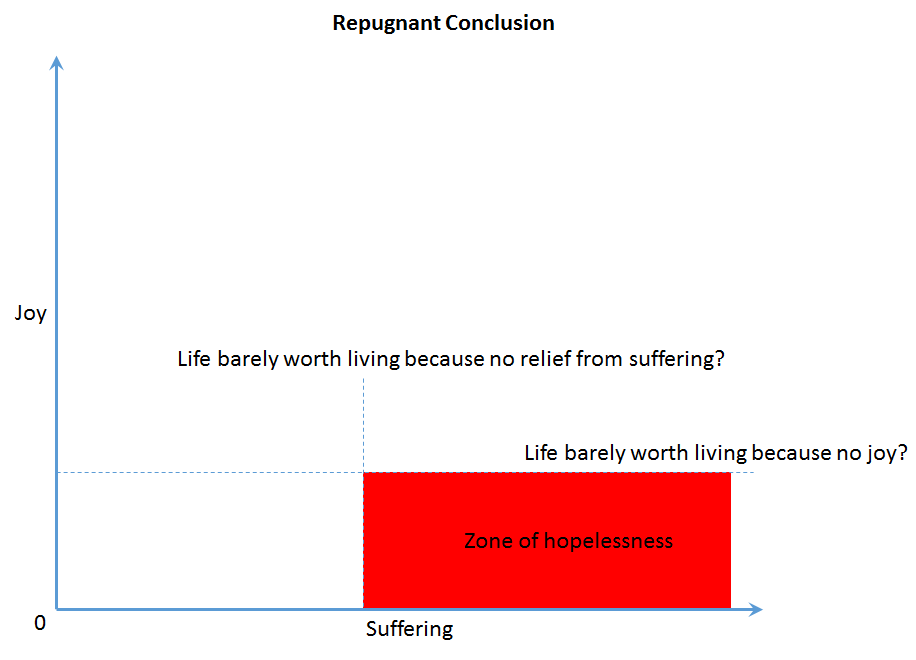

I have even less insight to provide on the Repugnant Conclusion, other than to say, again, that there seems to be something deeply, intuitively monstrous about confining humans permanently to undesirable zones of Joy/Suffering space, and that it might be worth thinking about why, exactly.

For example, it appears that life may be not-worth-living because of a permanently high state of suffering, or because of a permanently low state of joy, or both. This insight, while minor, is not something that falls out of a utility-number-line analysis.
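A toy illustration of that point, with loud caveats: the thresholds `joy_min` and `suffering_max` below are hypothetical numbers I made up, and nothing here claims to say where such lines actually fall. The only purpose is to show that two states with the same joy-minus-suffering value can fail for different reasons.

```python
def not_worth_living_reasons(joy: float, suffering: float,
                             joy_min: float = 2.0,
                             suffering_max: float = 8.0) -> list[str]:
    """List which axis (if any) puts a permanent state in an undesirable zone.

    The thresholds are invented for illustration, not derived from anything.
    """
    reasons = []
    if suffering >= suffering_max:
        reasons.append("high suffering")
    if joy <= joy_min:
        reasons.append("low joy")
    return reasons


# Both of the first two states have joy - suffering == 0.
print(not_worth_living_reasons(joy=9.0, suffering=9.0))  # ['high suffering']
print(not_worth_living_reasons(joy=1.0, suffering=1.0))  # ['low joy']
print(not_worth_living_reasons(joy=1.0, suffering=9.0))  # ['high suffering', 'low joy']
```

A number-line analysis sees the first two cases as identical; the two-axis view at least lets you ask which axis is responsible.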

The more progress I make in meditation, the more correct this perspective feels, and the less correct number-line utilitarianism feels. "Suffering" and "Joy" appear more and more as distinct mental phenomena. Reducing suffering feels less and less like the same thing as increasing joy, and vice versa.

I welcome your feedback.

No, my procedure is a decision procedure that answers the question "what should our group do". It's a very sensible question. What it means for it to "work" is debatable, but I meant that the procedure would generate decisions that generally seem fair to everyone. I'll be condescending again - it's very bad that you can't figure out what sort of questions we're trying to answer here.

Let me recap what our discussion on this topic looks like from my point of view. I said that "we can construct a utility function after we have verified the axioms". You asked how. I understand why my first claim might have been misleading, as if the function poofed into existence with U("eat pancakes")=3.91 already set by itself. I immediately explained that I didn't mean zero comparisons, I just meant fewer comparisons than you would need without the axioms (I wonder if that was misunderstood as well). You asked how. I then gave a trivial example of a comparison that I don't need to make if I use the axioms. Then you said that this is irrelevant.

Well, it's not irrelevant, it's a direct answer to your question and a trivial proof of my earlier claim. "Irrelevant" is not a reply I could have predicted, it took me completely by surprise. It is important to me to figure out what happened here. Presumably one (or both) of us struggles with the English language, or with basic logic, or just isn't paying any attention. If we failed to communicate this badly on this topic, are we failing equally badly on all other topics? If we are, is there any point in continuing the discussion, or can it be fixed somehow?