May I suggest adding an explanation for HLMI? I assume it means human-level machine intelligence, but it might be useful to explicitly expand it.

Added the definition, thanks. It stands for "high-level machine intelligence". AI Impacts goes on to describe it as "when unaided machines can accomplish every task better and more cheaply than human workers. Ignore aspects of tasks for which being a human is intrinsically advantageous, e.g. being accepted as a jury member. Think feasibility, not adoption."

Introduction

To help grow the pipeline of AI safety researchers, I conducted a project to determine how demographic information (e.g. level of experience, exposure to AI arguments) affects AI researchers’ responses to AI safety. I also examined other AI safety surveys to uncover current issues preventing people from becoming AI safety researchers. Specifically, I analyzed the publicly available data from the AI Impacts survey and asked AI Safety Support and AGI Safety Fundamentals for their survey data (huge thank you to all three organizations). Below are my results, which I hope will be informative to future field-building efforts.

This work was done as part of the AI Safety Field-Building Hub; thanks to Vael Gates for comments and support. Comments and feedback are very welcome, and all mistakes are my own.

TLDR

Data

AI Impacts

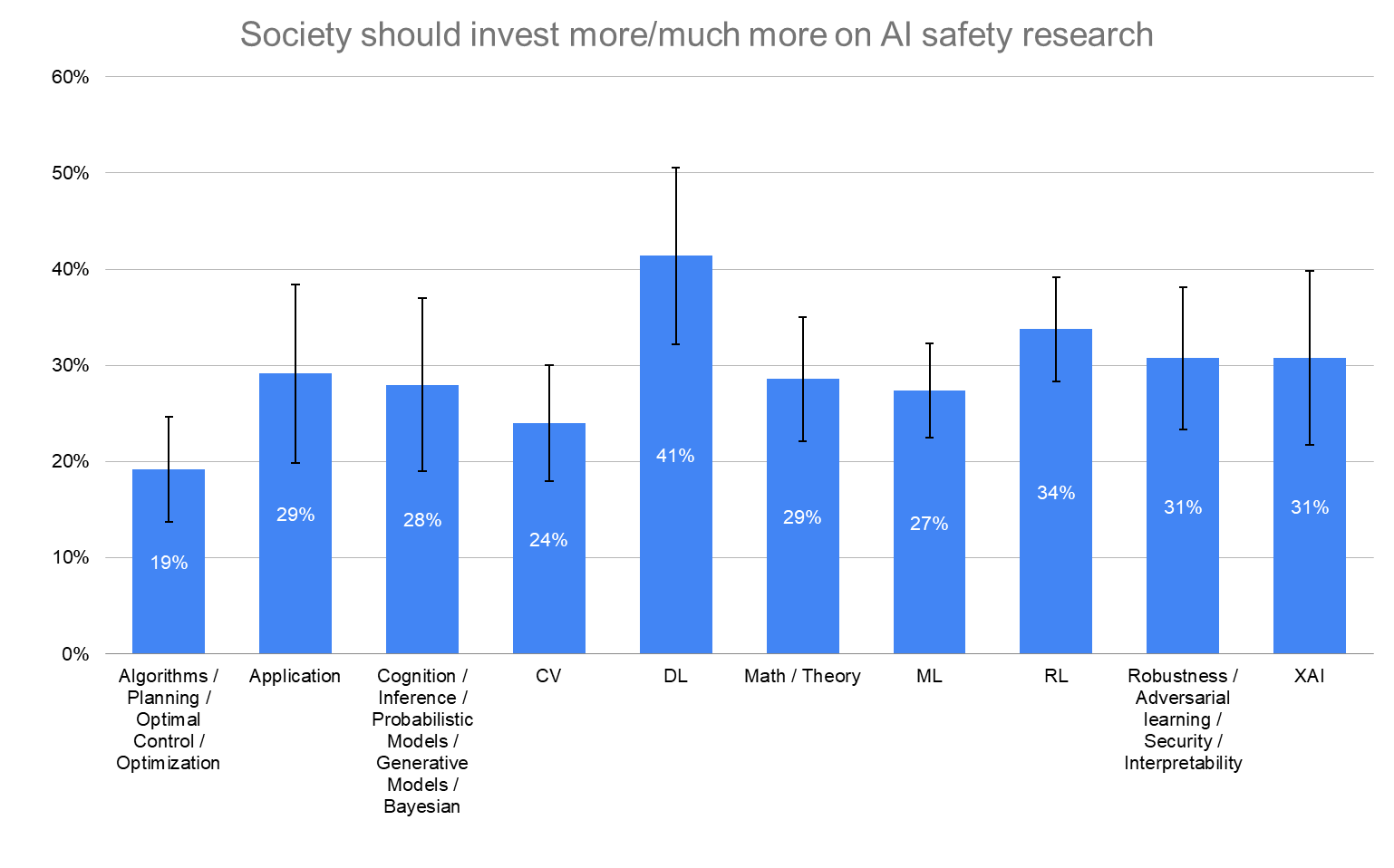

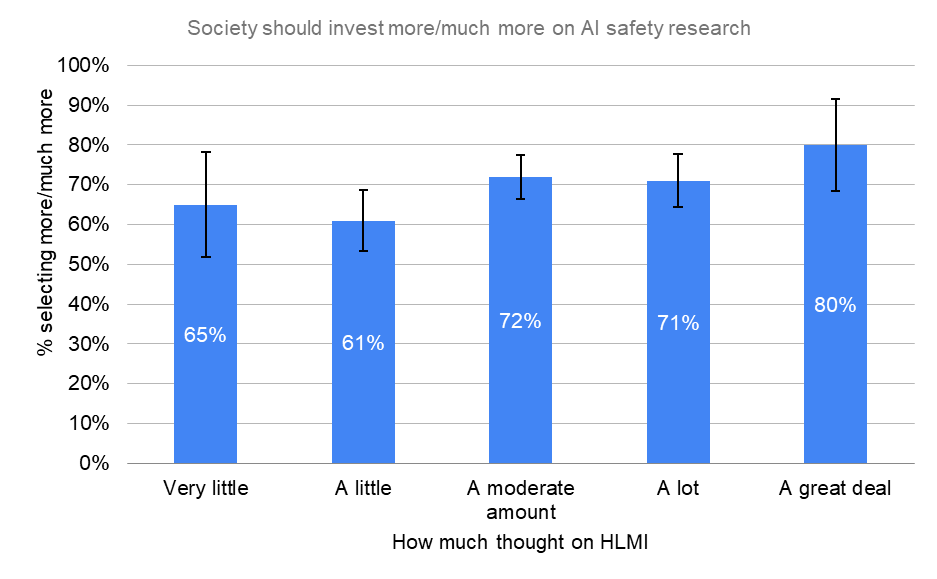

The survey ran from June to August 2022, with participants representing a wide range of machine learning researchers. Note: error bars show the standard error for proportions.
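For readers unfamiliar with the error bars, here is a minimal sketch of how a standard error for a proportion is usually computed. The post does not say which formula was used, so this assumes the common binomial form, sqrt(p(1-p)/n). The function name and the counts in the example are hypothetical and are not taken from the survey data.

```python
import math


def proportion_standard_error(successes: int, n: int) -> float:
    """Standard error of a sample proportion, assuming the binomial formula.

    Assumption: SE = sqrt(p * (1 - p) / n), where p = successes / n.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= successes <= n:
        raise ValueError("successes must be between 0 and n")
    p = successes / n
    return math.sqrt(p * (1 - p) / n)


# Hypothetical example: 30 of 120 respondents choose a given answer.
p_hat = 30 / 120
se = proportion_standard_error(30, 120)
print(f"proportion = {p_hat:.3f}, standard error = {se:.4f}")
```

Under this formula, smaller subgroups get wider error bars, which is worth keeping in mind when comparing groups with very different n.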

Summary

By Industry

By Specific AI Field

Legend

Note: This section is quite rough; Vael (not an expert in the field) manually categorized these very quickly, and Ash selected the first sub-speciality of the labels to make this graph.

By How Much Thought to HLMI

By Undergraduate Continent

AI Safety Support

AI Safety Support conducted a broad survey of those interested in AI safety. One limitation of this data is that it's from early 2021. Since then, new programs have come into existence, so the responses might differ slightly if the survey were run today.

Summary

What information are you missing?

Industry n=38, Academia n=27

What would help you?

Industry n=89, Academia n=31

Why not work on AI safety?

AGISF

The AGISF survey was announced on LessWrong and taken by a broad range of people.

Summary

n=61

Additional

Vael Gates's postdoc research

Marius Hobbhahn's post

Limitations

Conclusion

I hope sharing my findings with the larger community will help build and grow the pipeline of AI safety and alignment research. This corpus of data is meant as a starting point. It will evolve as new programs and organizations sprout up to help solve some of these existing issues. AGI capability progress and funding will also strongly shape how views change going forward.

If you have any questions, please leave a comment below.